That's right, and today we will say the same to the god of encryption.

This article is about an unencrypted IPv4 tunnel, though not a "warm tube" one but a modern "LED" one. Raw sockets also make an appearance, along with packet handling in user space.

There are N tunneling protocols for every taste and color:

- stylish, trendy, youthful WireGuard

- multifunctional, like Swiss Army knives, OpenVPN and SSH

- old but not evil GRE

- the simplest, fastest, completely unencrypted IPIP

- actively developing GENEVE

- many others.

But I'm a programmer, so I will increase N only by a fraction and leave the development of real protocols to the real developers.

In yet another unborn project that I am currently working on, I need to reach hosts behind NAT from the outside. While using protocols with grown-up cryptography for this, I could not shake the feeling that it was like shooting sparrows with a cannon. The tunnel is mostly needed only to punch a hole through NAT, and the internal traffic is usually encrypted anyway; after all, everyone advocates HTTPS.

While researching various tunneling protocols, my inner perfectionist's attention was drawn to IPIP time after time because of its minimal overhead. But it has one and a half significant drawbacks for my tasks:

- it requires public IPs on both sides,

- and no authentication for you.

Therefore, the perfectionist was driven back into the dark corner of the skull, or wherever he sits there.

And once, while reading articles about tunnels natively supported in Linux, I came across FOU (Foo-over-UDP), i.e. anything at all wrapped in UDP. So far, the "anything" is limited to IPIP and GUE (Generic UDP Encapsulation).

“Here is the silver bullet! Plain IPIP is more than enough for me,” I thought.

In fact, the bullet turned out to be not entirely silver. Encapsulation in UDP solves the first problem: you can reach clients behind NAT from the outside over a pre-established connection. But here the half of the second IPIP drawback blossoms in a new light: anyone from the private network can hide behind the visible public IP and client port (pure IPIP does not have this problem).

To solve this one-and-a-half problem, the ipipou utility was born. It implements a home-made mechanism for authenticating the remote host without disturbing the in-kernel FOU, which processes packets quickly and efficiently in kernel space.

Don't need your script!

OK, if you know the client's public port and IP (for example, everyone behind the NAT is yours and goes nowhere else, and the NAT tries to map ports 1-to-1), you can create an IPIP-over-FOU tunnel with the following commands, without any scripts.

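on the server:

    # Load the FOU kernel module
    modprobe fou

    # Create an IPIP tunnel encapsulated in FOU.
    # The ipip module is loaded automatically.
    ip link add name ipipou0 type ipip \
        remote 198.51.100.2 local 203.0.113.1 \
        encap fou encap-sport 10000 encap-dport 20001 \
        mode ipip dev eth0

    # Open the UDP port for the FOU listener of this tunnel
    ip fou add port 10000 ipproto 4 local 203.0.113.1 dev eth0

    # Assign IP addresses to the tunnel
    ip address add 172.28.0.0 peer 172.28.0.1 dev ipipou0

    # Bring the tunnel up
    ip link set ipipou0 up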

on the client:

    modprobe fou

    ip link add name ipipou1 type ipip \
        remote 203.0.113.1 local 192.168.0.2 \
        encap fou encap-sport 10001 encap-dport 10000 encap-csum \
        mode ipip dev eth0

    # The local, peer, peer_port and dev options may be unsupported by older kernels; they can be omitted.
    # peer and peer_port connect the FOU listener to the remote side right when it is created.
    ip fou add port 10001 ipproto 4 local 192.168.0.2 peer 203.0.113.1 peer_port 10000 dev eth0

    ip address add 172.28.0.1 peer 172.28.0.0 dev ipipou1

    ip link set ipipou1 up

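Where

- ipipou* - the name of the local tunnel network interface

- 203.0.113.1 - public IP of the server

- 198.51.100.2 - public IP of the client

- 192.168.0.2 - client IP assigned to the eth0 interface

- 10001 - local client port for FOU

- 20001 - public client port for FOU

- 10000 - public server port for FOU

- encap-csum - option that adds a UDP checksum to the encapsulating UDP packets; it can be replaced with noencap-csum to skip the computation

- eth0 - local interface the ipip tunnel is bound to

- 172.28.0.1 - IP of the client's tunnel interface

- 172.28.0.0 - IP of the server's tunnel interface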

As long as the UDP connection is alive, the tunnel stays operational. Once it breaks, it is a matter of luck: if the client's IP:port stays the same, the tunnel lives on; if it changes, the tunnel breaks.

The easiest way to tear everything down is to unload the kernel modules:

    modprobe -r fou ipip

Even if authentication is not required, the client's public IP and port are not always known and are often unpredictable or changeable (depending on the NAT type). If you omit encap-dport on the server side, the tunnel will not work: it is not smart enough to pick up the remote port of the connection. In this case ipipou can help too, or WireGuard and the like.
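The dependency on the client's public endpoint can be made explicit with a small sketch. It only assembles the server-side commands shown above from the two values that are unknown in advance; the function name and its defaults are illustrative assumptions, not part of ipipou.

```python
import shlex


def server_tunnel_commands(client_ip, client_port,
                           server_ip="203.0.113.1", server_port=10000,
                           ifname="ipipou0", dev="eth0"):
    """Build the server-side commands from the article for a given client endpoint.

    client_ip and client_port are the client's *public* address and port,
    i.e. exactly the values that NAT may make unpredictable.
    """
    return [
        ["modprobe", "fou"],
        ["ip", "link", "add", "name", ifname, "type", "ipip",
         "remote", client_ip, "local", server_ip,
         "encap", "fou", "encap-sport", str(server_port),
         "encap-dport", str(client_port),
         "mode", "ipip", "dev", dev],
        ["ip", "fou", "add", "port", str(server_port), "ipproto", "4",
         "local", server_ip, "dev", dev],
        ["ip", "address", "add", "172.28.0.0", "peer", "172.28.0.1", "dev", ifname],
        ["ip", "link", "set", ifname, "up"],
    ]


# Print the commands instead of running them (running them needs root).
for argv in server_tunnel_commands("198.51.100.2", 20001):
    print(shlex.join(argv))
```

Without a known `client_port` there is nothing to put into `encap-dport`, which is the gap that an authentication packet from the client fills.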

How does it work?

The client (which is usually behind NAT) sets up a tunnel (as in the example above) and sends an authentication packet to the server so that the server can configure the tunnel on its side. Depending on the settings, this can be an empty packet (just so that the server sees the public IP:port of the connection) or a packet with data by which the server can identify the client. The data can be a simple plaintext passphrase (an analogy with HTTP Basic Auth comes to mind) or specially formatted data signed with a private key (by analogy with HTTP Digest Auth, only stronger; see the function client_auth in the code).
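To make the idea of an authentication packet more tangible, here is a minimal sketch. It is not ipipou's actual format: the packet layout (timestamp, client id, tag) is invented for illustration, and an HMAC-SHA256 over a shared secret stands in for the private-key signature so that the example needs only the standard library. The `lifetime` parameter is likewise an assumption.

```python
import hashlib
import hmac
import struct
import time

TAG_LEN = 32  # size of an HMAC-SHA256 tag


def make_auth_packet(secret: bytes, client_id: bytes, now=None) -> bytes:
    """Hypothetical layout: 8-byte timestamp | client id | 32-byte HMAC tag."""
    ts = int(time.time() if now is None else now)
    body = struct.pack("!Q", ts) + client_id
    return body + hmac.new(secret, body, hashlib.sha256).digest()


def verify_auth_packet(secret: bytes, packet: bytes, lifetime=3600, now=None):
    """Return the client id if the tag matches and the packet is fresh, else None."""
    if len(packet) < 8 + TAG_LEN:
        return None
    body, tag = packet[:-TAG_LEN], packet[-TAG_LEN:]
    expected = hmac.new(secret, body, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):  # constant-time comparison
        return None
    (ts,) = struct.unpack("!Q", body[:8])
    now = time.time() if now is None else now
    if abs(now - ts) > lifetime:  # reject stale (possibly replayed) packets
        return None
    return body[8:]
```

The timestamp only limits how long a captured packet stays useful; it does not stop someone on the path from forwarding a fresh packet from another address.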

On the server (side with public IP), when ipipou starts, it creates an nfqueue queue handler and configures netfilter so that the necessary packets are sent to where they should go: the packets initializing the connection to the nfqueue queue, and [almost] all the rest straight to the FOU listener.

For those not familiar with it, nfqueue (or NetfilterQueue) is a special mechanism that lets netfilter rules hand network packets over to user space, where a program can inspect them and then accept or drop them.

Some programming languages have bindings for working with nfqueue; bash had none (heh, not surprising), so I had to use Python: ipipou uses NetfilterQueue.

If performance is not critical, with the help of this thing you can relatively quickly and easily cook your own logic for working with packets at a fairly low level, for example, sculpt experimental data transfer protocols, or troll local and remote services with non-standard behavior.
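A rough sketch of such user-space logic is shown below. The parser is plain standard-library Python; `run_queue` relies on the third-party NetfilterQueue binding and needs root, so it is only defined here, not called. The `is_auth` callback and the accept/drop policy are hypothetical and do not reproduce ipipou's real handler.

```python
import socket
import struct


def parse_ipv4_udp(packet: bytes):
    """Return (src_ip, src_port, dst_port, udp_payload), or None if not IPv4/UDP."""
    if len(packet) < 20 or packet[0] >> 4 != 4:
        return None
    ihl = (packet[0] & 0x0F) * 4  # IPv4 header length in bytes
    if ihl < 20 or packet[9] != socket.IPPROTO_UDP or len(packet) < ihl + 8:
        return None
    src_ip = socket.inet_ntoa(packet[12:16])
    src_port, dst_port, udp_len = struct.unpack("!HHH", packet[ihl:ihl + 6])
    return src_ip, src_port, dst_port, packet[ihl + 8:ihl + udp_len]


def run_queue(queue_num: int, is_auth) -> None:
    """Process packets from an nfqueue queue (needs root and a matching rule,
    e.g. an iptables rule with "-j NFQUEUE --queue-num 0")."""
    from netfilterqueue import NetfilterQueue  # third-party binding

    def handle(pkt):
        parsed = parse_ipv4_udp(pkt.get_payload())
        if parsed and is_auth(parsed[3]):
            pkt.drop()    # hypothetical policy: auth packets are consumed here
        else:
            pkt.accept()  # everything else goes back to the kernel

    nfq = NetfilterQueue()
    nfq.bind(queue_num, handle)
    try:
        nfq.run()
    finally:
        nfq.unbind()
```

The source address and port returned by the parser are what the server would need in order to learn the client's public endpoint.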

Raw sockets work hand in hand with nfqueue. For example, when the tunnel is already configured and FOU is listening on the desired port, you cannot send a packet from that port in the usual way, because it is busy. But you can craft an arbitrary packet and fire it straight into the network interface through a raw socket, although building such a packet takes a little more work. This is how ipipou creates its authentication packets.
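The "little more work" looks roughly like this: the IPv4 and UDP headers, including both checksums, have to be assembled by hand. This is a generic sketch rather than ipipou's code; the function names are made up, and `send_raw` needs root (CAP_NET_RAW), so it is defined but not called.

```python
import socket
import struct


def inet_checksum(data: bytes) -> int:
    """Internet checksum (RFC 1071): one's complement of the one's complement sum."""
    if len(data) % 2:
        data += b"\x00"
    total = sum(struct.unpack(f"!{len(data) // 2}H", data))
    while total >> 16:
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF


def build_udp_packet(src_ip, src_port, dst_ip, dst_port, payload: bytes) -> bytes:
    """Build a complete IPv4 + UDP packet with an arbitrary source port."""
    src, dst = socket.inet_aton(src_ip), socket.inet_aton(dst_ip)
    udp_len = 8 + len(payload)
    # The UDP checksum also covers a pseudo-header with both addresses.
    pseudo = src + dst + struct.pack("!BBH", 0, socket.IPPROTO_UDP, udp_len)
    udp = struct.pack("!HHHH", src_port, dst_port, udp_len, 0)
    csum = inet_checksum(pseudo + udp + payload) or 0xFFFF  # 0 means "no checksum"
    udp = struct.pack("!HHHH", src_port, dst_port, udp_len, csum)
    ip = struct.pack("!BBHHHBBH4s4s", 0x45, 0, 20 + udp_len, 0, 0,
                     64, socket.IPPROTO_UDP, 0, src, dst)
    ip = ip[:10] + struct.pack("!H", inet_checksum(ip)) + ip[12:]
    return ip + udp + payload


def send_raw(packet: bytes, dst_ip: str) -> None:
    """Send a prebuilt IPv4 packet; on Linux IPPROTO_RAW implies IP_HDRINCL."""
    with socket.socket(socket.AF_INET, socket.SOCK_RAW, socket.IPPROTO_RAW) as s:
        s.sendto(packet, (dst_ip, 0))
```

With the addresses from the example above, `build_udp_packet("192.168.0.2", 10001, "203.0.113.1", 10000, data)` yields a packet that appears to come from the port the FOU listener already occupies.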

Since ipipou processes only the first packets from the connection (well, those that managed to leak into the queue before the connection was established), performance hardly suffers.

As soon as the ipipou server receives an authenticated packet, the tunnel is created, and all subsequent packets of the connection are processed by the kernel, bypassing nfqueue. If the connection goes stale, the first packet of the next one is sent to the nfqueue queue. Depending on the settings, if it is not an authentication packet but comes from the last remembered client IP and port, it can be either passed on or discarded. If an authenticated packet comes from a new IP and port, the tunnel is reconfigured to use them.
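The decision rules from this paragraph can be written down as a tiny state machine. This is a sketch of the described behaviour only; the class, the setting name and the returned labels are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

Endpoint = Tuple[str, int]  # (public IP, public port) of the client


@dataclass
class TunnelState:
    pass_unauth_from_known: bool = True   # hypothetical setting
    endpoint: Optional[Endpoint] = None   # last remembered client endpoint

    def on_queued_packet(self, src: Endpoint, authenticated: bool) -> str:
        """Decide what to do with a packet that ended up in the queue."""
        if authenticated:
            if src != self.endpoint:
                self.endpoint = src
                return "reconfigure"  # (re)create the tunnel for the new endpoint
            return "keep"             # same endpoint, nothing to change
        if src == self.endpoint and self.pass_unauth_from_known:
            return "pass"             # known client, let the kernel handle it
        return "drop"
```

For example, a client whose NAT mapping changes from port 20001 to 20002 would trigger "reconfigure" twice, once per authenticated packet from each endpoint.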

Plain IPIP-over-FOU has one more problem when working with NAT: you cannot create two UDP-encapsulated IPIP tunnels with the same IP, because the FOU and IPIP modules are fairly isolated from each other. That is, two clients behind one public IP cannot connect to the same server this way at the same time. This may be solved at the kernel level in the future, but that is not certain. In the meantime, NAT problems can be solved with NAT: if a pair of IP addresses is already taken by another tunnel, ipipou NATs the public IP to an alternative private one. Voila! You can create tunnels until the ports run out.
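The bookkeeping behind this workaround might look as follows. The sketch only picks which "remote" address a new tunnel should use; the actual NAT rules are left out, and the alias pool 172.29.0.0/24 and all names are assumptions made for the example.

```python
import ipaddress


class RemoteAllocator:
    """Pick a unique tunnel "remote" address per client endpoint."""

    def __init__(self, pool="172.29.0.0/24"):  # hypothetical private alias pool
        self._free = iter(ipaddress.ip_network(pool).hosts())
        self._by_client = {}   # (public_ip, port) -> remote address of the tunnel
        self._in_use = set()

    def remote_for(self, public_ip: str, port: int) -> str:
        key = (public_ip, port)
        if key in self._by_client:
            return self._by_client[key]
        remote = public_ip
        if remote in self._in_use:
            # The public IP is already taken by another tunnel: use a private
            # alias instead and NAT the client's traffic to it.
            remote = str(next(self._free))
        self._in_use.add(remote)
        self._by_client[key] = remote
        return remote
```

The first client behind a public IP keeps that IP as the tunnel remote, while every further client behind the same IP gets the next free alias.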

Since not all packets in the connection are signed, this simple protection is vulnerable to MITM: if a villain who can listen to and control the traffic lurks on the path between the client and the server, he can redirect authenticated packets through another address and create a tunnel from an untrusted host.

If anyone has ideas on how to fix this while keeping the bulk of the traffic in the kernel, feel free to speak up.

By the way, UDP encapsulation has proven itself very well. Compared to encapsulation directly over IP, it is much more stable and often faster, despite the extra overhead of the UDP header. The reason is that most hosts on the Internet work tolerably well only with the three most popular protocols: TCP, UDP and ICMP. A sizable share of them may discard everything else altogether, or process it more slowly, because they are optimized only for these three.

This is why, for example, QUIC, on which HTTP/3 is based, was built on top of UDP rather than directly on top of IP.
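To put the extra UDP header into numbers, here is a small calculation. It assumes IPv4 without options (20-byte header), an 8-byte UDP header and a 1500-byte link MTU; the WireGuard figure is the commonly cited 60-byte IPv4 overhead and is included only for comparison.

```python
IPV4_HEADER = 20  # bytes, IPv4 header without options
UDP_HEADER = 8    # bytes

# Per-packet overhead added by each encapsulation (IPv4 outer header).
OVERHEAD = {
    "ipip": IPV4_HEADER,
    "ipip-over-fou": IPV4_HEADER + UDP_HEADER,
    "wireguard": 60,  # outer IPv4 + UDP + WireGuard header and auth tag
}


def tunnel_mtu(link_mtu: int, encapsulation: str) -> int:
    """Largest inner packet that fits into one outer packet."""
    return link_mtu - OVERHEAD[encapsulation]


def payload_share(link_mtu: int, encapsulation: str) -> float:
    """Fraction of each full-size outer packet that carries inner data."""
    return tunnel_mtu(link_mtu, encapsulation) / link_mtu


for name in OVERHEAD:
    print(f"{name}: MTU {tunnel_mtu(1500, name)}, "
          f"payload share {payload_share(1500, name):.2%}")
```

Under these assumptions FOU costs 8 bytes per packet on top of plain IPIP, roughly half a percent of a full-size packet.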

Well, enough words; it's time to see how it works in the "real world".

Battle

iperf3 is used to emulate the real world. In terms of closeness to reality, this is about the same as emulating the real world in Minecraft, but it will do for now.

The competition involves:

- the reference base channel

- the hero of this article, ipipou

- OpenVPN with authentication but no encryption

- OpenVPN All Inclusive

- WireGuard without PresharedKey, with MTU = 1440 (for IPv4-only)

Technical data for geeks

Metrics are taken by the following commands

on the client:

UDP

    CPULOG=NAME.udp.cpu.log; sar 10 6 >"$CPULOG" & iperf3 -c SERVER_IP -4 -t 60 -f m -i 10 -B LOCAL_IP -P 2 -u -b 12M; tail -1 "$CPULOG"
    # "-b 12M" sets the target bitrate per stream; "-P" sets the number of parallel streams.

TCP

    CPULOG=NAME.tcp.cpu.log; sar 10 6 >"$CPULOG" & iperf3 -c SERVER_IP -4 -t 60 -f m -i 10 -B LOCAL_IP -P 2; tail -1 "$CPULOG"

ICMP latency

    ping -c 10 SERVER_IP | tail -1

on the server:

UDP

    CPULOG=NAME.udp.cpu.log; sar 10 6 >"$CPULOG" & iperf3 -s -i 10 -f m -1; tail -1 "$CPULOG"

TCP

    CPULOG=NAME.tcp.cpu.log; sar 10 6 >"$CPULOG" & iperf3 -s -i 10 -f m -1; tail -1 "$CPULOG"

ipipou

/etc/ipipou/server.conf:

    server
    number 0
    fou-dev eth0
    fou-local-port 10000
    tunl-ip 172.28.0.0
    auth-remote-pubkey-b64 eQYNhD/Xwl6Zaq+z3QXDzNI77x8CEKqY1n5kt9bKeEI=
    auth-secret topsecret
    auth-lifetime 3600
    reply-on-auth-ok
    verb 3

    systemctl start ipipou@server

/etc/ipipou/client.conf:

    client
    number 0
    fou-local @eth0
    fou-remote SERVER_IP:10000
    tunl-ip 172.28.0.1
    # pubkey of auth-key-b64: eQYNhD/Xwl6Zaq+z3QXDzNI77x8CEKqY1n5kt9bKeEI=
    auth-key-b64 RuBZkT23na2Q4QH1xfmZCfRgSgPt5s362UPAFbecTso=
    auth-secret topsecret
    keepalive 27
    verb 3

    systemctl start ipipou@client

openvpn (no encryption, with authentication)

    openvpn --genkey --secret ovpn.key  # then copy ovpn.key to the client

    # server
    openvpn --dev tun1 --local SERVER_IP --port 2000 --ifconfig 172.16.17.1 172.16.17.2 --cipher none --auth SHA1 --ncp-disable --secret ovpn.key

    # client
    openvpn --dev tun1 --local LOCAL_IP --remote SERVER_IP --port 2000 --ifconfig 172.16.17.2 172.16.17.1 --cipher none --auth SHA1 --ncp-disable --secret ovpn.key

openvpn (with encryption and authentication, over UDP)

Set up with openvpn-manage.

wireguard

/etc/wireguard/server.conf:

    [Interface]
    Address=172.31.192.1/18
    ListenPort=51820
    PrivateKey=aMAG31yjt85zsVC5hn5jMskuFdF8C/LFSRYnhRGSKUQ=
    MTU=1440

    [Peer]
    PublicKey=LyhhEIjVQPVmr/sJNdSRqTjxibsfDZ15sDuhvAQ3hVM=
    AllowedIPs=172.31.192.2/32

    systemctl start wg-quick@server

/etc/wireguard/client.conf:

    [Interface]
    Address=172.31.192.2/18
    PrivateKey=uCluH7q2Hip5lLRSsVHc38nGKUGpZIUwGO/7k+6Ye3I=
    MTU=1440

    [Peer]
    PublicKey=DjJRmGvhl6DWuSf1fldxNRBvqa701c0Sc7OpRr4gPXk=
    AllowedIPs=172.31.192.1/32
    Endpoint=SERVER_IP:51820

    systemctl start wg-quick@client

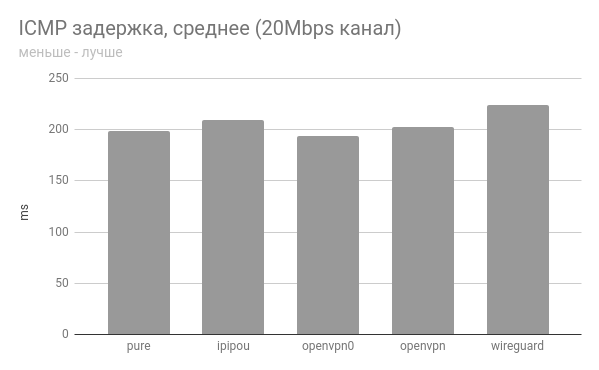

Results

Raw ugly table

CPU , .. :

    proto  bandwidth[Mbps]  CPU_idle_client[%]  CPU_idle_server[%]

    # 20 Mbps channel: client (4 core), VPS (1 core)

    # pure
    UDP    20.4    99.80    93.34
    TCP    19.2    99.67    96.68
    ICMP latency min/avg/max/mdev = 198.838/198.997/199.360/0.372 ms

    # ipipou
    UDP    19.8    98.45    99.47
    TCP    18.8    99.56    96.75
    ICMP latency min/avg/max/mdev = 199.562/208.919/220.222/7.905 ms

    # openvpn0 (auth only, no encryption)
    UDP    19.3    99.89    72.90
    TCP    16.1    95.95    88.46
    ICMP latency min/avg/max/mdev = 191.631/193.538/198.724/2.520 ms

    # openvpn (full encryption, auth, etc)
    UDP    19.6    99.75    72.35
    TCP    17.0    94.47    87.99
    ICMP latency min/avg/max/mdev = 202.168/202.377/202.900/0.451 ms

    # wireguard
    UDP    19.3    91.60    94.78
    TCP    17.2    96.76    92.87
    ICMP latency min/avg/max/mdev = 217.925/223.601/230.696/3.266 ms

    # ~1 Gbps channel: VPS (1 core)

    # pure
    UDP    729     73.40    39.93
    TCP    363     96.95    90.40
    ICMP latency min/avg/max/mdev = 106.867/106.994/107.126/0.066 ms

    # ipipou
    UDP    714     63.10    23.53
    TCP    431     95.65    64.56
    ICMP latency min/avg/max/mdev = 107.444/107.523/107.648/0.058 ms

    # openvpn0 (auth only, no encryption)
    UDP    193     17.51     1.62
    TCP     12     95.45    92.80
    ICMP latency min/avg/max/mdev = 107.191/107.334/107.559/0.116 ms

    # wireguard
    UDP    629     22.26     2.62
    TCP    198     77.40    55.98
    ICMP latency min/avg/max/mdev = 107.616/107.788/108.038/0.128 ms

20 Mbps channel

Optimistic 1 Gbps channel

In all cases ipipou is pretty close in terms of performance to the base channel, and that's great!
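"Pretty close" can be quantified directly from the bandwidth column of the raw table. The snippet below only divides each tunnel's throughput by that of the pure channel; the dictionary layout and names are illustrative.

```python
# Bandwidth in Mbps, copied from the raw table above.
BANDWIDTH = {
    "20 Mbps": {
        "pure":      {"UDP": 20.4, "TCP": 19.2},
        "ipipou":    {"UDP": 19.8, "TCP": 18.8},
        "openvpn0":  {"UDP": 19.3, "TCP": 16.1},
        "openvpn":   {"UDP": 19.6, "TCP": 17.0},
        "wireguard": {"UDP": 19.3, "TCP": 17.2},
    },
    "1 Gbps": {
        "pure":      {"UDP": 729, "TCP": 363},
        "ipipou":    {"UDP": 714, "TCP": 431},
        "openvpn0":  {"UDP": 193, "TCP": 12},
        "wireguard": {"UDP": 629, "TCP": 198},
    },
}


def relative(channel: str, tunnel: str, proto: str) -> float:
    """Tunnel throughput as a percentage of the pure channel's throughput."""
    rows = BANDWIDTH[channel]
    return 100 * rows[tunnel][proto] / rows["pure"][proto]


for channel, rows in BANDWIDTH.items():
    for tunnel in rows:
        if tunnel != "pure":
            print(f"{channel:8} {tunnel:10} "
                  f"UDP {relative(channel, tunnel, 'UDP'):6.1f}%  "
                  f"TCP {relative(channel, tunnel, 'TCP'):6.1f}%")
```

Note that the TCP figure for ipipou on the 1 Gbps channel comes out above 100%, which probably reflects run-to-run variance of the channel rather than a real speed-up.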

The unencrypted openvpn tunnel behaved rather oddly in both cases.

If anyone is going to test it, it will be interesting to hear feedback.

May IPv6 and NetPrickle be with us!