The first rule of the Hyper-V Community in Telegram

"And if you love VMware ESXi, then love PowerShell together with ESXi CLI and REST API"

Added by me

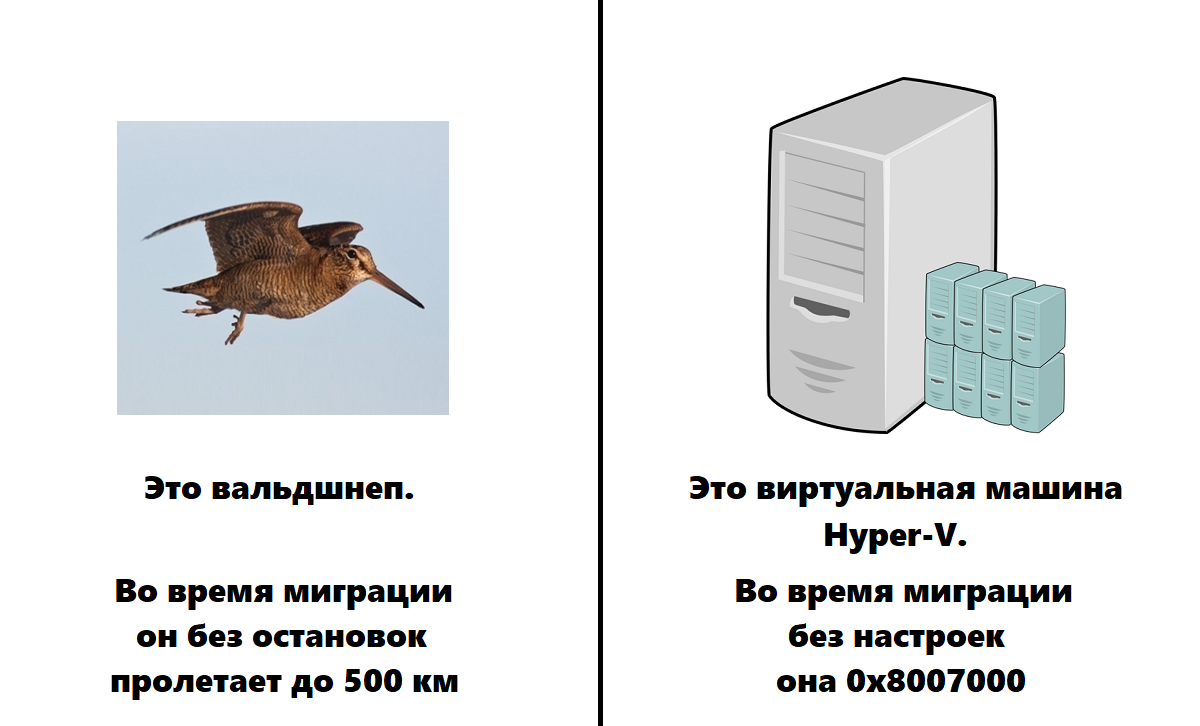

Live migration is a popular feature in Hyper-V. It lets you move running virtual machines between hosts with no visible downtime. There are many guides to moving VMs on the net, but a lot of them are outdated. In addition, not everyone looks into the advanced settings and makes full use of Live Migration's capabilities.

I have collected the nuances and non-obvious parameters for moving VMs quickly within a cluster and between clusters. Along the way I will share a few small secrets of setup and design. I hope this article will be useful for novice admins.

Disclaimer: It is advisable to do all the steps described here BEFORE putting the Hyper-V server into production. Hyper-V never forgives design mistakes and will let you down at the first opportunity. That is, the very next day.

Reviewing the basics

Let's recall how a VM normally migrates from one node to another within a Hyper-V cluster:

- The VM configuration is copied from one cluster node to another.

- The virtual machine memory pages are marked for copying to the target host, and the process of moving them online begins.

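- The VM keeps running during the copy, so some pages change. Hyper-V tracks the modified pages and copies them again over several passes.

- When the remaining delta is small enough, the VM is paused briefly, the rest of its state is transferred, and the VM resumes on the target host.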

This is called live migration. The scheme is valid for any hypervisor.

The more RAM a VM has and the more intensively that memory changes, the longer the move takes. Therefore, live migration traffic needs a fast link and careful configuration.
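To get a feel for that dependence, here is a rough sketch under a simplified model that is an assumption of this example, not something Hyper-V reports: memory is copied at a constant rate while the guest dirties pages at a constant, lower rate. The function name and its parameters are hypothetical.

```powershell
# Hypothetical helper: rough live migration time estimate.
# Simplified model (an assumption): every copy pass must also re-send the pages
# dirtied during the previous pass, so the total time converges to
# Memory / (Bandwidth - DirtyRate) as long as DirtyRate < Bandwidth.
function Get-MigrationTimeEstimate {
    param(
        [double]$MemoryGB,
        [double]$BandwidthGBps,
        [double]$DirtyRateGBps
    )
    if ($DirtyRateGBps -ge $BandwidthGBps) {
        # Pages change faster than they can be copied: the copy never converges
        return [double]::PositiveInfinity
    }
    return $MemoryGB / ($BandwidthGBps - $DirtyRateGBps)
}

# 64 GB of RAM, a 10 Gbit/s link (about 1.25 GB/s), guest dirtying 0.25 GB/s
Get-MigrationTimeEstimate -MemoryGB 64 -BandwidthGBps 1.25 -DirtyRateGBps 0.25   # 64 (seconds)
```

Real migrations also depend on compression, SMB Multichannel and how Hyper-V decides to stop iterating, so treat the number only as an order-of-magnitude guide.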

This is how the classic live migration works inside the Failover Cluster. It requires a shared CSV volume served to all hosts in the cluster.

In addition, there is a second type of live migration: Shared-Nothing Live Migration. This scenario is typically used to move VMs between clusters without downtime. Besides the memory pages, the VHD(X) disk is copied from one Hyper-V host to the other, and the delta of data written to it during the copy is then transferred and synchronized.
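Both scenarios can also be started from PowerShell. A minimal sketch; the VM name, host names and destination path are placeholders, not values from this article:

```powershell
# Classic live migration inside a failover cluster: the disks stay on the shared CSV.
# "VM01" and "HV02" are placeholder names.
Move-ClusterVirtualMachineRole -Name "VM01" -Node "HV02" -MigrationType Live

# Shared-Nothing Live Migration to a host that shares no storage with the source:
# both the memory and the VHD(X) files are transferred.
# "HV03" and the destination path are placeholders.
Move-VM -Name "VM01" -DestinationHost "HV03" -IncludeStorage -DestinationStoragePath "D:\VMs\VM01"
```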

Let's analyze the main nuances of configuring interfaces.

Configuring the protocol settings

- First, open Hyper-V Manager, right-click the host, and choose Hyper-V Settings. In the Live Migration settings, specify the addresses of the network interfaces that the hypervisor will use for migration:

- Next, look at Advanced Features. We are interested in both items: the authentication protocol and the transport used to move our VMs.

- Authentication protocol: CredSSP (Credential Security Support Provider Protocol) is set by default. It is easy to use, but if the infrastructure has several clusters, we will not be able to move VMs between them.

We will choose Kerberos as more secure and suitable for moving VMs between different clusters.

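- Performance options: here we choose the transport. With Switch Embedded Team, select SMB (Server Message Block).

On Windows Server 2016 and later, SMB can split the traffic across several connections (SMB Multichannel) and can use RDMA adapters when they are available.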
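The same host settings can be sketched in PowerShell. This is an illustration rather than a required procedure; 192.168.2.0/24 is the LiveMigration subnet used later in this article, so substitute your own:

```powershell
# Run on each Hyper-V host.
Enable-VMMigration

# Restrict live migration to explicitly listed networks
Set-VMHost -UseAnyNetworkForMigration $false
Add-VMMigrationNetwork "192.168.2.0/24"

# Kerberos authentication and SMB transport, as chosen above
Set-VMHost -VirtualMachineMigrationAuthenticationType Kerberos `
           -VirtualMachineMigrationPerformanceOption SMB

# Check the result
Get-VMHost | Format-List VirtualMachineMigrationEnabled,
                         VirtualMachineMigrationAuthenticationType,
                         VirtualMachineMigrationPerformanceOption,
                         UseAnyNetworkForMigration
```

Kerberos will only work once delegation is configured in Active Directory.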


- For Kerberos, configure constrained delegation (Kerberos Constrained Delegation) on the Computer objects in Active Directory.

Windows Server 2016, NETWORK SERVICE, AD. (Unconstrained Delegation), , :

System Center Virtual Machine Manager (SC VMM), . SC VMM Shared-Nothing Live Migration. - SMB . , Live Migration SMB:

Set-SmbServerConfiguration -EncryptData $false -RejectUnencryptedAccess $false

. .

Windows Admin Center:

Hyper-V network optimization is a highly controversial topic in the community and a limitless field for experimentation (there is no limit to perfection in it by definition). So before setting up a network step by step, let's figure out how technology has changed recently and how you can use it.

As it was before. The old Hyper-V VM migration manuals describe scenarios that use Load Balancing / Failover (LBFO) teaming. LBFO made it possible to group physical network adapters and create interfaces on top of them. But there were also disadvantages, for example: there was no RDMA support, and it was impossible to find out which team port the traffic would go through. And since live migration traffic requires a rather fat channel, this turned into a problem when all network workloads piled into one physical port.

How it is now. In Windows Server 2019, you can't even create a virtual switch on top of an LBFO team. The only supported solution for NIC teaming in Hyper-V is Switch Embedded Team (SET).

SET aggregates adapters, just like the ESXi vSwitch. Physical network ports become a patch cord for different types of traffic (including VM traffic), and virtual interfaces are carved out on top of them.

, . , 2 , 3 ( ). - ESX (1+). Red Hat c . VMware vSphere 4.1 1 (bare-metal).

Microsoft brought a comparable design to Hyper-V with Switch Embedded Team in Windows Server 2016.

In newer versions, SET allows you to create different virtual interfaces for different workloads on top of a group of physical interfaces. In fact, these are virtual network adapters of the root partition that we can manage like virtual adapters of a VM.

How this affects the setup process. In Hyper-V, in addition to the management interface, we usually create interfaces for live migration and interfaces for cluster CSV traffic. To do this, we need to know the number of network ports included in the SET, because that is how many virtual interfaces of each type we will need to create. We also take into account the location of the network ports on the PCI bus, the number of sockets (for the subsequent mapping of interfaces to NUMA nodes) and the number of physical cores on each processor.
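All of this information can be collected on the host before touching any settings. A small inventory sketch using only built-in cmdlets (the output will of course depend on the hardware):

```powershell
# Physical ports: PCI location and the NUMA node each port is attached to
Get-NetAdapterHardwareInfo | Sort-Object Name |
    Format-Table Name, Bus, Device, Function, Slot, NumaNode -AutoSize

# Sockets, physical cores and logical processors
Get-CimInstance -ClassName Win32_Processor |
    Format-Table DeviceID, NumberOfCores, NumberOfLogicalProcessors -AutoSize

# NUMA topology as Hyper-V sees it
Get-VMHostNumaNode
```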

Let's look at the process step by step

- , . , on-board .

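| Interface | Purpose | Network | Gateway | VLAN ID | Number of interfaces |
|---|---|---|---|---|---|
| Management | Management | 192.168.1.0/24 | 192.168.1.1 | 0 (Native) | 1 |
| LiveMigration | Live migration | 192.168.2.0/24 | | 2 | 2 |
| CSV | CSV traffic | 192.168.3.0/24 | | 3 | 2 |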

- Create the SET virtual switch with VMM (Virtual Machine Manager). If there is no VMM, run this PowerShell command on each Hyper-V host:

New-VMSwitch -Name "SET" -NetAdapterName "NIC1","NIC2" -EnableEmbeddedTeaming $true -AllowManagementOS $true -MinimumBandwidthMode Weight

-. MinimumBandwidthMode weight, SET . . Network QoS Policies ( ).

SET RDMA-, MinimumBandwidthMode . , Network QoS Policies RDMA .

- Choose the load-balancing algorithm: Dynamic or Hyper-V Port (the default in Windows Server 2019). Dynamic combines the Address Hash and Hyper-V Port approaches; to select it, run:

Set-VMSwitchTeam "SET" -LoadBalancingAlgorithm Dynamic
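To confirm that the switch really is a SET and to see which algorithm is in effect, the switch and its team can be queried. A quick check, assuming the switch name "SET" used above:

```powershell
# Switch-level properties: embedded teaming, bandwidth mode, management OS access
Get-VMSwitch -Name "SET" |
    Format-List Name, EmbeddedTeamingEnabled, BandwidthReservationMode, AllowManagementOS

# Team-level properties: member adapters and load-balancing algorithm
Get-VMSwitchTeam -Name "SET" |
    Format-List Name, NetAdapterInterfaceDescription, TeamingMode, LoadBalancingAlgorithm
```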

If the SET is created through SC VMM, the algorithm is set to Host Default. On Windows Server 2016 that means Dynamic; on Windows Server 2019 it means Hyper-V Port.

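- Create the virtual interfaces, then assign VLANs and IP addresses to them.

For the live migration and CSV traffic interfaces: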

    # Live Migration adapters
    Add-VMNetworkAdapter -ManagementOS -Name "LiveMigration01" -SwitchName "SET" -NumaAwarePlacement $true
    Add-VMNetworkAdapter -ManagementOS -Name "LiveMigration02" -SwitchName "SET" -NumaAwarePlacement $true
    # VLAN
    Set-VMNetworkAdapterVlan -ManagementOS -VMNetworkAdapterName "LiveMigration*" -VlanId 2 -Access
    # IP addresses
    New-NetIPAddress -InterfaceAlias "vEthernet (LiveMigration01)" -IPAddress 192.168.2.2 -PrefixLength 24 -Confirm:$false
    New-NetIPAddress -InterfaceAlias "vEthernet (LiveMigration02)" -IPAddress 192.168.2.3 -PrefixLength 24 -Confirm:$false

    # CSV traffic adapters
    Add-VMNetworkAdapter -ManagementOS -Name "CSV01" -SwitchName "SET" -NumaAwarePlacement $true
    Add-VMNetworkAdapter -ManagementOS -Name "CSV02" -SwitchName "SET" -NumaAwarePlacement $true
    # VLAN
    Set-VMNetworkAdapterVlan -ManagementOS -VMNetworkAdapterName "CSV*" -VlanId 3 -Access
    # IP addresses
    New-NetIPAddress -InterfaceAlias "vEthernet (CSV01)" -IPAddress 192.168.3.2 -PrefixLength 24 -Confirm:$false
    New-NetIPAddress -InterfaceAlias "vEthernet (CSV02)" -IPAddress 192.168.3.3 -PrefixLength 24 -Confirm:$false

- Enable 9K Jumbo Frames on all adapter types except Management.

: .

    Set-NetAdapterAdvancedProperty -Name "NIC1" -DisplayName "Jumbo Packet" -DisplayValue 9014
    Set-NetAdapterAdvancedProperty -Name "NIC2" -DisplayName "Jumbo Packet" -DisplayValue 9014
    Set-NetAdapterAdvancedProperty -Name "vEthernet (CSV01)" -DisplayName "Jumbo Packet" -DisplayValue "9014 Bytes"
    Set-NetAdapterAdvancedProperty -Name "vEthernet (CSV02)" -DisplayName "Jumbo Packet" -DisplayValue "9014 Bytes"
    Set-NetAdapterAdvancedProperty -Name "vEthernet (LiveMigration01)" -DisplayName "Jumbo Packet" -DisplayValue "9014 Bytes"
    Set-NetAdapterAdvancedProperty -Name "vEthernet (LiveMigration02)" -DisplayName "Jumbo Packet" -DisplayValue "9014 Bytes"
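Jumbo Frames only help if every hop agrees on the size, so it is worth checking the result end to end. A hedged sketch: the property display name can differ between drivers, and 192.168.2.12 is a placeholder for a LiveMigration address on another host.

```powershell
# Show the current jumbo setting on every adapter that exposes this property
Get-NetAdapterAdvancedProperty -DisplayName "Jumbo Packet" |
    Format-Table Name, DisplayName, DisplayValue -AutoSize

# 9014 typically includes the 14-byte Ethernet header, which leaves a 9000-byte IP MTU.
# 8972 = 9000 - 20 (IPv4 header) - 8 (ICMP header).
# -f forbids fragmentation, -S selects the local LiveMigration01 address as the source.
ping.exe 192.168.2.12 -f -l 8972 -S 192.168.2.2
```

If the ping reports that the packet needs to be fragmented, some device on the path (a physical switch port, for example) is still using a smaller MTU.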

, Windows Server, . , Windows. SET Management’. Get-NetAdapterAdvancedProperties.

- , :

CSV-. :

    Set-NetIPInterface -InterfaceIndex 16 -InterfaceMetric 10000
    Set-NetIPInterface -InterfaceIndex 3 -InterfaceMetric 10000
    Set-NetIPInterface -InterfaceIndex 9 -InterfaceMetric 10500
    Set-NetIPInterface -InterfaceIndex 6 -InterfaceMetric 10500
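Interface indexes differ from host to host, so it helps to look them up first. The sketch below lists them; the last command shows the same kind of change addressed by alias instead of index (the adapter and the metric value in it are only an illustration, not the mapping used above):

```powershell
# List IPv4 interfaces with their index and current metric
Get-NetIPInterface -AddressFamily IPv4 | Sort-Object InterfaceMetric |
    Format-Table ifIndex, InterfaceAlias, InterfaceMetric -AutoSize

# Setting the metric by alias avoids hard-coded indexes (illustrative values)
Set-NetIPInterface -InterfaceAlias "vEthernet (CSV01)" -AddressFamily IPv4 -InterfaceMetric 10000
```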

, . - RDMA, . RDMA CPU. RDMA Get-NetAdapterRdma.

: . statemigration.com.

RDMA (, , ). - PCI-. Virtual Machine Queues (VMQ).

, , .

    Set-VMNetworkAdapterTeamMapping -ManagementOS -PhysicalNetAdapterName "NIC1" -VMNetworkAdapterName "LiveMigration01"
    Set-VMNetworkAdapterTeamMapping -ManagementOS -PhysicalNetAdapterName "NIC2" -VMNetworkAdapterName "LiveMigration02"
    Set-VMNetworkAdapterTeamMapping -ManagementOS -PhysicalNetAdapterName "NIC1" -VMNetworkAdapterName "CSV01"
    Set-VMNetworkAdapterTeamMapping -ManagementOS -PhysicalNetAdapterName "NIC2" -VMNetworkAdapterName "CSV02"

- VMQ PCI-. , . : -, , -, 3 . . (SMB)! , RSS.
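The mapping of virtual adapters to physical ports set with Set-VMNetworkAdapterTeamMapping, and the RDMA state of the adapters, can be checked with the matching Get cmdlets. A short verification sketch:

```powershell
# Which physical port each management OS virtual adapter is pinned to
Get-VMNetworkAdapterTeamMapping -ManagementOS

# RDMA state per adapter (physical and virtual)
Get-NetAdapterRdma
```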

Windows Server 2019 VMQ , dVMMQ. , 90%. Windows Server 2019 VMQ .

:

    Set-NetAdapterRss -Name "NIC1" -BaseProcessorGroup 0 -BaseProcessorNumber 2 -MaxProcessors 8 -MaxProcessorNumber 16
    Set-NetAdapterRss -Name "NIC2" -BaseProcessorGroup 0 -BaseProcessorNumber 16 -MaxProcessors 8 -MaxProcessorNumber 30

, . , 2 16 . 32 . Excel 0 31:

Base Processor Number 2. . 16 – MaxProcessorNumber.

BaseProcessor 16 ( ). . , Live Migration.

Using the same cmdlets, you can also set the number of RSS queues. The supported number depends on the specific network card model, so study the card's documentation before changing it.
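As a sketch of that last step: the current RSS state can be read with Get-NetAdapterRss, and the queue count is set through the -NumberOfReceiveQueues parameter. The value 8 is a placeholder, not a recommendation; use what the card's documentation allows.

```powershell
# Current RSS settings: processor range, number of queues, profile
Get-NetAdapterRss -Name "NIC1"

# Set the number of RSS receive queues (8 is a placeholder value)
Set-NetAdapterRss -Name "NIC1" -NumberOfReceiveQueues 8
```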

Setting up migration for a clustered scenario

On the Failover Cluster side, we will additionally relax the cluster heartbeat timeouts:

(Get-Cluster).SameSubnetDelay = 2000

(Get-Cluster).SameSubnetThreshold = 30

- SameSubnetDelay sets how often heartbeats are sent, in milliseconds. By default, it is set to 1 second.

If the cluster nodes are on the same network, setting this parameter is enough. If they are on different networks, then you need to configure CrossSubnetDelay with the same values.

- SameSubnetThreshold sets the maximum number of heartbeats that can be missed. By default, this is 5 heartbeats, the maximum is 120. We will set it to 30 heartbeats.

For heavily loaded machines, it is important to raise both parameters. If the migration does not fit within these limits, such a machine will most likely fail to move.
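With the values above, a node is considered down after 2000 ms × 30 = 60 seconds of missed heartbeats. For nodes on different subnets, the cross-subnet pair can be set the same way. A minimal sketch, reusing the article's values as an assumption for the cross-subnet case:

```powershell
$cluster = Get-Cluster

# Same values as for the same-subnet parameters
$cluster.CrossSubnetDelay = 2000
$cluster.CrossSubnetThreshold = 30

# Review all four heartbeat parameters
$cluster | Format-List *SubnetDelay, *SubnetThreshold

# Heartbeat tolerance in seconds for nodes on the same subnet
$cluster.SameSubnetDelay * $cluster.SameSubnetThreshold / 1000
```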

To make live migration traffic use only a specific network, we will also leave a single dedicated network selected in the Failover Cluster settings:

Actually, this is the bare minimum of settings for Live Migration to work correctly in Hyper-V.
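The same restriction can be sketched in PowerShell through the MigrationExcludeNetworks parameter of the "Virtual Machine" resource type. The cluster network name "LiveMigration" is an assumption; list the real names with Get-ClusterNetwork first.

```powershell
# See which cluster networks exist and what they are called
Get-ClusterNetwork | Format-Table Name, Address, Role -AutoSize

# Exclude every network except the dedicated one ("LiveMigration" is a placeholder name)
$exclude = (Get-ClusterNetwork | Where-Object { $_.Name -ne "LiveMigration" }).Id -join ";"
Get-ClusterResourceType -Name "Virtual Machine" |
    Set-ClusterParameter -Name MigrationExcludeNetworks -Value $exclude

# Check the result
Get-ClusterResourceType -Name "Virtual Machine" |
    Get-ClusterParameter -Name MigrationExcludeNetworks
```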