Introduction

Mass Effect is a popular sci-fi RPG franchise. The first game was released by BioWare in late 2007 exclusively for the Xbox 360 under an agreement with Microsoft. A few months later, in mid-2008, the game received a PC port developed by Demiurge Studios. The port was decent and had no noticeable flaws until AMD released its new Bulldozer architecture processors in 2011. When the game runs on a PC with a modern AMD processor, serious graphical artifacts appear in two locations (Noveria and Ilos):

Yes, it looks ugly.

While this doesn't make the game unplayable, these artifacts are annoying. Fortunately, workarounds exist: for example, you can turn off the lighting with console commands or modify the game maps to remove the broken lights. However, it seems that no one has ever fully understood the cause of this problem. Some sources claim that the FPS Counter mod also fixes it, but I could not find any information about this: the mod's source code does not seem to be posted online, and there is no documentation on how the mod fixes the bug.

Why is this problem so interesting? Bugs that occur only on hardware from certain manufacturers are quite common, and they have been encountered in games for decades. However, as far as I know, this is the only case where a graphics problem is caused by the processor and not the graphics card. In most cases, problems arise with the products of a certain GPU manufacturer and have nothing to do with the CPU, but here the opposite is true. That makes this bug unique and worth investigating.

From online discussions, I gathered that the problem affects AMD FX and Ryzen chips. Unlike older AMD processors, these chips lack the 3DNow! instruction set. This may have nothing to do with the bug, but the gaming community's consensus is that it is the cause: on detecting an AMD processor, the game supposedly tries to use these instructions. Given that there are no known cases of this bug on Intel processors and that only AMD used 3DNow!, it is no wonder the community blamed this instruction set.

But are they the problem, or is something completely different causing the error? Let's find out!

Part 1 - Research

Prelude

Although this problem is extremely easy to reproduce, for a long time I was unable to look into it for a simple reason - I did not have a PC with an AMD processor at hand! Fortunately, this time I was not doing my research alone - Rafael Rivera supported me throughout by providing a test environment with an AMD chip, and also shared his assumptions and thoughts while I made blind guesses, as is usually the case when I search for the sources of such unknown problems.

Now that we had a test environment, the first theory we tested was, of course, cpuid - if people are right in assuming that 3DNow! instructions are to blame, then somewhere in the game code there should be a check for their presence, or at least a check of the CPU vendor. However, this reasoning has a flaw: if the game really tried to use 3DNow! instructions on any AMD chip without checking whether they are supported, it would most likely crash when trying to execute an invalid instruction. Moreover, a brief examination of the game's code shows that it does not check the CPU's capabilities at all. Therefore, whatever the cause of the bug, it does not appear to be a misidentification of processor features, because the game is not interested in them in the first place.
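For reference, this is roughly what a 3DNow! check would look like if the game had one. It is a minimal sketch, not code from the game: the helper names are made up for illustration, and the EDX value is assumed to have been read from CPUID leaf 0x80000001 elsewhere. The bit positions are the ones AMD documents for that leaf.

```cpp
#include <cassert>
#include <cstdint>

// Bit positions in EDX of CPUID leaf 0x80000001 on AMD processors.
constexpr std::uint32_t kBit3DNowExt = 1u << 30; // extended 3DNow!
constexpr std::uint32_t kBit3DNow    = 1u << 31; // 3DNow!

// Hypothetical result type, introduced only for this sketch.
struct Amd3DNowSupport {
    bool base;     // 3DNow! instructions available
    bool extended; // extended 3DNow! instructions available
};

// Decodes the 3DNow! feature bits from an EDX value obtained elsewhere.
constexpr Amd3DNowSupport decode3DNow(std::uint32_t edx) {
    return { (edx & kBit3DNow) != 0, (edx & kBit3DNowExt) != 0 };
}

// Usage with hand-written EDX values (not read from a real CPU).
inline void demo3DNowDecoding() {
    assert(decode3DNow(0x80000000u).base);      // only bit 31 set
    assert(!decode3DNow(0x80000000u).extended); // bit 30 clear
}
```

Code that skipped such a check and executed a 3DNow! instruction anyway would raise an invalid-opcode exception on a chip without the extension, which is why a crash, not a lighting glitch, would be the expected symptom.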

When the case began to seem impossible to debug, Rafael informed me of his discovery - disabling PSGP (Processor Specific Graphics Pipeline) fixes the issue and all characters are lit properly! PSGP is not the most extensively documented concept; in short, it is a legacy feature (only for older DirectX versions) that allows Direct3D to perform optimizations for specific processors:

In previous versions of DirectX, there was a code execution path called PSGP that allowed vertex processing. Applications had to take this path into account and maintain a path for vertex processing by the processor and graphics cores.

With this approach, it is logical that disabling PSGP eliminates artifacts on AMD - the path chosen by modern AMD processors was somehow flawed. How can I disable it? Two ways come to mind:

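- You can pass the D3DCREATE_DISABLE_PSGP_THREADING flag to IDirect3D9::CreateDevice. The documentation describes it as restricting computation to the main application thread; if the flag is not set, the runtime may perform software vertex processing and other computations in a worker thread. Despite having "PSGP" in its name, this flag turned out not to be what we were looking for.

- DirectX provides two registry entries, one that disables PSGP in D3D and one that disables PSGP only in D3DX: DisablePSGP and DisableD3DXPSGP. They can be set either for the whole system or for a single process.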

DisableD3DXPSGP appears to be enough to solve this problem. So if you dislike downloading third-party fixes or modifications, or want to fix the problem without changing the game at all, this is a perfectly workable method. As long as you set this flag only for Mass Effect and not for the whole system, everything will be fine!

PIX

As usual, when you run into graphics problems, PIX will most likely help diagnose them. We captured similar scenes on Intel and AMD hardware and then compared the results. One difference immediately caught my eye - unlike in my previous projects, where the captures did not record the bug and the same capture could look different on different PCs (which points to a bug in the driver or in d3d9.dll), these captures did record the bug! In other words, if you open a capture made on AMD hardware on a PC with an Intel processor, the bug will be displayed.

The AMD capture opened on Intel hardware looks exactly the same as it did on the hardware where it was taken:

What does this tell us?

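- PIX does not take "screenshots"; it records the sequence of D3D commands and replays them on the hardware. So commands captured on an AMD machine produce the same bug when replayed on Intel.

- This strongly suggests that the difference lies not in how the commands are executed (which is how GPU-specific bugs arise), but in which commands, and with which data, are submitted.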

In other words, this is almost certainly not a driver bug. It looks like the input data prepared for the GPU is corrupted in some way (see note 1). This is a very rare case indeed!

At this stage, in order to find the bug, it is necessary to find all the discrepancies between the captures. It's boring work, but there is no other way.

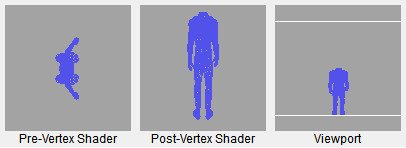

After a long study of the captured data, my attention was drawn to the call to draw the entire character body:

In the Intel capture, this call draws most of the character's body along with lighting and textures. In the AMD capture, it draws a solid black model. Looks like we are on the right trail.

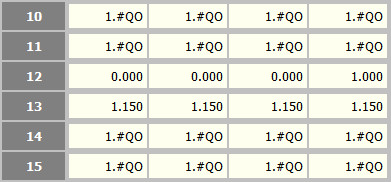

The first obvious candidates to check are the corresponding textures, but they seem to be fine and are identical in both captures. However, some pixel shader constants look strange. Not only do they contain NaN (Not a Number) values, but they do so only in the AMD capture:

1.#QO stands for NaN

Looks promising - NaN values often cause weird graphical artifacts. Funnily enough, the PlayStation 3 version of Mass Effect 2 had a very similar issue in the RPCS3 emulator, also related to NaN!

However, it is too early to celebrate - these may be values left over from previous calls and not used in the current one. Fortunately, in our case it is clear that these NaNs are being passed to D3D for this particular draw call ...

49652 IDirect3DDevice9::SetVertexShaderConstantF(230, 0x3017FC90, 4)

49653 IDirect3DDevice9::SetVertexShaderConstantF(234, 0x3017FCD0, 3)

49654 IDirect3DDevice9::SetPixelShaderConstantF(10, 0x3017F9D4, 1) // Submits constant c10

49655 IDirect3DDevice9::SetPixelShaderConstantF(11, 0x3017F9C4, 1) // Submits constant c11

49656 IDirect3DDevice9::SetRenderState(D3DRS_FILLMODE, D3DFILL_SOLID)

49657 IDirect3DDevice9::SetRenderState(D3DRS_CULLMODE, D3DCULL_CW)

49658 IDirect3DDevice9::SetRenderState(D3DRS_DEPTHBIAS, 0.000f)

49659 IDirect3DDevice9::SetRenderState(D3DRS_SLOPESCALEDEPTHBIAS, 0.000f)

49660 IDirect3DDevice9::TestCooperativeLevel()

49661 IDirect3DDevice9::SetIndices(0x296A5770)

49662 IDirect3DDevice9::DrawIndexedPrimitive(D3DPT_TRIANGLELIST, 0, 0, 2225, 0, 3484) // Draws the character model

... and the pixel shader used in this draw call refers to both constants:

// Registers:

//

// Name Reg Size

// ------------------------ ----- ----

// UpperSkyColor c10 1

// LowerSkyColor c11 1

It looks like both constants come directly from Unreal Engine and, as their names suggest, they can affect lighting. Bingo!

The in-game test confirms our theory - on an Intel machine, a vector of four NaN values is never passed as pixel shader constants; however, on an AMD machine, NaN values start appearing as soon as the player enters the spot where the lighting breaks!
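To illustrate why a single NaN in a lighting constant is enough to ruin a draw call, here is a minimal sketch. The helper names are hypothetical and the blend function is only a stand-in for a lighting term, not the game's actual shader math.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <limits>

// Returns true if any of the `count` floats is NaN. A debugging hook could
// run a check like this on constant data before it is handed to D3D.
inline bool containsNaN(const float* values, std::size_t count) {
    assert(values != nullptr || count == 0);
    for (std::size_t i = 0; i < count; ++i) {
        if (std::isnan(values[i])) return true;
    }
    return false;
}

// Hypothetical stand-in for a lighting term: blends two colour channels.
inline float blendSky(float upper, float lower, float t) {
    return upper * t + lower * (1.0f - t);
}

// Demonstrates that NaN survives arithmetic: any blend involving it is NaN.
inline bool nanPropagatesThroughBlend() {
    const float nan = std::numeric_limits<float>::quiet_NaN();
    return std::isnan(blendSky(nan, 0.5f, 0.5f));
}
```

Because every arithmetic operation involving NaN yields NaN, one bad constant contaminates everything computed from it, which is consistent with an entire model being affected.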

Does this mean the job is done? Far from it, because finding broken constants is only half the battle. The question still remains - where do they come from and can they be replaced? In the in-game test, changing the NaN values partially fixed the problem - the ugly black spots were gone, but the characters still look too dark:

Almost correct ... but not quite.

Given how important these lighting values can be to a scene, we can't stop at this solution. However, we know that we are on the right track!

Alas, every attempt to track down the source of these constants led to something resembling a render thread, not to the place where the values actually originate. While debugging this is possible, it is clear that we need to try a new approach before spending a potentially infinite amount of time tracking data flows between in-game and/or UE3-related structures.

Part 2 - Taking a closer look at D3DX

Taking a step back, we realized that we had missed something earlier. Recall that to "fix" the game, you need to add one of two entries to the registry - DisablePSGP or DisableD3DXPSGP. If we assume that their names reflect their purpose, then DisableD3DXPSGP should be a subset of DisablePSGP, with the former disabling PSGP only in D3DX, and the latter in both D3DX and D3D. With this assumption in mind, let's turn our attention to D3DX.

Mass Effect imports a set of D3DX functions by linking against d3dx9_31.dll:

D3DXUVAtlasCreate

D3DXMatrixInverse

D3DXWeldVertices

D3DXSimplifyMesh

D3DXDebugMute

D3DXCleanMesh

D3DXDisassembleShader

D3DXCompileShader

D3DXAssembleShader

D3DXLoadSurfaceFromMemory

D3DXPreprocessShader

D3DXCreateMesh

Had I seen this list without knowing what we learned from the captures, I would have assumed the likely culprit to be D3DXPreprocessShader or D3DXCompileShader - shaders could be incorrectly optimized and/or compiled on AMD, but fixing that could be insanely difficult.

However, with the knowledge we already have, one function stands out from the list - D3DXMatrixInverse is the only function that can be used to prepare the pixel shader constants.

This function is only called from one place in the game:

int __thiscall InvertMatrix(void *this, int a2)

{

D3DXMatrixInverse(a2, 0, this);

return a2;

}

However ... this wrapper isn't implemented very well. A brief look inside d3dx9_31.dll shows that D3DXMatrixInverse does not touch the output parameter and returns nullptr if the matrix cannot be inverted (because the input matrix is singular), but the game does not check for this at all. The output matrix may be left uninitialized, ouch! Inversion of singular matrices does in fact occur in the game (most often in the main menu), but whatever we did to make the game handle them better (for example, zeroing the output or setting it to an identity matrix), nothing changed graphically. Oh well.
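The contract described above can be sketched in portable C++. This is not the D3DX implementation: the `Mat4` type, the Gauss-Jordan routine, and the singularity threshold are all assumptions chosen for illustration. Only the behaviour on failure (return nullptr, leave the output untouched) mirrors what the text describes.

```cpp
#include <cassert>
#include <cmath>
#include <utility>

struct Mat4 { float m[4][4]; };

inline Mat4 identity() {
    Mat4 r{};
    for (int i = 0; i < 4; ++i) r.m[i][i] = 1.0f;
    return r;
}

// Gauss-Jordan elimination with partial pivoting, computed in double.
// On a singular input it returns nullptr and leaves *out untouched.
inline Mat4* invert(Mat4* out, const Mat4& in) {
    assert(out != nullptr);
    double a[4][8];
    for (int r = 0; r < 4; ++r)
        for (int c = 0; c < 4; ++c) {
            a[r][c] = in.m[r][c];
            a[r][c + 4] = (r == c) ? 1.0 : 0.0;
        }
    for (int col = 0; col < 4; ++col) {
        int pivot = col;
        for (int r = col + 1; r < 4; ++r)
            if (std::fabs(a[r][col]) > std::fabs(a[pivot][col])) pivot = r;
        // Arbitrary threshold, an assumption of this sketch.
        if (std::fabs(a[pivot][col]) < 1e-12) return nullptr;
        if (pivot != col)
            for (int c = 0; c < 8; ++c) std::swap(a[pivot][c], a[col][c]);
        const double inv = 1.0 / a[col][col];
        for (int c = 0; c < 8; ++c) a[col][c] *= inv;
        for (int r = 0; r < 4; ++r) {
            if (r == col) continue;
            const double f = a[r][col];
            for (int c = 0; c < 8; ++c) a[r][c] -= f * a[col][c];
        }
    }
    for (int r = 0; r < 4; ++r)
        for (int c = 0; c < 4; ++c)
            out->m[r][c] = static_cast<float>(a[r][c + 4]);
    return out;
}

// A caller that cannot end up with garbage: the output starts as the
// identity matrix, so a failed inversion simply leaves that fallback.
inline Mat4 invertOrIdentity(const Mat4& in) {
    Mat4 out = identity();
    invert(&out, in);
    return out;
}
```

Pre-initializing the output is the cheapest way to make an "output untouched on failure" contract safe, and it corresponds to the identity-matrix fallback we tried in the game.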

Having disproved this theory, we returned to PSGP - what exactly does PSGP do in D3DX? Rafael Rivera looked into this question and the logic behind this pipeline turned out to be pretty simple:

AddFunctions(x86)

if(DisablePSGP || DisableD3DXPSGP) {

// All optimizations turned off

} else {

if(IsProcessorFeaturePresent(PF_3DNOW_INSTRUCTIONS_AVAILABLE)) {

if((GetFeatureFlags() & MMX) && (GetFeatureFlags() & 3DNow!)) {

AddFunctions(amd_mmx_3dnow)

if(GetFeatureFlags() & Amd3DNowExtensions) {

AddFunctions(amd3dnow_amdmmx)

}

}

if(GetFeatureFlags() & SSE) {

AddFunctions(amdsse)

}

} else if(IsProcessorFeaturePresent(PF_XMMI64_INSTRUCTIONS_AVAILABLE /* SSE2 */)) {

AddFunctions(intelsse2)

} else if(IsProcessorFeaturePresent(PF_XMMI_INSTRUCTIONS_AVAILABLE /* SSE */)) {

AddFunctions(intelsse)

}

}

Unless PSGP is disabled, D3DX selects functions optimized for a specific instruction set. This is logical and brings us back to the original theory. As it turns out, D3DX has functions optimized for AMD and the 3DNow! instruction set, so the game does ultimately use them, albeit indirectly. Modern AMD processors that lack 3DNow! instructions take the same path as Intel processors - that is, intelsse2.

To summarize:

- When PSGP is disabled, both Intel and AMD take the regular x86 code path.

- Intel processors always take the intelsse2 code path (see note 2).

- AMD processors with 3DNow! take the amd_mmx_3dnow or amd3dnow_amdmmx code path, and processors without 3DNow! take intelsse2.
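The selection logic summarized above can be condensed into a small function. This is a simplified sketch: the type and function names are hypothetical, and the several AMD-specific function sets are collapsed into a single `Amd3DNow` value for brevity.

```cpp
#include <cassert>

// Which optimized function set ends up being used.
enum class CodePath { X86, Amd3DNow, IntelSSE2, IntelSSE };

// Hypothetical feature summary, introduced only for this sketch.
struct CpuInfo {
    bool has3DNow;
    bool hasSSE2;
    bool hasSSE;
};

inline CodePath selectPath(bool psgpDisabled, const CpuInfo& cpu) {
    if (psgpDisabled) return CodePath::X86;       // all optimizations off
    if (cpu.has3DNow) return CodePath::Amd3DNow;  // older AMD chips
    if (cpu.hasSSE2) return CodePath::IntelSSE2;  // Intel, and modern AMD
    if (cpu.hasSSE) return CodePath::IntelSSE;
    return CodePath::X86;
}

// Usage: a modern AMD chip (no 3DNow!, has SSE2) lands on the Intel path.
inline void demoSelectPath() {
    const CpuInfo modernAmd{false, true, true};
    assert(selectPath(false, modernAmd) == CodePath::IntelSSE2);
    assert(selectPath(true, modernAmd) == CodePath::X86);
}
```

The point to take away is that nothing in this logic checks the CPU vendor on the SSE2 branch, so a function set tuned and tested on Intel hardware silently runs on AMD hardware as well.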

With this information, we put forward a hypothesis - there is probably something wrong with AMD's SSE2 instructions, and the matrix inversion results computed on AMD via the intelsse2 path are either too inaccurate or completely incorrect.

How can we test this hypothesis? Tests, of course!

PS: You might be thinking, "the game uses d3dx9_31.dll, but the latest D3DX9 library is d3dx9_43.dll, so surely this bug was fixed in newer versions?" We tried to "upgrade" the game to link against the newest DLL, but nothing changed.

Part 3 - Independent tests

We prepared a simple, standalone program to test the accuracy of matrix inversion. During a short game session at the location of the bug, we recorded all inputs and outputs of D3DXMatrixInverse to a file. The standalone test program reads this file and recomputes the results. The game's output is then compared with the data computed by the test program to verify correctness.

After several runs on data collected from Intel and AMD chips with PSGP enabled and disabled, we compared the results across machines. The results are shown below, marking successful (✔️, results are equal) and failed (❌, results are not equal) runs. The last column indicates whether the game handles the data correctly or glitches. We deliberately make no allowance for floating-point inaccuracy and compare the results using memcmp:

| Data source | Intel SSE2 | AMD SSE2 | Intel x86 | AMD x86 | Is the data accepted by the game? |
|---|---|---|---|---|---|
| Intel SSE2 | ✔️ | ❌ | ❌ | ❌ | ✔️ |
| AMD SSE2 | ❌ | ✔️ | ❌ | ❌ | ❌ |
| Intel x86 | ❌ | ❌ | ✔️ | ✔️ | ✔️ |
| AMD x86 | ❌ | ❌ | ✔️ | ✔️ | ✔️ |

D3DXMatrixInverse Test Results
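The comparison step can be sketched as follows. The `Mat4` layout and function names are assumptions; the actual test program is not shown in this article. The tolerance-based variant is included only for contrast, since the tests above deliberately use the strict bitwise one.

```cpp
#include <cassert>
#include <cmath>
#include <cstring>

// Sixteen tightly packed floats, so memcmp sees no padding bytes.
struct Mat4 { float m[4][4]; };

// Strict comparison: every bit of every element must match.
inline bool bitwiseEqual(const Mat4& a, const Mat4& b) {
    return std::memcmp(&a, &b, sizeof(Mat4)) == 0;
}

// Tolerance-based comparison, shown only for contrast.
inline bool nearlyEqual(const Mat4& a, const Mat4& b, float eps) {
    assert(eps >= 0.0f);
    for (int r = 0; r < 4; ++r)
        for (int c = 0; c < 4; ++c)
            if (!(std::fabs(a.m[r][c] - b.m[r][c]) <= eps)) return false;
    return true;
}
```

With a bitwise comparison, a difference in the last bit of a single element already counts as a mismatch, so a ❌ in the table says the results differ but not by how much.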

Curiously, the results show that:

- Computing with SSE2 is not portable between Intel and AMD machines.

- Computing without SSE2 is portable between machines.

- Computations without SSE2 are "accepted" by the game, despite the fact that they differ from computations on Intel SSE2.

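This raises the question: what exactly is wrong with the AMD SSE2 calculations that makes them cause glitches in the game? We don't have an exact answer, but it looks like the result of two factors:

- The SSE2 implementation of D3DXMatrixInverse: some SSE2 instructions appear to give slightly different results on Intel and AMD, and the function does nothing to compensate for these differences.

- The game's code is too sensitive to such inaccuracies.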

At this stage, we were ready to create a fix that replaces D3DXMatrixInverse with a rewritten x86 variation of the D3DX function, and call it a day. However, I had another random thought - D3DX is deprecated and has been replaced by DirectXMath. I decided that if we were going to replace this matrix function anyway, we could swap it for XMMatrixInverse, the "modern" replacement for D3DXMatrixInverse. XMMatrixInverse also uses SSE2 instructions, so it should be just as fast as the D3DX function, but I was almost sure it would suffer from the same errors.

I quickly wrote the code, sent it to Rafael, and ...

It worked great! (?)

In the end, what we took for a problem caused by small differences in SSE2 instructions may be a purely numerical problem. Even though XMMatrixInverse also uses SSE2, it gave perfect results on both Intel and AMD. So we ran the same tests again, and the results were unexpected, to say the least:

| Data source | Intel | AMD | Is the data accepted by the game? |
|---|---|---|---|
| Intel | ✔️ | ✔️ | ✔️ |
| AMD | ✔️ | ✔️ | ✔️ |

Benchmark Results with XMMatrixInverse

Not only does the game work well, but the results match up perfectly and carry over between machines!

With this in mind, we revised our theory about the cause of the bug - the game's excessive sensitivity to inaccuracies is undoubtedly to blame; however, after additional tests, it seemed to us that D3DX was written for fast calculations, while DirectXMath cares more about accuracy. This seems logical: D3DX is a product of the 2000s, and it makes sense that speed was its top priority. DirectXMath was developed later, so its authors could pay more attention to precise, deterministic calculations.

Part 4 - Putting It All Together

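The article turned out to be quite long; I hope you are not tired. Let's summarize what we've done:

- We verified that the game does not use 3DNow! instructions directly (only system DLLs do).

- We found that disabling PSGP fixes the problem on AMD processors.

- Using PIX, we found the culprit - NaN values in the pixel shader constants.

- We traced the source of these values to D3DXMatrixInverse.

- We found that this function gives different results on Intel and AMD when SSE2 is used.

- We fixed the problem by replacing it with XMMatrixInverse.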

The only thing left for us to do is ship a proper replacement! This is where SilentPatch for Mass Effect comes into play. We decided that the cleanest solution was to create a proxy d3dx9_31.dll that forwards every function Mass Effect imports to the system DLL, except for D3DXMatrixInverse. For that function, we wrote a replacement based on XMMatrixInverse.

The replacement DLL provides a very clean and reliable installation and works great with the Origin and Steam versions of the game. It can be used immediately, without the need for ASI Loader or any other third-party software.

As far as we understand, the game now looks as it should, without the slightest deterioration in lighting:

Noveria

Ilos

Downloads

The modification can be downloaded from Mods & Patches. Click here to go directly to the game page:

Download SilentPatch for Mass Effect

After downloading, just extract the archive into the game folder, and that's it! If you are unsure of what to do next, read the setup instructions.

The complete source code of the mod is published on GitHub and can be freely used as a starting point:

Source code on GitHub

Notes

1. In theory, it could also be a bug inside d3d9.dll, which would complicate things a little. Fortunately, this was not the case.

2. Assuming they have the SSE2 instruction set, of course, but any Intel processor without these instructions falls far below the minimum system requirements for Mass Effect.
