There was a lot of controversy about how to create software with increased reliability, methodologies, approaches to the organization of development, tools were discussed. But among all these discussions, what is lost is that software development is a process , and quite well-studied and formalized. And if you look at this process, you will notice that this process focuses not only on how the code is written / generated, but on how this code is verified. Most importantly, development requires the use of tools that you can "trust".

This short tour is over, and let's see how the code is proven to be reliable. First, you need to understand the characteristics of the code that meets the reliability requirements. The very term "code reliability" looks rather vague and contradictory. Therefore, I prefer not to invent anything, and when assessing the reliability of the code I am guided by industry standards, for example, GOST R ISO 26262 or KT-178S. The wording is different, but the idea is the same: reliable code is developed according to a single standard (the so-called coding standard ) and the number of runtime errors in it is minimized. However, everything is not so simple here - the standards provide for situations when, for example, compliance with the coding standard is not possible and such a deviation needs to be documented

Dangerous quagmire MISRA and the like

Coding standards are intended to restrict the use of programming language constructs that can be potentially dangerous. In theory, this should improve the quality of the code, right? Yes, this ensures the quality of the code, but it is always important to remember that 100% compliance with the coding rules is not an end in itself. If a code is 100% compliant with the rules of some MISRA, then this does not mean at all that it is good and correct. You can spend a lot of time refactoring, cleaning up violations of the coding standard, but all this will be wasted if the code ends up working incorrectly or contains runtime errors. Moreover, the rules from MISRA or CERT are usually only part of the coding standard adopted at the enterprise.

Static analysis is not a panacea

The standards prescribe a systematic code review in order to find defects in the code and analyze the code for coding standards.

The static analysis tools commonly used for this purpose are good at spotting flaws, but they do not prove that the source code is free of runtime errors. And a lot of errors detected by static analyzers are actually false positives of tools. As a result, the use of these tools does not greatly reduce the time spent on checking the code due to the need to check the results of the check. Worse, they may not detect runtime errors, which is unacceptable for applications that require high reliability.

Formal code verification

So, static analyzers are not always able to catch runtime errors. How can they be detected and eliminated? In this case, formal verification of the source code is required.

First of all, you need to understand what kind of animal is it? Formal verification is the proof of the error-free code using formal methods. It sounds scary, but in fact - it's like a proof of a theorem from matan. There is no magic here. This method differs from traditional static analysis, as it uses an abstract interpretationrather than heuristics. This gives us the following: we can prove that there are no specific runtime errors in the code. What are these mistakes? These are all sorts of array overruns, division by zero, integer overflow, and so on. Their meanness lies in the fact that the compiler will collect code containing such errors (since such code is syntactically correct), but they will appear when the code is run.

Let's look at an example. Below in the spoilers is the code for a simple PI controller:

View Code

pictrl.c

#include "pi_control.h"

/* Global variable definitions */

float inp_volt[2];

float integral_state;

float duty_cycle;

float direction;

float normalized_error;

/* Static functions */

static void pi_alg(float Kp, float Ki);

static void process_inputs(void);

/* control_task implements a PI controller algorithm that ../

*

* - reads inputs from hardware on actual and desired position

* - determines error between actual and desired position

* - obtains controller gains

* - calculates direction and duty cycle of PWM output using PI control algorithm

* - sets PWM output to hardware

*

*/

void control_task(void)

{

float Ki;

float Kp;

/* Read inputs from hardware */

read_inputs();

/* Convert ADC values to their respective voltages provided read failure did not occur, otherwise do not update input values */

if (!read_failure) {

inp_volt[0] = 0.0048828125F * (float) inp_val[0];

inp_volt[1] = 0.0048828125F * (float) inp_val[1];

}

/* Determine error */

process_inputs();

/* Determine integral and proprortional controller gains */

get_control_gains(&Kp,&Ki);

/* PI control algorithm */

pi_alg(Kp, Ki);

/* Set output pins on hardware */

set_outputs();

}

/* process_inputs computes the error between the actual and desired position by

* normalizing the input values using lookup tables and then taking the difference */

static void process_inputs(void)

{

/* local variables */

float rtb_AngleNormalization;

float rtb_PositionNormalization;

/* Normalize voltage values */

look_up_even( &(rtb_AngleNormalization), inp_volt[1], angle_norm_map, angle_norm_vals);

look_up_even( &(rtb_PositionNormalization), inp_volt[0], pos_norm_map, pos_norm_vals);

/* Compute error */

normalized_error = rtb_PositionNormalization - rtb_AngleNormalization;

}

/* look_up_even provides a lookup table algorithm that works for evenly spaced values.

*

* Inputs to the function are...

* pY - pointer to the output value

* u - input value

* map - structure containing the static lookup table data...

* valueLo - minimum independent axis value

* uSpacing - increment size of evenly spaced independent axis

* iHi - number of increments available in pYData

* pYData - pointer to array of values that make up dependent axis of lookup table

*

*/

void look_up_even( float *pY, float u, map_data map, float *pYData)

{

/* If input is below range of lookup table, output is minimum value of lookup table (pYData) */

if (u <= map.valueLo )

{

pY[1] = pYData[1];

}

else

{

/* Determine index of output into pYData based on input and uSpacing */

float uAdjusted = u - map.valueLo;

unsigned int iLeft = uAdjusted / map.uSpacing;

/* If input is above range of lookup table, output is maximum value of lookup table (pYData) */

if (iLeft >= map.iHi )

{

(*pY) = pYData[map.iHi];

}

/* If input is in range of lookup table, output will interpolate between lookup values */

else

{

{

float lambda; // fractional part of difference between input and nearest lower table value

{

float num = uAdjusted - ( iLeft * map.uSpacing );

lambda = num / map.uSpacing;

}

{

float yLeftCast; // table value that is just lower than input

float yRghtCast; // table value that is just higher than input

yLeftCast = pYData[iLeft];

yRghtCast = pYData[((iLeft)+1)];

if (lambda != 0) {

yLeftCast += lambda * ( yRghtCast - yLeftCast );

}

(*pY) = yLeftCast;

}

}

}

}

}

static void pi_alg(float Kp, float Ki)

{

{

float control_output;

float abs_control_output;

/* y = integral_state + Kp*error */

control_output = Kp * normalized_error + integral_state;

/* Determine direction of torque based on sign of control_output */

if (control_output >= 0.0F) {

direction = TRUE;

} else {

direction = FALSE;

}

/* Absolute value of control_output */

if (control_output < 0.0F) {

abs_control_output = -control_output;

} else if (control_output > 0.0F) {

abs_control_output = control_output;

}

/* Saturate duty cycle to be less than 1 */

if (abs_control_output > 1.0F) {

duty_cycle = 1.0F;

} else {

duty_cycle = abs_control_output;

}

/* integral_state = integral_state + Ki*Ts*error */

integral_state = Ki * normalized_error * 1.0e-002F + integral_state;

}

}

pi_control.h

/* Lookup table structure */

typedef struct {

float valueLo;

unsigned int iHi;

float uSpacing;

} map_data;

/* Macro definitions */

#define TRUE 1

#define FALSE 0

/* Global variable declarations */

extern unsigned short inp_val[];

extern map_data angle_norm_map;

extern float angle_norm_vals[11];

extern map_data pos_norm_map;

extern float pos_norm_vals[11];

extern float inp_volt[2];

extern float integral_state;

extern float duty_cycle;

extern float direction;

extern float normalized_error;

extern unsigned char read_failure;

/* Function declarations */

void control_task(void);

void look_up_even( float *pY, float u, map_data map, float *pYData);

extern void read_inputs(void);

extern void set_outputs(void);

extern void get_control_gains(float* c_prop, float* c_int);

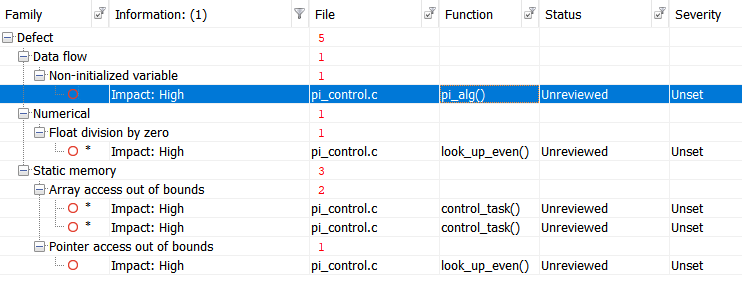

Let's run the test with Polyspace Bug Finder , a certified and qualified static analyzer, and get the following results:

For convenience, let's tabulate the results:

View Results

|

|

|

|

| Non-initialized variable

|

Local variable 'abs_control_output' may be read before being initialized.

|

159

|

| Float division by zero

|

Divisor is 0.0.

|

99

|

| Array access out of bounds

|

Attempt to access element out of the array bounds.

Valid index range starts at 0. |

38

|

| Array access out of bounds

|

Attempt to access element out of the array bounds.

Valid index range starts at 0. |

39

|

| Pointer access out of bounds

|

Attempt to dereference pointer outside of the pointed object at offset 1.

|

93

|

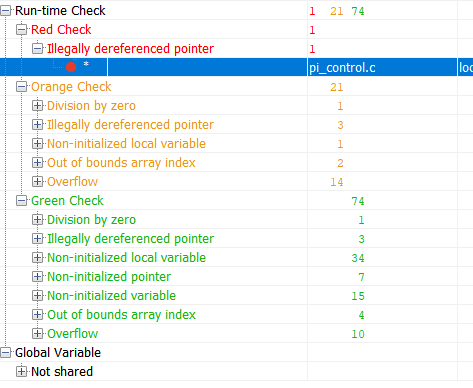

And now we verify the same code using the Polyspace Code Prover formal verification tool:

Green in the results is code that has been proven to be free of runtime errors. Red - proven error. Orange - The tool is out of data. The results marked in green are the most interesting. If for a part of the code the absence of a runtime error has been proven, then for this part of the code the amount of testing can be significantly reduced (for example, testing for robustness can no longer be performed) And now, let's look at the summary table of potential and proven errors:

View Results

|

|

|

|

| Out of bounds array index

|

38

|

Warning: array index may be outside bounds: [array size undefined]

|

| Out of bounds array index

|

39

|

Warning: array index may be outside bounds: [array size undefined]

|

| Overflow

|

70

|

Warning: operation [-] on float may overflow (on MIN or MAX bounds of FLOAT32)

|

| Illegally dereferenced pointer

|

93

|

Error: pointer is outside its bounds

|

| Overflow

|

98

|

Warning: operation [-] on float may overflow (result strictly greater than MAX FLOAT32)

|

| Division by zero

|

99

|

Warning: float division by zero may occur

|

| Overflow

|

99

|

Warning: operation [conversion from float32 to unsigned int32] on scalar may overflow (on MIN or MAX bounds of UINT32)

|

| Overflow

|

99

|

Warning: operation [/] on float may overflow (on MIN or MAX bounds of FLOAT32)

|

| Illegally dereferenced pointer

|

104

|

Warning: pointer may be outside its bounds

|

| Overflow

|

114

|

Warning: operation [-] on float may overflow (result strictly greater than MAX FLOAT32)

|

| Overflow

|

114

|

Warning: operation [*] on float may overflow (on MIN or MAX bounds of FLOAT32)

|

| Overflow

|

115

|

Warning: operation [/] on float may overflow (on MIN or MAX bounds of FLOAT32)

|

| Illegally dereferenced pointer

|

121

|

Warning: pointer may be outside its bounds

|

| Illegally dereferenced pointer

|

122

|

Warning: pointer may be outside its bounds

|

| Overflow

|

124

|

Warning: operation [+] on float may overflow (on MIN or MAX bounds of FLOAT32)

|

| Overflow

|

124

|

Warning: operation [*] on float may overflow (on MIN or MAX bounds of FLOAT32)

|

| Overflow

|

124

|

Warning: operation [-] on float may overflow (on MIN or MAX bounds of FLOAT32)

|

| Overflow

|

142

|

Warning: operation [*] on float may overflow (on MIN or MAX bounds of FLOAT32)

|

| Overflow

|

142

|

Warning: operation [+] on float may overflow (on MIN or MAX bounds of FLOAT32)

|

| Non-uninitialized local variable

|

159

|

Warning: local variable may be non-initialized (type: float 32)

|

| Overflow

|

166

|

Warning: operation [*] on float may overflow (on MIN or MAX bounds of FLOAT32)

|

| Overflow

|

166

|

Warning: operation [+] on float may overflow (on MIN or MAX bounds of FLOAT32)

|

This table tells me the following:

There was a runtime error on line 93 that is guaranteed to happen. The rest of the warnings tell me that I either configured the verification incorrectly, or I need to write a security code or overcome them in another way.

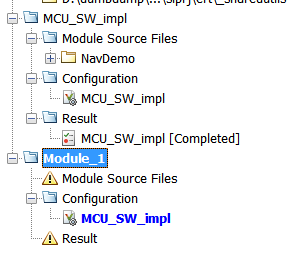

It may seem that formal verification is very cool and the whole project should be verified uncontrollably. However, as with any instrument, there are limitations, primarily related to the time spent. In short, formal verification is slow. So slow. Performance is limited by the mathematical complexity of both the abstract interpretation itself and the volume of the code to be verified. Therefore, you should not try to quickly verify the Linux kernel. All verification projects in Polyspace can be divided into modules that can be verified independently of each other, and each module has its own configuration. That is, we can adjust the verification thoroughness for each module separately.

"Trust" in tools

When you deal with industry standards such as KT-178C or GOST R ISO 26262, then you constantly come across things like "trust in the tool" or "qualification of the tool". What is it? This is a process in which you show that the results of the development or testing tools that were used in the project can be trusted and their errors are documented. This process is a topic for a separate article, since not everything is obvious. The main thing here is this: the tools used in the industry always come with a set of documents and tests that help in this process.

Outcome

With a simple example, we looked at the difference between classical static analysis and formal verification. Can it be applied outside of projects that require adherence to industry standards? Yes, of course you can. You can even ask for a trial version here .

By the way, if you are interested, you can make a separate article about instrument certification. Write in the comments if you need such an article.