Introduction

Google Dorks or Google Hacking is a technique used by the media, investigators, security engineers, and anyone else to query various search engines to discover hidden information and vulnerabilities that can be found on public servers. It is a technique in which conventional website search queries are used to their fullest extent to determine the information hidden on the surface.

How does Google Dorking work?

This example of collecting and analyzing information, acting as an OSINT tool, is not a Google vulnerability or a device for hacking website hosting. On the contrary, it acts as a conventional data retrieval process with advanced capabilities. This is not new, as there are a huge number of websites that are over a decade old and serve as repositories for exploring and using Google Hacking.

Whereas search engines index, store headers and page content, and link them together for optimal search queries. But unfortunately, the web spiders of any search engine are configured to index absolutely all information found. Even though the administrators of the web resources had no intention of publishing this material.

However, the most interesting thing about Google Dorking is the huge amount of information that can help everyone in the process of learning the Google search process. Can help newcomers to find missing relatives, or can teach how to extract information for their own benefit. In general, each resource is interesting and amazing in its own way and can help everyone in what exactly he is looking for.

What information can I find through Dorks?

Ranging from remote access controllers of various factory machinery to configuration interfaces of critical systems. There is an assumption that no one will ever find a huge amount of information posted on the net.

However, let's look at it in order. Imagine a new CCTV camera that lets you watch it live on your phone anytime you want. You set up and connect to it via Wi-Fi, and download the app to authenticate the security camera login. After that, you can access the same camera from anywhere in the world.

In the background, not everything looks so simple. The camera sends a request to the Chinese server and plays the video in real time, allowing you to log in and open the video feed hosted on the server in China from your phone. This server may not require a password to access the feed from your webcam, making it publicly available to anyone looking for the text contained on the camera view page.

And unfortunately, Google is ruthlessly efficient at finding any device on the Internet running on HTTP and HTTPS servers. And since most of these devices contain some kind of web platform to customize them, this means that a lot of things that weren't meant to be on Google end up there.

By far the most serious file type is the one that carries the credentials of users or the entire company. This usually happens in two ways. In the first, the server is configured incorrectly and exposes its administrative logs or logs to the public on the Internet. When passwords are changed or the user is unable to log in, these archives can leak along with credentials.

The second option occurs when configuration files containing the same information (logins, passwords, database names, etc.) become publicly available. These files must be hidden from any public access, as they often leave important information. Any of these errors can lead to the fact that an attacker finds these loopholes and obtains all the necessary information.

This article illustrates the use of Google Dorks to show not only how to find all of these files, but also how vulnerable platforms can be that contain information in the form of a list of addresses, email, pictures, and even a list of publicly available webcams.

Parsing search operators

Dorking can be used on various search engines, not just Google. In day-to-day use, search engines such as Google, Bing, Yahoo, and DuckDuckGo take a search query or search query string and return relevant results. Also, these same systems are programmed to accept more advanced and complex operators that greatly narrow down these search terms. An operator is a keyword or phrase that has special meaning to a search engine. Examples of commonly used operators are: "inurl", "intext", "site", "feed", "language". Each operator is followed by a colon, followed by the corresponding key phrase or phrases.

These operators allow you to search for more specific information, such as specific lines of text within pages of a website, or files hosted at a specific URL. Among other things, Google Dorking can also find hidden login pages, error messages that reveal information about available vulnerabilities and shared files. The main reason is that the website administrator may have simply forgotten to exclude from public access.

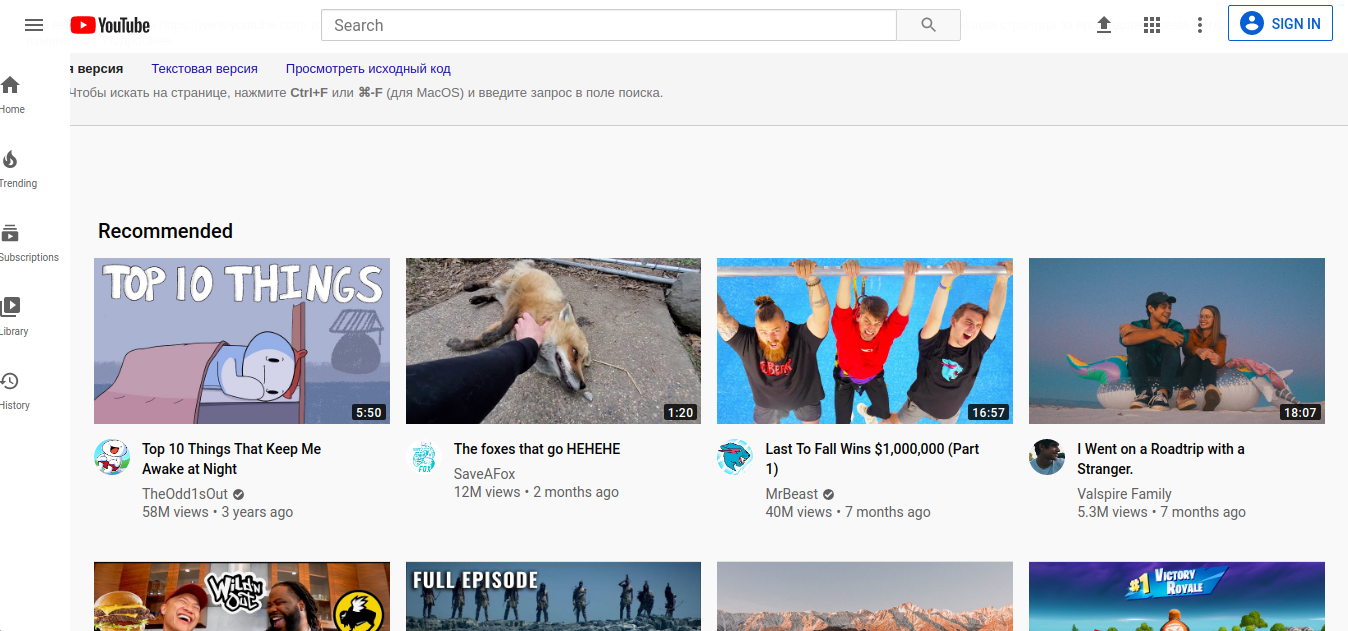

The most practical and at the same time interesting Google service is the ability to search for deleted or archived pages. This can be done using the "cache:" operator. The operator works in such a way that it shows the saved (deleted) version of the web page stored in the Google cache. The syntax for this operator is shown here:

cache: www.youtube.com

After making the above request to Google, access to the previous or outdated version of the Youtube web page is provided. The command allows you to call the full version of the page, the text version, or the page source itself (complete code). The exact time (date, hour, minute, second) of the indexing made by the Google spider is also indicated. The page is displayed as a graphic file, although the search within the page itself is carried out in the same way as in a normal HTML page (the keyboard shortcut CTRL + F). The results of the "cache:" command depend on how often the web page has been indexed by Google. If the developer himself sets the indicator with a certain frequency of visits in the head of the HTML document, then Google recognizes the page as secondary and usually ignores it in favor of the PageRank ratio.which is the main factor in the frequency of page indexing. Therefore, if a particular web page has been changed between visits by the Google crawler, it will not be indexed or read using the "cache:" command. Examples that work especially well when testing this feature are frequently updated blogs, social media accounts, and online portals.

Deleted information or data that was placed by mistake or needs to be deleted at some point can be recovered very easily. The negligence of the web platform administrator can put him at risk of spreading unwanted information.

User information

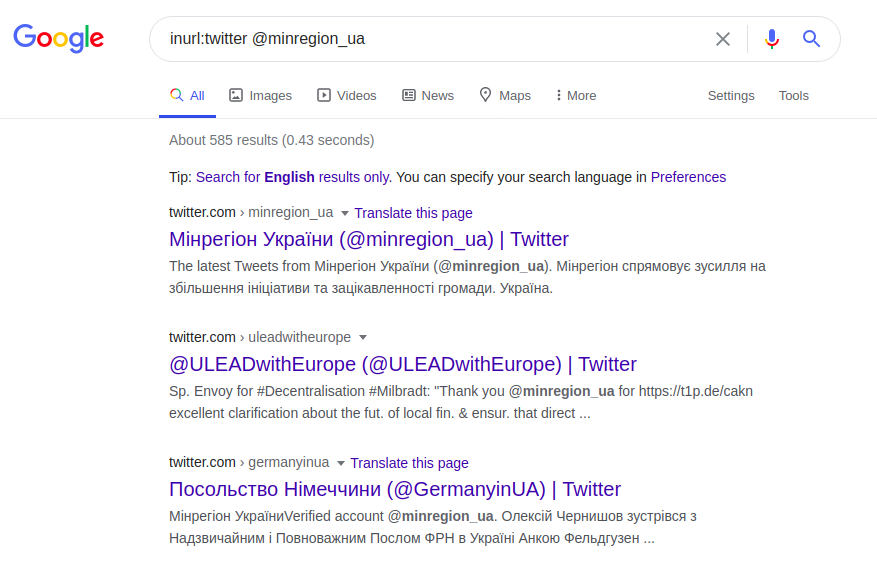

Searching for information about users is used using advanced operators, which make search results accurate and detailed. The operator "@" is used to search for indexing users in social networks: Twitter, Facebook, Instagram. Using the example of the same Polish university, you can find its official representative, on one of the social platforms, using this operator as follows:

inurl: twitter @minregion_ua

This Twitter request finds the user "minregion_ua". Assuming that the place or name of the work of the user we are looking for (the Ministry for the Development of Communities and Territories of Ukraine) and his name are known, you can make a more specific request. And instead of having to tediously search the entire institution's web page, you can ask the correct query based on the email address and assume that the address name must include at least the name of the requested user or institution. For instance:

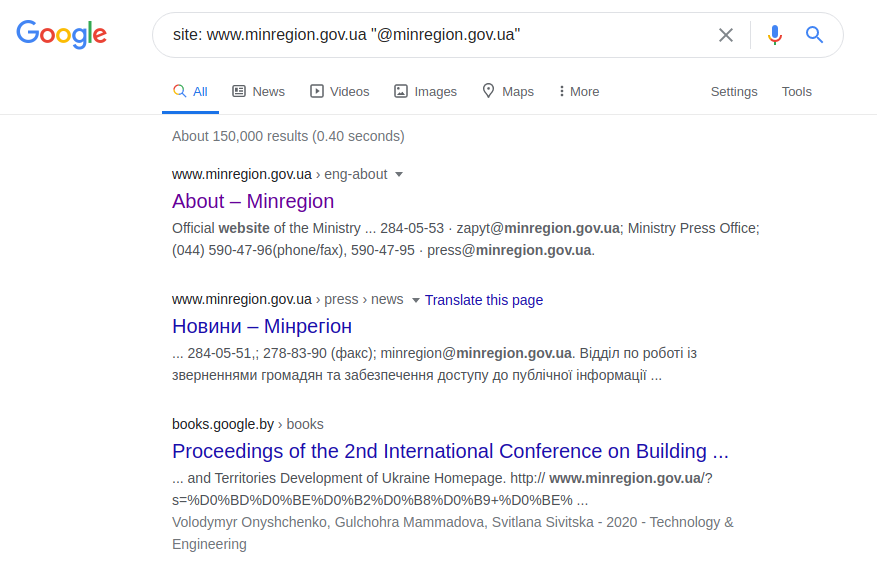

site: www.minregion.gov.ua "@ minregion.ua"

You can also use a less complicated method and send a request only to email addresses, as shown below, in the hope of luck and lack of professionalism of the web resource administrator.

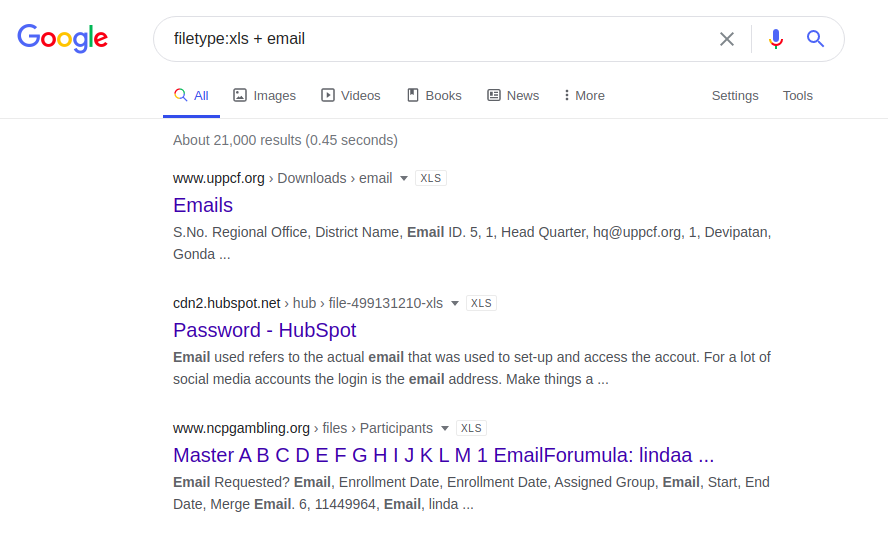

email.xlsx

filetype: xls + email

In addition, you can try to get email addresses from a web page with the following request:

site: www.minregion.gov.ua intext: e-mail

The above query will search for the keyword "email" on the web page of the Ministry for the Development of Communities and Territories of Ukraine. Finding email addresses is of limited use and generally requires little preparation and gathering user information in advance.

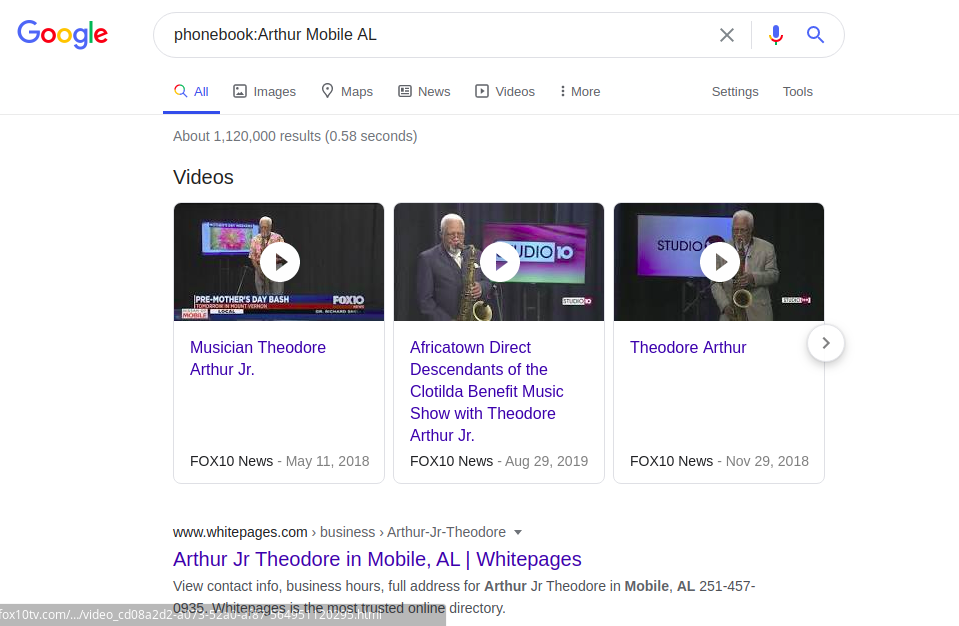

Unfortunately, searching for indexed phone numbers through Google's phonebook is limited to the United States only. For instance:

phonebook: Arthur Mobile AL

Searching for user information is also possible through Google "image search" or reverse image search. This allows you to find identical or similar photos on sites indexed by Google.

Web resource information

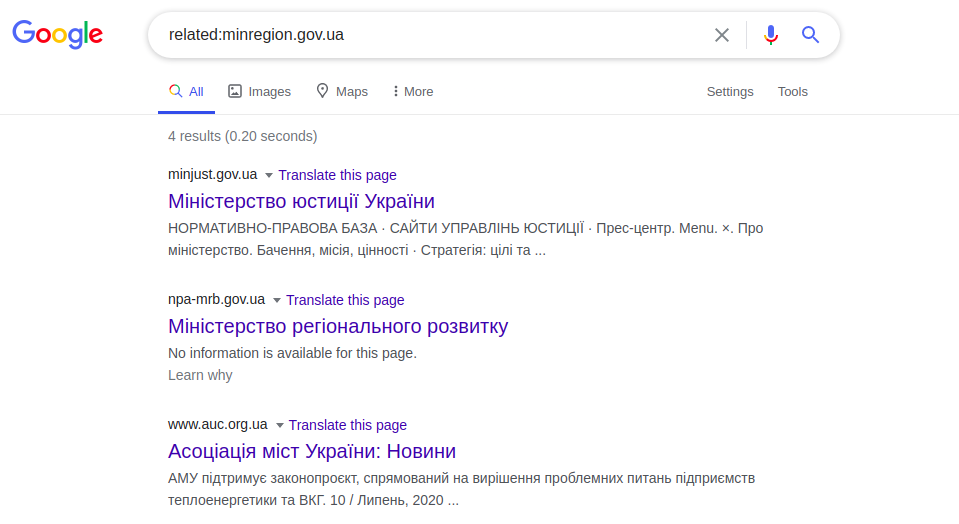

Google has several useful operators, in particular "related:", which displays a list of "similar" websites to the desired one. Similarity is based on functional links, not logical or meaningful links.

related: minregion.gov.ua

This example displays pages of other Ministries of Ukraine. This operator works like the "Related Pages" button in advanced Google searches. In the same way, the “info:” request works, which displays information on a specific web page. This is the specific information of the web page presented in the website title (), namely in the meta description tags (<meta name = “Description”). Example:

info: minregion.gov.ua

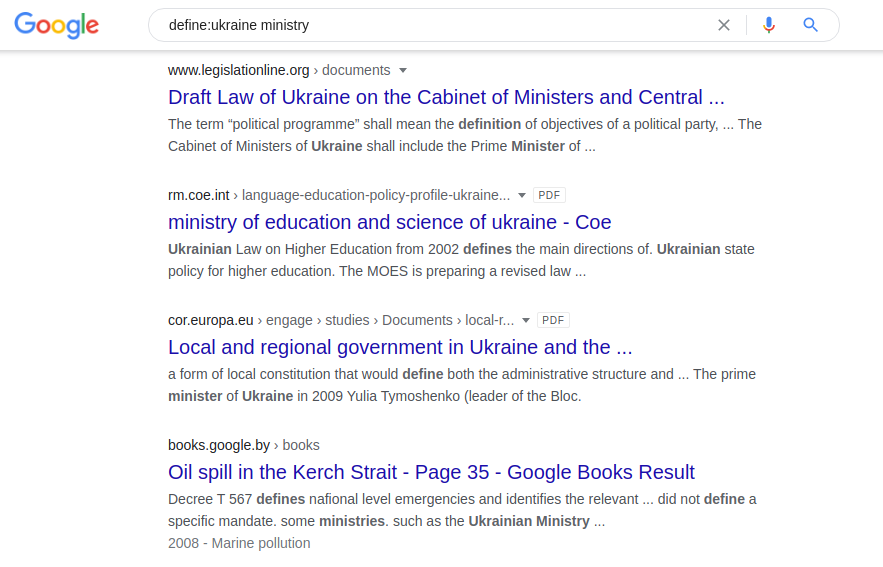

Another query, "define:", is quite useful in finding scientific work. It allows you to get definitions of words from sources such as encyclopedias and online dictionaries. An example of its application:

define: ukraine territories The

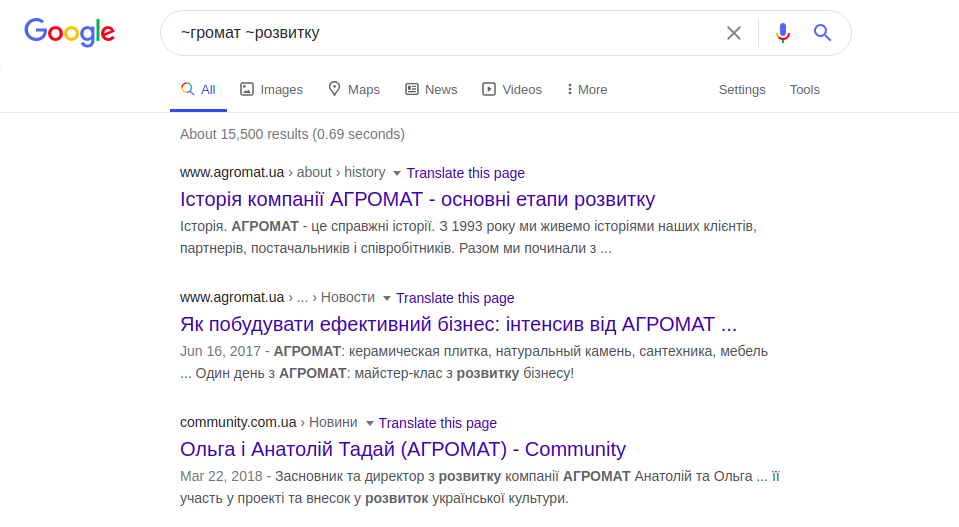

universal operator - tilde ("~"), allows you to search for similar words or synonyms:

~ communities ~ development

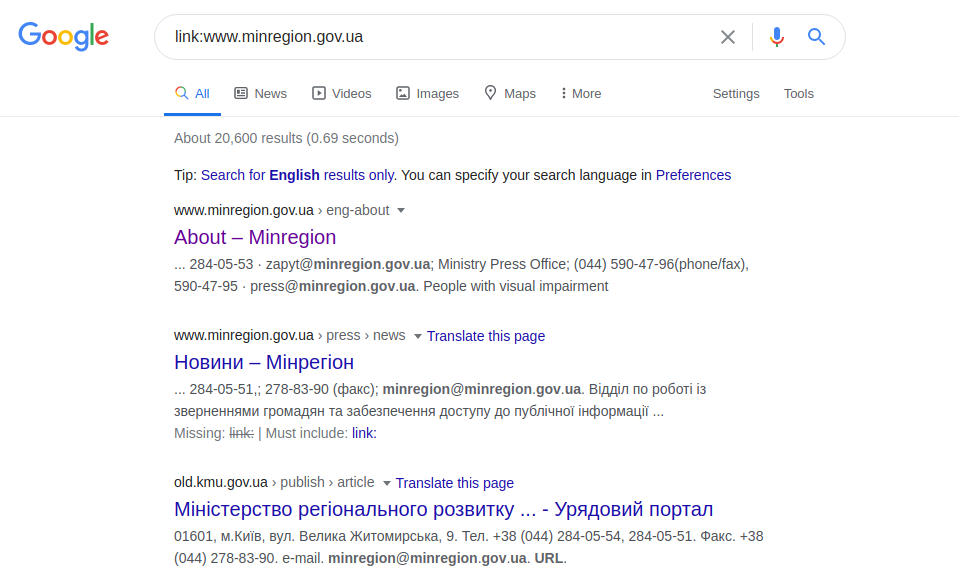

The above query displays both websites with the words “communities” (territories) and “development” (development), and websites with the synonym “communities”. The "link:" operator, which modifies the query, limits the search range to the links specified for a specific page.

link: www.minregion.gov.ua

However, this operator does not display all results and does not expand the search criteria.

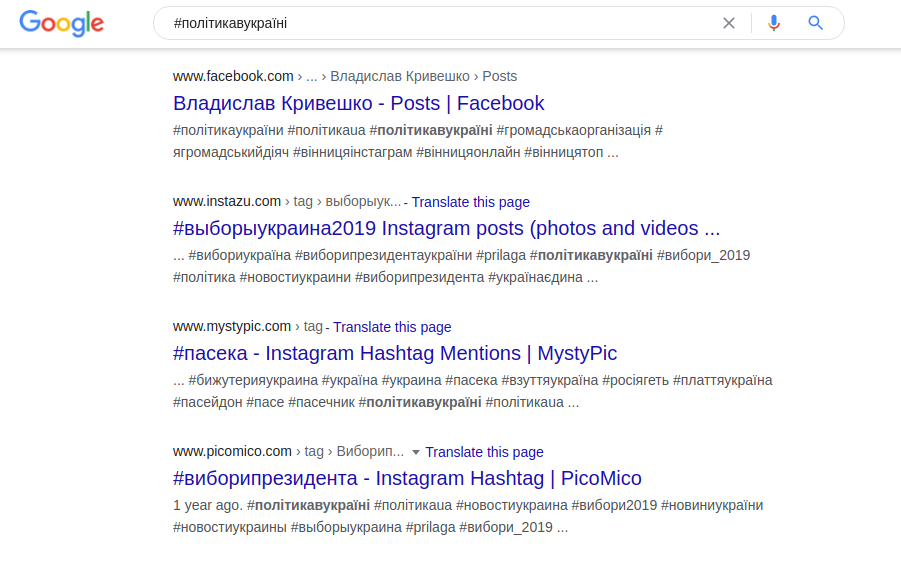

Hashtags are a kind of identification numbers that allow you to group information. They are currently used on Instagram, VK, Facebook, Tumblr and TikTok. Google allows you to search many social networks at the same time or only recommended ones. An example of a typical query for any search engine is:

#polyticavukrainі

Operator "AROUND (n)" allows you to search for two words located at a distance of a certain number of words from each other. Example:

Ministry of AROUND (4) of Ukraine

The result of the above query is to display websites that contain these two words ("ministry" and "Ukraine"), but they are separated from each other by four other words.

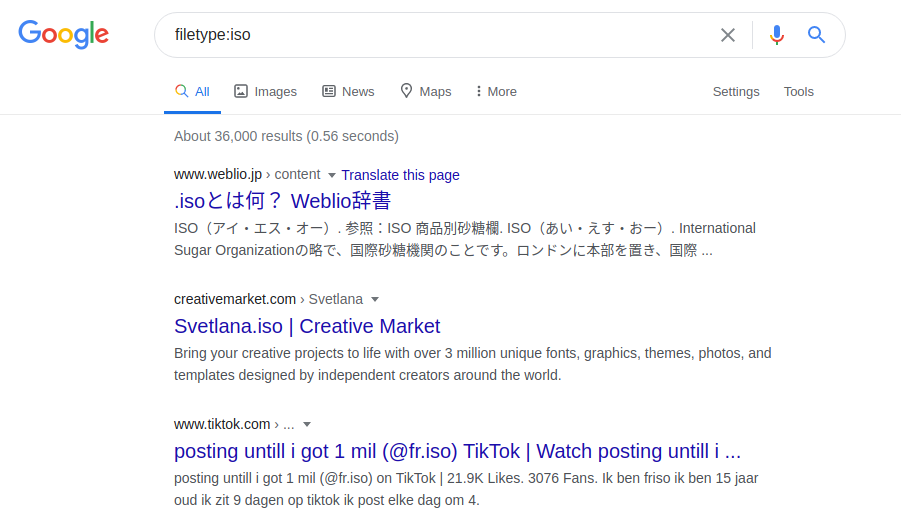

Searching by file type is also extremely useful, as Google indexes content according to the format in which it was recorded. To do this, use the "filetype:" operator. There is a very wide range of file searches currently in use. Of all the search engines available, Google provides the most sophisticated set of operators to search for open source.

As an alternative to the above operators, tools such as Maltego and Oryon OSINT Browser are recommended. They provide automatic data retrieval and do not require the knowledge of special operators. The mechanism of the programs is very simple: using the correct query sent to Google or Bing, documents published by the institution of interest to you are found and the metadata from these documents is analyzed. A potential information resource for such programs is each file with any extension, for example: ".doc", ".pdf", ".ppt", ".odt", ".xls" or ".jpg".

In addition, it should be said about how to properly take care of "cleaning up your metadata" before making files public. Some web guides provide at least several ways to get rid of meta information. However, it is impossible to deduce the best way, because it all depends on the individual preferences of the administrator himself. It is generally recommended that you write the files in a format that does not initially store metadata, and then make the files available. There are numerous free metadata cleaning programs on the Internet, mainly for images. ExifCleaner can be considered as one of the most desirable. In the case of text files, it is highly recommended that you clean up manually.

Information unknowingly left by site owners

Resources indexed by Google remain public (for example, internal documents and company materials left on the server), or they are kept for convenience by the same people (for example, music files or movie files). Searching for such content can be done with Google in many different ways, and the easiest one is just guessing. If, for example, there are files 5.jpg, 8.jpg and 9.jpg in a certain directory, you can predict that there are files from 1 to 4, from 6 to 7 and even more 9. Therefore, you can get access to materials that should not were to be in public. Another way is to search for specific types of content on websites. You can search for music files, photos, movies and books (e-books, audiobooks).

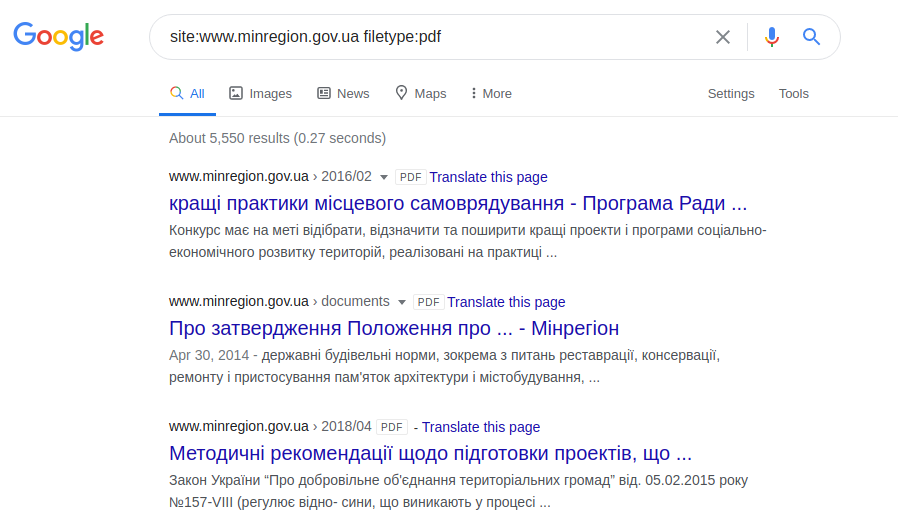

In another case, it may be files that the user unknowingly left in public access (for example, music on an FTP server for his own use). This information can be obtained in two ways: using the "filetype:" operator or the "inurl:" operator. For instance:

filetype: doc site: gov.ua

site: www.minregion.gov.ua filetype: pdf

site: www.minregion.gov.ua inurl: doc

You can also search for program files using a search query and filtering the desired file by its extension:

filetype: iso

Information about the structure of web pages

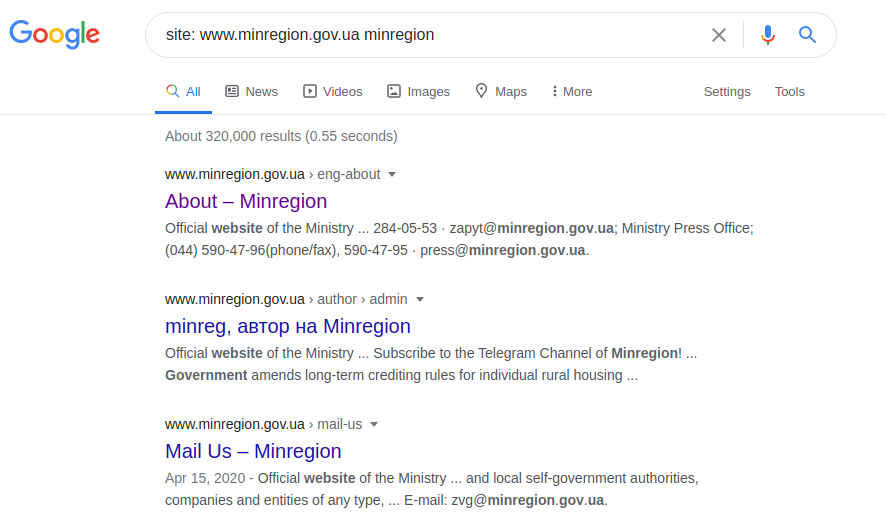

In order to view the structure of a particular web page and reveal its entire structure, which will help the server and its vulnerabilities in the future, you can do this using only the "site:" operator. Let's analyze the following phrase:

site: www.minregion.gov.ua minregion

We start searching for the word “minregion” in the domain “www.minregion.gov.ua”. Every site from this domain (Google searches both in text, in headings and in the title of the site) contains this word. Thus, getting the complete structure of all sites for that particular domain. Once the directory structure is available, a more accurate result (although this may not always happen) can be obtained with the following query:

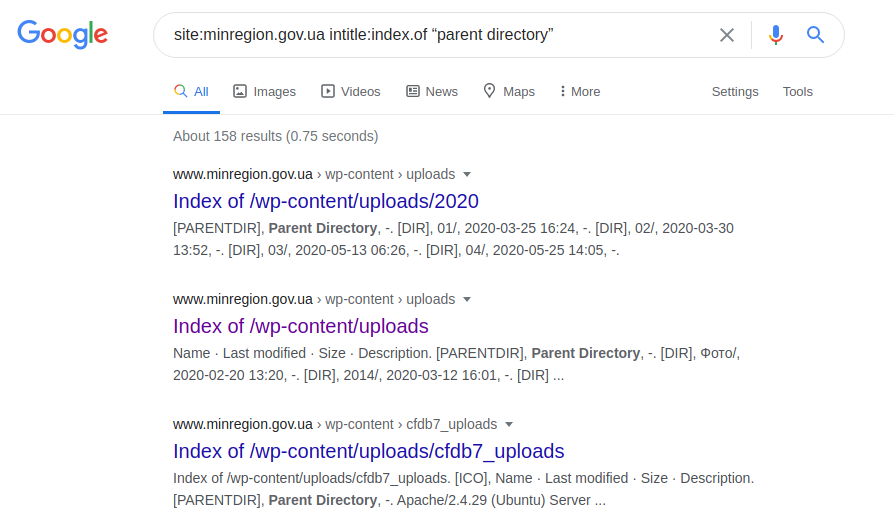

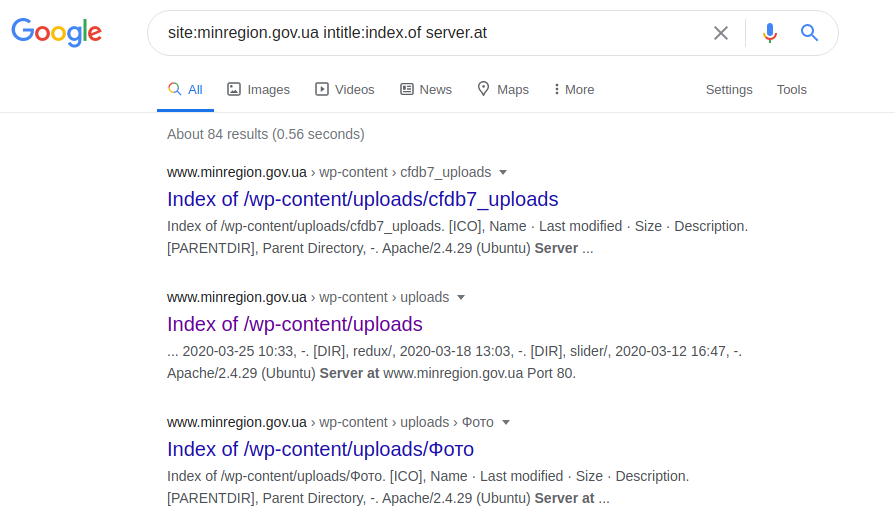

site: minregion.gov.ua intitle: index.of "parent directory"

It shows the least protected subdomains of "minregion.gov.ua", sometimes with the ability to search the entire directory, along with the possible download of files. Therefore, of course, such a request is not applicable to all domains, since they can be protected or run under the control of some other server.

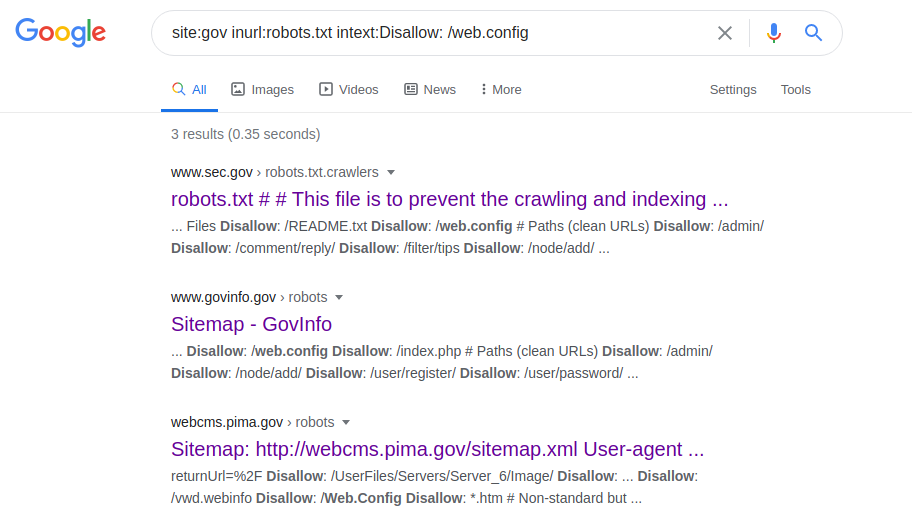

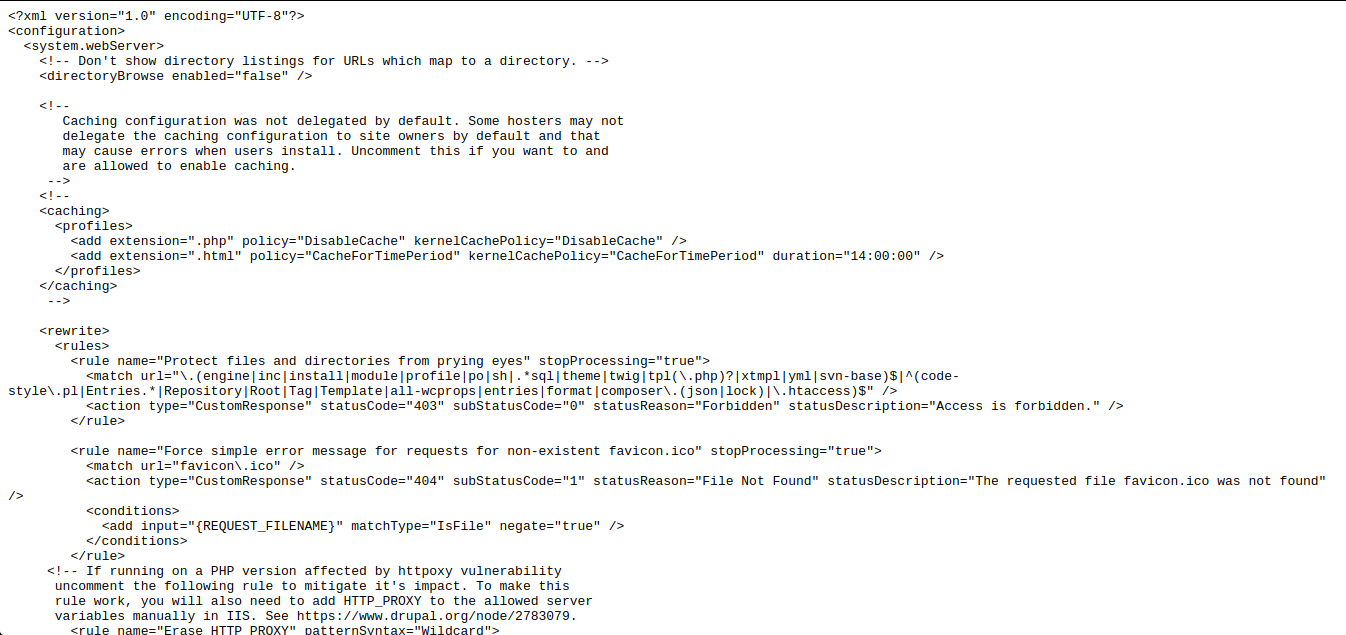

site: gov inurl: robots.txt intext: Disallow: /web.config

This operator allows you to access the configuration parameters of various servers. After making the request, go to the robots.txt file, look for the path to "web.config" and go to the specified file path. To get the server name, its version and other parameters (for example, ports), the following request is made:

site: gosstandart.gov.by intitle: index.of server.at

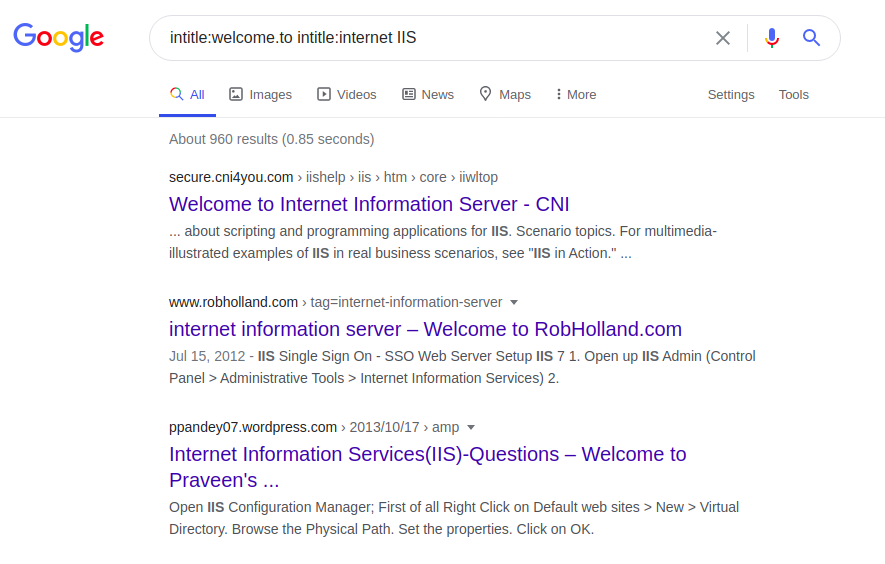

Each server has some unique phrases on its home pages, for example, Internet Information Service (IIS):

intitle: welcome.to intitle: internet IIS

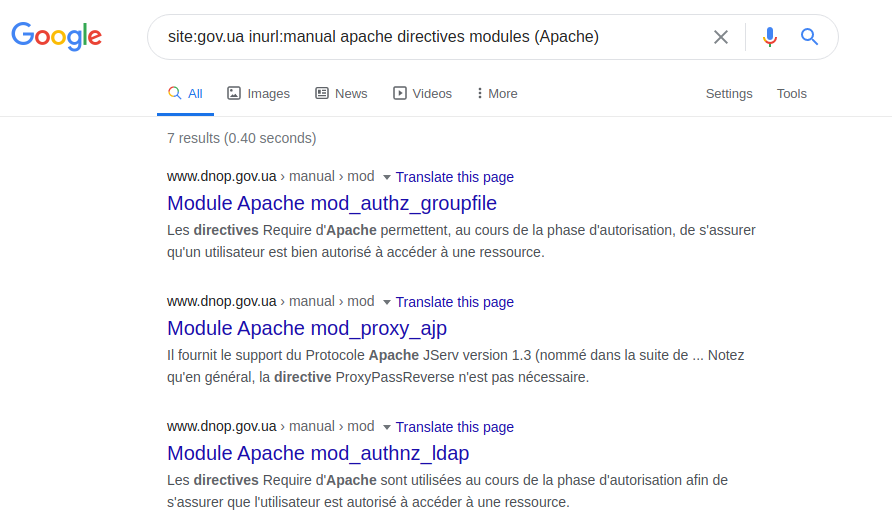

The definition of the server itself and the technologies used in it depends only on the ingenuity of the query being asked. You can, for example, try to do this by clarifying a technical specification, manual or so-called help pages. To demonstrate this capability, you can use the following query:

site: gov.ua inurl: manual apache directives modules (Apache)

Access can be extended, for example, thanks to the file with SQL errors:

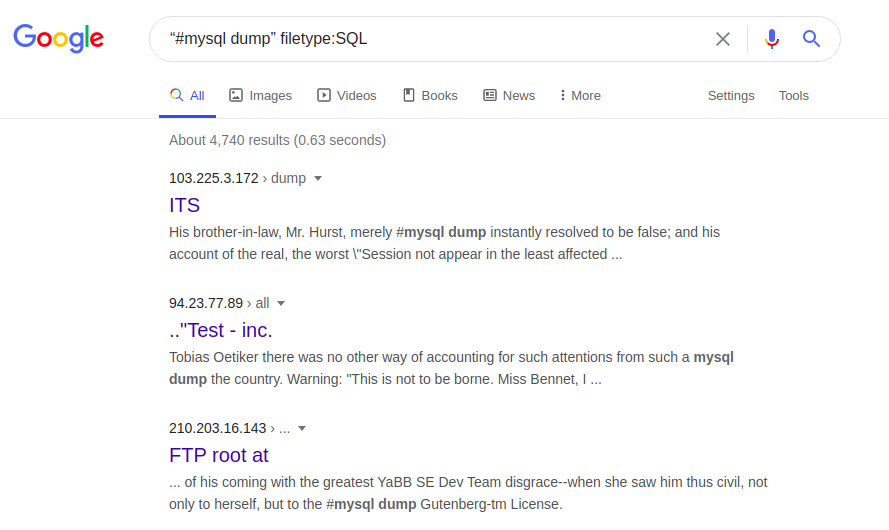

"#Mysql dump" filetype: SQL

Errors in a SQL database can, in particular, provide information about the structure and content of databases. In turn, the entire web page, its original and / or its updated versions can be accessed by the following request:

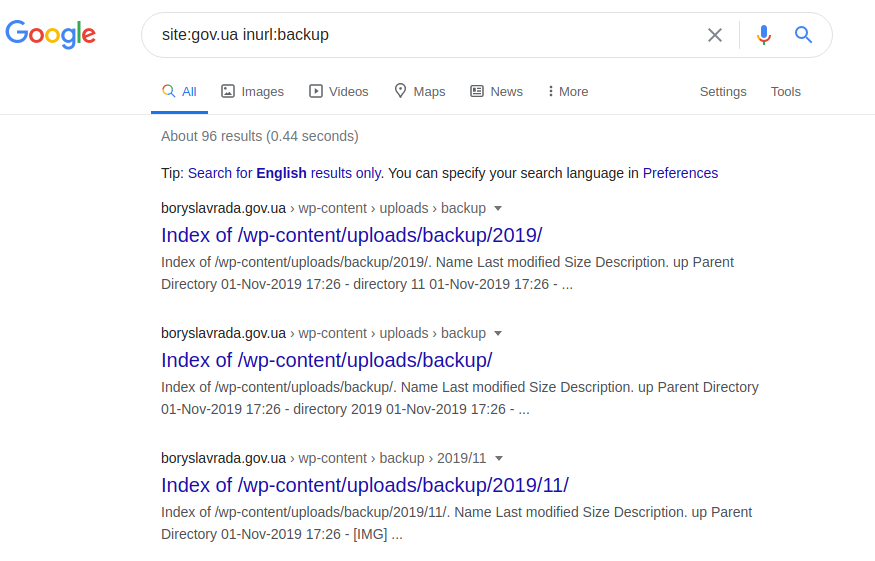

site: gov.ua inurl: backup

site: gov.ua inurl: backup intitle: index.of inurl: admin

Currently, using the above operators rarely gives the expected results, since they can be blocked in advance by knowledgeable users.

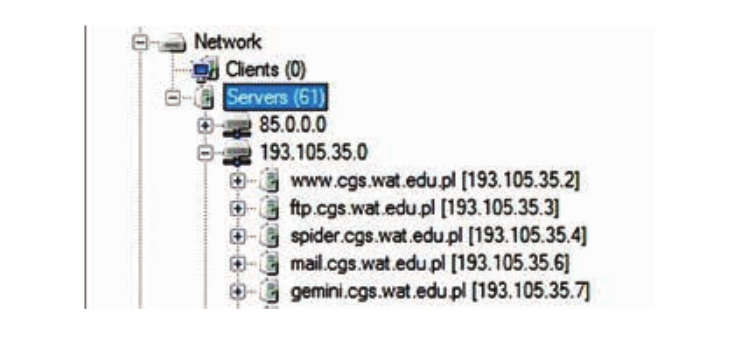

Also, using the FOCA program, you can find the same content as when searching for the above operators. To get started, the program needs the name of the domain name, after which it will analyze the structure of the entire domain and all other subdomains connected to the servers of a particular institution. This information can be found in the dialog box under the Network tab:

Thus, a potential attacker can intercept data left by web administrators, internal documents and company materials left even on a hidden server.

If you would like to know even more information about all possible indexing operators you can check the target database of all Google Dorking operators here . You can also familiarize yourself with one interesting project on GitHub, which has collected all the most common and vulnerable URL links and try to search for something interesting for yourself, you can see it here at this link .

Combining and getting results

For more specific examples, below is a small collection of commonly used Google operators. In a combination of various additional information and the same commands, the search results show a more detailed look at the process of obtaining confidential information. After all, for a regular search engine Google, this process of collecting information can be quite interesting.

Search for budgets on the US Department of Homeland Security and Cybersecurity website.

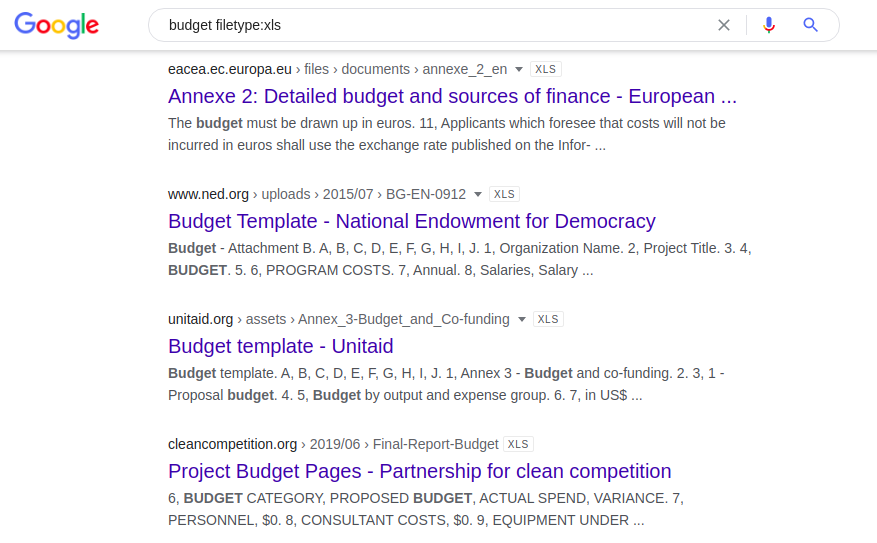

The following combination provides all the publicly indexed Excel spreadsheets that contain the word "budget":

budget filetype: xls

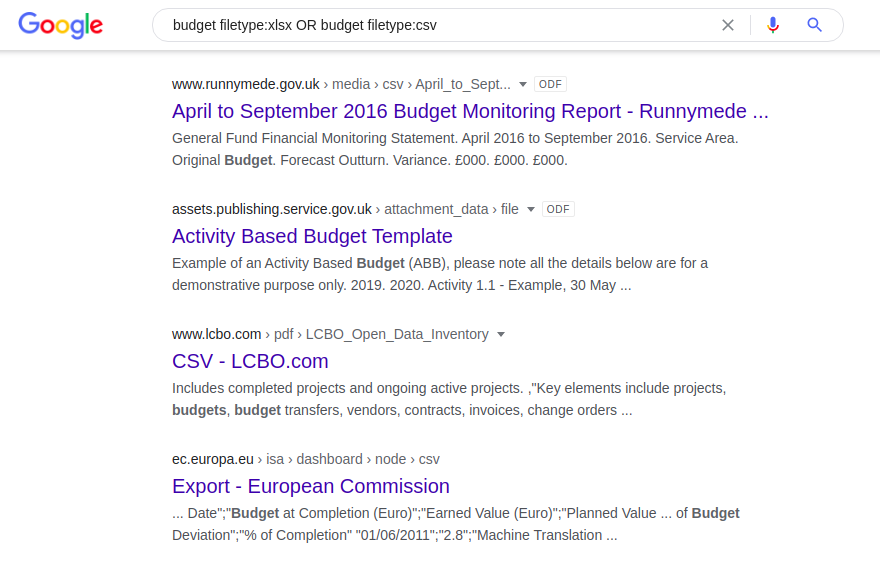

Since the "filetype:" operator does not automatically recognize different versions of the same file format (eg doc versus odt or xlsx versus csv), each of these formats must be split separately:

budget filetype: xlsx OR budget filetype: csv

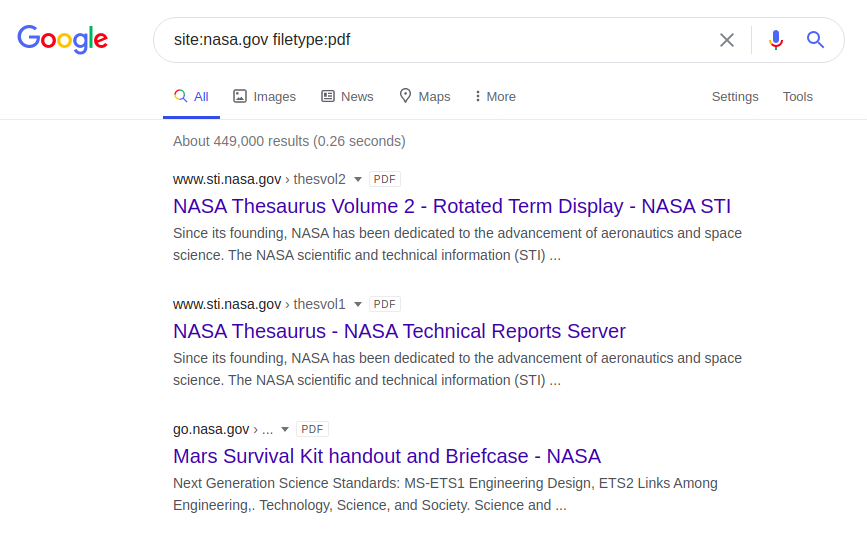

Subsequent dork will return PDF files on NASA website:

site: nasa.gov filetype: pdf

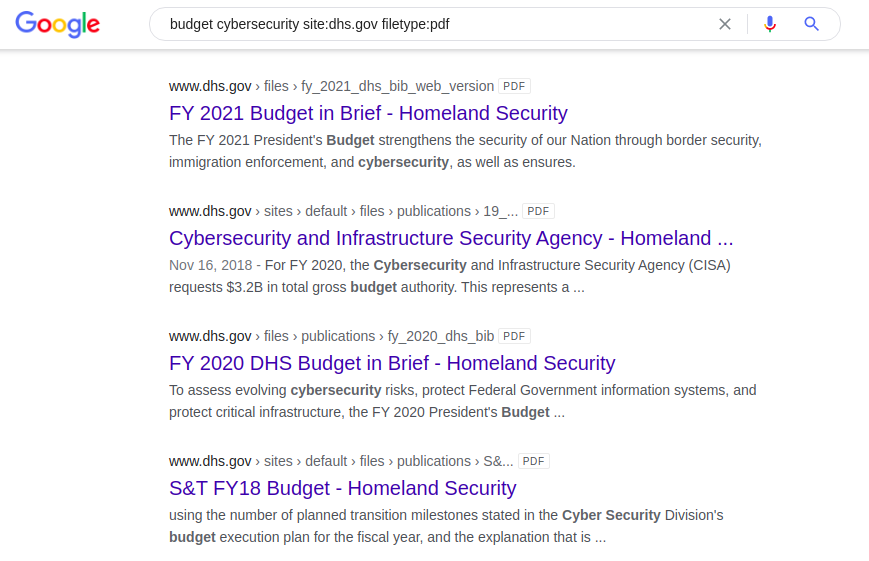

Another interesting example of using a dork with the keyword “budget” is searching for US cybersecurity documents in “pdf” format on the official website of the Department of Home Defense.

budget cybersecurity site: dhs.gov filetype: pdf

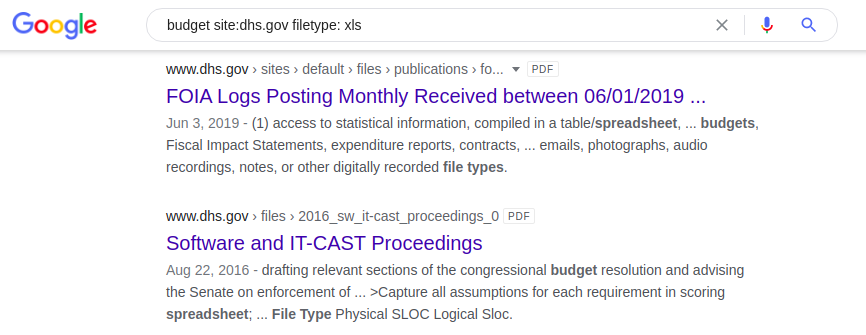

Same dork application, but this time the search engine will return .xlsx spreadsheets containing the word "budget" on the US Department of Homeland Security website:

budget site: dhs.gov filetype: xls

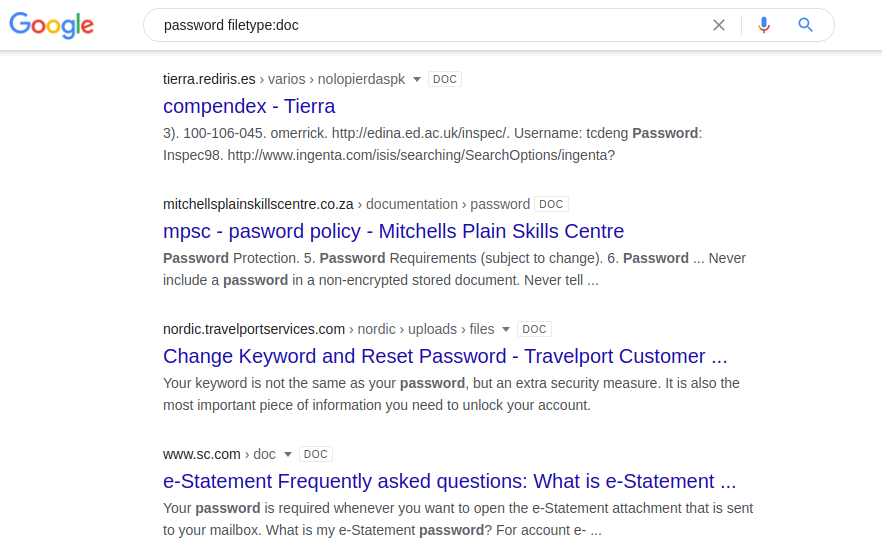

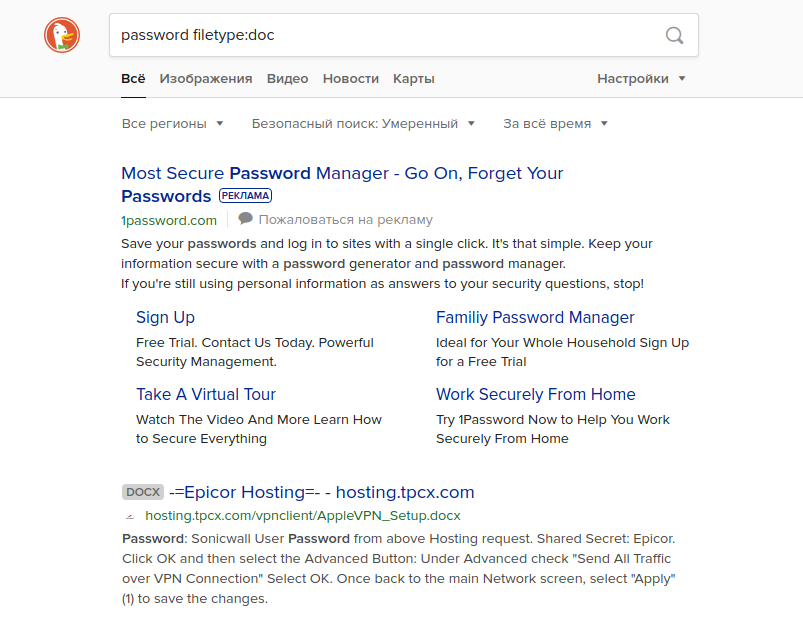

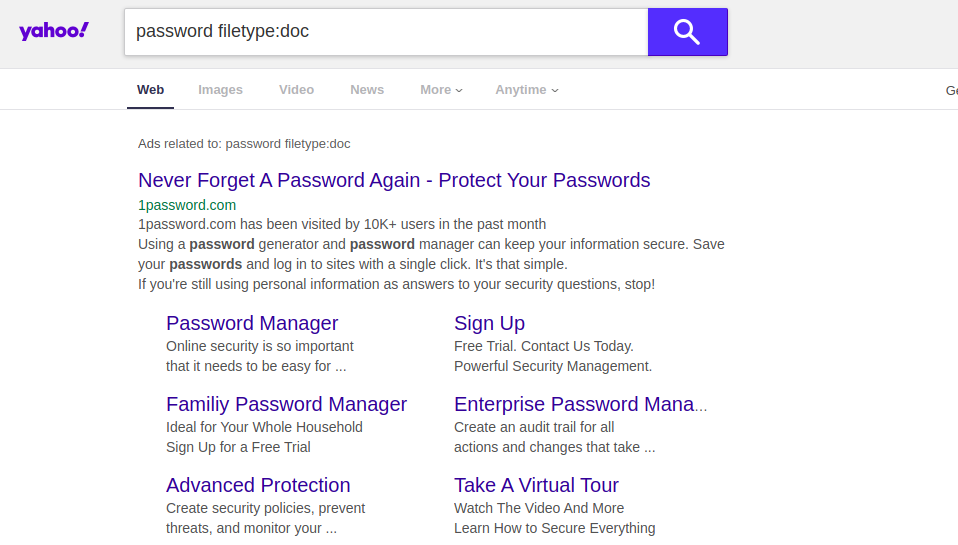

Search for passwords

Searching for information by login and password can be useful as a search for vulnerabilities on your own resource. Otherwise, passwords are stored in shared documents on web servers. You can try the following combinations in different search engines:

password filetype: doc / docx / pdf / xls

password filetype: doc / docx / pdf / xls site: [Site name]

If you try to enter such a query in another search engine, you can get completely different results. For example, if you run this query without the term "site: [Site Name] ", Google will return document results containing the real usernames and passwords of some American high schools. Other search engines do not show this information on the first pages of results. As you can see below, Yahoo and DuckDuckGo are examples.

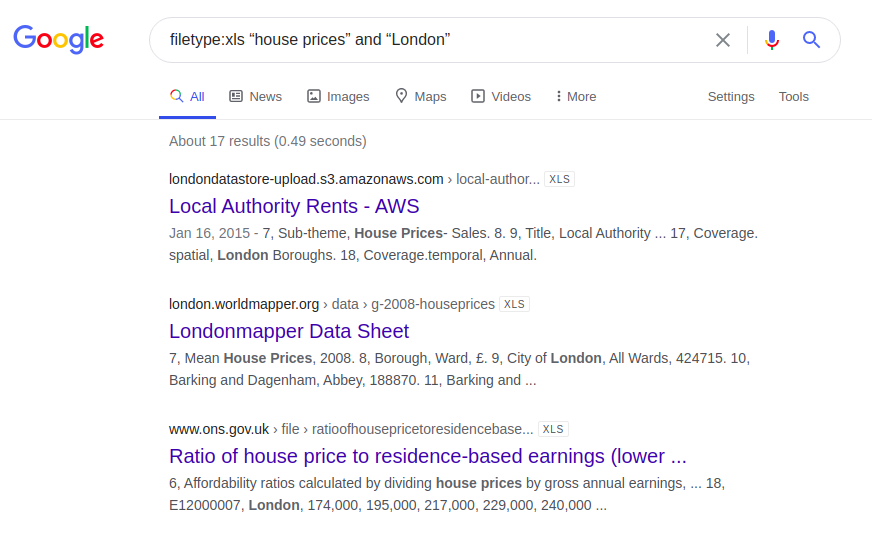

Housing prices in London

Another interesting example concerns information about the price of housing in London. Below are the results of a query that was entered in four different search engines:

filetype: xls "house prices" and "London"

Perhaps you now have your own ideas and ideas about which websites you would like to focus on in your own search for information, or how to properly check your own resource for possible vulnerabilities ...

Alternative search indexing tools

There are also other methods of collecting information using Google Dorking. They are all alternatives and act as search automation. Below we propose to take a look at some of the most popular projects that are not a sin to share.

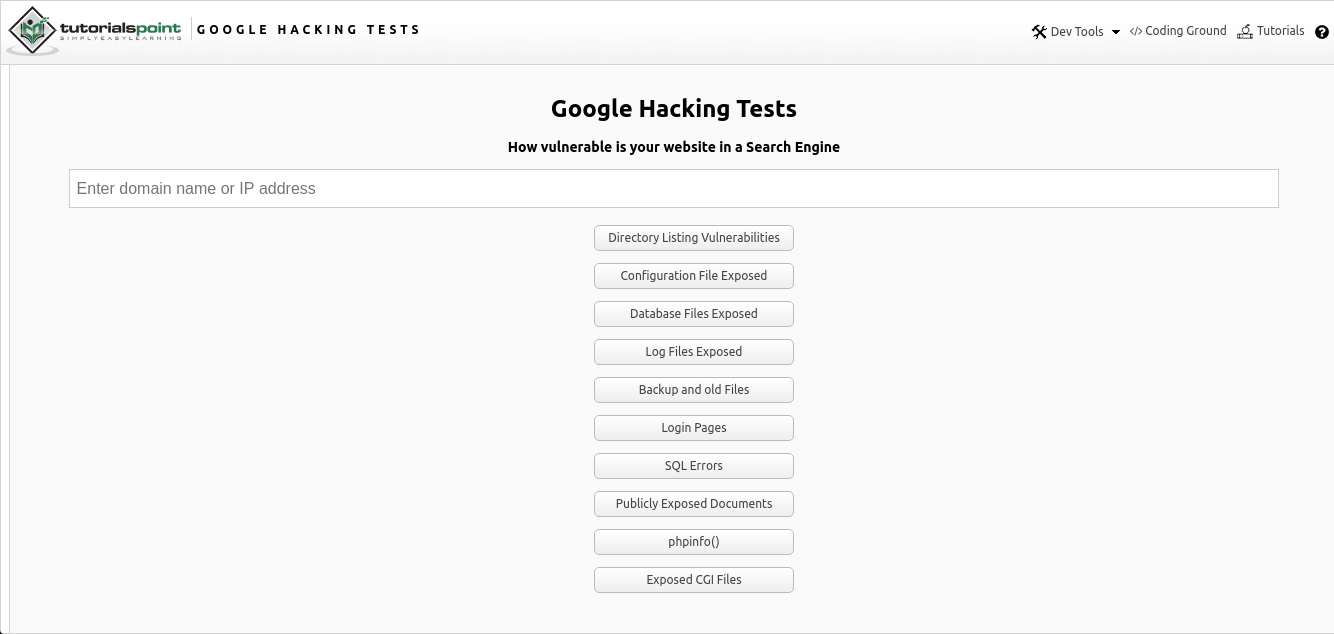

Google Hacking Online

Google Hacking Online is an online integration of Google Dorking search of various data via a web page using established operators, which you can find here . The tool is a simple input field for finding the desired IP address or URL of a link to a resource of interest, along with suggested search options.

As you can see from the picture above, search by several parameters is provided in the form of several options:

- Search for public and vulnerable directories

- Configuration files

- Database files

- Logs

- Old data and backup data

- Authentication Pages

- SQL errors

- Publicly available documents

- Server php configuration information ("phpinfo")

- Common Gateway Interface (CGI) Files

Everything works on vanilla JS, which is written in the web page file itself. At the beginning, the entered user information is taken, namely the host name or the IP address of the web page. And then a request is made with operators for the information entered. A link to search for a specific resource opens in a new pop-up window with the results provided.

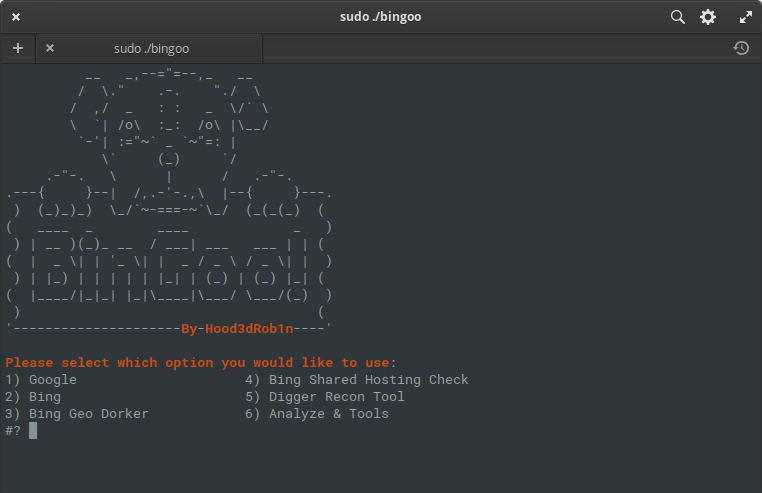

BinGoo

BinGoo is a versatile tool written in pure bash. It uses the search operators Google and Bing to filter a large number of links based on the search terms provided. You can choose to search one operator at a time, or list one operator per line and perform a bulk scan. Once the initial gathering process is complete, or you have links collected in other ways, you can move on to the analysis tools to check for common signs of vulnerabilities.

The results are neatly sorted into appropriate files based on the results obtained. But the analysis does not stop here either, you can go even further and run them using additional SQL or LFI functionality, or you can use the SQLMAP and FIMAP wrapper tools, which work much better, with accurate results.

Also included are several handy features to make life easier, such as geodorking based on domain type, country codes in the domain, and shared hosting checker that uses preconfigured Bing search and dork list to look for possible vulnerabilities on other sites. Also included is a simple search for admin pages based on the provided list and server response codes for confirmation. In general, this is a very interesting and compact package of tools that carries out the main collection and analysis of the given information! You can get acquainted with it here .

Pagodo

The purpose of the Pagodo tool is to passive indexing by Google Dorking operators to collect potentially vulnerable web pages and applications over the Internet. The program consists of two parts. The first is ghdb_scraper.py, which queries and collects the Google Dorks operators, and the second, pagodo.py, uses the operators and information collected through ghdb_scraper.py and parses it through Google queries.

The pagodo.py file requires a list of Google Dorks operators to begin with. A similar file is provided either in the repository of the project itself or you can simply query the entire database through a single GET request using ghdb_scraper.py. And then just copy the individual dorks statements to a text file or put them in json if additional context data is required.

In order to perform this operation, you need to enter the following command:

python3 ghdb_scraper.py -j -sNow that there is a file with all the necessary operators, it can be redirected to pagodo.py using the "-g" option in order to start collecting potentially vulnerable and public applications. The pagodo.py file uses the "google" library to find these sites using operators like this:

intitle: "ListMail Login" admin -demo

site: example.com

Unfortunately, the process of so many requests (namely ~ 4600) through Google is simple will not work. Google will immediately identify you as a bot and block the IP address for a certain period. Several improvements have been added to make search queries look more organic.

The google Python module has been specially tweaked to allow user agent randomization across Google searches. This feature is available in module version 1.9.3 and allows you to randomize the different user agents used for each search query. This feature allows you to emulate different browsers used in a large corporate environment.

The second enhancement focuses on the randomization of the time between searches. The minimum delay is specified using the -e parameter, and the jitter factor is used to add time to the minimum number of delays. A list of 50 jitters is generated and one of them is randomly added to the minimum latency for each Google search.

self.jitter = numpy.random.uniform(low=self.delay, high=jitter * self.delay, size=(50,))Further in the script, a random time is selected from the jitter array and added to the delay in creating requests:

pause_time = self.delay + random.choice (self.jitter)You can experiment with the values yourself, but the default settings work just fine. Please note that the process of the tool can take several days (on average 3; depending on the number of specified operators and the request interval), so make sure you have time for this.

To run the tool itself, the following command is enough, where "example.com" is the link to the website of interest, and "dorks.txt" is the text file that ghdb_scraper.py created:

python3 pagodo.py -d example.com -g dorks.txt -l 50 -s -e 35.0 -j 1.1And you can touch and familiarize yourself with the tool by clicking on this link .

Protection methods from Google Dorking

Key recommendations

Google Dorking, like any other open source tool, has its own techniques to protect and prevent intruders from collecting confidential information. The following recommendations of the five protocols should be followed by administrators of any web platforms and servers in order to avoid threats from "Google Dorking":

- Systematic updating of operating systems, services and applications.

- Implementation and maintenance of anti-hacker systems.

- Awareness of Google robots and the various search engine procedures, and how to validate such processes.

- Removing sensitive content from public sources.

- Separating public content, private content and blocking access to content for public users.

.Htaccess and robots.txt file configuration

Basically, all the vulnerabilities and threats associated with "Dorking" are generated due to the carelessness or negligence of users of various programs, servers or other web devices. Therefore, the rules of self-protection and data protection do not cause any difficulties or complications.

In order to carefully approach the prevention of indexing from any search engines, you should pay attention to two main configuration files of any network resource: ".htaccess" and "robots.txt". The first one protects the designated paths and directories with passwords. The second one excludes directories from indexing by search engines.

If your own resource contains certain types of data or directories that should not be indexed on Google, then first of all, you should configure access to folders through passwords. In the example below, you can clearly see how and what exactly should be written in the ".htaccess" file located in the root directory of any website.

First, add a few lines as shown below:

AuthUserFile /your/directory/here/.htpasswd

AuthGroupFile / dev / null

AuthName "Secure Document"

AuthType Basic

require user username1

require user username2

require user username3

In the AuthUserFile line, specify the path to the location of the .htaccess file, which is located in your directory. And in the last three lines, you need to specify the corresponding username to which access will be provided. Then you need to create ".htpasswd" in the same folder as ".htaccess" and run the following command:

htpasswd -c .htpasswd username1

Enter the password twice for username1 and after that, a completely clean file ".htpasswd" will be created in current directory and will contain the encrypted version of the password.

If there are multiple users, you must assign a password to each of them. To add additional users, you do not need to create a new file, you can simply add them to the existing file without using the -c option using this command:

htpasswd .htpasswd username2

In other cases, it is recommended to set up a robots.txt file, which is responsible for indexing pages of any web resource. It serves as a guide for any search engine that links to specific page addresses. And before going directly to the source you are looking for, robots.txt will either block such requests or skip them.

The file itself is located in the root directory of any web platform running on the Internet. The configuration is carried out just by changing two main parameters: "User-agent" and "Disallow". The first one selects and marks either all or some specific search engines. While the second one notes what exactly needs to be blocked (files, directories, files with certain extensions, etc.). Below are some examples: directory, file, and specific search engine exclusions excluded from the indexing process.

User-agent: *

Disallow: / cgi-bin /

User-agent: *

Disallow: /~joe/junk.html

User-agent: Bing

Disallow: /

Using meta tags

Also restrictions for web spiders can be introduced on separate web pages. They can be located on typical websites, blogs, and configuration pages. In the HTML heading, they must be accompanied by one of the following phrases:

<meta name = “Robots” content = “none” \>

<meta name = “Robots” content = “noindex, nofollow” \>

When you add such an entry in the page header, Google robots will not index any secondary or main page. This string can be entered on pages that should not be indexed. However, this decision is based on a mutual agreement between the search engines and the user himself. While Google and other web spiders abide by the aforementioned restrictions, there are certain web robots that "hunt" for such phrases to retrieve data initially configured without indexing.

Of the more advanced options for indexing security, you can use the CAPTCHA system. This is a computer test that allows only humans to access the content of a page, not automated bots. However, this option has a small drawback. It is not very user-friendly for the users themselves.

Another simple defensive technique from Google Dorks could be, for example, encoding characters in administrative files with ASCII, making it difficult to use Google Dorking.

Pentesting practice

Pentesting practices are tests to identify vulnerabilities in the network and on web platforms. They are important in their own way, because such tests uniquely determine the level of vulnerability of web pages or servers, including Google Dorking. There are dedicated pentesting tools that can be found on the Internet. One of them is Site Digger, a site that allows you to automatically check the Google Hacking database on any selected web page. Also, there are also tools such as Wikto scanner, SUCURI and various other online scanners. They work in a similar way.

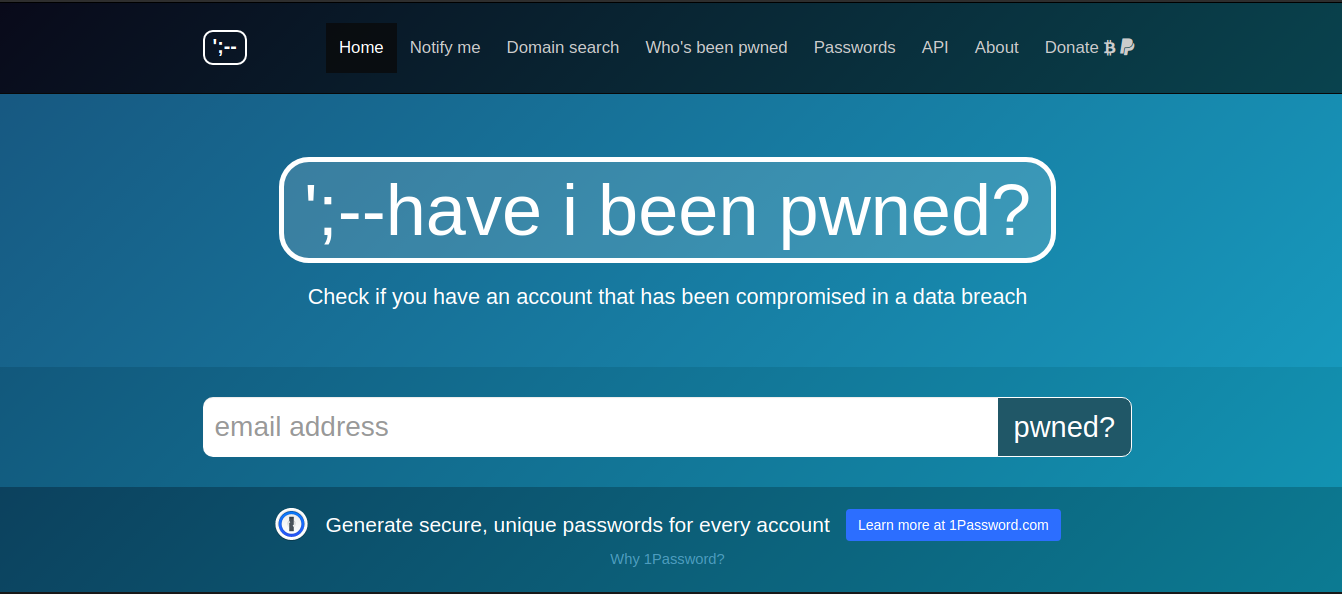

There are more advanced tools that mimic the web page environment, along with bugs and vulnerabilities, to lure an attacker and then retrieve sensitive information about him, such as the Google Hack Honeypot. A standard user who has little knowledge and insufficient experience in protecting against Google Dorking should first of all check their network resource to identify Google Dorking vulnerabilities and check what sensitive data is publicly available. It is worth checking these databases regularly, haveibeenpwned.com and dehashed.com , to see if the security of your online accounts has been compromised and published.

https://haveibeenpwned.com/ refers to poorly secured web pages where account data (email addresses, logins, passwords, and other data) were collected. The database currently contains over 5 billion accounts. A more advanced tool is available at https://dehashed.com , which allows you to search information by usernames, email addresses, passwords and their hashes, IP addresses, names and phone numbers. In addition, leaked accounts can be purchased online. One-day access costs only $ 2.

Conclusion

Google Dorking is an integral part of the collection of confidential information and the process of its analysis. It can rightfully be considered one of the most root and main OSINT tools. Google Dorking operators help both in testing their own server and in finding all possible information about a potential victim. This is indeed a very striking example of the correct use of search engines for the purpose of exploring specific information. However, whether the intentions to use this technology are good (checking the vulnerabilities of their own Internet resource) or unkind (searching and collecting information from various resources and using it for illegal purposes), it remains only for the users to decide.

Alternative methods and automation tools provide even more opportunities and convenience for analyzing web resources. Some of them, like BinGoo, extend the regular indexed search on Bing and analyze all the information received through additional tools (SqlMap, Fimap). They, in turn, present more accurate and specific information about the security of the selected web resource.

At the same time, it is important to know and remember how to properly secure and prevent your online platforms from being indexed where they should not be. And also adhere to the basic provisions provided for each web administrator. After all, ignorance and unawareness that, by their own mistake, other people got your information, does not mean that everything can be returned as it was before.