In X5, the system that will track labeled goods and exchange data with the government and suppliers is called “Markus”. Let's tell in order how and who developed it, what kind of technology stack it has, and why we have something to be proud of.

Real HighLoad

“Markus” solves many problems, the main one of which is the integration interaction between information systems X5 and the state information system of labeled products (GIS MP) to track the movement of labeled products. The platform also stores all the marking codes received by us and the entire history of the movement of these codes across objects, helps to eliminate the re-sorting of marked products. On the example of tobacco products, which were included in the first groups of labeled goods, only one truck of cigarettes contains about 600,000 packs, each of which has its own unique code. And the task of our system is to track and check the legality of the movements of each such pack between warehouses and stores, and ultimately check the admissibility of their implementation to the end customer. And we record cash transactions about 125,000 per hour,and it is also necessary to record how each such pack got into the store. Thus, taking into account all movements between objects, we expect tens of billions of records per year.

Team M

Despite the fact that "Markus" is considered a project within the X5, it is being implemented according to the product approach. The team works on Scrum. The start of the project was last summer, but the first results came only in October - their own team was fully assembled, the system architecture was developed and equipment was purchased. Now the team has 16 people, six of whom are engaged in the development of backend and frontend, three in system analysis. Six more people are involved in manual, load, automated testing and product support. In addition, we have an SRE specialist.

The code in our team is written not only by developers, almost all the guys know how to program and write autotests, load scripts and automation scripts. We pay special attention to this, since even product support requires a high level of automation. We always try to advise and help our colleagues who have not programmed before, to give some small tasks to work.

In connection with the coronavirus pandemic, we transferred the entire team to remote work, the availability of all development management tools, the built workflow in Jira and GitLab made it easy to go through this stage. The months spent at a remote location showed that the productivity of the team did not suffer from this, for many the comfort in work increased, the only thing is that there is not enough live communication.

Team meeting before the distance

Remote meetings

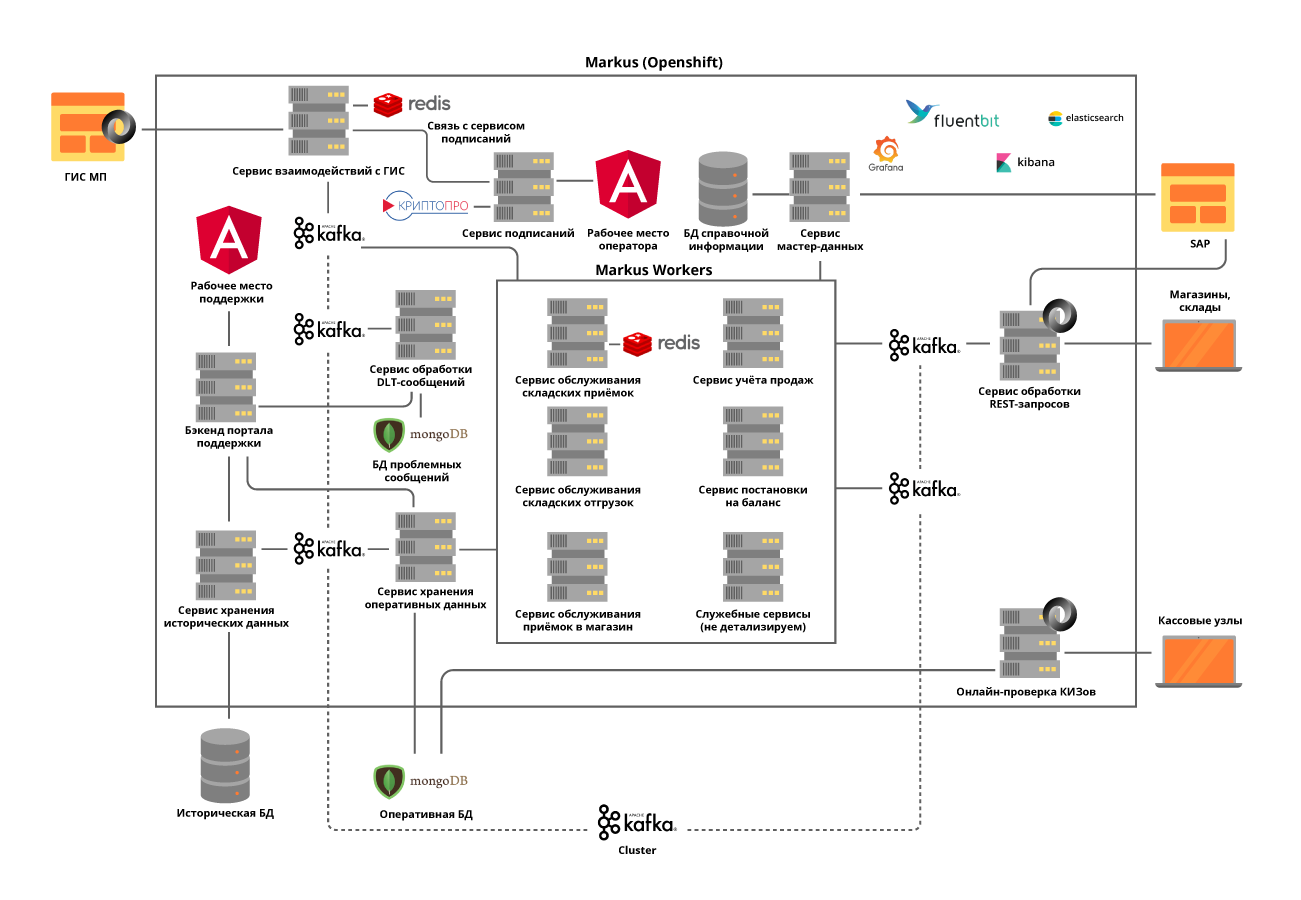

Solution technology stack

The standard repository and CI / CD tool for X5 is GitLab. We use it for code storage, continuous testing, deployment to test and production servers. We also use the practice of code review, when at least 2 colleagues need to approve the changes made by the developer to the code. The static code analyzers SonarQube and JaCoCo help us keep the code clean and provide the required level of unit test coverage. All changes in the code must go through these checks. All test scripts that are manually run are subsequently automated.

For the successful execution of business processes by “Markus” we had to solve a number of technological problems, each in order.

Task 1. The need for horizontal scalability of the system

To solve this problem, we have chosen a microservice approach to architecture. At the same time, it was very important to understand the areas of responsibility of the services. We tried to divide them into business operations, taking into account the specifics of the processes. For example, acceptance at a warehouse is not a very frequent, but a very voluminous operation, during which it is necessary to obtain from the state regulator as quickly as possible information about the received units of goods, the number of which in one delivery reaches 600,000, check the admissibility of accepting this product to the warehouse and give all necessary information for the warehouse automation system. But shipping from warehouses has a much higher intensity, but at the same time operates with small amounts of data.

We implement all services on the stateless principle and even try to divide internal operations into steps, using what we call Kafka self-topics. This is when a microservice sends a message to itself, which allows balancing the load for more resource-intensive operations and simplifies product maintenance, but more on that later.

We decided to separate modules for interaction with external systems into separate services. This made it possible to solve the problem of frequently changing APIs of external systems, with practically no impact on services with business functionality.

All microservices are deployed in the OpenShift cluster, which solves both the problem of scaling each microservice and allows us not to use third-party Service Discovery tools.

Task 2. The need to maintain a high load and very intensive data exchange between platform services: only at the project launch phase, about 600 operations per second are performed. We expect this value to increase up to 5000 op / sec as trading objects connect to our platform.

This task was solved by deploying a Kafka cluster and almost completely abandoning synchronous communication between platform microservices. This requires a very careful analysis of the system requirements, since not all operations can be asynchronous. At the same time, we not only transmit events through the broker, but also transmit all the required business information in the message. Thus, the message size can be up to several hundred kilobytes. The limitation on the volume of messages in Kafka requires us to accurately predict the size of messages, and, if necessary, we divide them, but the division is logical, associated with business operations.

For example, the goods arrived in the car, we divide them into boxes. For synchronous operations, separate microservices are allocated and rigorous load testing is performed. Using Kafka posed another challenge for us - testing our service with Kafka integration makes all our unit tests asynchronous. We solved this problem by writing our own utilitarian methods using the Embedded Kafka Broker. This does not obviate the need to write unit tests for individual methods, but we prefer to test complex cases using Kafka.

We paid a lot of attention to tracing logs so that their TraceId is not lost when exceptions are thrown during the operation of services or when working with Kafka batch. And if there were no special questions with the first, then in the second case we are forced to write to the log all the TraceId with which the batch came and select one to continue tracing. Then, when searching for the initial TraceId, the user will easily find out with which trace the trace continued.

Objective 3. The need to store a large amount of data: more than 1 billion labels per year for tobacco alone are sent to X5. They require constant and quick access. In total, the system must process about 10 billion records on the history of the movement of these marked goods.

To solve the third problem, MongoDB NoSQL database was chosen. We have built a shard of 5 nodes and in each node a Replica Set of 3 servers. This allows you to scale the system horizontally, adding new servers to the cluster, and ensure its fault tolerance. Here we faced another problem - ensuring transactionality in the mongo cluster, taking into account the use of horizontally scalable microservices. For example, one of the tasks of our system is to detect attempts to resell goods with the same marking codes. Here overlays appear with erroneous scans or with erroneous cashier operations. We found that such duplicates can occur both within one batch being processed in Kafka, and within two batch processed in parallel. Thus, checking for duplicates by querying the database gave nothing.For each of the microservices, we solved the problem separately based on the business logic of this service. For example, for receipts, we added a check inside batch and a separate processing for the appearance of duplicates when inserted.

So that the user's work with the history of operations does not affect the most important thing - the functioning of our business processes, we have separated all historical data into a separate service with a separate database, which also receives information through Kafka. Thus, users work with an isolated service without affecting the services that process data on current operations.

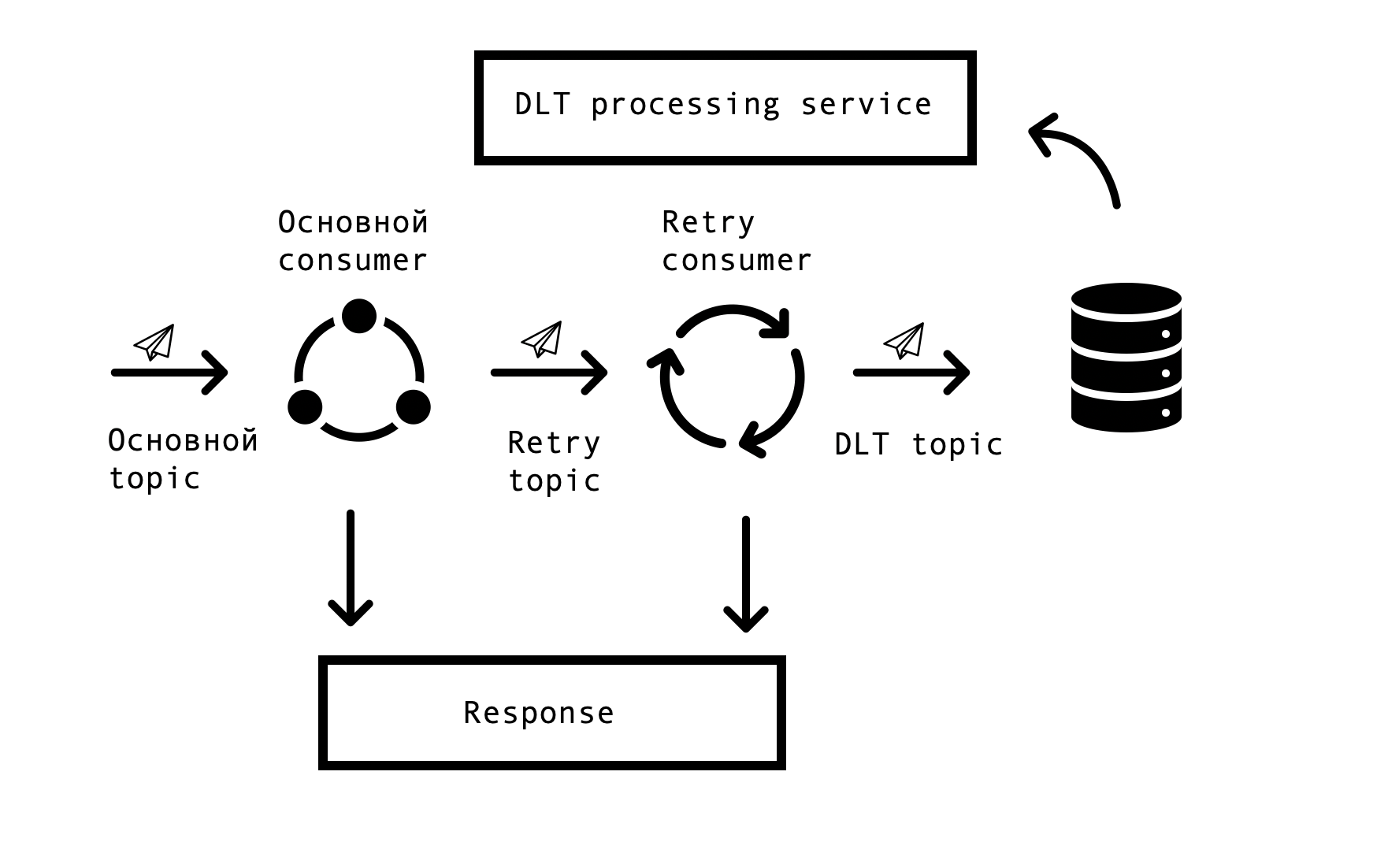

Task 4. Reprocessing queues and monitoring:

In distributed systems, problems and errors in the availability of databases, queues, and external data sources inevitably arise. In the case of Markus, the source of such errors is integration with external systems. It was necessary to find a solution that would allow repeated requests for erroneous responses with a specified timeout, but at the same time not stop processing successful requests in the main queue. For this, the so-called “topic based retry” concept was chosen. For each main topic, one or several retry topics are created, to which erroneous messages are sent, and at the same time, the delay in processing messages from the main topic is eliminated. Interaction scheme -

To implement such a scheme, we needed the following - to integrate this solution with Spring and avoid code duplication. In the vastness of the network, we came across a similar solution based on Spring BeanPostProccessor, but it seemed to us unnecessarily cumbersome. Our team made a simpler solution that allows us to integrate into Spring's consumer creation cycle and additionally add Retry Consumers. We offered a prototype of our solution to the Spring team, you can see it here . The number of Retry Consumers and the number of attempts of each consumer is configured through the parameters, depending on the needs of the business process, and for everything to work, all that remains is to put the annotation org.springframework.kafka.annotation.KafkaListener, which is familiar to all Spring developers.

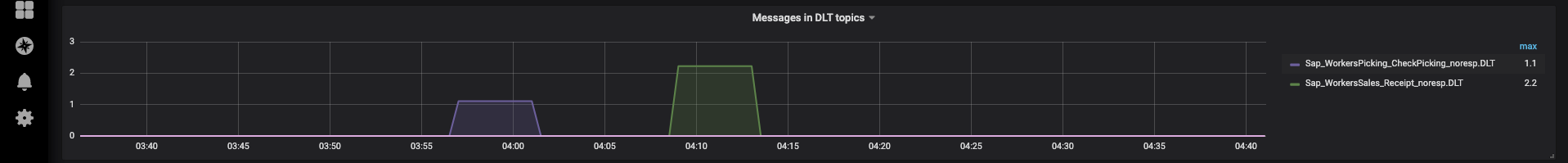

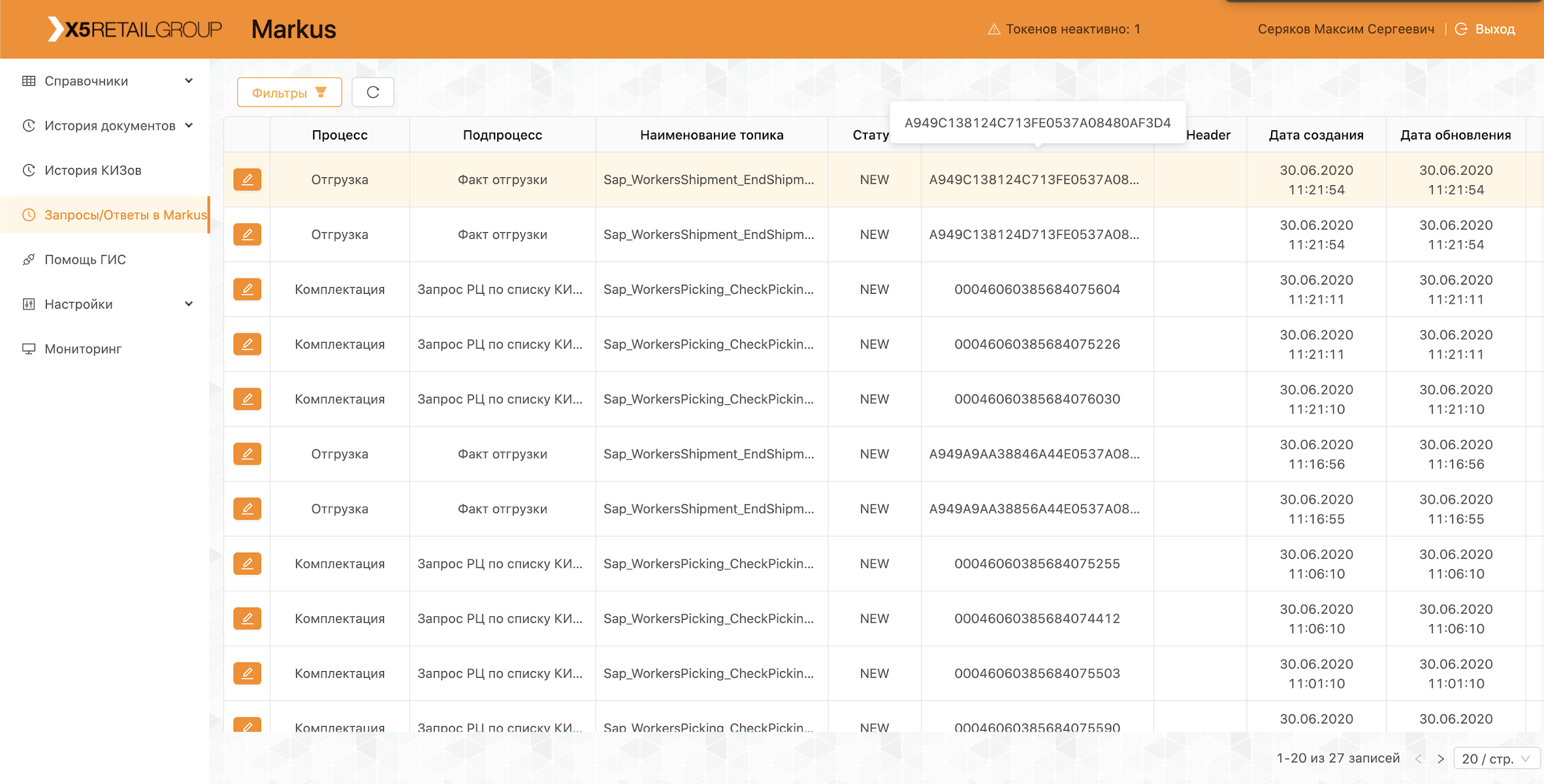

If the message could not be processed after all retry attempts, it is sent to the DLT (dead letter topic) using Spring DeadLetterPublishingRecoverer. At the request of support, we have expanded this functionality and made a separate service that allows you to view messages, stackTrace, traceId and other useful information on them that got into DLT. In addition, monitoring and alerts were added to all DLT topics, and now, in fact, the appearance of a message in a DLT topic is a reason for parsing and establishing a defect. This is very convenient - by the name of the topic, we immediately understand at which step of the process the problem arose, which significantly speeds up the search for its root cause.

More recently, we have implemented an interface that allows us to re-send messages by our support, after eliminating their causes (for example, restoring the operability of the external system) and, of course, establishing the corresponding defect for analysis. This is where our self-topics came in handy, so as not to restart a long processing chain, you can restart it from the desired step.

Platform operation

The platform is already in productive operation, every day we carry out deliveries and shipments, connect new distribution centers and stores. As part of the pilot, the system works with the groups of goods “Tobacco” and “Shoes”.

Our entire team is involved in conducting pilots, analyzing emerging problems and making proposals for improving our product from improving logs to changing processes.

In order not to repeat our mistakes, all cases found during the pilot are reflected in automated tests. The presence of a large number of autotests and unit tests allows you to carry out regression testing and put a hotfix in just a few hours.

Now we continue to develop and improve our platform, and constantly face new challenges. If you are interested, we will tell you about our solutions in the following articles.