DRBD (Distributed Replicated Block Device) is a distributed, flexible and versatile replicated storage solution for Linux. It mirrors the contents of block devices such as hard disks, partitions and logical volumes between servers. It keeps a copy of the data on two storage devices, so that if one of them fails, the data on the other can still be used.

You can think of it as a network RAID 1 configuration, with the mirrored disks located on different servers. However, it works quite differently from RAID (even networked RAID).

Initially, DRBD was used primarily in high availability (HA) computer clusters; however, starting with version 9, it can also be used to deploy cloud storage solutions.

In this article, we'll walk you through installing DRBD on CentOS and briefly demonstrate how to use it to replicate storage (a partition) across two servers. This is the perfect article for getting started with DRBD on Linux.

Test environment

We will use a two-node cluster for this setup.

- Node 1: 192.168.56.101 - tecmint.tecmint.lan

- Node 2: 192.168.56.102 - server1.tecmint.lan

Step 1: Install DRBD packages

DRBD is implemented as a Linux kernel module. It is a virtual block device driver, so it sits at the very bottom of the system's I/O stack.

DRBD can be installed from ELRepo or EPEL. Let's start by importing the ELRepo package signing key and enabling the repository on both nodes as shown below.

# rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org

# rpm -Uvh http://www.elrepo.org/elrepo-release-7.0-3.el7.elrepo.noarch.rpm

Then install the DRBD kernel module and utilities on both nodes:

# yum install -y kmod-drbd84 drbd84-utils

If you have SELinux enabled, put the DRBD domain (drbd_t) into permissive mode so that SELinux does not block DRBD processes:

# semanage permissive -a drbd_t

Also, if your system runs firewalld, you need to allow DRBD's TCP port 7789 from the peer node so that the two nodes can synchronize data.
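The rich rules used on the two nodes below differ only in the peer's source address. As a minimal sketch, a hypothetical helper (drbd_rich_rule is not part of firewalld) can build the rule string from the peer IP and port, so the two nodes' commands cannot drift apart:

```shell
# drbd_rich_rule PEER_IP [PORT]
# Hypothetical helper: prints a firewalld rich rule that accepts DRBD
# replication traffic (TCP) from one peer. PORT defaults to 7789, the
# port used by the resource in this article.
drbd_rich_rule() {
    peer_ip=$1
    port=${2:-7789}
    printf 'rule family="ipv4" source address="%s" port port="%s" protocol="tcp" accept\n' \
        "$peer_ip" "$port"
}

# Usage on the first node (its peer is 192.168.56.102):
#   firewall-cmd --permanent --add-rich-rule="$(drbd_rich_rule 192.168.56.102)"
#   firewall-cmd --reload
```

On the second node, pass 192.168.56.101 instead.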

Run these commands on the first node:

# firewall-cmd --permanent --add-rich-rule='rule family="ipv4" source address="192.168.56.102" port port="7789" protocol="tcp" accept'

# firewall-cmd --reload

Then run these commands on the second node:

# firewall-cmd --permanent --add-rich-rule='rule family="ipv4" source address="192.168.56.101" port port="7789" protocol="tcp" accept'

# firewall-cmd --reload

Step 2. Prepare low-level storage

Now that DRBD is installed on both nodes of the cluster, we must prepare a storage area of approximately the same size on each of them. This can be a hard disk partition (or an entire physical hard disk), a software RAID device, an LVM logical volume, or any other type of block device on your system.

For this tutorial, we assume an unused partition of about 2 GB (/dev/sdb1) on a second disk (/dev/sdb) attached to each node. Wipe it with the dd command (this destroys any data on /dev/sdb1, so double-check the device name):

# dd if=/dev/zero of=/dev/sdb1 bs=1M count=2048
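The sizes only need to be roughly equal because DRBD generally sizes the replicated device to fit the smaller of the two backing devices, leaving the extra space on the larger one unused. A minimal sketch, assuming you read each node's size with `blockdev --getsize64 /dev/sdb1` and pass both numbers to a hypothetical helper (size_report); it ignores the small overhead of internal metadata:

```shell
# size_report BYTES_NODE1 BYTES_NODE2
# Hypothetical helper: assumes the replicated device can be no larger than
# the smaller backing device (internal-metadata overhead is ignored) and
# prints that usable size plus the bytes left unused on the larger device.
size_report() {
    a=$1
    b=$2
    if [ "$a" -le "$b" ]; then
        small=$a big=$b
    else
        small=$b big=$a
    fi
    echo "usable=$small unused=$((big - small))"
}

# Usage: run "blockdev --getsize64 /dev/sdb1" on each node, then e.g.
#   size_report 2147483648 2147483648
```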

Step 3. Configuring DRBD

The main DRBD configuration file is /etc/drbd.conf, and additional configuration files live in the /etc/drbd.d directory.

To replicate storage, we need to add the necessary settings to /etc/drbd.d/global_common.conf, which contains the global and common sections of the DRBD configuration, and define resources in .res files.

Back up the original file on both nodes, then open a new file for editing (use your preferred text editor).

# mv /etc/drbd.d/global_common.conf /etc/drbd.d/global_common.conf.orig

# vim /etc/drbd.d/global_common.conf

Add the following lines to the file on both nodes:

global {
    usage-count yes;
}

common {
    net {
        protocol C;
    }
}

Save the file and then close the editor.

Let's briefly focus on the protocol C line. DRBD supports three different replication modes (i.e. three degrees of replication synchronicity), namely:

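- protocol A: asynchronous replication. A write on the primary node is considered complete as soon as it has reached the local disk and the replication packet has been placed in the local TCP send buffer. Most commonly used in long-distance replication scenarios.

- protocol B: semi-synchronous (memory-synchronous) replication. A write is considered complete once it has reached the local disk and the replication packet has reached the peer node.

- protocol C: synchronous replication. A write is considered complete only after both the local and the remote disk writes have been confirmed. Typically used for nodes on short-distance networks; it is by far the most commonly used replication protocol in DRBD setups.

Important: the choice of replication protocol affects two deployment factors: data protection and latency. Throughput, by contrast, is largely independent of the replication protocol chosen.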

Step 4. Adding a resource

A resource is the collective term for all aspects of a particular replicated data set. We will define our resource in the file /etc/drbd.d/test.res.

Add the following to the file on both nodes (remember to replace the variables with the actual values for your environment).

Pay attention to the hostnames: each on section must name the node's network hostname exactly as reported by the uname -n command.

resource test {
    on tecmint.tecmint.lan {
        device /dev/drbd0;
        disk /dev/sdb1;
        meta-disk internal;
        address 192.168.56.101:7789;
    }
    on server1.tecmint.lan {
        device /dev/drbd0;
        disk /dev/sdb1;
        meta-disk internal;
        address 192.168.56.102:7789;
    }
}

Where:

- on hostname: opens a per-host section; the statements nested inside it apply only to the host with that name.

- test: the name of the new resource.

- device /dev/drbd0: the new virtual block device managed by DRBD.

- disk /dev/sdb1: the lower-level (backing) block device that holds the DRBD device's data.

- meta-disk: defines where DRBD stores its metadata. internal means DRBD stores its metadata on the same lower-level device as the actual production data.

- address: the IP address and port number this host uses for the replication connection with its peer.
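Because the resource file must be identical on both nodes, it can help to generate it once from a few variables and copy it to the peer. The following is a minimal sketch (gen_res is a hypothetical helper, and port 7789 is assumed) that prints a resource definition equivalent to the one above, with the settings shared by both hosts moved to the resource level:

```shell
# gen_res NAME DEVICE DISK HOST1 IP1 HOST2 IP2
# Hypothetical helper: prints a two-node DRBD resource definition.
# HOST1/HOST2 must match "uname -n" on each node; port 7789 is assumed.
gen_res() {
    name=$1 device=$2 disk=$3
    host1=$4 ip1=$5 host2=$6 ip2=$7
    cat <<EOF
resource $name {
    device $device;
    disk $disk;
    meta-disk internal;
    on $host1 {
        address $ip1:7789;
    }
    on $host2 {
        address $ip2:7789;
    }
}
EOF
}

# Usage: generate the file on one node, then copy it to the other, e.g.
#   gen_res test /dev/drbd0 /dev/sdb1 \
#       tecmint.tecmint.lan 192.168.56.101 \
#       server1.tecmint.lan 192.168.56.102 > /etc/drbd.d/test.res
#   scp /etc/drbd.d/test.res server1.tecmint.lan:/etc/drbd.d/
```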

Also note that if a parameter has the same value on both hosts, you can specify it directly in the resource section.

For example, the above configuration could be refactored to:

resource test {
    device /dev/drbd0;
    disk /dev/sdb1;
    meta-disk internal;
    on tecmint.tecmint.lan {
        address 192.168.56.101:7789;
    }
    on server1.tecmint.lan {
        address 192.168.56.102:7789;
    }
}

Step 5. Initializing and starting the resource

To interact with DRBD, we will use the following administration tools (which interact with the kernel module to configure and administer DRBD resources):

- drbdadm : A high-level DRBD administration tool.

- drbdsetup: a lower-level administration tool for attaching DRBD devices to their backing devices, setting up DRBD device pairs to mirror their backing devices, and inspecting the configuration of running DRBD devices.

- drbdmeta: a metadata management tool.

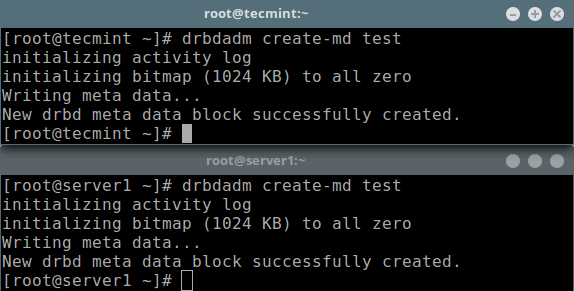

After adding the initial resource configuration, we need to initialize the resource's metadata on both nodes.

# drbdadm create-md test

Initializing the metadata store

Next, bring the resource up on both nodes. This attaches the resource to its backing device, sets the replication parameters, and connects the resource to its peer:

# drbdadm up test

Now if you run the lsblk command, you will notice that the DRBD device drbd0 sits on top of its backing device, /dev/sdb1:

# lsblk

Block device list
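The per-node steps above (create-md, then up) can be collected into a small script. This is a sketch only: drbd_bringup, res_has_host and the DRY_RUN switch are hypothetical names, and the script assumes the resource file lives at /etc/drbd.d/RESOURCE.res. It first confirms that the file has an on section for this host (drbdadm will not recognize the node as part of the resource otherwise), then runs the two commands:

```shell
# run CMD...: execute CMD, or only print it when DRY_RUN is non-empty.
run() {
    if [ -n "${DRY_RUN:-}" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# res_has_host FILE HOST: succeed if FILE contains an "on HOST {" section.
res_has_host() {
    # Escape dots so the hostname is matched literally.
    host_re=$(printf '%s' "$2" | sed 's/\./\\./g')
    grep -Eq "^[[:space:]]*on[[:space:]]+${host_re}[[:space:]]*\{" "$1"
}

# drbd_bringup RESOURCE [RES_FILE] [HOST]: initialize and start a resource.
drbd_bringup() {
    res=$1
    file=${2:-/etc/drbd.d/$res.res}
    host=${3:-$(uname -n)}
    if ! res_has_host "$file" "$host"; then
        echo "no 'on $host' section in $file" >&2
        return 1
    fi
    run drbdadm create-md "$res"   # write DRBD metadata to the backing device
    run drbdadm up "$res"          # attach, apply settings, connect to peer
}

# Usage (on each node):
#   DRY_RUN=1 drbd_bringup test    # print the commands only
#   drbd_bringup test              # actually run them
```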

To take a resource down again later, run:

# drbdadm down test

To check the status of the resource, run the following command. Both disks are expected to be in the Inconsistent state at this point, because no initial synchronization has taken place yet:

# drbdadm status test

OR

# drbdsetup status test --verbose --statistics #for a more detailed status

Checking the status of the resource

Step 6: Setting the primary resource / initial device sync source

At this point, DRBD is ready to go. Now we need to specify which node should be used as the source of the initial device sync.

Run the following command on only one node to start the initial full sync:

# drbdadm primary --force test

# drbdadm status test

Setting the Primary Node as the Start Device

After synchronization is complete, the status of both disks should be UpToDate.
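Rather than re-running the status command by hand, you can poll it until every disk reports UpToDate. A minimal sketch, assuming the status output contains disk:STATE and peer-disk:STATE fields (the exact layout differs between DRBD versions, so compare it with your own output first); both function names are hypothetical:

```shell
# drbd_all_uptodate: read "drbdadm status" output on stdin and succeed only
# if at least one disk state was found and every disk:/peer-disk: field
# reads UpToDate.
drbd_all_uptodate() {
    states=$(grep -Eo '(peer-)?disk:[A-Za-z]+' | cut -d: -f2)
    [ -n "$states" ] || return 1
    [ "$(printf '%s\n' "$states" | grep -vc '^UpToDate$')" -eq 0 ]
}

# wait_for_sync RESOURCE [INTERVAL]: poll until the initial sync finishes.
wait_for_sync() {
    until drbdadm status "$1" | drbd_all_uptodate; do
        sleep "${2:-10}"
    done
    echo "$1: all disks UpToDate"
}

# Usage (on the primary): wait_for_sync test 5
```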

Step 7: Testing the DRBD setup

Finally, we need to check if the DRBD device will work as it should to store the replicated data. Remember that we used an empty disk volume, so we have to create a file system on the device and mount it to see if we can use it to store replicated data.

We need to create a file system on the device using the following command on the node from which we started the initial full sync (which has a resource with a primary role):

# mkfs -t ext4 /dev/drbd0

Creating a filesystem on the DRBD volume

Then mount it as shown (you can give the mount point a suitable name):

# mkdir -p /mnt/DRDB_PRI/

# mount /dev/drbd0 /mnt/DRDB_PRI/

Now copy or create some files at the above mount point and list them in long format with the ls command:

# cd /mnt/DRDB_PRI/

# ls -l

Listing the contents of the primary DRBD volume

Next, leave the mount point (umount fails with "target is busy" while your shell's working directory is inside it), unmount the device, and change the node's role from primary to secondary:

# cd

# umount /mnt/DRDB_PRI/

# drbdadm secondary test

On the other node (whose resource currently has the secondary role), promote the resource to primary, mount the device, and list the contents of the mount point. If the setup works correctly, all the files stored on the volume should be there:

# drbdadm primary test

# mkdir -p /mnt/DRDB_SEC/

# mount /dev/drbd0 /mnt/DRDB_SEC/

# cd /mnt/DRDB_SEC/

# ls -l

Checking the DRBD setup running on the secondary node.
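Listing files only shows that their names survived the switchover. A stronger check, sketched here under the assumption that GNU coreutils sha256sum is available (the function names and the manifest file name are hypothetical), is to record checksums on the primary before unmounting and verify them on the other node after mounting. Because the manifest is written to the DRBD volume itself, it is replicated along with the data:

```shell
MANIFEST=.drbd-manifest.sha256

# make_manifest DIR: record a SHA-256 checksum for every regular file
# under DIR (except the manifest itself) in DIR/$MANIFEST.
make_manifest() {
    (cd "$1" && find . -type f ! -name "$MANIFEST" -exec sha256sum {} + > "$MANIFEST")
}

# check_manifest DIR: verify the files under DIR against DIR/$MANIFEST;
# sha256sum prints any FAILED entries and the function returns non-zero.
check_manifest() {
    (cd "$1" && sha256sum -c --quiet "$MANIFEST")
}

# Usage:
#   on the primary, before unmounting:   make_manifest /mnt/DRDB_PRI
#   on the other node, after mounting:   check_manifest /mnt/DRDB_SEC
```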

For more information, refer to the administration tools man pages:

# man drbdadm

# man drbdsetup

# man drbdmeta

See also: DRBD User Guide.

Summary

DRBD is extremely flexible and versatile, making it a storage replication solution suitable for adding HA to almost any application. In this article, we showed you how to install DRBD on CentOS 7 and briefly demonstrated how to use it to replicate storage.
