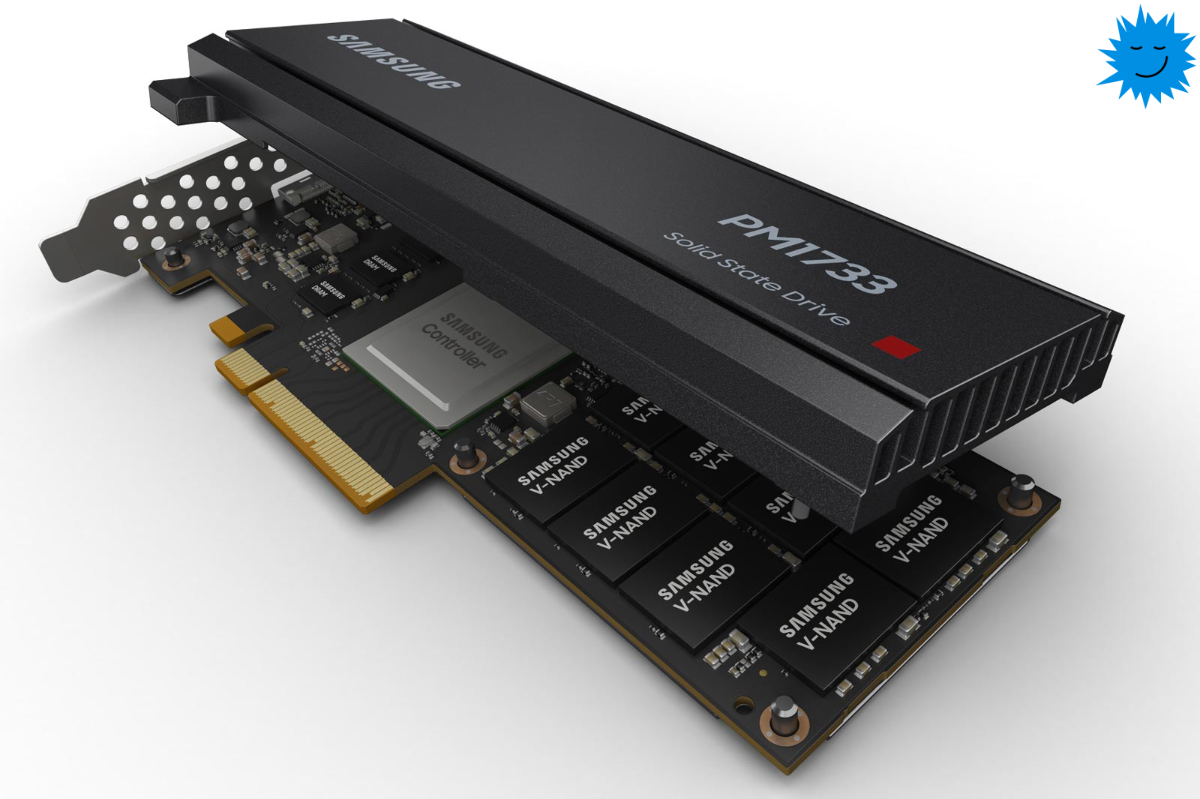

Pictured here is the Samsung PM1733 SSD.

Solid-state drives (SSDs) based on flash memory have already replaced magnetic disks as the standard storage medium in many settings. From a programmer's point of view, SSDs and disks look very similar: both are persistent storage devices that provide page-based access through file systems and system calls, and both have large capacities.

However, they also have important differences that become significant if you want to achieve optimal SSD performance. As we will see, SSDs are more complex, and if you think of them simply as fast disks, their performance can behave in a rather mysterious way. The purpose of this post is to show you why SSDs behave this way, which will help you create software that can take advantage of their features. (Note that I will be talking about NAND flash, not Intel Optane memory, which has different characteristics.)

Drives, not disks

SSDs are often called disks, but this is misleading because they store data in semiconductor devices rather than on a mechanical disk. To read or write an arbitrary block, a disk has to mechanically move its head to the right location, which takes about 10 ms. A random read from an SSD, by contrast, takes about 100 μs, which is 100 times faster. This low latency is why booting from an SSD is so much faster than booting from a disk.

Another important difference between disks and SSDs is that disks have a single disk head and perform well only on sequential access. In contrast, SSDs are made up of tens or even hundreds of flash chips ("parallel units") that can be accessed concurrently.

SSDs transparently stripe large files across the flash chips in page-sized chunks, and a hardware prefetcher ensures that sequential scans use all available flash chips. However, at the level of flash memory, there is not much difference between sequential and random reads. Most SSDs are capable of reaching full bandwidth with random page reads as well. This requires scheduling hundreds of concurrent random I/O requests to keep all flash chips busy at the same time. This can be done by running many threads or by using asynchronous I/O interfaces such as libaio or io_uring.
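As a rough illustration of keeping many random reads in flight at once, here is a minimal sketch that uses a thread pool rather than libaio or io_uring. The 4 KiB page size, the function names, and the thread count are assumptions chosen for the example, and `os.pread` is only available on POSIX systems. Reads on a file opened this way may be served from the OS page cache, so this shows the structure of the approach and is not a benchmark.

```python
import os
import random
import tempfile
from concurrent.futures import ThreadPoolExecutor

PAGE_SIZE = 4096  # assumed page size; real devices and file systems may differ


def read_page(fd: int, page_no: int) -> bytes:
    # os.pread takes an explicit offset, so all threads can share one descriptor.
    return os.pread(fd, PAGE_SIZE, page_no * PAGE_SIZE)


def random_reads(path: str, num_reads: int, num_threads: int) -> int:
    """Issue num_reads random page reads from num_threads workers; return bytes read."""
    num_pages = os.path.getsize(path) // PAGE_SIZE
    pages = [random.randrange(num_pages) for _ in range(num_reads)]
    fd = os.open(path, os.O_RDONLY)
    try:
        with ThreadPoolExecutor(max_workers=num_threads) as pool:
            # Up to num_threads reads are outstanding at any moment.
            return sum(len(buf) for buf in pool.map(lambda p: read_page(fd, p), pages))
    finally:
        os.close(fd)


if __name__ == "__main__":
    # Demo on a small temporary file; point the path at a large file on an SSD
    # to see how throughput changes with the number of threads.
    with tempfile.NamedTemporaryFile(delete=False) as f:
        f.write(os.urandom(256 * PAGE_SIZE))
    try:
        print(random_reads(f.name, num_reads=1000, num_threads=64), "bytes read")
    finally:
        os.unlink(f.name)
```

Threads are the simplest way to get concurrency here because `os.pread` releases the interpreter lock while it waits. An asynchronous interface achieves the same effect with fewer threads.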

Writing

Things get even more interesting when it comes to writes. For example, if you measure write latency, you may see results as low as 10 μs, which is 10 times faster than a read. However, latency only appears that low because SSDs cache writes in volatile RAM. The true write latency of NAND flash is approximately 1 ms, which is 10 times slower than a read. On consumer-grade SSDs, this can be measured by issuing a sync/flush command after the write to ensure that the data has been persisted to flash. On most server SSDs, write latency cannot be measured directly: the sync/flush completes immediately, since a battery guarantees that the write cache survives a power outage.
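The measurement described above can be sketched as follows, timing a page-sized write with and without a subsequent `os.fsync`. The helper names, the 4 KiB write size, and the repeat count are assumptions for illustration. Note that without the sync the write typically completes in the operating system's page cache before it even reaches the drive, and that a temporary directory may live on a RAM-backed file system, so for a meaningful number the path should point at a file on the SSD under test.

```python
import os
import statistics
import tempfile
import time

PAGE_SIZE = 4096  # assumed write size


def timed_write(fd: int, data: bytes, sync: bool) -> float:
    """Return the seconds taken to write data at offset 0, optionally followed by fsync."""
    start = time.perf_counter()
    os.pwrite(fd, data, 0)
    if sync:
        os.fsync(fd)  # ask the OS to push the data to stable storage
    return time.perf_counter() - start


def median_latency_us(path: str, sync: bool, repeats: int = 50) -> float:
    """Median latency in microseconds over several overwrites of the same page."""
    data = os.urandom(PAGE_SIZE)
    fd = os.open(path, os.O_WRONLY | os.O_CREAT, 0o600)
    try:
        samples = [timed_write(fd, data, sync) for _ in range(repeats)]
    finally:
        os.close(fd)
    return statistics.median(samples) * 1e6


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "latency.bin")
        print("without sync (us):", median_latency_us(path, sync=False))
        print("with fsync   (us):", median_latency_us(path, sync=True))
```

On a drive with a power-loss-protected write cache, the two numbers may end up close to each other, which matches the behavior described for server SSDs.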

To achieve high write bandwidth despite the fairly high write latency, SSDs use the same trick as for reads: they access multiple chips concurrently. Because the write cache can write pages asynchronously, it is not even necessary to schedule very many concurrent writes to get good write performance. However, write latency cannot always be hidden completely: since a write occupies a flash chip 10 times longer than a read, writes cause significant tail latencies for reads from the same flash chip.

Out-of-place writes

One important fact we have left out so far is that NAND pages cannot be overwritten. Page writes can only be performed sequentially, within blocks that were previously erased. These erase blocks are several megabytes in size and therefore consist of hundreds of pages. On a new SSD, all blocks are erased and the user can directly start adding new data.

Updating pages, however, is not that simple. It would be too costly to erase an entire block just to overwrite a single page. Therefore, SSDs perform page updates by writing the new version of the page to a new location. This means that logical and physical page addresses are decoupled. A mapping table stored on the SSD translates logical (software) addresses to physical (hardware) locations. This component is also called the Flash Translation Layer (FTL). For example, let's say we have an SSD with three erase blocks, each with four pages. The sequence of writes to pages P1, P2, P0, P3, P5, P1 can lead to the following physical state of the SSD, where → marks the next write position:

| Block | Page 0 | Page 1 | Page 2 | Page 3 |
|---|---|---|---|---|
| Block 0 | P1 (old) | P2 | P0 | P3 |
| Block 1 | P5 | P1 | → | |
| Block 2 | | | | |
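To make the mapping-table idea concrete, here is a toy model of out-of-place writes that reproduces the state shown above. `ToyFTL` and its methods are hypothetical names invented for this sketch. It assumes that pages are appended strictly in physical order and ignores erasing entirely, which a real flash translation layer cannot do.

```python
from typing import Dict, List, Optional, Tuple

PAGES_PER_BLOCK = 4  # taken from the example: three blocks of four pages
NUM_BLOCKS = 3


class ToyFTL:
    """Append-only toy model of a flash translation layer (no garbage collection)."""

    def __init__(self) -> None:
        # physical[block][slot] is the logical page stored there, or None if erased.
        self.physical: List[List[Optional[int]]] = [
            [None] * PAGES_PER_BLOCK for _ in range(NUM_BLOCKS)
        ]
        # Mapping table: logical page number -> (block, slot) of its newest copy.
        self.mapping: Dict[int, Tuple[int, int]] = {}
        self.next_slot = 0  # next free physical page, counted across all blocks

    def write(self, logical_page: int) -> None:
        if self.next_slot >= NUM_BLOCKS * PAGES_PER_BLOCK:
            raise RuntimeError("no erased pages left; garbage collection needed")
        block, slot = divmod(self.next_slot, PAGES_PER_BLOCK)
        self.physical[block][slot] = logical_page
        # Redirecting the mapping makes any earlier copy stale ("old").
        self.mapping[logical_page] = (block, slot)
        self.next_slot += 1

    def dump(self) -> List[List[str]]:
        """Render each physical page as 'Pn', 'Pn (old)', or '' for erased."""
        rows = []
        for b in range(NUM_BLOCKS):
            row = []
            for s in range(PAGES_PER_BLOCK):
                page = self.physical[b][s]
                if page is None:
                    row.append("")
                elif self.mapping[page] == (b, s):
                    row.append(f"P{page}")
                else:
                    row.append(f"P{page} (old)")
            rows.append(row)
        return rows


if __name__ == "__main__":
    ftl = ToyFTL()
    for p in [1, 2, 0, 3, 5, 1]:
        ftl.write(p)
    for block_no, row in enumerate(ftl.dump()):
        print(f"Block {block_no}:", row)
```

The only state that changes on an update is the mapping entry. The stale copy stays in flash until its whole block is erased.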

Garbage collection

With a mapping table and out-of-place writes, everything works well until the SSD runs out of free blocks. The old versions of overwritten pages must be reclaimed sooner or later. If we continue the previous example by writing pages P3, P4, P7, P1, P6, P2, we get the following situation:

| Block | Page 0 | Page 1 | Page 2 | Page 3 |
|---|---|---|---|---|
| Block 0 | P1 (old) | P2 (old) | P0 | P3 (old) |
| Block 1 | P5 | P1 (old) | P3 | P4 |
| Block 2 | P7 | P1 | P6 | P2 |

At this stage, we no longer have any free erase blocks (although logically there may still be space). Before another page can be written, the SSD must first erase a block. In our example, it is best for the garbage collector to erase block 0 because only one of its pages is still in use. After erasing block 0 and moving its one live page, we have space for three writes, and the SSD looks like this:

| Block | Page 0 | Page 1 | Page 2 | Page 3 |
|---|---|---|---|---|
| Block 0 | P0 | → | | |
| Block 1 | P5 | P1 (old) | P3 | P4 |
| Block 2 | P7 | P1 | P6 | P2 |
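The choice of block 0 in this example corresponds to a greedy policy: erase the block with the fewest live pages. The sketch below encodes the full-SSD state from the example as per-page liveness flags and applies that policy. The function names are hypothetical, and real garbage collectors may use different or more elaborate victim-selection strategies.

```python
from typing import Dict, List, Tuple

# True marks a page that is still live, False a stale ("old") copy.
# The flags mirror the example state in which all three blocks are full.
FULL_SSD: Dict[int, List[bool]] = {
    0: [False, False, True, False],  # P1 (old), P2 (old), P0, P3 (old)
    1: [True, False, True, True],    # P5, P1 (old), P3, P4
    2: [True, True, True, True],     # P7, P1, P6, P2
}


def pick_victim(blocks: Dict[int, List[bool]]) -> int:
    """Greedy policy (an assumption of this sketch): fewest live pages wins."""
    return min(blocks, key=lambda b: sum(blocks[b]))


def gc_cost(live_flags: List[bool]) -> Tuple[int, int]:
    """Return (pages that must be moved, pages freed for new writes)."""
    moved = sum(live_flags)
    freed = len(live_flags) - moved
    return moved, freed


if __name__ == "__main__":
    victim = pick_victim(FULL_SSD)
    moved, freed = gc_cost(FULL_SSD[victim])
    print(f"erase block {victim}: move {moved} page(s), free {freed} page(s)")
```

Picking block 1 instead would move three pages to free a single one, and block 2 would free nothing at all.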

Write Amplification and Overprovisioning

To garbage collect block 0, we need to physically move page P0, although logically nothing happens to that page. In other words, flash SSDs generally perform more physical (flash) writes than logical (software) writes. The ratio between the two is called write amplification. In our example, to make space for 3 new pages in block 0, we had to move 1 page. That is 4 physical writes for 3 logical writes, i.e., a write amplification of 1.33.

High write amplification decreases both the performance and the lifespan of flash memory. How high it gets depends on the access pattern and on how full the SSD is. Large sequential writes have low write amplification, and the worst case is random writes.

Let's say our SSD is 50% full and we are doing random writes. When we erase a block, on average about half of the pages in the block are still in use and need to be moved. That is, the write amplification at a fill factor of 50% is 2. In general, the worst-case write amplification at a fill factor f is 1/(1-f):

| f | 0.1 | 0.2 | 0.3 | 0.4 | 0.5 | 0.6 | 0.7 | 0.8 | 0.9 | 0.95 | 0.99 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| WA | 1.11 | 1.25 | 1.43 | 1.67 | 2.00 | 2.50 | 3.33 | 5.00 | 10.00 | 20.00 | 100.00 |
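The values in the table follow directly from the formula, and the formula itself is the per-block ratio from the earlier example taken to the average case: relocating a fraction f of each erased block leaves only 1-f of it for new data. A minimal sketch, with function names chosen for this example:

```python
def write_amplification(logical_writes: int, relocated_pages: int) -> float:
    """Physical writes divided by logical writes."""
    return (logical_writes + relocated_pages) / logical_writes


def worst_case_wa(fill_factor: float) -> float:
    """Worst-case write amplification 1 / (1 - f) for a fill factor 0 <= f < 1."""
    if not 0.0 <= fill_factor < 1.0:
        raise ValueError("fill factor must be in [0, 1)")
    return 1.0 / (1.0 - fill_factor)


if __name__ == "__main__":
    # The garbage-collection example: 3 new pages at the cost of moving 1 page.
    print(f"example: {write_amplification(3, 1):.2f}")
    for f in [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9, 0.95, 0.99]:
        print(f"f={f:<5} WA={worst_case_wa(f):.2f}")
```

The curve is flat at low fill factors and rises steeply past about 0.9, which is why the last few percent of capacity are so expensive to use.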

Since write amplification becomes extremely high at fill factors close to 1, most SSDs have hidden spare capacity. This overprovisioning is usually 10-20% of the total capacity. Of course, you can also add more overprovisioning yourself by creating an empty partition and never writing anything to it.
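To see why spare capacity helps, the worst-case formula 1/(1-f) can be applied to the physical fill factor instead of the user-visible one. The sketch below assumes that the spare fraction is measured against the total physical capacity and that unused user space (such as an empty partition) is never written. Both are simplifying assumptions of this example, and the function names are hypothetical.

```python
def physical_fill(user_fill: float, spare_fraction: float) -> float:
    """Fraction of the physical flash that holds live data.

    user_fill: fraction of the user-visible capacity in use (0..1).
    spare_fraction: hidden spare capacity as a fraction of the physical
        capacity (assumed interpretation of "10-20% of the total capacity").
    """
    return user_fill * (1.0 - spare_fraction)


def worst_case_wa(fill_factor: float) -> float:
    """Worst-case write amplification 1 / (1 - f) from the text."""
    return 1.0 / (1.0 - fill_factor)


if __name__ == "__main__":
    for spare in (0.10, 0.20):
        wa = worst_case_wa(physical_fill(1.0, spare))
        print(f"logically full drive, {spare:.0%} spare: worst-case WA about {wa:.1f}")
    # Leaving a quarter of the user capacity in an empty partition on top of 10% spare:
    wa = worst_case_wa(physical_fill(0.75, 0.10))
    print(f"75% used, 10% spare: worst-case WA about {wa:.2f}")
```

Under these assumptions, a logically full drive with 10% spare behaves like the f = 0.9 case, and 20% spare brings it down to the f = 0.8 case.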

Conclusion and additional sources

SSDs have become quite cheap and deliver very high performance. For example, the Samsung PM1733 server SSD costs roughly €200 per terabyte and offers nearly 7 GB/s read and 4 GB/s write bandwidth. Achieving such performance requires understanding how an SSD works, so in this post I have described the most important internal mechanisms of a flash SSD. I tried to be concise, which means I have simplified a few things. To learn more, you can start with this tutorial, which provides links to helpful articles. It should also be noted that, because SSDs are so fast, the OS I/O stack often becomes the performance bottleneck. Experimental results on Linux can be found in our article for the CIDR 2020 conference...
