Apache Airflow is a simple and convenient batch-oriented tool for building, scheduling, and monitoring data pipelines. Its key feature is that, using Python code and built-in building blocks, you can connect many of the different technologies in use today. The main working entity in Airflow is the DAG, a directed acyclic graph in which nodes are tasks and the dependencies between tasks are represented by directed edges.
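To make the graph idea concrete, here is a minimal sketch that uses only the Python standard library rather than Airflow itself. The task names and the helper `execution_order` are made up for illustration; the point is that a valid run order exists exactly when the dependency graph has no cycles.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical pipeline: each key is a task, each value is the set of
# tasks it depends on (its upstream tasks).
dependencies = {
    "extract": set(),
    "transform": {"extract"},
    "load": {"transform"},
    "report": {"load"},
}


def execution_order(deps):
    """Return one valid run order; raise ValueError if the graph has a cycle."""
    try:
        return list(TopologicalSorter(deps).static_order())
    except CycleError as exc:
        # The second element of args holds the detected cycle.
        raise ValueError(f"not a DAG, cycle found: {exc.args[1]}") from exc


order = execution_order(dependencies)
print(order)
```

Airflow performs a comparable check when it parses a DAG file, which is why a dependency loop between tasks is rejected before anything runs.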

Those who use Apache Airflow to orchestrate data loads into a warehouse will probably appreciate the flexibility it offers for templated tasks, where the whole development process comes down to filling in a configuration file that describes the DAG parameters and lists the tasks to run. At Leroy Merlin, this approach is successfully used to create tasks that transfer data from the raw layer to the ODS layer of the warehouse. It was therefore decided to extend it to the tasks that populate data marts.

The main difficulty was that we did not yet have a unified methodology for developing data marts and the procedures that populate them, so each developer solved the problem according to personal preference and experience. This fits one of the main corporate IT principles, "You build it, you run it", which means that developers are responsible for their own solutions and support them themselves. This principle is good for testing hypotheses quickly, but for tasks of the same type a standard solution is more suitable.

How it was

, . GreenPlum, DAG , GitHub, DAG Airflow . :

Python Apache Airflow;

, , DAG Airflow, ;

;

DAG, , ;

SQL- . , «» Airflow.

, . DAG- , , , , DummyOperator- PostgresOperator-. , , , :

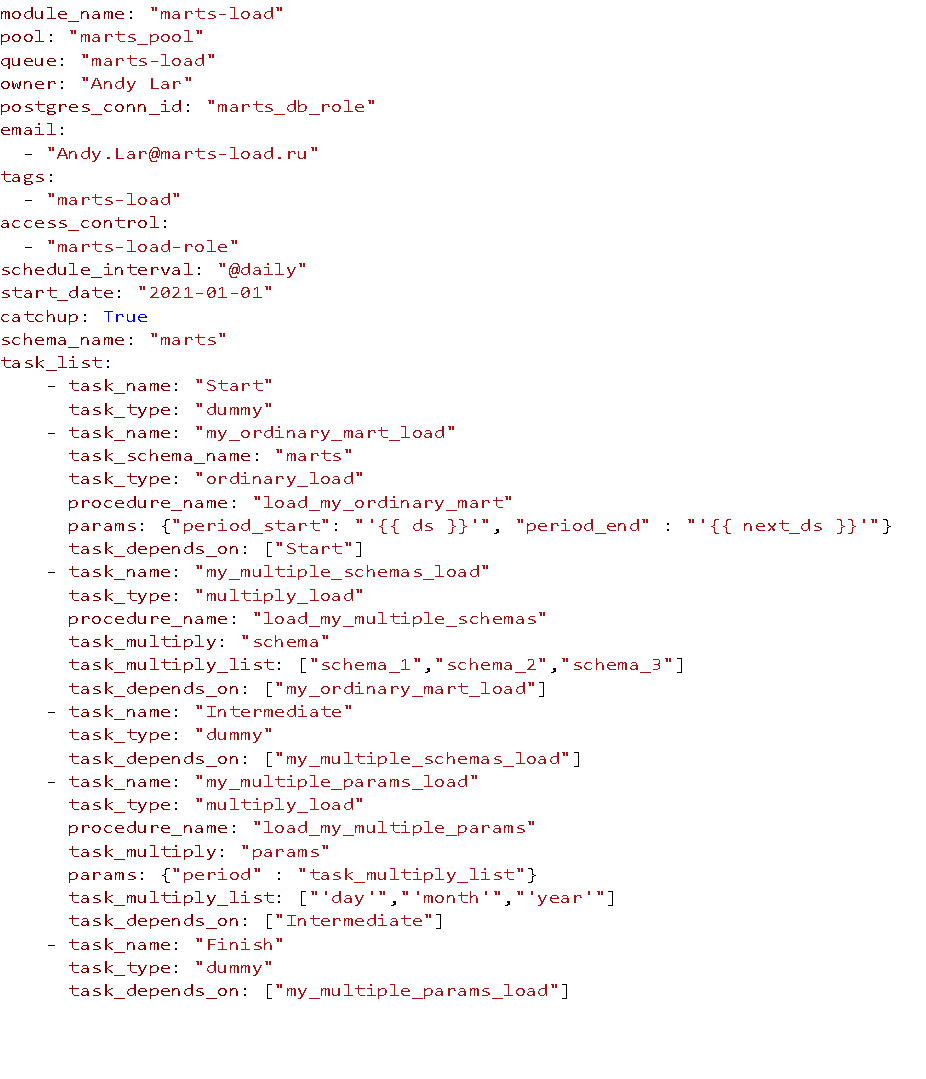

DAG- YAML, , -: , , , , . . YAML , API;

, , ;

, DAG- Airflow.

:

DAG:

:

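module_name – used to form the DAG_ID;

pool – the Airflow pool in which the tasks run;

queue – the queue the tasks are sent to;

owner – the owner of the DAG;

postgres_conn_id – the ID of the Airflow connection to the database;

email – the address for notifications;

tags – tags for finding the DAG in the UI;

access_control – access settings for the DAG;

schedule_interval – the DAG's run schedule;

start_date and catchup – together these determine how far back the DAG is run. Airflow divides the period between start_date and end_date (or the current moment, if end_date is not set) into intervals according to schedule_interval. If catchup is True, the DAG is run for every interval starting from start_date; if it is False, only for the most recent one;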

schema_name – , ;

task_list – the list of tasks in the DAG.
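The interaction between start_date, schedule_interval and catchup is easier to see in a small model. The sketch below is a deliberate simplification written for this article, not Airflow's scheduler code: the function name `planned_intervals` is hypothetical, the schedule is given as a `timedelta`, and time zones and cron expressions are ignored.

```python
from datetime import datetime, timedelta


def planned_intervals(start_date, end_date, schedule_interval, catchup):
    """Simplified model of which complete intervals get a DAG run."""
    intervals = []
    left = start_date
    # Collect every complete interval that fits between the two dates.
    while left + schedule_interval <= end_date:
        intervals.append((left, left + schedule_interval))
        left += schedule_interval
    # With catchup every interval is run; without it only the latest one.
    return intervals if catchup else intervals[-1:]


start = datetime(2021, 1, 1)
now = datetime(2021, 1, 4)
daily = timedelta(days=1)

all_runs = planned_intervals(start, now, daily, catchup=True)
latest_only = planned_intervals(start, now, daily, catchup=False)
print(len(all_runs), latest_only)
```

With a start_date far in the past, leaving catchup enabled can therefore queue a large number of runs as soon as the DAG is switched on.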

:

task_name – the task_id in Airflow

task_type –

task_schema_name - , ,

task_conn_id – ,

procedure_name –

params –

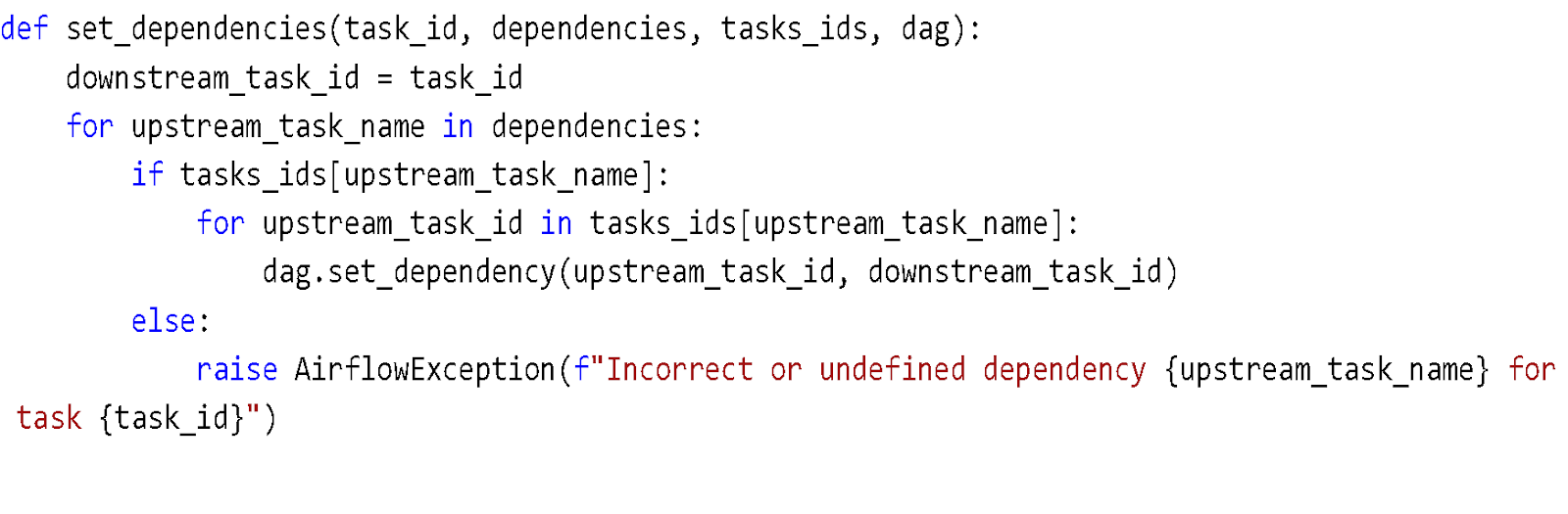

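task_depends_on – the tasks that this task depends on

priority_weight – the priority of the task

task_concurrency – the limit on concurrent runs of the task across DAG runs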

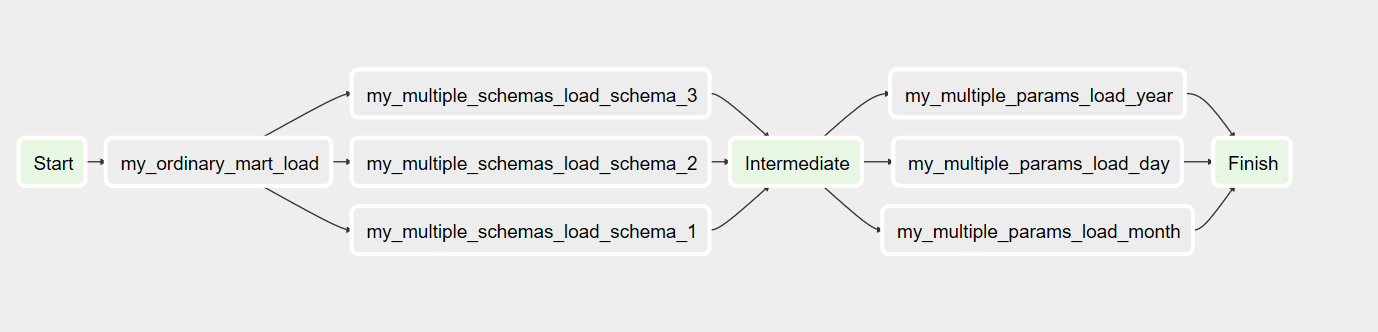
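As an illustration of how the task parameters could drive DAG generation, here is a minimal sketch that turns task_depends_on into dependency edges and validates the references. The config is written as a Python dict rather than YAML so that the example needs only the standard library. The sample values, the helper name `build_edges`, and the assumption that task_depends_on holds a list of task_name values are all illustrative and not taken from the actual generator.

```python
# Hypothetical config that uses the key names described above; values are made up.
config = {
    "module_name": "sales_mart",
    "task_list": [
        {"task_name": "start"},
        {"task_name": "fill_mart", "task_depends_on": ["start"]},
        {"task_name": "finish", "task_depends_on": ["fill_mart"]},
    ],
}


def build_edges(cfg):
    """Return (upstream, downstream) pairs after validating task references."""
    names = [task["task_name"] for task in cfg["task_list"]]
    if len(names) != len(set(names)):
        raise ValueError("task_name values must be unique")
    known = set(names)
    edges = []
    for task in cfg["task_list"]:
        for upstream in task.get("task_depends_on", []):
            if upstream not in known:
                raise ValueError(
                    f"{task['task_name']} depends on unknown task {upstream}"
                )
            edges.append((upstream, task["task_name"]))
    return edges


edges = build_edges(config)
print(edges)
```

In a generator built on Airflow, each pair would typically become a dependency between two operator instances, for example `tasks[upstream] >> tasks[downstream]`.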

Task types (task_type):

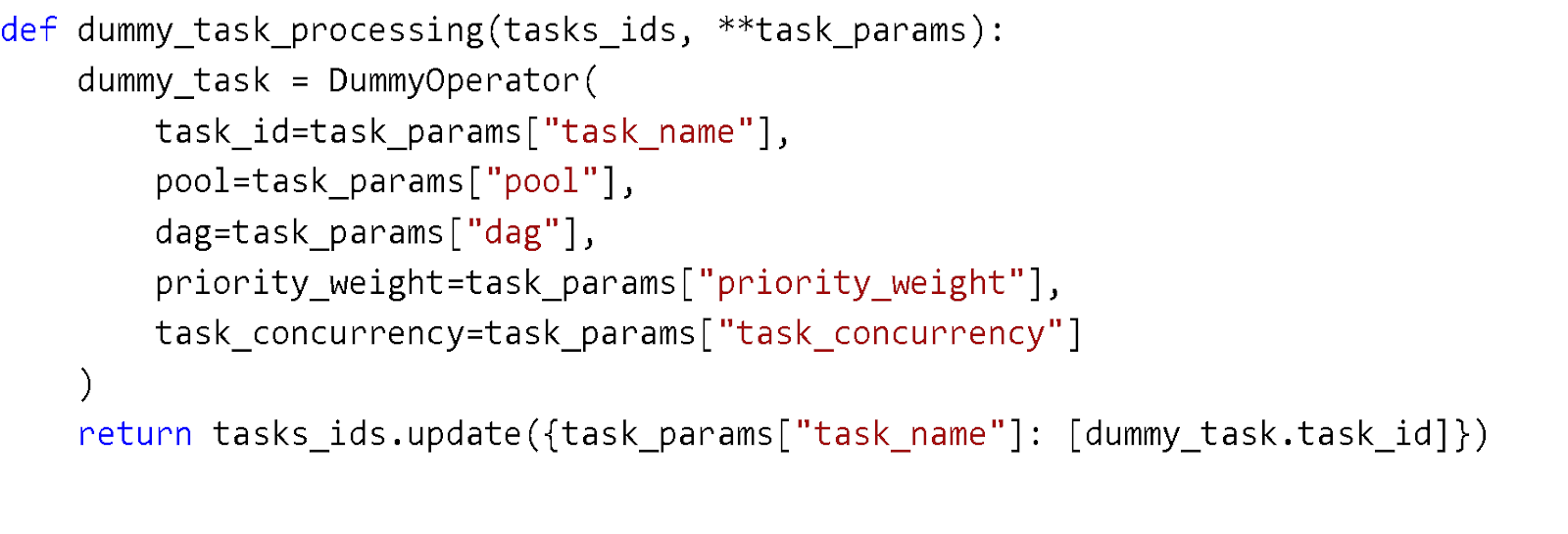

1) Dummy – DummyOperator. , , .

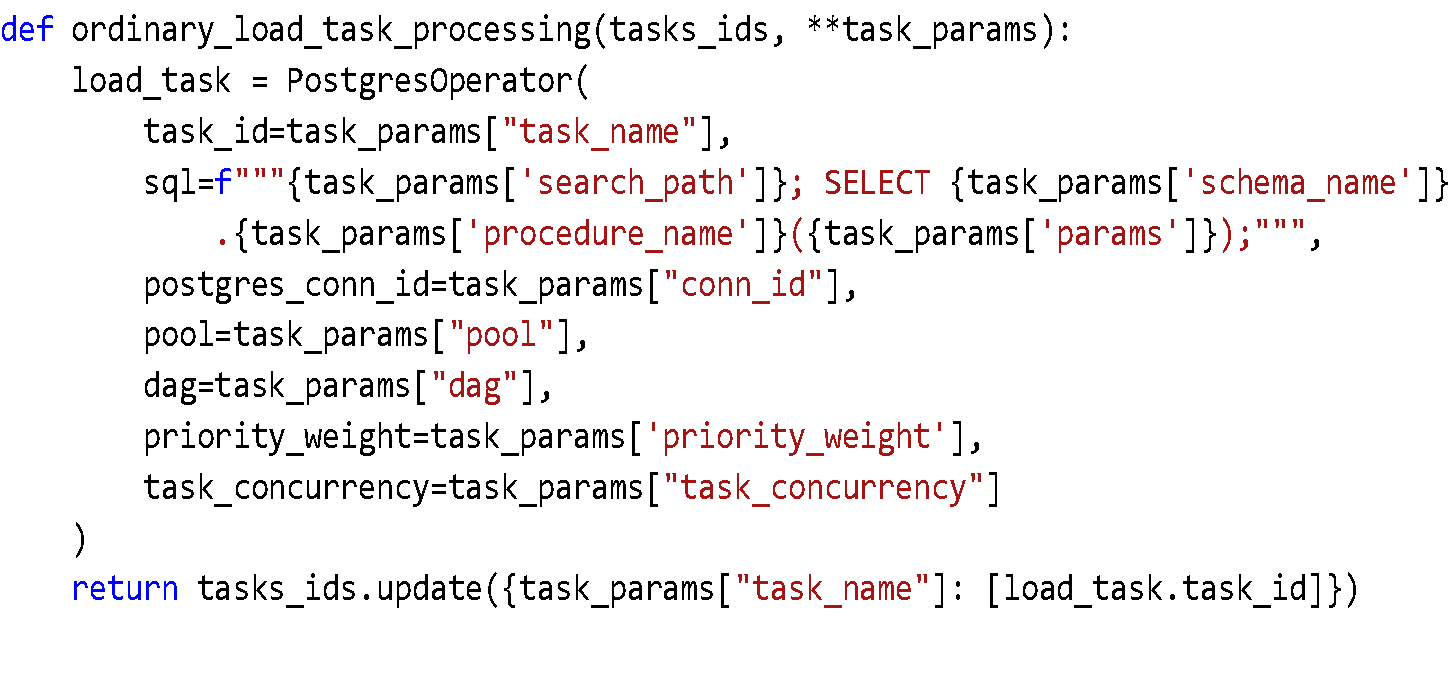

2) – PostgresOperator Airflow

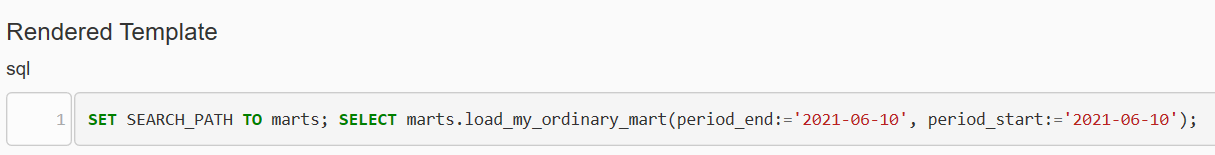

SQL-, :

3) – PostgresOperator( , )

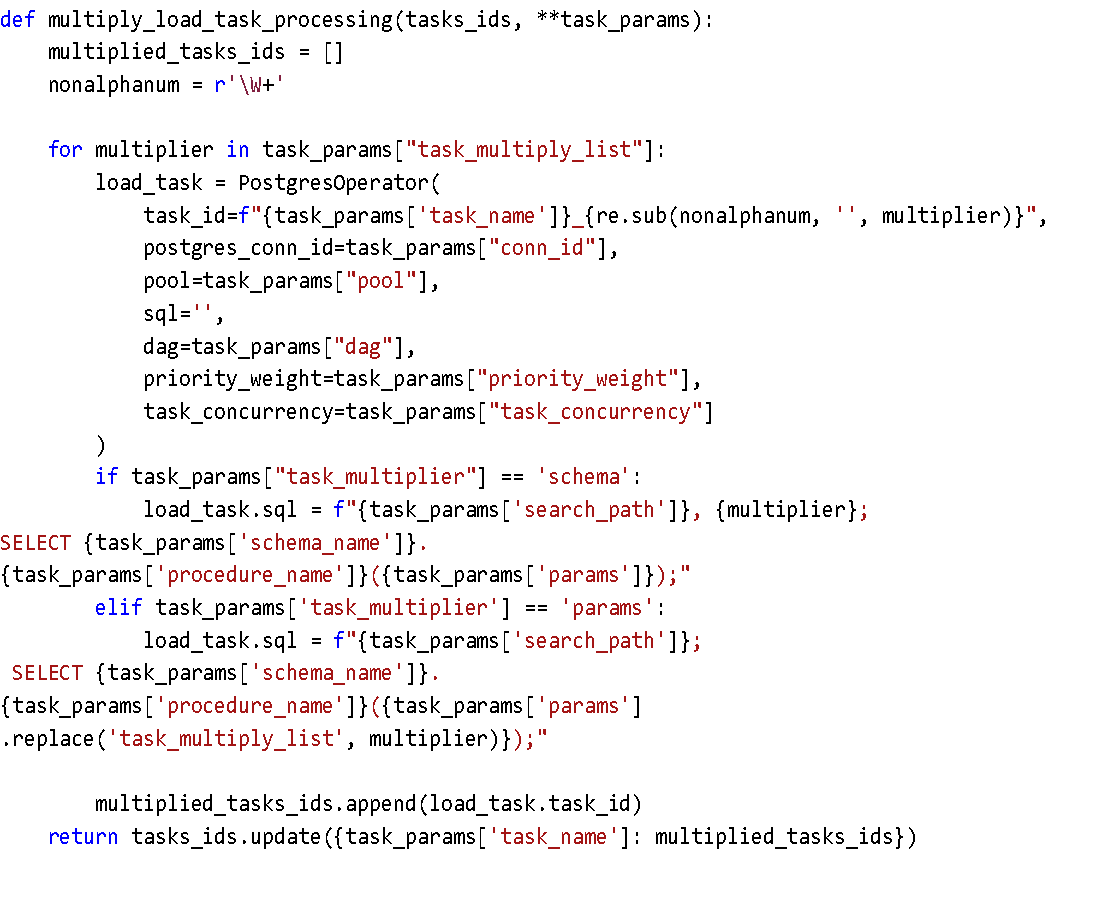

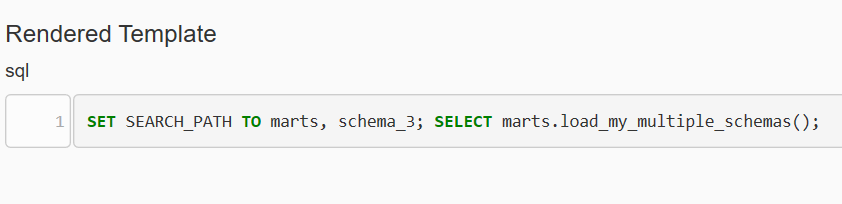

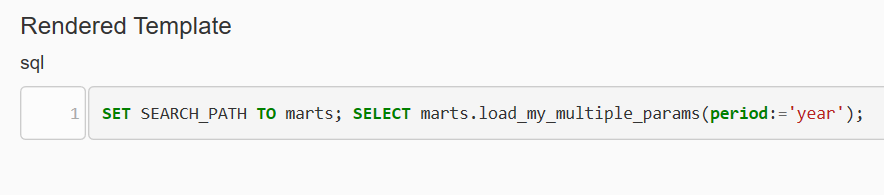

:

task_multiply – takes one of 2 values: "schema" or "params". With "schema", the values from task_multiply_list are used for SEARCH_PATH. With "params", the values from task_multiply_list are passed to params as 'task_multiply_list'

task_multiply_list - ,
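One plausible reading of these two parameters is that a single task description is expanded into one task per entry of task_multiply_list. The sketch below follows that reading and should be treated as an assumption throughout: the helper `multiply_task`, the `search_path` key, and the way generated task names are built are all hypothetical.

```python
import copy


def multiply_task(task):
    """Expand one task spec into one spec per task_multiply_list entry."""
    mode = task.get("task_multiply")
    if mode is None:
        return [task]
    if mode not in ("schema", "params"):
        raise ValueError(f"unsupported task_multiply value: {mode!r}")
    expanded = []
    for value in task["task_multiply_list"]:
        clone = copy.deepcopy(task)
        # The multiplication settings are consumed by the expansion itself.
        clone.pop("task_multiply")
        clone.pop("task_multiply_list")
        # Assumed naming scheme for the generated tasks.
        clone["task_name"] = f"{task['task_name']}_{value}"
        if mode == "schema":
            # Assumed: the entry is the schema to put on SEARCH_PATH.
            clone["search_path"] = value
        else:
            # Assumed: the entry is exposed to the SQL through params.
            clone.setdefault("params", {})["task_multiply_list"] = value
        expanded.append(clone)
    return expanded


by_schema = multiply_task(
    {"task_name": "load", "task_multiply": "schema", "task_multiply_list": ["a", "b"]}
)
by_params = multiply_task(
    {"task_name": "load", "task_multiply": "params", "task_multiply_list": [1, 2]}
)
print(by_schema, by_params)
```

Under this reading, the same SQL is written once and reused for every schema or parameter value in the list.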

SQL-.

“schema”:

“params”:

:

DAG. Apache Airflow , - . 10-15. , , . : . DAG .