Major cloud providers attach virtual drives to dedicated physical servers. Yet if you look into the server's OS, you will see a physical disk with the provider's name in the "manufacturer" field. Today we will look at how this is possible.

What is a Smart NIC?

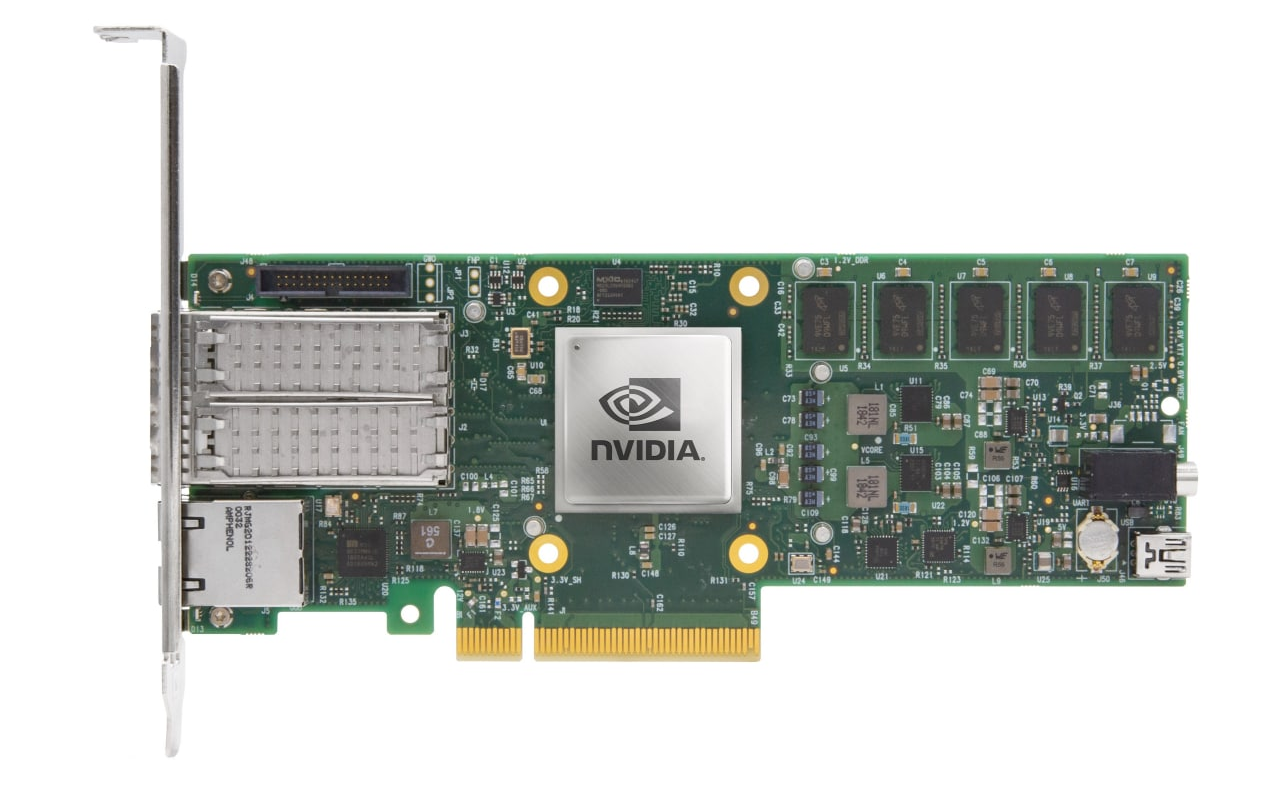

At the heart of this "magic" are smart network cards (Smart NICs). These cards have their own processor, RAM, and storage; in effect, each one is a mini-server in the form of a PCIe card. Their main task is to offload I/O operations from the CPU.

In this article we will talk about a specific device: NVIDIA BlueField 2. NVIDIA calls such devices DPUs (Data Processing Units) and sees their purpose as "freeing" the central processor from a variety of infrastructure tasks: storage, networking, information security, and even host management.

We have a device that looks like a regular 25GE network card, but it has an eight-core ARM Cortex-A72 processor with 16 GB of RAM and 64 GB of persistent eMMC storage.

Additionally, Mini-USB, NC-SI and RJ-45 connectors are visible. The first connector is intended solely for debugging and is not used in production. NC-SI and RJ-45 allow you to connect to the server's BMC module through the card's ports.

Enough theory, time to launch.

First start

After the network card is installed, the first start of the server takes unusually long. The reason is that the server's UEFI firmware polls the connected PCIe devices, and the smart network card blocks this process until it has booted itself. In our case, the server boot process took about two minutes.

After booting the server OS, you can see two ports of the smart network card.

    root@host:~# lspci
    98:00.0 Ethernet controller: Mellanox Technologies MT42822 BlueField-2 integrated ConnectX-6 Dx network controller (rev 01)
    98:00.1 Ethernet controller: Mellanox Technologies MT42822 BlueField-2 integrated ConnectX-6 Dx network controller (rev 01)
    98:00.2 DMA controller: Mellanox Technologies MT42822 BlueField-2 SoC Management Interface (rev 01)

At this point, the smart network card behaves like a normal one. To interact with the card, you need to download and install the BlueField drivers from the NVIDIA DOCA SDK page. At the end of the process, the installer will prompt you to restart the openibd service so that the installed drivers are loaded. Restart it:

/etc/init.d/openibd restart

If everything was done correctly, a new network interface, tmfifo_net0, will appear in the OS.

    root@host:~# ifconfig tmfifo_net0
    tmfifo_net0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
            inet6 fe80::21a:caff:feff:ff02 prefixlen 64 scopeid 0x20<link>
            ether 00:1a:ca:ff:ff:02 txqueuelen 1000 (Ethernet)
            RX packets 0 bytes 0 (0.0 B)
            RX errors 0 dropped 0 overruns 0 frame 0
            TX packets 13 bytes 1006 (1.0 KB)
            TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

By default, BlueField's address is 192.168.100.2/24, so we assign 192.168.100.1/24 to the tmfifo_net0 interface. Then we start the rshim service and enable it at boot.

    systemctl enable rshim
    systemctl start rshim

After that, access to the OS of the card from the server is possible in two ways:

- via SSH over the tmfifo_net0 interface;

- through the console, the character device /dev/rshim0/console.

The second method works regardless of the state of the card's OS.
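
As a rough sketch, the host-side steps above might look like this. The `ip` commands and the use of `screen` at 115200 baud are assumptions, not taken from the setup described here; the `ubuntu` login is the one visible in the card's shell prompt further down. The functions only print the commands, so they can be reviewed before being run as root.

```shell
#!/bin/sh
# Sketch only: prints the host-side commands instead of running them.
# Assumptions: iproute2 (`ip`) and `screen` are installed on the host.

IFACE=tmfifo_net0
HOST_ADDR=192.168.100.1/24   # host end of the link
CARD_ADDR=192.168.100.2      # BlueField default address

# Commands that give the host an address on the tmfifo link.
tmfifo_commands() {
    echo "ip addr add $HOST_ADDR dev $IFACE"
    echo "ip link set $IFACE up"
}

# Way 1: SSH over tmfifo_net0.
ssh_command() {
    echo "ssh ubuntu@$CARD_ADDR"
}

# Way 2: the rshim console character device (baud rate is an assumption).
console_command() {
    echo "screen /dev/rshim0/console 115200"
}

tmfifo_commands
ssh_command
console_command
```

Piping the output of `tmfifo_commands` into `sudo sh` would apply the address once the commands have been checked.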

Other ways of connecting remotely to the card's OS are also possible; they do not require access to the server in which the card is installed:

- SSH over the 1GbE out-of-band management port or over the uplink interfaces (including those with PXE boot support);

- console access through a dedicated RS232 port;

- RSHIM interface via dedicated USB port.

Inside view

Now that we have access to the card's OS, we can marvel at the fact that there is a smaller server inside our server. The OS image preinstalled on the card contains all the software needed to manage the card and, it seems, even more. The network card runs a full-fledged Linux distribution, in our case Ubuntu 20.04.

If necessary, you can install on the network card any Linux distribution that supports the aarch64 (ARMv8) architecture and UEFI. If you are connected through the console, pressing ESC while the card boots takes you to its own UEFI Setup Utility. There are unusually few settings here compared to the server counterpart.

The OS on BlueField 2 can be booted over PXE, and the UEFI Setup Utility lets you customize the boot order. Just imagine: first the network card PXE-boots itself, and then the server!

We also discovered that Docker is available in the OS by default, although this seems redundant for a network card. Then again, speaking of redundancy, we installed a JVM from the package manager and launched a Minecraft server on the network card. No serious tests were carried out, but playing on the server in a small group is quite comfortable.

The OS of the network card displays many network interfaces:

    ubuntu@bluefield:~$ ifconfig | grep -E '^[^ ]'
    docker0: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500
    lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536
    oob_net0: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500
    p0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
    p1: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500
    p0m0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
    p1m0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
    pf0hpf: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
    pf0sf0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
    pf1hpf: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
    pf1sf0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
    tmfifo_net0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

We already know the tmfifo_net0, lo and docker0 interfaces. The oob_net0 interface corresponds to the RJ-45 out-of-band management port. The rest of the interfaces are associated with the optical ports:

- pX - the representor device of a physical port of the card;

- pfXhpf - the Host Physical Function, an interface that is accessible to the host;

- pfXvfY - the representor of a Host Virtual Function, the virtual interfaces used for SR-IOV virtualization on the host;

- pXm0 - a special PCIe Sub-Function interface used for communication between the card and the port. The card can use this interface to access the network;

- pfXsf0 - the PCIe Sub-Function representor of the pXm0 interface.
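
The naming scheme in this list can be captured in a small helper. This is only an illustrative sketch: the function name and the role labels are ours, and the glob patterns assume single-digit port numbers.

```shell
#!/bin/sh
# Sketch: map an interface name on the card to its role.
# The glob patterns assume single-digit port numbers (X) and are
# deliberately loose; they are not an official naming specification.

classify_iface() {
    case "$1" in
        pf[0-9]hpf)      echo "host physical function" ;;
        pf[0-9]vf[0-9]*) echo "host virtual function representor" ;;
        pf[0-9]sf[0-9]*) echo "sub-function representor" ;;
        p[0-9]m[0-9]*)   echo "sub-function interface" ;;
        p[0-9])          echo "physical port representor" ;;
        oob_net0)        echo "out-of-band management port" ;;
        *)               echo "other" ;;
    esac
}

# Print a role for some of the names seen in the ifconfig output.
for name in p0 p0m0 pf0hpf pf0sf0 oob_net0 docker0; do
    printf '%-10s %s\n' "$name" "$(classify_iface "$name")"
done
```

Running something like this over the names reported by ifconfig on the card is a quick way to check which group each interface belongs to.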

The easiest way to understand the purpose of the interfaces is from the diagram:

The interfaces are directly connected to the Virtual Switch, which is implemented in BlueField 2. But we'll talk about that in another article. In this article, we want to look at NVMe emulation, which allows you to connect software-defined storage (SDS) to dedicated servers as physical disks. This makes all the advantages of SDS available on bare metal.

Setting up NVMe emulation

By default, NVMe emulation mode is disabled. We enable it with the mlxconfig command.

    mst start
    #
    mlxconfig -d 03:00.0 s INTERNAL_CPU_MODEL=1 PF_BAR2_ENABLE=0 PER_PF_NUM_SF=1
    mlxconfig -d 03:00.0 s PF_SF_BAR_SIZE=8 PF_TOTAL_SF=2
    mlxconfig -d 03:00.1 s PF_SF_BAR_SIZE=8 PF_TOTAL_SF=2
    # NVMe
    mlxconfig -d 03:00.0 s NVME_EMULATION_ENABLE=1 NVME_EMULATION_NUM_PF=1

After enabling NVMe Emulation mode, you need to reboot the card. Setting up NVMe emulation comes down to two steps:

- Configuring SPDK.

- Setting up snap_mlnx.

At the moment, only two methods of accessing remote storage have been officially announced: NVMe-oF and iSCSI, and only NVMe-oF has hardware acceleration. However, other protocols can be used as well. We are interested in connecting Ceph storage.

Unfortunately, there is no out-of-the-box support for rbd. Therefore, to connect to the Ceph storage, you must use the rbd kernel module, which creates the /dev/rbdX block device. The NVMe emulation stack, in turn, works with that block device.

First of all, you need to specify where the storage that we will present as NVMe is located. This is done through the arguments of the spdk_rpc.py script. To persist across card reboots, the commands are written to /etc/mlnx_snap/spdk_rpc_init.conf.

To connect a block device, use the command bdev_aio_create <path to block device> <name> <block size in bytes>. The <name> parameter is used later in the configuration and does not have to match the name of the block device. For example:

bdev_aio_create /dev/rbd0 rbd0 4096
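
The step that creates the /dev/rbdX device is not shown here. A minimal sketch, assuming the standard `rbd map` command from the Ceph client tools is available on the card and prints the path of the mapped device; the pool and image names in the usage note are hypothetical, and the helper names are ours.

```shell
#!/bin/sh
# Sketch: map a Ceph RBD image through the kernel module and register it
# with SPDK as an AIO bdev.

SPDK_INIT_CONF=/etc/mlnx_snap/spdk_rpc_init.conf

# Build the config line for an already mapped device.
# The bdev name is derived from the device path (/dev/rbd0 -> rbd0).
aio_bdev_line() {
    dev=$1
    block_size=${2:-4096}
    echo "bdev_aio_create $dev $(basename "$dev") $block_size"
}

# Map POOL/IMAGE and append the matching line to the SPDK init file.
# Not called here: it needs root, the rbd CLI and a reachable cluster.
map_and_register() {
    dev=$(rbd map "$1/$2") || return 1
    aio_bdev_line "$dev" >> "$SPDK_INIT_CONF"
}

aio_bdev_line /dev/rbd0    # prints: bdev_aio_create /dev/rbd0 rbd0 4096
```

On the card, a call such as `map_and_register mypool myimage` would perform both steps in one go.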

To connect an rbd device directly, SPDK and mlnx_snap must be recompiled with rbd support. We got executables compiled with rbd support from tech support. To connect, we used the command bdev_rbd_create <pool name> <rbd name> <block size in bytes>. This command does not let you specify the device name; it generates one itself and prints it on completion. In our case it was Ceph0. Remember the device name: it is needed in further configuration.

Connecting rbd through the kernel module and a block device, instead of using rbd directly, may look like the wrong decision, but this turned out to be a case where the "crutch" works better than the "smart" solution: in performance tests, the "correct" solution was slower.

Next, you need to configure the representation that the host will see. Configuration is done via snap_rpc.py, and the commands are saved in /etc/mlnx_snap/snap_rpc_init.conf. First, we create the drive (an NVMe subsystem) with the following command.

subsystem_nvme_create <NVMe Qualified Name (NQN)> < > <>

Next, we create a controller.

controller_nvme_create <NQN> < > -c < > --pf_id 0

The emulation manager is most often called mlx5_x. If you are not using hardware acceleration, you can use the first one available, that is, mlx5_0. After this command is executed, the name of the controller is displayed. In our case it is NvmeEmu0pf0.

Finally, add a namespace (NVMe Namespace) to the created controller.

controller_nvme_namespace_attach < > < > -c < >

In our case the device type is always spdk; we got the device ID at the SPDK configuration stage and the controller name in the previous step. As a result, the file /etc/mlnx_snap/snap_rpc_init.conf looks like this:

    subsystem_nvme_create nqn.2020-12.mlnx.snap SSD123456789 "Selectel ceph storage"
    controller_nvme_create nqn.2020-12.mlnx.snap mlx5_0 --pf_id 0 -c /etc/mlnx_snap/mlnx_snap.json
    controller_nvme_namespace_attach -c NvmeEmu0pf0 spdk Nvme0n10 1
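
To make the role of each argument explicit, the same file can be generated from named variables. This is only a sketch: the variable names are ours, and reading the second and third arguments of `subsystem_nvme_create` as serial number and model, and the trailing `1` as the namespace ID, is inferred from the values that `nvme list` reports on the host.

```shell
#!/bin/sh
# Sketch: generate the contents of snap_rpc_init.conf from named values.
# All values are the ones used in this article; the names are assumptions.

NQN=nqn.2020-12.mlnx.snap                 # NVMe Qualified Name
SERIAL=SSD123456789                       # shown as SN on the host
MODEL="Selectel ceph storage"             # shown as Model on the host
EMU_MANAGER=mlx5_0                        # emulation manager
SNAP_JSON=/etc/mlnx_snap/mlnx_snap.json   # value passed with -c
CONTROLLER=NvmeEmu0pf0                    # printed by controller_nvme_create
BDEV=Nvme0n10                             # device ID from the SPDK stage
NSID=1                                    # assumed to be the namespace ID

snap_rpc_init() {
    printf 'subsystem_nvme_create %s %s "%s"\n' "$NQN" "$SERIAL" "$MODEL"
    printf 'controller_nvme_create %s %s --pf_id 0 -c %s\n' "$NQN" "$EMU_MANAGER" "$SNAP_JSON"
    printf 'controller_nvme_namespace_attach -c %s spdk %s %s\n' "$CONTROLLER" "$BDEV" "$NSID"
}

snap_rpc_init
```

Redirecting the output to /etc/mlnx_snap/snap_rpc_init.conf reproduces the file shown above.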

Restart the mlnx_snap service:

sudo service mlnx_snap restart

If everything is configured correctly, the service will start. The NVMe disk will not appear on the host on its own: you need to reload the nvme kernel module.

sudo rmmod nvme

sudo modprobe nvme
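
After the module is reloaded, the device node may take a moment to show up. A small polling helper can wait for it; this is a sketch with assumed names and timeouts, and `reload_nvme` is defined but not called because it needs root and fails if the nvme module is in use.

```shell
#!/bin/sh
# Sketch: reload the nvme module and wait for the emulated disk to appear.

# wait_for_path PATH [TIMEOUT_SECONDS]: succeed as soon as PATH exists,
# fail once TIMEOUT_SECONDS (default 10) have passed.
wait_for_path() {
    path=$1
    timeout=${2:-10}
    elapsed=0
    while [ ! -e "$path" ]; do
        [ "$elapsed" -ge "$timeout" ] && return 1
        sleep 1
        elapsed=$((elapsed + 1))
    done
    return 0
}

# Reload the module, then wait up to 15 seconds for the disk node.
# The device path /dev/nvme0n1 is the one seen on our host.
reload_nvme() {
    rmmod nvme && modprobe nvme && wait_for_path /dev/nvme0n1 15
}
```

Calling `reload_nvme` as root returns success only if the disk node appeared within the timeout.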

And now our "virtual physical" disk has appeared on the host.

    root@host:~# nvme list
    Node             SN                   Model                                    Namespace Usage                      Format           FW Rev
    ---------------- -------------------- ---------------------------------------- --------- -------------------------- ---------------- --------
    /dev/nvme0n1     SSD123456789         Selectel ceph storage                    1         515.40 GB / 515.40 GB      4 KiB + 0 B      1.0

We now have a virtual disk presented to the system as a physical one. Let's test it with tasks typical for physical disks.

Testing

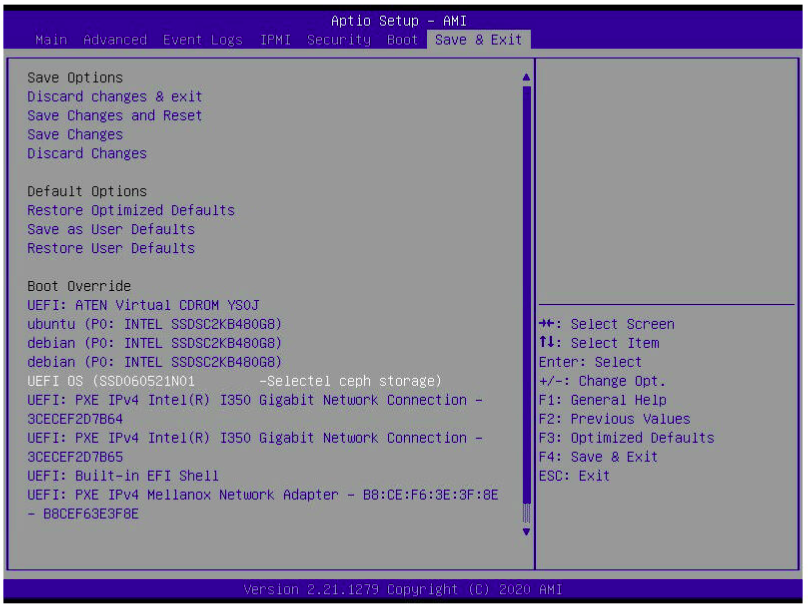

First of all, we decided to install an OS on the virtual disk. We used the CentOS 8 installer, and it detected the disk without any problems.

Installation was carried out as usual. We then checked for the CentOS bootloader in the UEFI Setup Utility.

We booted into the installed CentOS and made sure that the root filesystem is on the NVMe disk.

    [root@localhost ~]# lsblk
    NAME          MAJ:MIN RM   SIZE RO TYPE MOUNTPOINT
    sda             8:0    0 447.1G  0 disk
    |-sda1          8:1    0     1M  0 part
    |-sda2          8:2    0   244M  0 part
    |-sda3          8:3    0   977M  0 part
    `-sda4          8:4    0   446G  0 part
      `-vg0-root  253:3    0   446G  0 lvm
    sdb             8:16   0 447.1G  0 disk
    sr0            11:0    1   597M  0 rom
    nvme0n1       259:0    0   480G  0 disk
    |-nvme0n1p1   259:1    0   600M  0 part /boot/efi
    |-nvme0n1p2   259:2    0     1G  0 part /boot
    `-nvme0n1p3   259:3    0 478.4G  0 part
      |-cl-root   253:0    0    50G  0 lvm  /
      |-cl-swap   253:1    0     4G  0 lvm  [SWAP]
      `-cl-home   253:2    0 424.4G  0 lvm  /home

    [root@localhost ~]# uname -a
    Linux localhost.localdomain 4.18.0-305.3.1.el8.x86_64 #1 SMP Tue Jun 1 16:14:33 UTC 2021 x86_64 x86_64 x86_64 GNU/Linux

We also tested the emulated disk with the fio utility. Here are the results.

| Test | AIO bdev, IOPS | Ceph RBD bdev, IOPS |
|---|---|---|
| randread, 4k, 1 | 1,329 | 849 |
| randwrite, 4k, 1 | 349 | 326 |
| randread, 4k, 32 | 15,100 | 15,000 |
| randwrite, 4k, 32 | 9,445 | 9,712 |
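
The exact fio parameters are not given. A hedged sketch of how such a run could be launched, assuming the last field of the test name is the queue depth (`--iodepth`) and that the libaio engine with direct I/O and a 60-second runtime is used; all of these are assumptions rather than the settings behind the numbers above.

```shell
#!/bin/sh
# Sketch: run one fio job against the emulated disk.
# WARNING: write tests overwrite data on DEVICE.

FIO=${FIO:-fio}                  # override for a dry run, e.g. FIO=echo
DEVICE=${DEVICE:-/dev/nvme0n1}   # the emulated disk on the host

# fio_job RW IODEPTH, for example: fio_job randread 32
fio_job() {
    rw=$1
    depth=$2
    "$FIO" --name="$rw-qd$depth" --filename="$DEVICE" \
        --rw="$rw" --bs=4k --iodepth="$depth" \
        --ioengine=libaio --direct=1 \
        --runtime=60 --time_based
}
```

With `FIO=echo` the function prints the arguments instead of starting a benchmark, which is a safe way to check them first.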

Conclusion

"Smart" network cards are real technical magic: they not only offload I/O operations from the central processor, but also "trick" it by presenting a remote drive as a local one.

This approach lets you take advantage of software-defined storage on dedicated servers: with a snap of your fingers, you can move disks between servers, resize them, take snapshots, and deploy ready-made operating system images in seconds.