There is no cure for Alzheimer's disease. But the sooner it is diagnosed, the better the chances of slowing its progression.

A team of researchers from IBM and the University of Tsukuba (Japan) has developed an AI model that helps detect the onset of mild cognitive impairment (MCI, the transitional stage between normal aging and dementia) by asking older people everyday questions. The paper, published in the journal Frontiers in Digital Health, provides the first empirical evidence of the effectiveness of automated patient assessment based on speech analysis using tablets.

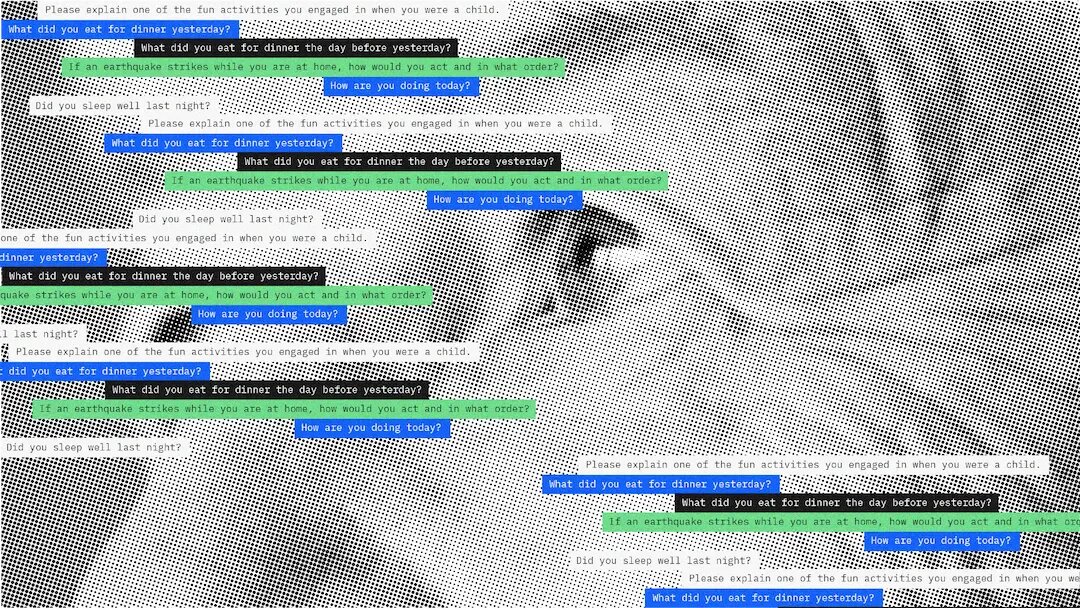

Unlike previous studies, this AI-powered model analyzes spoken answers to everyday questions, collected through an app on a smartphone or tablet. For example, patients may be asked about their mood, plans for the day, well-being, or yesterday's dinner. Earlier studies mainly analyzed speech recorded during cognitive tests, in which patients were asked, for example, to count backward from 925 in steps of three, or to describe an image shown to them in as much detail as possible.

The researchers found that the accuracy of detecting early signs of MCI from answers to such simple questions was almost 90%, which is comparable to the results of more complex cognitive tests. The algorithm could therefore be integrated into smart speakers or other home devices to track signs of MCI during everyday use.

The results of this study appear promising because cognitive tests are demanding for patients, who have to follow difficult instructions under high mental stress. As a result, such assessments are carried out infrequently, which can delay the detection of early signs of Alzheimer's disease. Analyzing everyday speech allows assessments to be carried out much more often and with fewer resources.

For the study, speech responses were collected from 76 older Japanese adults, including some with MCI. The scientists then analyzed features of their speech, such as the pitch of the voice and the frequency of pauses during conversation.
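To make the idea of a "pause frequency" feature concrete, here is a minimal sketch of how pauses might be counted in a recorded answer. It is not the researchers' method: the function names, the 20 ms frame size, the energy threshold, and the 200 ms minimum pause length are all assumptions chosen for illustration.

```python
def frame_energies(samples, frame_len):
    """Mean squared amplitude of each non-overlapping frame."""
    return [
        sum(s * s for s in samples[i:i + frame_len]) / frame_len
        for i in range(0, len(samples) - frame_len + 1, frame_len)
    ]


def pause_features(samples, sample_rate, frame_ms=20,
                   threshold=1e-4, min_pause_ms=200):
    """Count pauses: runs of low-energy frames lasting at least min_pause_ms.

    All default values are illustrative assumptions, not values from the study.
    """
    frame_len = int(sample_rate * frame_ms / 1000)
    energies = frame_energies(samples, frame_len)
    min_frames = max(1, min_pause_ms // frame_ms)

    pauses = []  # pause durations in seconds
    run = 0      # length of the current run of quiet frames
    for energy in energies:
        if energy < threshold:
            run += 1
        else:
            if run >= min_frames:
                pauses.append(run * frame_ms / 1000)
            run = 0
    if run >= min_frames:  # recording ended during a pause
        pauses.append(run * frame_ms / 1000)

    duration = len(energies) * frame_ms / 1000
    return {
        "pause_count": len(pauses),
        "total_pause_s": sum(pauses),
        "pauses_per_s": len(pauses) / duration if duration else 0.0,
    }
```

A real system would likely use a proper voice activity detector and a pitch tracker rather than a fixed energy threshold; the sketch only shows the kind of number such an analysis produces.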

The research team knew that identifying subtle cognitive differences in everyday speech, when cognitive load is low, is not easy. Under such conditions, the differences in speech between healthy people and those with MCI are less noticeable than in cognitive tests.

Scientists at IBM and the University of Tsukuba addressed this challenge by combining responses to multiple questions to identify changes in the ability to remember information and perform goal-directed actions, as well as changes in speech function itself, that indicate MCI and dementia. For example, the AI application asks, "What did you eat for dinner yesterday?" An older person with MCI might answer, "Japanese noodles with tempura - a tempura made from shrimp, radish, and mushrooms."
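The article does not say how the answers to different questions were combined. One simple possibility, shown here purely as an assumption, is to average each speech feature across all of a participant's answers so that every person is described by a single set of numbers. The function name and the feature names are hypothetical.

```python
def combine_responses(per_question_features):
    """Average each named feature across one participant's answers.

    per_question_features: list of dicts, one per answered question,
    mapping a feature name to a number. Averaging is an illustrative
    assumption, not the combination method used in the study.
    """
    names = sorted({name for feats in per_question_features for name in feats})
    combined = {}
    for name in names:
        # Use only the answers where this feature was measured.
        values = [feats[name] for feats in per_question_features if name in feats]
        combined[name] = sum(values) / len(values)
    return combined


# Hypothetical feature values for two everyday questions.
answers = [
    {"pause_count": 2, "mean_pitch_hz": 180.0},
    {"pause_count": 4, "mean_pitch_hz": 200.0},
]
profile = combine_responses(answers)
```

The resulting per-person profile could then be passed to any standard classifier; which model the researchers actually used is not stated here.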

At first glance, the answer seems fine. But the AI detects differences in pitch, speech pauses, and other acoustic characteristics. The researchers found that, compared with cognitive tests, everyday questions reveal less obvious but still statistically significant differences in speech patterns that indicate MCI. The accuracy of detecting mild cognitive impairment using this AI model was 86.4%, which is comparable to the accuracy of cognitive tests.
