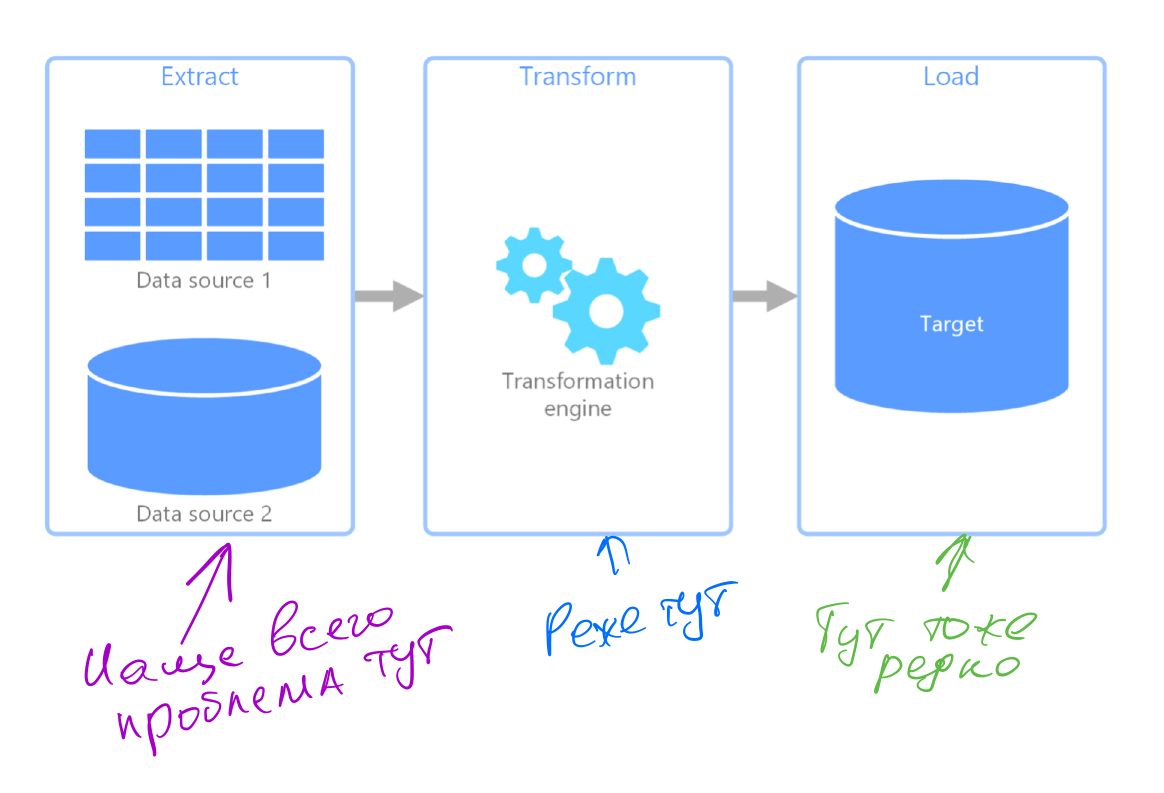

For the past few years I have been doing data engineering: I build pipelines of varying complexity, extract the data the business needs, transform it, and store it. In short, I build classic ETLs.

In this work, problems can come from anywhere and at any step: the data source is down, the data arrived broken, the source changed its data format or access rules without warning, the storage is slow, the data volume suddenly dropped or spiked, and plenty of other fun.

To understand how to monitor all this, let's figure out who actually consumes the data we worked so hard to extract:

Business is everyone who isn't particularly strong in engineering but makes important decisions based on the data we deliver: whether to increase ad spend, how fast to grow the audience for an update rollout, how various partners are performing, and so on.

Techies are us, the engineers: we can dig into the logs, refine the logic, and make sure nothing goes down unnecessarily.

Code is the next consumer in the chain: the next pipeline starts, transformations run, charts get drawn, and so on.

Data pipelines can differ somewhat from a classic backend: if one of the pipelines has failed, it doesn't mean everything is broken and needs to be fixed right away, because the data may stay relevant for some time. You might object that a backend can also fail partially, and that's true, but in this context I treat the backend and the data pipeline each as a single logical entity rather than a set of redundant microservices and replicated databases.

Here are some examples:

The pipeline runs every 10 minutes, and people look at the reports about once an hour on average. A single failed job is not critical, and if the data source is down, there's nothing you can do about it anyway.
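To make this example concrete, here is a minimal sketch of a freshness check in Python. It assumes that what matters is the age of the last successful run rather than the status of any single run. The function name `is_stale` and the one-hour threshold are my own illustrative choices, not part of any tool.

```python
from datetime import datetime, timedelta


def is_stale(last_success: datetime, now: datetime, max_age: timedelta) -> bool:
    """Return True when the newest successful run is older than readers can tolerate."""
    return now - last_success > max_age


# Assumption taken from the example: the pipeline runs every 10 minutes,
# and the reports are read roughly once an hour.
MAX_AGE = timedelta(hours=1)

now = datetime(2024, 1, 1, 12, 0)
one_missed_run = now - timedelta(minutes=20)  # a single job failed
long_outage = now - timedelta(hours=2)        # nothing has succeeded for two hours

print(is_stale(one_missed_run, now, MAX_AGE))  # False: the data is still usable
print(is_stale(long_outage, now, MAX_AGE))     # True: time to wake someone up
```

With a check like this, one failed job stays quiet, and an alert fires only once the data has been out of date for longer than its readers would notice.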

, API ( Apple), , , . , , , , , - - , .

- - , : - , , .

, Airflow , , , .. , ...

:

Airflow ELK , , .

, , , , . , , .

, . , .. , , . , - (, ).

:

, .

, .

, , , .

, , , , , , . , , , Sensorpad.

?

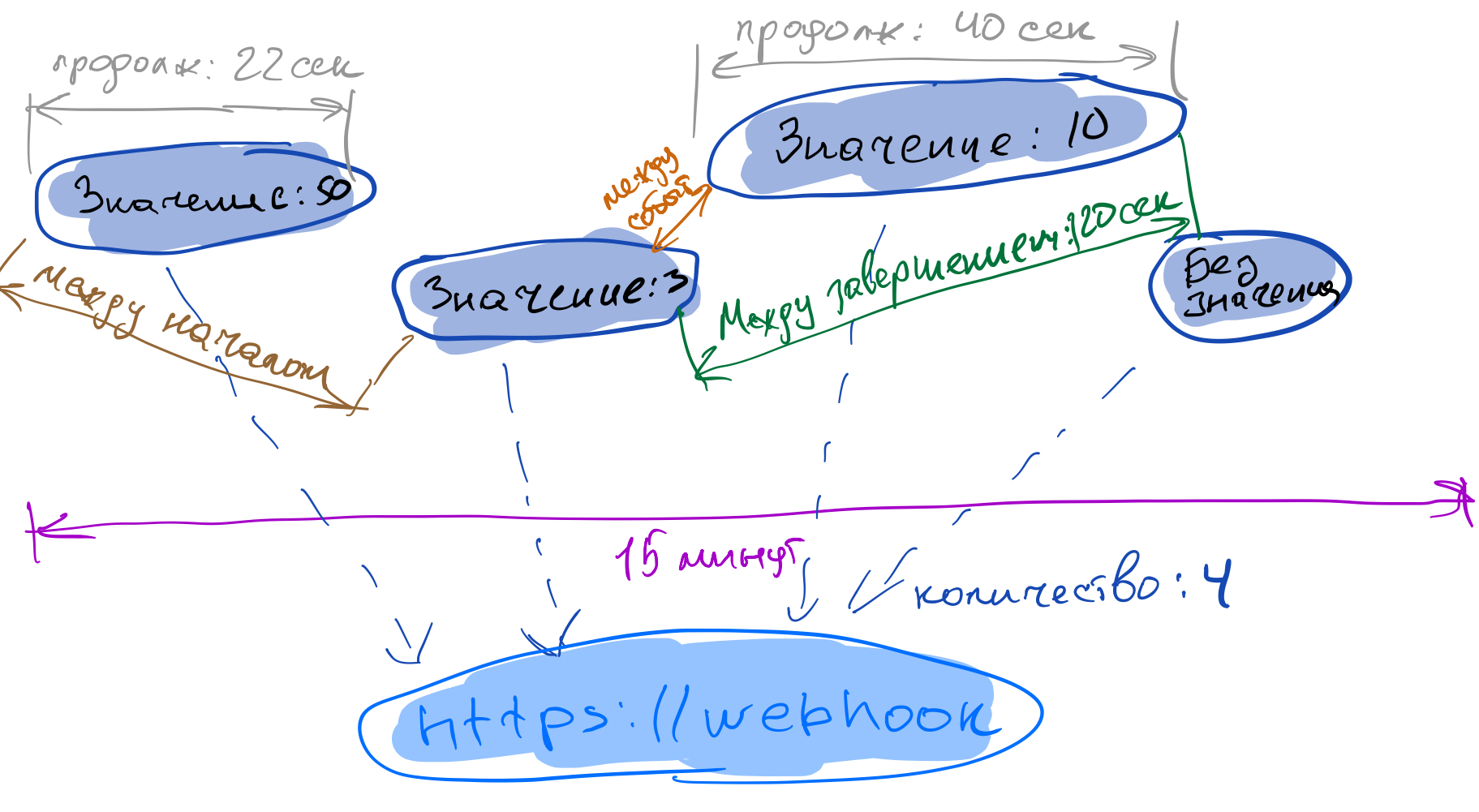

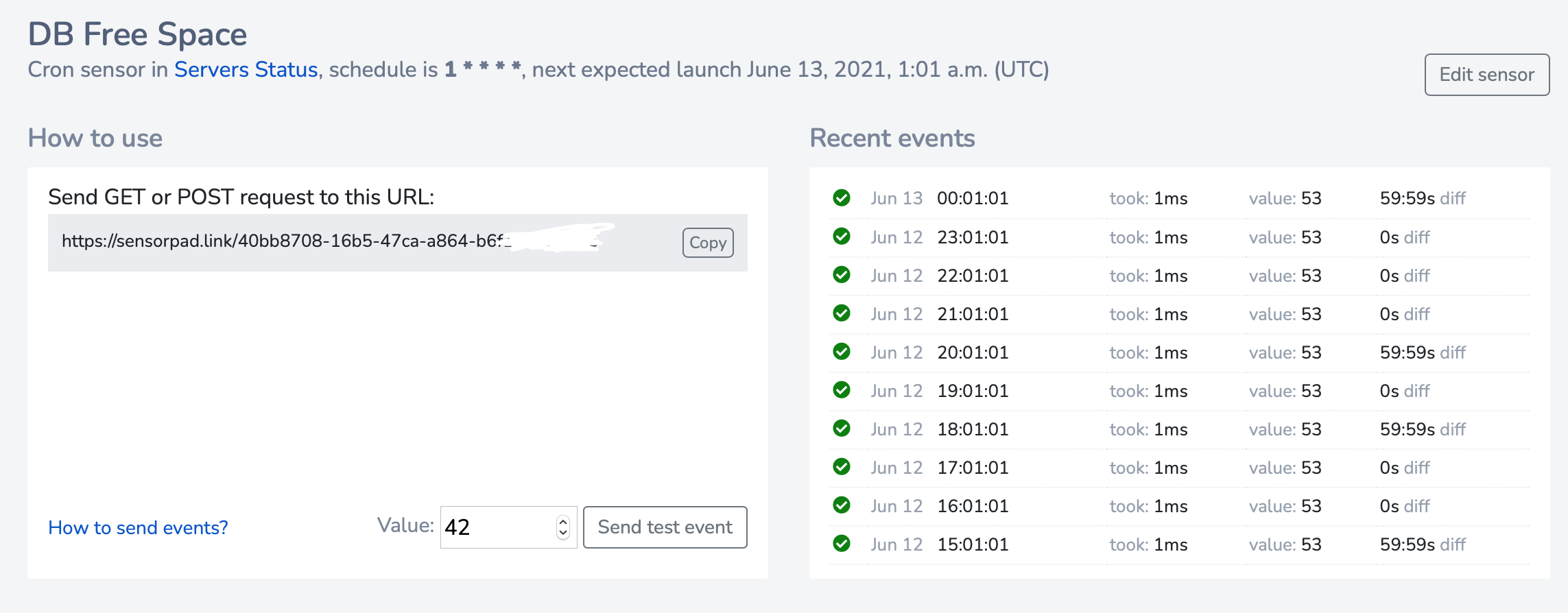

: , , http- . , , .

, , , ( ):

10 ?

( , > 0, ) 15% ?

, 20 ?

?

?

, , , .

, - , , - Nomadlist, - :

- , , .

-, , -, , . , , , , , .

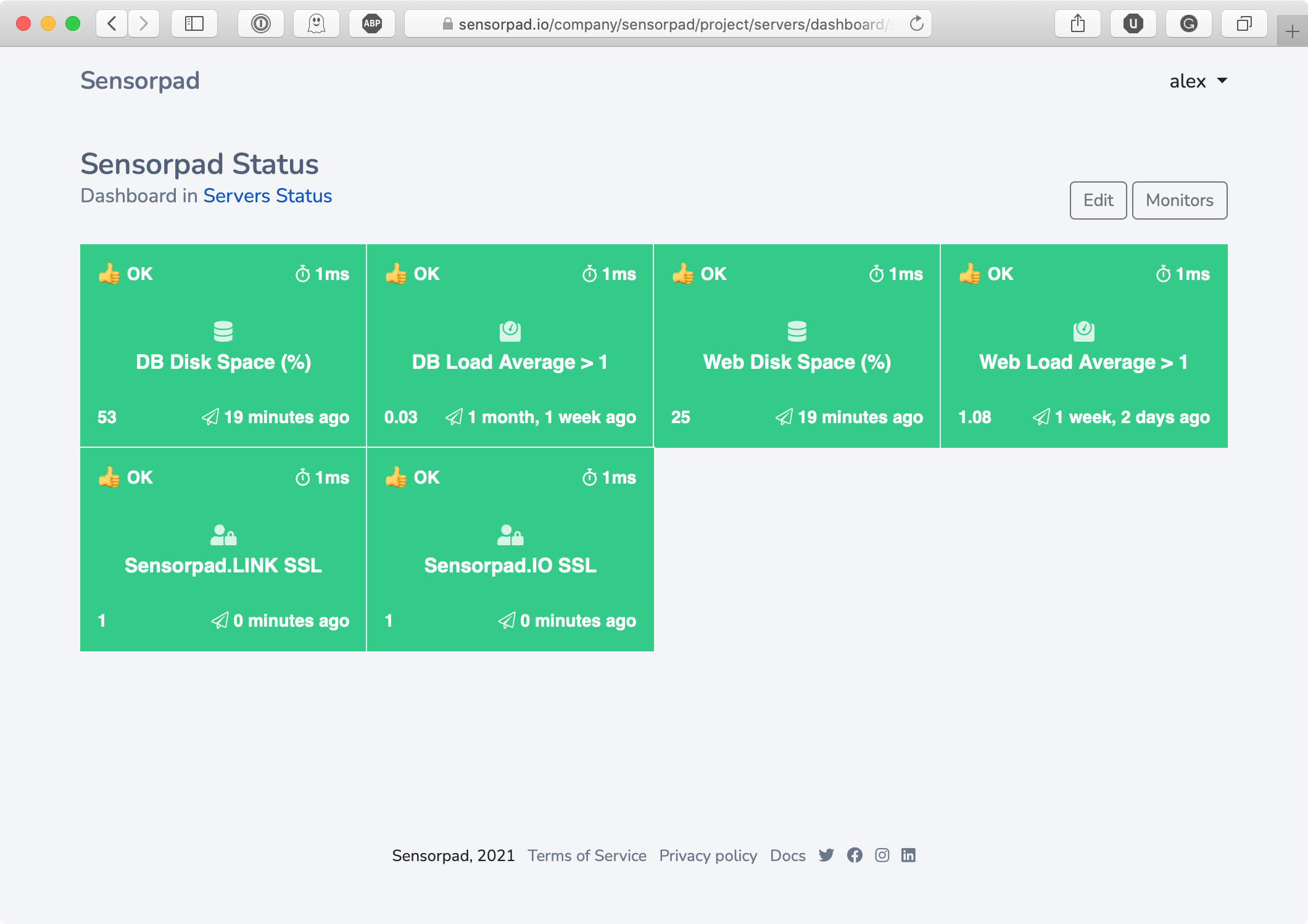

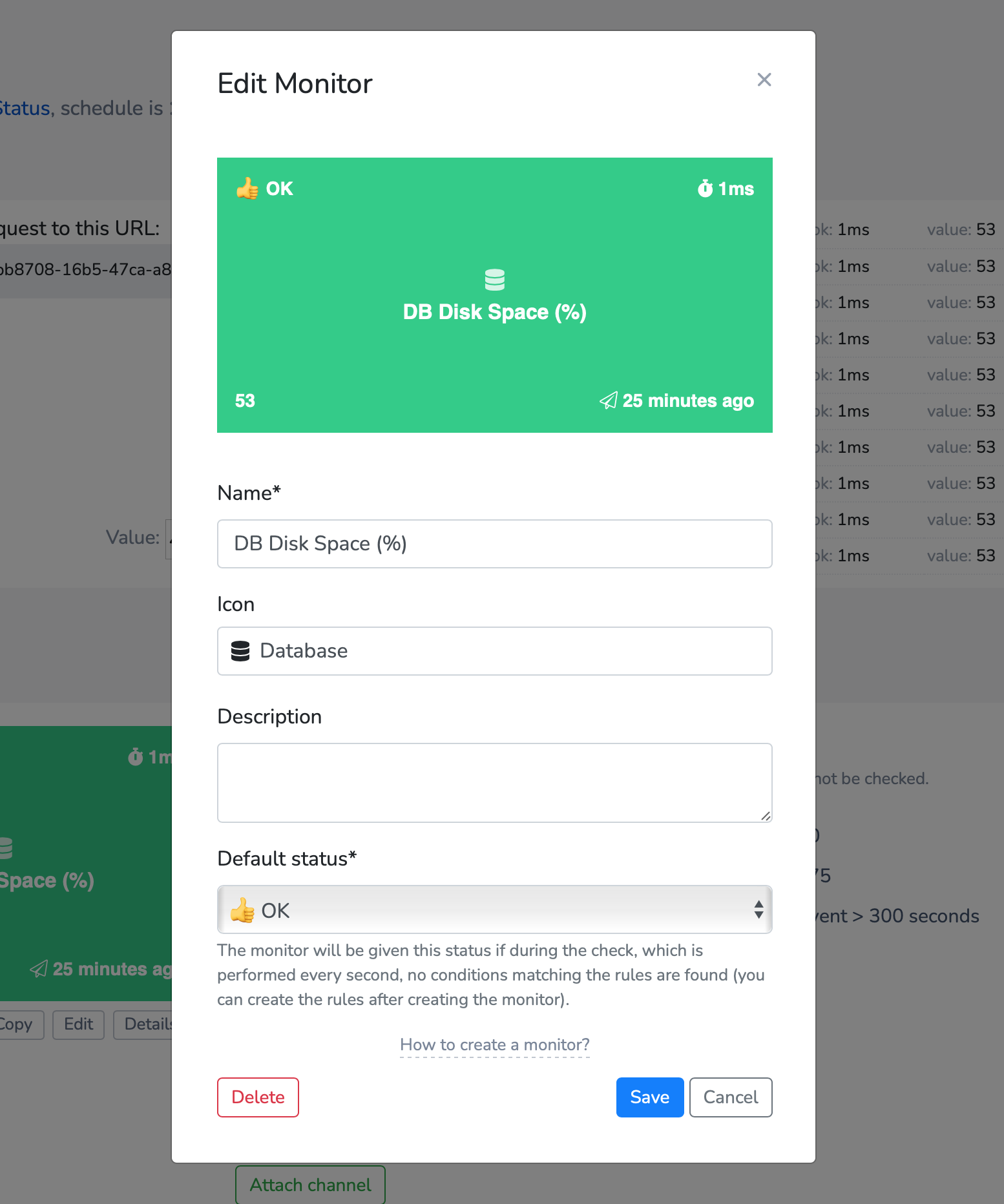

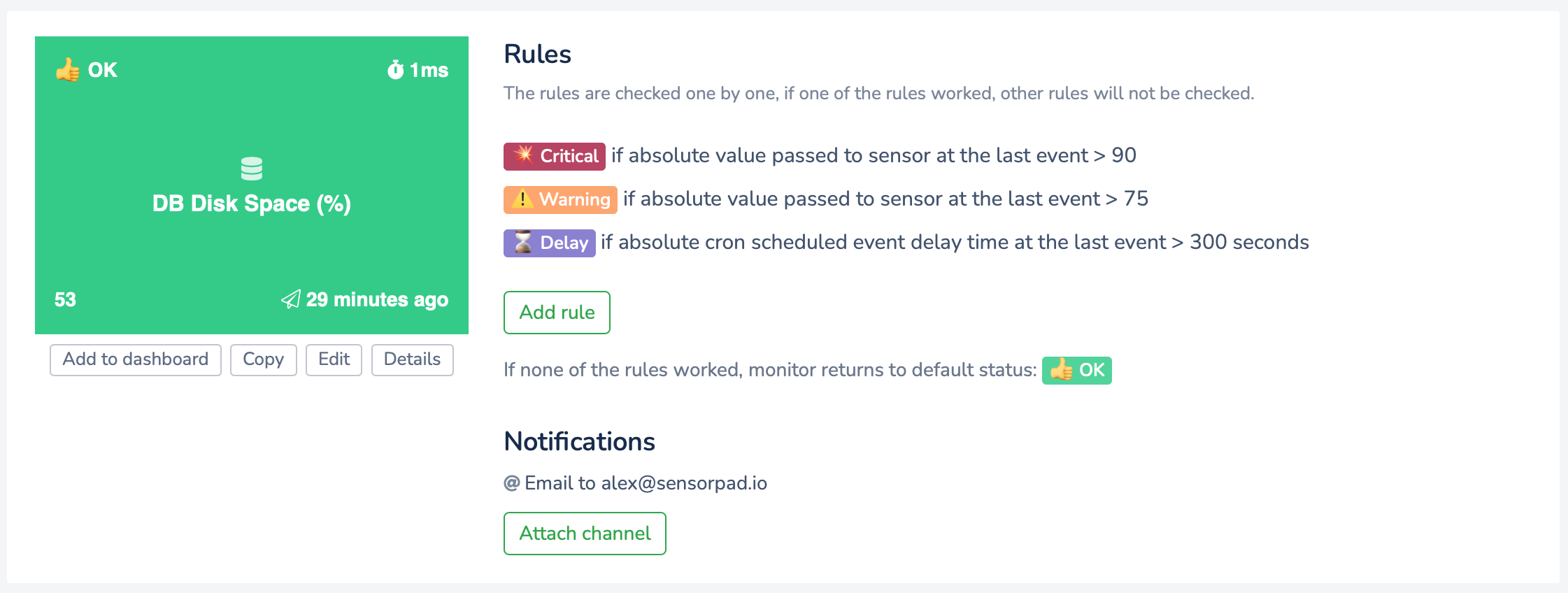

:

( , -);

, 25 ;

( 53 - );

:

;

;

;

, , , -, - .

?

, , .

- , :

df -h | grep vda1 | awk '{ print $5 }' | sed 's/.$//' | xargs -I '{}' curl -G "https://sensorpad.link/< ID>?value={}" > /dev/null 2>&1
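If the check runs inside a pipeline task rather than from the shell, the same idea can be sketched in Python. This is only an illustration. The URL shape is copied from the one-liner above, `YOUR_SENSOR_ID` is a hypothetical placeholder, and the helper names are mine. All I assume about the endpoint is that it accepts a GET request with a `value` query parameter, as the curl call suggests.

```python
import shutil
from urllib.parse import urlencode
from urllib.request import urlopen

SENSOR_ID = "YOUR_SENSOR_ID"  # hypothetical placeholder, substitute your own sensor ID


def used_percent(path: str = "/") -> int:
    """Used space on the filesystem holding `path`, as a whole percentage.

    Computed as used / total, so it may differ slightly from the Use% column of df.
    """
    usage = shutil.disk_usage(path)
    return round(usage.used / usage.total * 100)


def sensor_url(sensor_id: str, value: int) -> str:
    """Build the same URL shape the shell one-liner passes to curl."""
    return f"https://sensorpad.link/{sensor_id}?{urlencode({'value': value})}"


def report(path: str = "/", sensor_id: str = SENSOR_ID, timeout: float = 10.0) -> None:
    """Send the current disk usage as a GET request and ignore the response body."""
    with urlopen(sensor_url(sensor_id, used_percent(path)), timeout=timeout):
        pass


# Show what would be sent without touching the network.
print(sensor_url(SENSOR_ID, used_percent("/")))
```

Calling `report()` from a scheduled task then does the job of the cron one-liner. Keeping the URL builder separate from the request makes the value easy to inspect before anything goes over the wire.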

, : ( , , , , )

, .

.

.

?

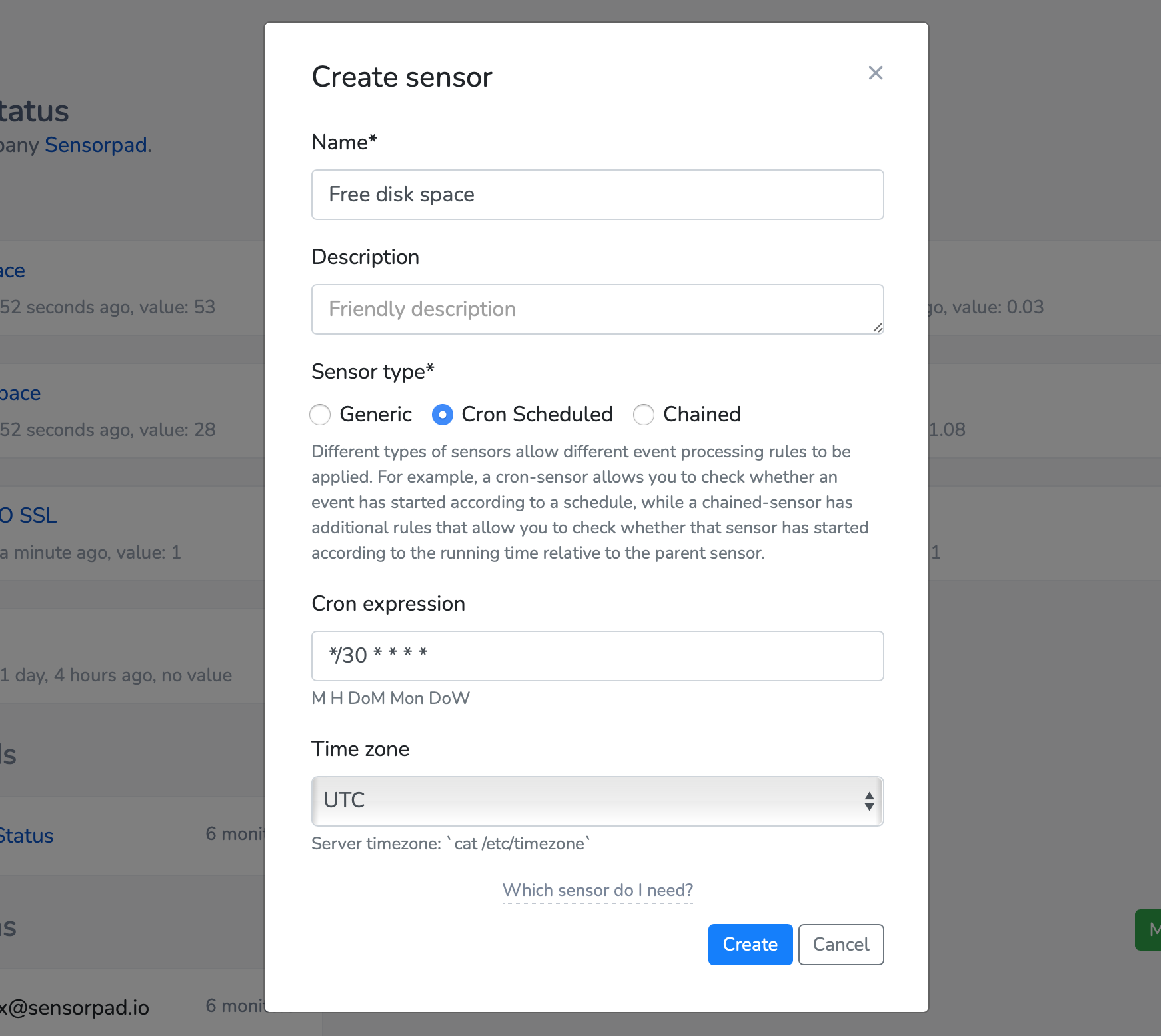

:

, 80% ;

cron-, cron- ;

chain-, , ;

, ( js) , , Curl :

- , .

: , " ", "- ", " ", , - .

, , - : .

, . , True, . , - , .

, .

, : " Warning, 5 , 10 ".

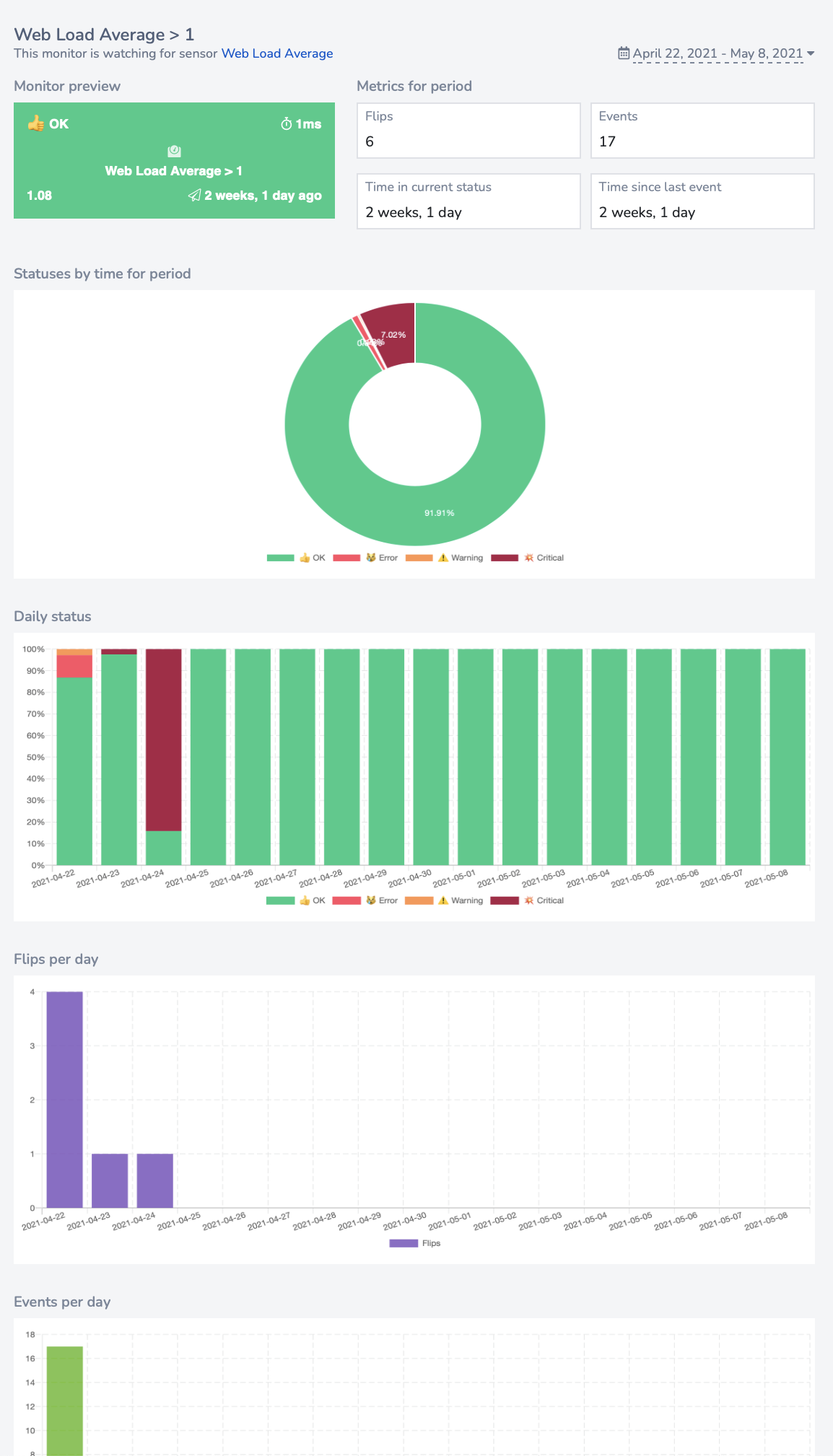

:

?

. - . - cron jobs, , ( , , ):

Cron job, Airflow DAG ;

20% ;

2 ;

1 (, );

2 ( );

20 ( 5, - ).

, , , .

- !

. sensorpad , , (, , ). : , , .

I'm thinking of adding the ability to share these pages outside the account via a secret link, so that such a page can serve just as well as any status page.

That's the concept. What's missing?

Sensorpad is a weekend project built in my free time outside my main job, without a ton of investment and by just one person, which is why the community's opinion matters so much to me: how do you like it? What should I add or improve, and is it worth developing further?

Try it live, and while you're at it see what kind of amateur landing page designer I make: https://sensorpad.io