A recent article on the importance of using linear algorithms inspired me to optimize the "hot" quadratic function, how I did it, and what results it led to - I'll tell you today. Brew Pu Er in a cup, sit back in your chair:

▍Everything - JavaScript

… In essence, there is no happiness, there is only the consciousness of happiness. Or, in other words, there is only consciousness.At the beginning of this year's

© Victor Pelevin "Yellow Arrow"

js(x)

,

ts(x)

and

flow

files, but

markdown

,

yaml

,

ignore

,

json

and any others that implement the interface engine.

The processor takes the

JavaScript

-code from the files, and glues everything back together, for example, in the Markdown processor .

Either converts

json

,

yaml

and

ignore

in

JavaScript

for finding and correcting errors plugins .

The processor interface looks like this:

process(rawSource, {fix}) -> [processedSource, processedPlaces]

- returns the processed source code or found errors in accordance with the flagfix

;preProcess(processedSource) -> preProcessedList

- withdrawsJavaScript

fromprocessedSource

for verification and correction;postProcess(processedSource, preProcessedList) -> processedSource

- glues the correctedJavaScript

;

The heart of Putout consists of 4 parts (engines):

- The parser translates a string into

AST

andAST

back into a string; - The loader finds and loads plugins (including for

Babel

), and code modsjscodeshift

- The performer recognizes and processes all kinds of plugins (now there are 4 of them);

- The processor controls the operation of the previous trinity;

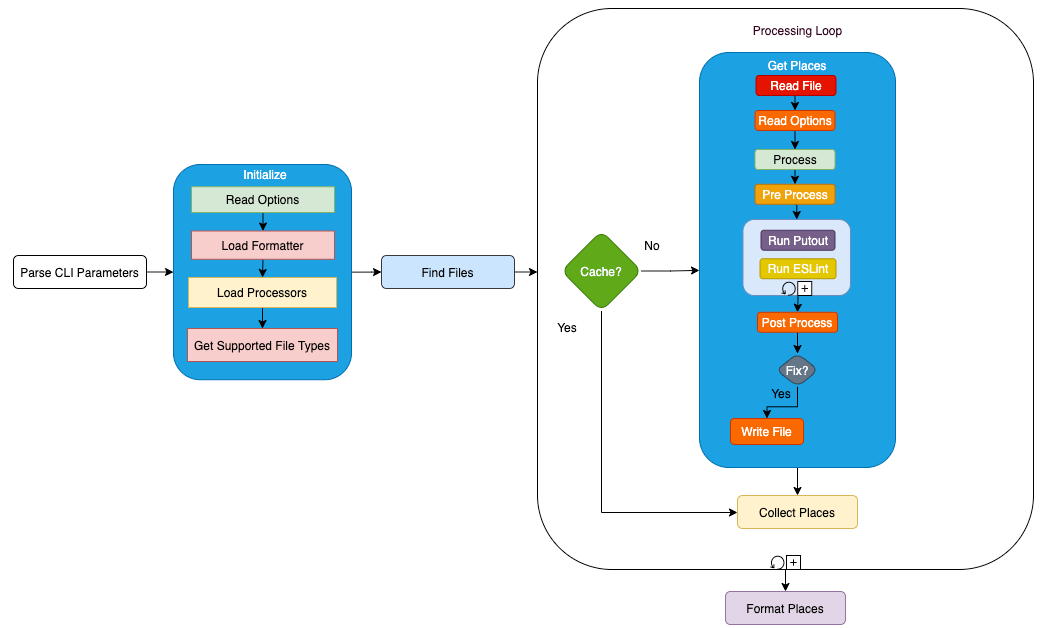

The scheme of work may look like this:

▍We are looking for "hot" functions

The thing is that we are constantly going on a journey that ended a second before we had time to leave.In accordance with Pareto's law : 20% of correctly directed efforts will give us 80% of the result, so the first thing it always makes sense to find the “hottest” functions and work with them.

© Victor Pelevin "Yellow Arrow"

First, get the

isolate

-file in

node v14

using the --prof key , run it from the root of the repository:

node --prof packages/putout/bin/putout.mjs --fresh .

Then we will process it using the key

--prof-process

:

node --prof-process isolate-0x1049e5000-86428-v8.log > processed.txt

Looking through the information that pertains to

engine-processor

in,

processed.txt

we notice the line:

334 93.6% LazyCompile: *module.exports.runProcessors /Users/coderaiser/putout/packages/engine-processor/lib/processor.js:26:32

Yeah, the Processor engine fulfills 334 clock cycles and 93.6% of calls when the parent function is called.

▍A little about Big O

The past is the locomotive that pulls the future with it. It happens that this is the past, in addition to someone else's. You ride with your back forward and you see only what has already disappeared. And to get off the train, you need a ticket. You hold it in your hands, but who will you present it to?For a function

© Victor Pelevin "Yellow Arrow"

fn

that takes as input

data

and returns

result

:

const result = fn(data);

Big O provides information about the most pessimistic scenario.

The execution time

fn

can be obtained using a function

complexity

, it takes as input

operationsCount

:

const time = complexity(operationsCount);

Time is expressed in a letter

n

, it is more convenient than using numbers, since there are many of them, but their meaning is not particularly important (we cut off Occam's Razor ): technology is changing, new, more productive computers are replacing weak computers. What really matters is the ability to compare algorithms . And it exists! This is Big O , and here are its simplest examples:

- O(1) — , , . , , :

time = complexity(2 + n); O(1); const fn = (name) => { const str = `hello ${name}`; return str; }

- O(n) — , , . 10 , 100 :

time = complexity(n * 10); O(n); const fn = (files) => { const results = []; for (const file of files) { results.push(processFile(file)); } return results; }

- O(n^2) — , , . ,

n

.runners

5 ,run

10 , :

time = complexity(5 * 10); O(n * n); function process(runners) { const processed = []; for (const run of runners) { const files = run(); for (const file of files) { processed.push(processFile(file)); } return processed; }

▍Optimize

- Remember, when a person stops hearing the sound of wheels and agrees to go further, he becomes a passenger.A simplified version of starting the Processor Engine contains a loop in a loop, that is

- Nobody asks us whether we agree or not. We don't even remember how we got here. We just drive and that's it. Nothing remains.

- The most difficult thing in life remains. Ride on a train and not be a passenger.

© Victor Pelevin "Yellow Arrow"

, it looks like this:

function process(runners) {

const processed = [];

for (const run of runners) {

const processed = run();

for (const file of files) {

processed.push(processFile(file));

}

return processed;

}

The simplified, revised version contains two consecutive loops, and

looks like this:

function process(runners) {

const processed = [];

for (const run of runners) {

files.push(...run());

}

for (const file of files) {

processed.push(processFile(file));

}

return processed;

}

And ideally , functions with loops are taken out and called from the main function:

function process(runners) {

const files = getFiles(runners);

const linted = lintFiles(files);

return linted;

}

function getFiles(runners) {

const files = [];

for (const run of runners) {

files.push(...run());

}

return files;

}

function lintFiles(files) {

const linted = [];

for (const file of files) {

linted.push(processFile(file));

}

return linted;

}

▍ We take measurements

This whole world is a yellow arrow that hit you, a train on which you go to the destroyed bridge.For taking measurements, I used

© Victor Pelevin "Yellow Arrow"

time

. This is not the most accurate way, since the execution time can vary greatly on different computers, however, within the same system, the + time is the same and the difference between most of the runs is not very significant. For example, on a Mac, the execution time is two times less than on a remote Linux, in accordance with the characteristics, your time may differ.

When I write, I

putout

often run checks (already more than) 1800 files, and one and a half minutes may not seem like a lot, but if you compare it with the execution time of 3000 tests in 15 seconds, it becomes clear: there is room to grow! Thus, we will select a tag

v17.5.0

and launch a fresh lint using redrun:

git checkout v17.5.0 && time redrun fresh:lint

> putout . --fresh

real 1m32.712s

user 1m25.502s

sys 0m6.542s

git checkout master && time redrun fresh:lint

> putout . --fresh

real 1m20.898s

user 1m13.502s

sys 0m6.542s

We are interested in the first lines: minute 33 seconds, and minute 20 seconds - it became faster by 13 seconds, this is about 12% to them, we add a measurement after optimization to the ideal version and we get all 13%.

Repeating the search for "hot" functions, we get the following numbers:

73 54.9% LazyCompile: *module.exports.runProcessors /Users/coderaiser/putout/packages/engine-processor/lib/processor.js:26:32

The number of cycles worked are 4 times less, and the percentages have decreased from 93% to 54%, which is not a bad thing in itself. I would be grateful if someone in the comments expands the information about the data that the profiler saves to the file.

▍ Far reaching conclusions

, , , , , , “Fleetwood Mac”. , , . , , .

© « »

As a result of optimization, the code became not only faster to process, but also easier to understand, and therefore more maintainable and extensible. Therefore, I boldly declare: optimization of "hot" spots is the root of all blessings!

UPDATE Apparently I made a mistake in calculating the algorithmic complexity and what I call the linear algorithm is O (n * m) , thanks a lot @Yavanosta,faiwer, nvgordeev ! , , , Big O - . , V8.

▍

, , . , . : , , , . ? . , – ? , ».

© « »