What are Model Ensembles?

As the name suggests, an ensemble is simply several machine learning algorithms combined. The approach is used to amplify the strengths of individual algorithms: each of them may perform poorly on its own, but together, as an ensemble, they give a good result. In an ensemble the models are trained together (in parallel or one after another) and can compensate for each other's mistakes. Typical methods for combining "weak" learners into a strong one are (Fig. 1):

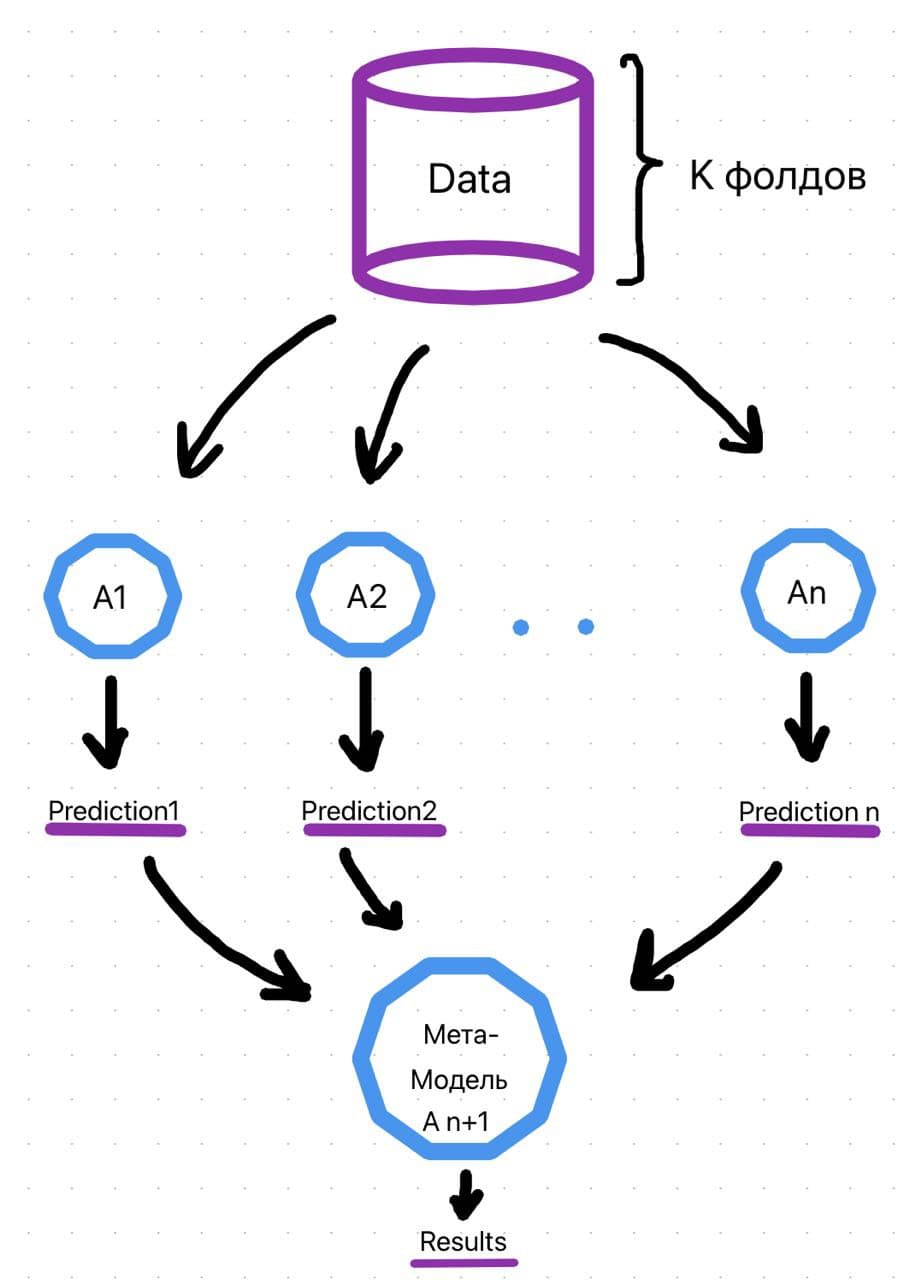

Stacking. The individual models may be of different types. A meta-model takes the predictions of the base models as input and outputs the final prediction.

Bagging. Models of the same type are trained independently and in parallel, each on its own random sample of the data, and their results are then simply averaged. The best-known example of this method is the random forest.

Boosting. Models of the same type are trained sequentially, and each new model tries to correct the errors of the previous ones. The obvious example here is gradient boosting.

These three methods will be discussed in more detail below.
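Why can a group of weak models beat each of its members? The sketch below gives a simple numeric illustration. It assumes, unrealistically, that the models make their errors independently and that their predictions are combined by majority vote. `majority_vote_accuracy` is a hypothetical helper written for this illustration.

```python
from math import comb


def majority_vote_accuracy(p: float, m: int) -> float:
    """Probability that a majority of m independent classifiers is right,
    if each one is right with probability p (m is assumed to be odd)."""
    need = m // 2 + 1  # smallest number of correct votes that wins
    return sum(comb(m, k) * p**k * (1 - p) ** (m - k) for k in range(need, m + 1))


for m in (1, 3, 11, 25):
    print(m, round(majority_vote_accuracy(0.7, m), 3))
```

With p = 0.7 and three models, the vote is correct with probability 0.7³ + 3·0.7²·0.3 = 0.784, and the accuracy keeps growing with m. If p < 0.5 the effect reverses. Real models trained on the same data make correlated errors, so the gain is smaller; bagging and random forests deliberately inject randomness to reduce that correlation.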

Stacking

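In Python these methods are available in the sklearn.ensemble module of scikit-learn, which implements AdaBoost, Bagging, GradientBoosting and Stacking (as AdaBoostClassifier, BaggingClassifier, GradientBoostingClassifier and StackingClassifier, plus their regressor counterparts).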

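The idea of stacking is to train several different base models on the same data, for example a support vector machine (SVM) and k-nearest neighbors (KNN). A meta-model is then trained on their predictions and produces the final answer.

Training proceeds as follows (Fig. 2):

First, the training set is split into k parts (folds), as in cross-validation.

Then, for each fold, every base model is trained on the other k-1 folds and makes predictions for the held-out fold. As a result, every training object receives a prediction from a model that did not see it during training.

These out-of-fold predictions of the base models become the input features of the meta-model, which is trained on them to predict the true target.

Finally, the base models are retrained on the whole training set, and at prediction time their outputs are passed to the meta-model.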
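The four steps above can be sketched in plain Python. The sketch makes several assumptions that are not from the article: a one-dimensional regression task, two toy base models (a least-squares line and 1-nearest-neighbour, the KNN idea with k = 1), and a linear meta-model without intercept. Every function name here is hypothetical. Folds are formed by taking every k-th object, without shuffling.

```python
from typing import Callable, List, Sequence, Tuple

Predictor = Callable[[float], float]


def fit_linear(xs: Sequence[float], ys: Sequence[float]) -> Predictor:
    """Base model 1: ordinary least-squares line y = a + b*x."""
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx if sxx else 0.0
    intercept = my - slope * mx
    return lambda x: intercept + slope * x


def fit_1nn(xs: Sequence[float], ys: Sequence[float]) -> Predictor:
    """Base model 2: 1-nearest-neighbour regression (KNN with k = 1)."""
    pairs = list(zip(xs, ys))
    return lambda x: min(pairs, key=lambda p: abs(p[0] - x))[1]


def out_of_fold(fit, xs, ys, k: int) -> List[float]:
    """Steps 1-2: predict every object with a model that never saw it."""
    n = len(xs)
    preds = [0.0] * n
    for start in range(k):
        held_out = set(range(start, n, k))  # fold = every k-th object
        train = [i for i in range(n) if i not in held_out]
        model = fit([xs[i] for i in train], [ys[i] for i in train])
        for i in held_out:
            preds[i] = model(xs[i])
    return preds


def fit_meta(p1, p2, ys) -> Tuple[float, float]:
    """Step 3: meta-model y ~ w1*p1 + w2*p2, least squares via 2x2 normal equations."""
    a11 = sum(a * a for a in p1)
    a12 = sum(a * b for a, b in zip(p1, p2))
    a22 = sum(b * b for b in p2)
    b1 = sum(a * y for a, y in zip(p1, ys))
    b2 = sum(b * y for b, y in zip(p2, ys))
    det = a11 * a22 - a12 * a12
    return (b1 * a22 - b2 * a12) / det, (a11 * b2 - a12 * b1) / det


def stack(xs, ys, k: int = 5) -> Predictor:
    base_fits = [fit_linear, fit_1nn]
    p1, p2 = (out_of_fold(f, xs, ys, k) for f in base_fits)
    w1, w2 = fit_meta(p1, p2, ys)
    # Step 4: refit the base models on all data; the meta-model combines their outputs
    m1, m2 = (f(xs, ys) for f in base_fits)
    return lambda x: w1 * m1(x) + w2 * m2(x)
```

The meta-model is fitted only on out-of-fold predictions. Otherwise it would learn to trust whichever base model memorises the training set best; 1-NN, for example, would look perfect on data it has already seen. On noise-free linear data the meta-model correctly puts all weight on the linear base model. scikit-learn's StackingClassifier and StackingRegressor follow the same out-of-fold scheme through their `cv` parameter.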

Fig. 2

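Bagging

Bagging (short for bootstrap aggregating) trains several models of the same type, each on its own random sample of the training data, and then combines their predictions. Because the samples differ, the models make somewhat different errors, and combining them smooths those errors out.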

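The samples are generated with the bootstrap. From the original training set of n objects, n objects are drawn uniformly at random with replacement, so some objects appear several times and others not at all. On average, such a sample contains only about 0.632*n distinct objects. Repeating the procedure m times gives m bootstrap samples, on which m models are trained.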
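The figure 0.632 follows from a short calculation. Each of the n independent draws misses a given object with probability 1 - 1/n, so:

```latex
P(\text{a given object is never drawn}) = \left(1 - \frac{1}{n}\right)^{n}
  \xrightarrow[n \to \infty]{} e^{-1} \approx 0.368,
\qquad
\mathbb{E}\left[\#\text{distinct objects}\right]
  = n\left(1 - \left(1 - \frac{1}{n}\right)^{n}\right)
  \approx \left(1 - e^{-1}\right) n \approx 0.632\,n .
```

The roughly 36.8% of objects left out of a given sample are called "out-of-bag". They can serve as a free validation set for the model trained on that sample.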

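The predictions of the m models are then combined: averaged for regression, or put to a majority vote for classification.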

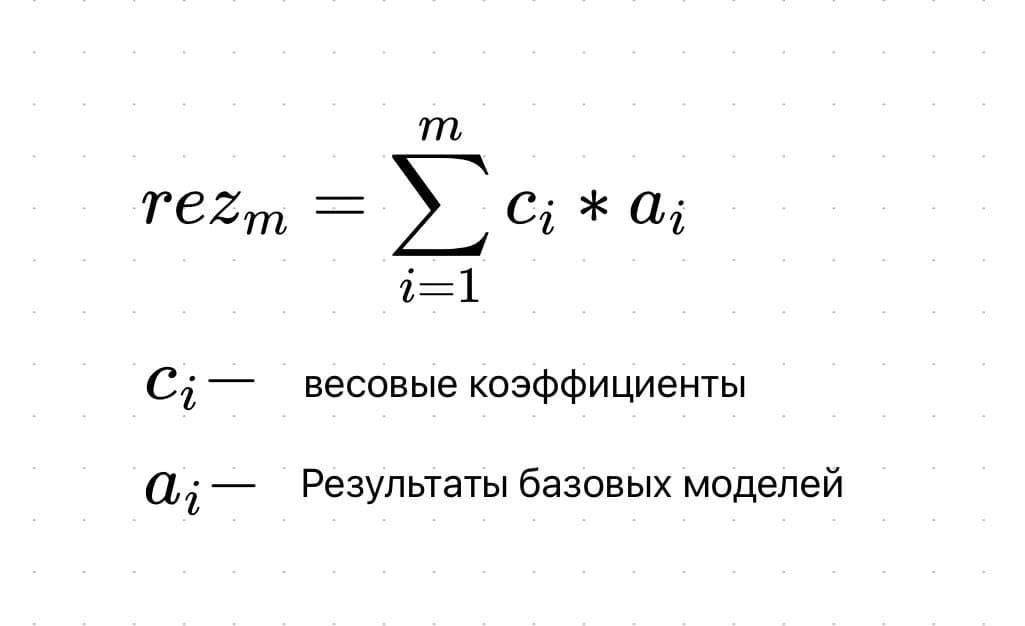

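For regression, the final ensemble is therefore (Fig. 3)

$$a(x) = \frac{1}{m}\sum_{i=1}^{m} a_i(x),$$

where $a_i$ is the model trained on the i-th bootstrap sample.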
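A minimal sketch of bagging for one-dimensional regression, using only the standard library. The base model a_i is assumed here to be 1-nearest-neighbour regression. It was chosen because it is unstable: small changes in the data change its predictions, and that is the kind of model bagging is meant to help. The function names are hypothetical.

```python
import random
from typing import Callable, List, Sequence, Tuple

Point = Tuple[float, float]  # (x, y)


def bootstrap_sample(points: Sequence[Point], rng: random.Random) -> List[Point]:
    """Draw len(points) objects uniformly at random WITH replacement."""
    return rng.choices(points, k=len(points))


def fit_1nn(points: Sequence[Point]) -> Callable[[float], float]:
    """Base model a_i: 1-nearest-neighbour regression, a deliberately unstable learner."""
    pts = list(points)
    return lambda x: min(pts, key=lambda p: abs(p[0] - x))[1]


def bagging(points: Sequence[Point], m: int = 25, seed: int = 0) -> Callable[[float], float]:
    rng = random.Random(seed)
    # one model per bootstrap sample; the models are independent of each other
    models = [fit_1nn(bootstrap_sample(points, rng)) for _ in range(m)]
    # a(x) = (1/m) * sum_i a_i(x)
    return lambda x: sum(a(x) for a in models) / m


pts = [(float(x), 2.0 * x + 1.0) for x in range(20)]
ensemble = bagging(pts, m=25, seed=0)
print(ensemble(5.0))
```

Because the m models are independent, they could be trained in parallel. Replacing `fit_1nn` with a stable learner such as a straight-line fit would change the averaged prediction very little. This is why bagging is usually paired with high-variance models such as deep decision trees.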

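A random forest (Fig. 4) is bagging applied to decision trees, with one extra source of randomness: when choosing each split, a tree considers only a random subset of the features. This makes the individual trees less similar to each other, so their errors are less correlated and averaging them helps more (Fig. 5).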
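The sketch below shows the two sources of randomness in a random forest: a bootstrap sample per tree, and a random feature subset. It is a deliberate simplification. Each "tree" is a depth-1 decision stump, so choosing the feature subset once per tree is the same as choosing it at each split. The binary 0/1 labels, the error-count split criterion, and all names are assumptions of this sketch, not a description of any library's implementation.

```python
import random
from collections import Counter
from typing import Callable, List, Sequence, Tuple

Row = Tuple[Sequence[float], int]  # (feature vector, class label)


def fit_stump(rows: List[Row], features: Sequence[int]) -> Callable[[Sequence[float]], int]:
    """Best single split x[f] <= t over the allowed features, by number of errors."""
    best = None  # (errors, feature, threshold, left_label, right_label)
    for f in features:
        values = sorted({x[f] for x, _ in rows})
        # candidate thresholds: midpoints between consecutive distinct values
        for lo, hi in zip(values, values[1:]):
            t = (lo + hi) / 2
            left = [y for x, y in rows if x[f] <= t]
            right = [y for x, y in rows if x[f] > t]
            l_lab = Counter(left).most_common(1)[0][0]
            r_lab = Counter(right).most_common(1)[0][0]
            errors = sum(y != l_lab for y in left) + sum(y != r_lab for y in right)
            if best is None or errors < best[0]:
                best = (errors, f, t, l_lab, r_lab)
    if best is None:  # no split possible: predict the majority class
        label = Counter(y for _, y in rows).most_common(1)[0][0]
        return lambda x: label
    _, f, t, l_lab, r_lab = best
    return lambda x: l_lab if x[f] <= t else r_lab


def random_forest(rows: List[Row], n_trees: int = 15, max_features: int = 1, seed: int = 0):
    rng = random.Random(seed)
    n_features = len(rows[0][0])
    trees = []
    for _ in range(n_trees):
        sample = rng.choices(rows, k=len(rows))              # bootstrap, as in bagging
        feats = rng.sample(range(n_features), max_features)  # random feature subset
        trees.append(fit_stump(sample, feats))

    def predict(x: Sequence[float]) -> int:
        # classification: majority vote of the trees
        return Counter(tree(x) for tree in trees).most_common(1)[0][0]

    return predict
```

Real random forests grow deep trees and resample the candidate features at every split. The structure stays the same: bagging plus feature subsampling, followed by a vote or an average.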

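Boosting

In boosting, models are trained one after another rather than independently. Each new model pays more attention to the objects on which the current ensemble makes mistakes, so the ensemble improves step by step. The base models are usually simple ("weak"), for example shallow decision trees.

One of the first and best-known boosting algorithms is AdaBoost (Fig. 6).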

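For binary classification with labels $y_i \in \{-1, +1\}$, it works as follows:

All N training objects start with equal weights, $w_i = 1/N$.

At step t, a weak classifier $b_t$ is trained on the weighted data. Its weighted error and its weight in the ensemble are

$$\varepsilon_t = \sum_{i:\, b_t(x_i) \ne y_i} w_i, \qquad \alpha_t = \frac{1}{2}\ln\frac{1 - \varepsilon_t}{\varepsilon_t}.$$

The object weights are then updated so that misclassified objects become heavier, and normalized to sum to 1:

$$w_i \leftarrow w_i \exp\big(-\alpha_t\, y_i\, b_t(x_i)\big).$$

The final classifier is the weighted vote $a(x) = \operatorname{sign}\big(\sum_t \alpha_t b_t(x)\big)$.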
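A compact sketch of AdaBoost, following the steps above, with one-dimensional decision stumps as the weak classifiers. The stump form (predict s if x > t, else -s), the clamping of the error to avoid log(0), and all function names are assumptions of this sketch.

```python
import math
from typing import Callable, List, Sequence, Tuple


def fit_weighted_stump(xs: Sequence[float], ys: Sequence[int], w: Sequence[float]):
    """Weak classifier: predict s if x > t else -s, minimizing the weighted error."""
    values = sorted(set(xs))
    thresholds = [values[0] - 1.0] + [(a + b) / 2 for a, b in zip(values, values[1:])]
    best = (float("inf"), 0.0, 1)
    for t in thresholds:
        for s in (1, -1):
            err = sum(wi for xi, yi, wi in zip(xs, ys, w) if (s if xi > t else -s) != yi)
            if err < best[0]:
                best = (err, t, s)
    err, t, s = best
    return err, (lambda x, t=t, s=s: s if x > t else -s)


def adaboost(xs: Sequence[float], ys: Sequence[int], n_rounds: int = 10) -> Callable[[float], int]:
    n = len(xs)
    w = [1.0 / n] * n  # all objects start with equal weights
    ensemble: List[Tuple[float, Callable[[float], int]]] = []
    for _ in range(n_rounds):
        err, b = fit_weighted_stump(xs, ys, w)
        err = min(max(err, 1e-10), 1 - 1e-10)  # keep log() finite
        if err >= 0.5:  # no better than chance: stop
            break
        alpha = 0.5 * math.log((1 - err) / err)
        # mistakes (y*b(x) = -1) get heavier, correct answers lighter
        w = [wi * math.exp(-alpha * yi * b(xi)) for xi, yi, wi in zip(xs, ys, w)]
        total = sum(w)
        w = [wi / total for wi in w]
        ensemble.append((alpha, b))

    def predict(x: float) -> int:
        score = sum(alpha * b(x) for alpha, b in ensemble)
        return 1 if score >= 0 else -1

    return predict


xs = [1, 2, 3, 4, 5, 6]
ys = [1, 1, -1, -1, 1, 1]  # no single stump can separate this pattern
model = adaboost(xs, ys, n_rounds=3)
print([model(x) for x in xs])
```

In this toy example the best single stump still misclassifies a third of the points. After three rounds the weighted vote of three stumps classifies all six correctly, because each round concentrates the weights on the points the previous stumps got wrong.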

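Gradient boosting generalizes the idea of AdaBoost. Instead of reweighting objects, each new model is fitted to the antigradient of the loss function with respect to the current ensemble's predictions. For the squared loss this antigradient is simply the residual y - F(x), so each new model learns what the current ensemble still gets wrong.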
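A minimal sketch of gradient boosting for one-dimensional regression with the squared loss L = (y - F)²/2, whose antigradient is the residual y - F. The base models are assumed to be regression stumps. The learning rate (shrinkage) of 0.1 and all names are illustrative choices, not defaults of any particular library.

```python
from statistics import mean
from typing import Callable, List, Sequence


def fit_regression_stump(xs: Sequence[float], rs: Sequence[float]) -> Callable[[float], float]:
    """Least-squares stump: one constant on each side of a single threshold."""
    values = sorted(set(xs))
    best = None  # (sse, threshold, left_mean, right_mean)
    for lo, hi in zip(values, values[1:]):
        t = (lo + hi) / 2
        left = [r for x, r in zip(xs, rs) if x <= t]
        right = [r for x, r in zip(xs, rs) if x > t]
        lm, rm = mean(left), mean(right)
        sse = sum((r - lm) ** 2 for r in left) + sum((r - rm) ** 2 for r in right)
        if best is None or sse < best[0]:
            best = (sse, t, lm, rm)
    if best is None:  # all x identical: fall back to a constant
        c = mean(rs)
        return lambda x: c
    _, t, lm, rm = best
    return lambda x: lm if x <= t else rm


def gradient_boosting(xs, ys, n_rounds: int = 50, learning_rate: float = 0.1):
    f0 = mean(ys)  # start from the best constant prediction
    stumps: List[Callable[[float], float]] = []
    preds = [f0] * len(xs)
    for _ in range(n_rounds):
        # antigradient of the squared loss = residuals of the current ensemble
        residuals = [y - p for y, p in zip(ys, preds)]
        h = fit_regression_stump(xs, residuals)
        stumps.append(h)
        preds = [p + learning_rate * h(x) for x, p in zip(xs, preds)]
    return lambda x: f0 + learning_rate * sum(h(x) for h in stumps)
```

With a least-squares stump and a learning rate between 0 and 1, each round can only lower the training error. A small learning rate needs more rounds but usually generalizes better than taking full steps.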

Conclusion

We have seen that there are several techniques for combining individual machine learning models into ensembles to improve their quality. These techniques are already built into popular libraries, and you can use them to improve your solution. However, in my view you should not reach for them immediately. It is better to start with a single simple model, understand how it behaves on your data, and only then move on to ensembles.