Author: Eugenio Culurciello, original title: The fall of RNN / LSTM

Translation: Davydov A.N.


We fell for RNNs (Recurrent Neural Networks), LSTMs (Long Short-Term Memory networks), and all their variants. Now it is time to drop them!

In 2014, LSTMs and RNNs made a great comeback from the dead. But we were all young and inexperienced. For several years they were the way to solve sequence learning and sequence translation (seq2seq) problems. They also produced amazing results in speech-to-text comprehension and fueled the rise of voice assistants such as Siri, Cortana, Google Assistant and Alexa. Let's not forget machine translation: neural machine translation of documents into other languages, but also translating images into text, text into images, captioning videos, and so on.

Then, in the following years (2015-16), ResNet and Attention appeared. It became clearer that the LSTM was a clever technique for bypassing the problem rather than solving it. Attention also showed that MLP networks (Multi-Layer Perceptrons) can be replaced by averaging networks driven by a context vector (more on this later).

Only two more years have passed, and today we can say for sure:

"Drop your RNNs and LSTMs, they are no good!"

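But don't just take our word for it: there is growing evidence that Attention-based networks are used more and more by large companies. These companies have replaced RNNs and their variants with Attention-based models, and this is only the beginning. The days of RNNs are numbered in all applications, because they require more resources to train and run than Attention-based models.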

But why?

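Recall that RNNs, LSTMs and their derivatives rely mainly on sequential processing over time. See the horizontal arrow in the diagram below: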

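This arrow means that long-term information has to travel sequentially through every cell before it reaches the current processing cell. Along the way it can easily be corrupted by being multiplied many times by small numbers (with magnitude less than 1). This is the cause of vanishing gradients.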
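A minimal sketch of the mechanism, assuming a toy scalar tanh RNN with a hypothetical weight `w` and made-up random inputs. The gradient of the last state with respect to the first is a product of one factor per time step. Each factor is at most `|w|`, so with `w = 0.9` the product shrinks exponentially with sequence length.

```python
import math
import random


def rnn_gradient(w: float, steps: int, seed: int = 0) -> float:
    """Return |d h_T / d h_0| for a toy scalar RNN h_t = tanh(w * h_{t-1} + x_t).

    The weight `w` and the random inputs are hypothetical; the point is only
    that the gradient is a product of one per-step factor per time step.
    """
    rng = random.Random(seed)
    h, grad = 0.0, 1.0
    for _ in range(steps):
        x = rng.uniform(-1.0, 1.0)      # made-up input at this time step
        h = math.tanh(w * h + x)
        grad *= w * (1.0 - h * h)       # chain rule: d tanh(u)/du = 1 - tanh(u)^2
    return abs(grad)


for steps in (10, 100, 1000):
    print(steps, rnn_gradient(0.9, steps))
```

Every factor here is at most 0.9 in magnitude. After 100 steps, the signal from step 0 is therefore already below 0.9^100, which is about 3e-5.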

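To the rescue came the LSTM module, which today can be seen as a set of switching gates. A bit like ResNet, it can bypass units and thus remember over longer time steps. LSTMs therefore have a way to remove some of the vanishing-gradient problems.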
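A sketch of why the gates help, assuming a scalar LSTM cell in the standard formulation with a forget gate. All weights and inputs are hypothetical. The cell state is updated additively (`c = f * c_prev + i * g`), so along that direct path the per-step factor is just the forget gate `f`. A forget gate kept close to 1 lets information pass through many steps. This ignores the extra gradient paths through `h`, so it illustrates the bypass rather than computing a full backward pass.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h_prev, c_prev, params):
    """One time step of a scalar LSTM cell (standard gates, forget gate included).

    `params` maps each gate name to hypothetical (w_x, w_h, bias) weights.
    """
    def pre(gate):
        w_x, w_h, b = params[gate]
        return w_x * x + w_h * h_prev + b

    f = sigmoid(pre("forget"))   # how much of the old cell state to keep
    i = sigmoid(pre("input"))    # how much new content to write
    o = sigmoid(pre("output"))   # how much of the cell state to expose
    g = math.tanh(pre("cell"))   # candidate new content
    c = f * c_prev + i * g       # additive update: the bypass path through time
    h = o * math.tanh(c)
    return h, c, f


def cell_path_gradient(params, steps):
    """Product of d c_t / d c_{t-1} = f_t along the direct cell-state path only."""
    h, c, grad = 0.0, 0.0, 1.0
    for t in range(steps):
        h, c, f = lstm_step(math.sin(t), h, c, params)  # made-up input signal
        grad *= f
    return grad


def make_params(forget_bias):
    small = (0.1, 0.1, 0.0)
    return {"forget": (0.1, 0.1, forget_bias), "input": small,
            "output": small, "cell": small}


print(cell_path_gradient(make_params(5.0), 100))  # forget gate mostly open
print(cell_path_gradient(make_params(0.0), 100))  # forget gate about half closed
```

The path still runs through every step, one multiplication per cell. The gates only make each factor closer to 1; they do not shorten the path.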

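But not all of them, as the figure above shows. There is still a sequential path from older past cells to the current one. In fact, the path is now even more complicated, because it has additive and forget branches attached to it. There is no question that LSTMs, GRUs and their derivatives can learn a lot of longer-term information! But they can remember sequences of hundreds of steps (100s), not thousands or tens of thousands.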

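Another problem with RNNs is that they are not hardware-friendly. Let me explain: training these networks quickly takes a lot of resources that we do not have. Running RNN models in the cloud also takes a lot of resources. Given that demand for speech-to-text is growing rapidly, the cloud does not scale. We will need to process at the edge, right inside the Amazon Echo!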

So what can we do?

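2018: Pervasive Attention

Pervasive Attention: 2D convolutional neural networks for sequence-to-sequence prediction, compared with RNN/LSTM, Attention and Transformer models.

The Transformer was introduced in 2017.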

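The answer: if sequential processing is to be avoided, we can find units that "look ahead" or, better, "look back". Most of the time we deal with real-time causal data, where we know the past and want to influence future decisions. This is not the case when translating sentences or analyzing recorded videos, for example, where we have all the data and can reason over it for longer. Such look-back/look-ahead units are neural attention modules.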

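To the rescue, combining multiple neural attention modules, comes the "hierarchical neural attention encoder", shown in the figure below: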

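A better way to look into the past is to use attention modules to summarize all past encoded vectors into a context vector C_t.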
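A minimal sketch of one attention module producing C_t. It is a softmax-weighted average of the past vectors, steered by a query, which is the "averaging network driven by a context vector" idea. Two choices here are assumptions, since the article does not fix them:

- Scaled dot-product scoring.
- The raw past vectors serve as both keys and values, with no learned projections.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: List[float]) -> List[float]:
    m = max(scores)                      # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_context(query: Vector, past: List[Vector]) -> Vector:
    """Summarize all past encoded vectors into one context vector C_t."""
    d = len(query)
    scores = [sum(q * v_j for q, v_j in zip(query, v)) / math.sqrt(d) for v in past]
    weights = softmax(scores)            # non-negative, sums to 1
    return [sum(w * v[j] for w, v in zip(weights, past)) for j in range(d)]


past = [[1.0, 0.0], [0.0, 1.0], [0.7, 0.7]]
print(attention_context([2.0, 0.0], past))  # leans toward vectors aligned with the query
```

Every past vector is reachable in a single step, whatever its age. Only the weights decide how much each one contributes.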

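Notice that there is a hierarchy of attention modules here, very similar to the hierarchy of layers in a neural network. This is also similar to the Temporal Convolutional Network (TCN), discussed in Note 3 below.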

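In the hierarchical neural attention encoder, the lower attention layers each look at a small portion of the recent past, say 100 vectors. The layers above look at 100 of these attention modules, effectively integrating the information of 100 x 100 vectors. This extends the reach of the hierarchical neural attention encoder to 10,000 past vectors.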
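The 100 x 100 arithmetic can be sketched with a two-level encoder. This is hypothetical and unlearned, not the architecture from the figure:

- Level 1 summarizes each block of 100 consecutive past vectors with one attention module.
- Level 2 attends over the 100 summaries.

Reusing the same raw query at both levels is a simplifying assumption; a trained model would learn its own queries and projections per level.

```python
import math
import random
from typing import List, Tuple

Vector = List[float]


def attend(query: Vector, vectors: List[Vector]) -> Vector:
    """Scaled dot-product attention: a softmax-weighted average of `vectors`."""
    d = len(query)
    scores = [sum(q * x for q, x in zip(query, v)) / math.sqrt(d) for v in vectors]
    m = max(scores)
    weights = [math.exp(s - m) for s in scores]
    total = sum(weights)
    return [sum(w * v[j] for w, v in zip(weights, vectors)) / total for j in range(d)]


def hierarchical_context(query: Vector, past: List[Vector],
                         chunk: int = 100) -> Tuple[Vector, int]:
    """Level 1: one summary per block of `chunk` past vectors.
    Level 2: attention over those summaries gives the context vector.
    Returns the context vector and the number of level-1 summaries."""
    summaries = [attend(query, past[i:i + chunk]) for i in range(0, len(past), chunk)]
    return attend(query, summaries), len(summaries)


rng = random.Random(0)
past = [[rng.gauss(0.0, 1.0) for _ in range(4)] for _ in range(10_000)]
context, n_summaries = hierarchical_context([1.0, 0.0, 0.0, 0.0], past)
print(n_summaries, context)  # 100 summaries, each covering 100 vectors
```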

This is how we can look further into the past and use it to influence the future.

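But more importantly, look at the length of the path needed to propagate a representation vector to the output of the network. In a hierarchical network it equals the number of hierarchy levels N, which grows only logarithmically with the length of the sequence being covered (roughly log(T)). In contrast, an RNN needs T steps, where T is the maximum length of the sequence to be remembered, and T >> N.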
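The two path lengths can be compared directly. This sketch assumes each attention module covers a fixed number of inputs (`fan_in`, a hypothetical parameter). Under that assumption, the number of levels needed to cover T past steps is the smallest N with fan_in^N >= T, while the RNN path is simply T.

```python
def rnn_path_length(T: int) -> int:
    """An RNN carries step-0 information through all T cells."""
    return T


def hierarchy_path_length(T: int, fan_in: int) -> int:
    """Levels N needed so that fan_in ** N >= T, i.e. ceil(log_fan_in(T)).

    Computed with integers to avoid floating-point log rounding.
    """
    if fan_in < 2:
        raise ValueError("fan_in must be at least 2")
    levels, reach = 0, 1
    while reach < T:
        reach *= fan_in
        levels += 1
    return levels


for T in (100, 1_000, 10_000):
    print(T, rnn_path_length(T), hierarchy_path_length(T, fan_in=10))
```

With a fan-in of 10, sequences of 1,000 to 10,000 steps need 3 to 4 hops. With the fan-in of 100 used in the text, 10,000 steps need just 2.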

It is easier to remember sequences if you hop 3-4 times rather than 100 times!

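This architecture is similar to a neural Turing machine, but it lets the neural network decide, via attention, what is read out of memory. This means that an actual neural network decides which vectors from the past are important for future decisions.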

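But what about storing to memory? Unlike neural Turing machines, the architecture above stores all previous representations in memory. This can be rather inefficient. Think about storing the representation of every frame of a video: most of the time the representation vector does not change from frame to frame, so we really are storing too much of the same thing!

What we can do is add another unit that prevents correlated data from being stored, for example by not storing vectors that are too similar to previously stored ones. But this is really a hack. The best solution would be to let the application guide which vectors should be saved and which should not. This is the focus of current research, so stay tuned for more information.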
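The "hack" described above can be sketched as a memory that refuses near-duplicates. This is a minimal version, assuming cosine similarity and a hypothetical hand-tuned `threshold`. It is not a learned storage policy, which is what the text says research is after.

```python
import math
from typing import List

Vector = List[float]


def cosine(a: Vector, b: Vector) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na > 0 and nb > 0 else 0.0  # zero vectors count as dissimilar


class DedupMemory:
    """Memory that skips vectors too similar to anything already stored."""

    def __init__(self, threshold: float = 0.95):
        self.threshold = threshold       # hypothetical hyperparameter
        self.items: List[Vector] = []

    def write(self, v: Vector) -> bool:
        if all(cosine(v, m) < self.threshold for m in self.items):
            self.items.append(v)
            return True
        return False                     # near-duplicate: not stored


# 50 almost identical "video frame" representations, then a scene change
frames = [[1.0, 0.001 * i] for i in range(50)] + [[0.0, 1.0]]
memory = DedupMemory()
stored = [memory.write(f) for f in frames]
print(len(memory.items), "of", len(frames), "frames stored")
```

Each write compares against everything already stored, so the cost grows with memory size. That is one more reason this is a stopgap rather than a solution.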

So, in summary: forget RNNs and their variants. Use Attention. Attention really is all you need!

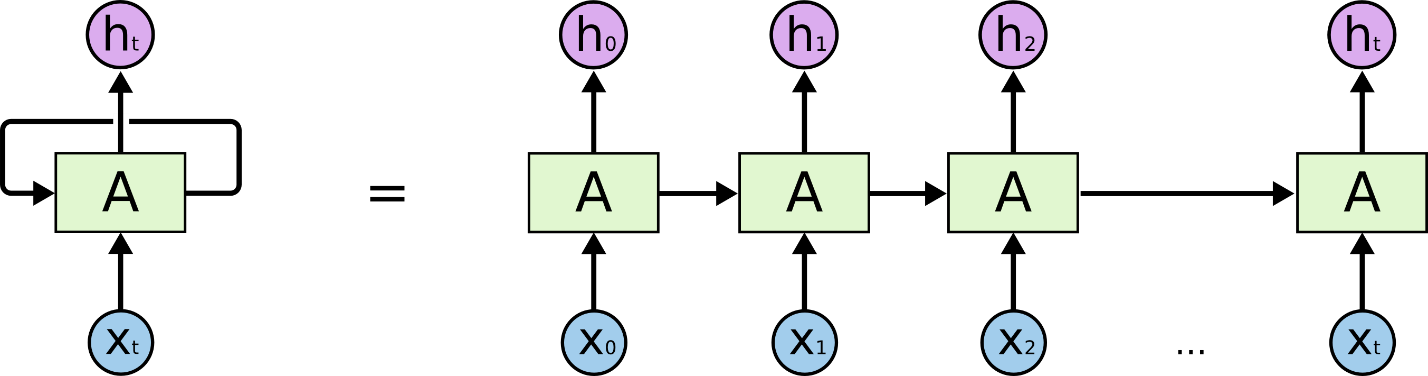

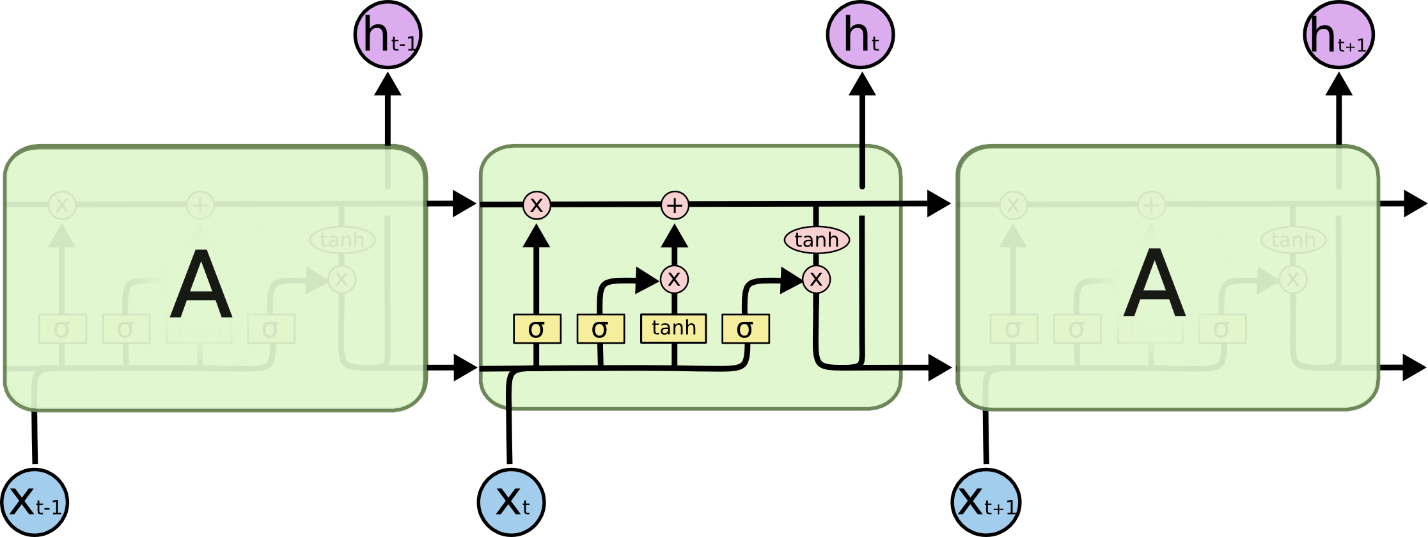

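About training RNN/LSTM: RNNs and LSTMs are hard to train because they require memory-bandwidth-bound computation. That is the worst nightmare for hardware designers, and it ultimately limits the applicability of neural network solutions. In short, an LSTM requires 4 linear layers (MLP layers) per cell, run at every sequence time step. Linear layers require large amounts of memory bandwidth to compute. In fact, they often cannot use many compute units, because the system does not have enough memory bandwidth to feed them. It is easy to add more compute units, but hard to add more memory bandwidth (not enough wires on a chip, long wires from processors to memory, etc.). As a result, RNN/LSTM and their variants are not a good match for hardware acceleration. A solution would be compute-in-memory devices, like the ones we work on at FWDNXT.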
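The "4 linear layers per cell, at every time step" point can be turned into numbers. This is a rough sketch for one LSTM layer at batch size 1, under these assumptions:

- fp32 weights.
- Weights that must be re-read from external memory every step.
- Only the gate matrix-vector products count; bias adds and elementwise work are ignored.

```python
def lstm_step_cost(input_size: int, hidden_size: int, bytes_per_param: int = 4):
    """Rough per-time-step cost of one LSTM layer at batch size 1.

    Four gates (input, forget, output, candidate) each have a weight matrix of
    shape hidden_size x (input_size + hidden_size) plus a bias vector.
    """
    params = 4 * hidden_size * (input_size + hidden_size + 1)
    flops = 2 * 4 * hidden_size * (input_size + hidden_size)  # multiply + add per weight
    bytes_moved = params * bytes_per_param                    # weights re-read each step
    return params, flops, bytes_moved, flops / bytes_moved


params, flops, bytes_moved, intensity = lstm_step_cost(1024, 1024)
print(f"{params:,} params, {bytes_moved / 1e6:.1f} MB read, {intensity:.2f} FLOP/byte per step")
```

With 1024-dimensional inputs and states, each step reads about 33.6 MB of weights and does only about half a FLOP per byte read. That ratio is what makes the computation bandwidth-bound.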

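Note 1: Hierarchical neural attention is similar to the ideas in WaveNet, but instead of a convolutional neural network we use hierarchical attention modules. Also, hierarchical neural attention can be bidirectional.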

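Note 2: RNNs and LSTMs are memory-bandwidth-limited problems. The processing unit(s) need as much memory bandwidth as the number of operations per second they can deliver, which makes it impossible to use them fully! External bandwidth will never be enough. One way to slightly ameliorate the problem is to use fast internal caches with high bandwidth. The best way is to use techniques that do not require large numbers of parameters to be moved back and forth from memory, or whose parameters can be reused for multiple computations per byte transferred (high arithmetic intensity).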
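The effect of reuse can be sketched with the roofline model: attainable throughput is the minimum of peak compute and arithmetic intensity times memory bandwidth. This sketch makes several assumptions:

- The accelerator numbers (`PEAK`, `BANDWIDTH`) are hypothetical.
- Every weight fetched is reused for `batch` multiply-adds, for example by processing several independent sequences together.
- Activation traffic is ignored.

For an RNN this kind of reuse only works across sequences; the time steps within one sequence still have to run one after another.

```python
def weight_reuse_intensity(batch: int, bytes_per_param: int = 4) -> float:
    """FLOP per byte when every weight fetched from memory is reused for
    `batch` multiply-adds (2 FLOPs each)."""
    return 2 * batch / bytes_per_param


def attainable_flops(intensity: float, peak_flops: float, bandwidth: float) -> float:
    """Roofline model: throughput is capped by compute or by memory bandwidth."""
    return min(peak_flops, intensity * bandwidth)


PEAK = 10e12       # hypothetical accelerator: 10 TFLOP/s
BANDWIDTH = 100e9  # hypothetical external memory: 100 GB/s

for batch in (1, 8, 64, 512):
    perf = attainable_flops(weight_reuse_intensity(batch), PEAK, BANDWIDTH)
    print(f"batch={batch:4d}  utilization={perf / PEAK:.1%}")
```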

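Note 3: There is a paper comparing CNNs with RNNs. It reports that Temporal Convolutional Networks (TCN) "outperform canonical recurrent networks such as LSTMs across a diverse range of tasks and datasets, while demonstrating longer effective memory".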
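The core building block of a TCN (and of WaveNet) is the dilated causal convolution. Stacking layers with dilations 1, 2, 4, 8, ... makes the receptive field grow exponentially with depth. That is the convolutional counterpart of the short hierarchical paths discussed above. This sketch shows only that core, using a hypothetical kernel of ones. A real TCN block also adds residual connections, nonlinearities and normalization.

```python
from typing import List


def dilated_causal_conv(x: List[float], kernel: List[float], dilation: int) -> List[float]:
    """y[t] = sum_k kernel[k] * x[t - k * dilation]; inputs before t = 0 count as zero."""
    y = []
    for t in range(len(x)):
        acc = 0.0
        for k, w in enumerate(kernel):
            idx = t - k * dilation
            if idx >= 0:                 # causal: only the present and the past
                acc += w * x[idx]
        y.append(acc)
    return y


def receptive_field(kernel_size: int, dilations: List[int]) -> int:
    """How many inputs (current one included) the top layer can see."""
    return 1 + sum((kernel_size - 1) * d for d in dilations)


def tcn_stack(x: List[float], kernel: List[float], dilations: List[int]) -> List[float]:
    for d in dilations:
        x = dilated_causal_conv(x, kernel, d)
    return x


impulse = [1.0] + [0.0] * 31
out = tcn_stack(impulse, kernel=[1.0, 1.0], dilations=[1, 2, 4, 8])
print(receptive_field(2, [1, 2, 4, 8]), [t for t, v in enumerate(out) if v != 0.0])
```

An impulse at t = 0 influences exactly the outputs inside the receptive field (t = 0 to 15), after only 4 layers.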

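Note 4: Related to this topic is the fact that we know little about how the human brain learns and remembers sequences. "We often learn and recall long sequences in smaller segments, such as a phone number 858 534 22 30 memorized as four segments. Behavioral experiments suggest that humans and some animals employ this strategy of breaking down cognitive or behavioral sequences into chunks in a wide variety of tasks."

These chunks remind me of small convolutional or Attention-like networks operating on shorter sequences. Such networks are then strung together hierarchically, as in the hierarchical neural attention encoder and the Temporal Convolutional Network (TCN). Further studies make me think that working memory is similar to RNNs built from recurrent networks of real neurons, and that its capacity is very low. On the other hand, the cortex and the hippocampus let us remember really long sequences of steps (for example, where I parked my car at the airport 5 days ago). This suggests that more parallel pathways may be involved in recalling long sequences, with attention mechanisms gating the important chunks and forcing hops over the parts of the sequence that are not relevant to the final goal or task.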

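Note 5: The evidence above shows that we do not read sequentially. In fact, we interpret characters, words and sentences as a group. An attention-based or convolutional module perceives the sequence and projects a representation in our mind. If we processed this information sequentially, we would not misread it! We would stop and notice the inconsistencies!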

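Note 6: A recent paper applies Attention and the Transformer to language understanding with excellent results. Could this be the VGG of NLP? Related approaches rely on LSTMs instead. The paper compares an LSTM with the Transformer within the same framework and reports that "the LSTM only outperforms the Transformer on one dataset - MRPC".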

Note 7: Learn more about the Transformer!