about the project

There are many interesting problems in the field of programming languages processing, the automatic solution of which can be useful for creating convenient tools for developers.

The source code of programs differs in many ways from natural language texts, but it can also be thought of as a sequence of tokens and similar methods can be used. For example, in the field of natural language processing, the BERT language model is actively used. The process of its training involves two stages: pre-training on a large set of unlabeled data and additional training for specific tasks on smaller marked-up datasets. This approach allows many tasks to be solved with very good quality.

Recent works ( 1 , 2, 3 ) showed that if you train the BERT model on a large dataset of program code, then it copes well with several tasks in this area (among them, for example, localization and elimination of incorrectly used variables and generation of comments to methods).

The project aims to investigate the use of BERT for other source code tasks. In particular, we focused on the task of automatically generating commit messages.

About the task

Why did we choose this task?

Firstly, version control systems are used in the development of many projects, so a tool for automatically solving this problem can be relevant for a wide range of developers.

Second, we hypothesized that using BERT for this task could lead to good results. This is due to several reasons:

- in existing works ( 4 , 5 , 6 ), data are collected from open sources and require serious filtering, so there are few examples for training. This is where the ability of BERT to train on small datasets can come in handy;

- The state-of-the-art result at the time of the work on the project was in the Transformer architecture model, which was pre-trained in a rather specific way on a small dataset ( 6 ). It was interesting for us to compare it with the BERT-based model, which is pretrained in a different way, but on a much larger amount of data.

For the semester, I had to do the following:

- study the subject area;

- find a dataset and select a representation of the input data;

- develop a pipeline for training and quality assessment;

- conduct experiments.

Data

There are several open datasets for this task, I chose the most filtered one ( 5 ).

The dataset was collected from the top 1000 open GitHub repositories in the Java language. After filtering, from the original millions of examples, about 30 thousand remained.

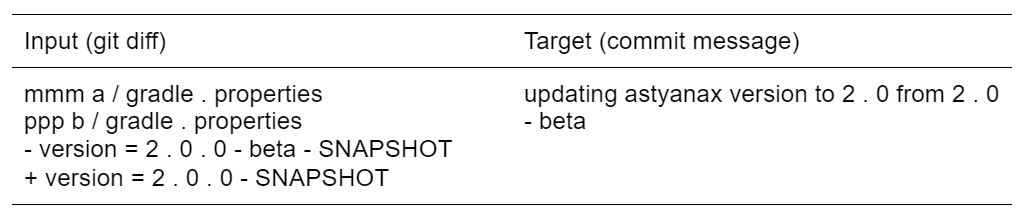

The examples themselves are pairs from the output of the git diff command and the corresponding short message in English. It looks something like this:

Both changes and messages in the dataset are short - no more than 100 and 30 tokens, respectively.

In most of the existing work investigating the problem of automatically generating messages for commits, a sequence of tokens from git diff is fed to the models.

There is another idea: to explicitly select two sequences, before and after changes, and align them using the classical algorithm for calculating the editorial distance. Thus, the changed tokens are always in the same positions.

Ideally, we would like to try several approaches and understand how they affect the quality of solving this problem. At the initial stage, I used a fairly simple one: two sequences were fed to the model input, before and after changes, but without any alignment.

BERT for sequence-to-sequence tasks

Both the input and output data for the task of automatically generating messages for commits are sequences, the length of which may differ.

To solve such problems, an encoder-decoder architecture is usually used, which consists of two components:

- the encoder model builds a vector representation based on the input sequence,

- the model-decoder generates an output sequence based on the vector representation.

The BERT model is based on an encoder from the Transformer architecture and by itself is not suitable for such a task. Several options are possible to get a full-fledged sequence-to-sequence model, the simplest is to use some kind of decoder with it. This approach with a decoder from the Transformer architecture has shown itself well, for example, for the task of neural machine translation ( 7 ).

Pipeline

To conduct the experiments, code was needed to train and evaluate the quality of such a sequence-to-sequence model.

To work with the BERT model, I used the HuggingFace's Transformers library, and for the implementation in general, I used the PyTorch framework.

Since in the beginning I had little experience with PyTorch, I largely relied on existing examples for sequence-to-sequence models of other architectures, gradually adapting to the specifics of my task. Unfortunately, this approach resulted in a lot of poor quality code.

At some point, it was decided to start refactoring, practically rewriting the pipeline. The PyTorch Lightning library helped to structure the code, which allows you to collect all the main logic of the model in one module and automate it in many ways.

Experiments

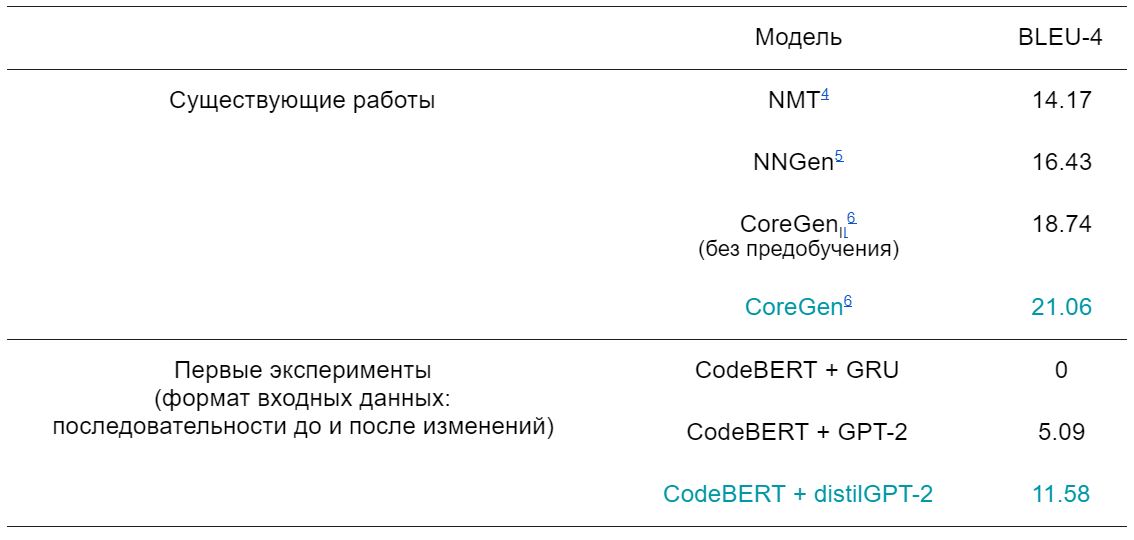

During the experiments, we wanted to understand whether the use of the pretrained BERT model improves the state-of-the-art result in this area.

Among the BERT models trained on the code, only CodeBERT ( 1 ) came up to us , since only it had the Java programming language in the training examples. First, using CodeBERT as an encoder, I tried decoders of different architectures:

- GRU.

, - . GRU Transformer, , .

- . - Transformer.

, — .

, GPT-2 (8) — Transformer, , — distilGPT-2 (9).

There was not enough time for further experiments in the fall semester; I continued them in the winter. We looked at several other ways of presenting input: we tried aligning the sequences before and after changes, and also just filed a git diff.

The main results of the experiments are as follows:

Summing up

In general, the assumption about the benefits of using CodeBERT for this task was not confirmed; in all cases, the Transformer model trained from scratch showed higher quality. The CoreGen6 model remains the best method in this area: this is also a Transformer, but additionally pre-trained using the objective function proposed by the authors.

To solve this problem, many more ideas can be considered: for example, try data representation based on abstract syntax trees, which is often used when working with program code ( 10 , 11), try other pre-trained models, or do some field-specific pre-training if resources are available. In the spring semester, we focused on a more practical application of the results obtained and were engaged in autocompletion of messages to commits. I will tell you about this in the second part :)

In conclusion, I want to say that it was really interesting to participate in the project, I plunged into a new subject area for myself and learned a lot during this time. The work on the project was very well organized, for which many thanks to my leaders.

Thanks for attention!

Sources of

- Feng, Zhangyin, et al. "Codebert: A pre-trained model for programming and natural languages." 2020

- Buratti, Luca, et al. «Exploring Software Naturalness through Neural Language Models.» 2020

- Kanade, Aditya, et al. «Learning and Evaluating Contextual Embedding of Source Code.» 2020

- Jiang, Siyuan, Ameer Armaly, and Collin McMillan. «Automatically generating commit messages from diffs using neural machine translation.» 2017

- Liu, Zhongxin, et al. «Neural-machine-translation-based commit message generation: how far are we?.» 2018

- Nie, Lun Yiu, et al. «CoreGen: Contextualized Code Representation Learning for Commit Message Generation.» 2021

- Rothe, Sascha, Shashi Narayan, and Aliaksei Severyn. «Leveraging pre-trained checkpoints for sequence generation tasks.» 2020

- Radford, Alec, et al. «Language models are unsupervised multitask learners.» 2019

- Sanh, Victor, et al. «DistilBERT, a distilled version of BERT: smaller, faster, cheaper and lighter.» 2019

- Yin, Pengcheng, et al. «Learning to represent edits.» 2018

- Kim, Seohyun, et al. «Code prediction by feeding trees to transformers.» 2021