Hello, Habr! I'm Sasha Fedoseev, junior Python developer at Selectel. A few years ago, when our company began to grow especially rapidly, we faced a problem: all sorts of unscrupulous spammers started signing up with us and sending spam from our infrastructure.

Why did we decide to fight them? After all, it's just mail being sent from accounts, so what's the harm? From a cloud provider's point of view, however, there are good reasons to stop spammers from "multiplying".

Why fight spammers

They spoil public IP addresses

To send mail to the outside world, a virtual machine needs a public IP address. Public ("white") IPs are a fairly limited resource, so it matters to us that they are held by decent users, not spammers. Spammers provoke IP bans from large email services: the address gets blacklisted, and we often can't even tell. We only find out when we reassign that IP to another client who needs to send mail and can't. Getting an IP removed from a blacklist is a difficult process, so it's better not to end up there at all.

Noisy Neighbor Effect

It is not uncommon for spammers to deploy many spam machines, and users who are unlucky enough to share a virtualization host with them can suffer. That's unpleasant.

They take up IP addresses and CPU time

Why would Selectel want such clients, when their place could be taken by good users who care about the quality of our services and spend their resources on growing their business?

Reputation problems

No provider wants a reputation for breeding spammers and profiting from them.

How it used to be

From the very beginning, antispam was manual: technical support staff analyzed each incident and carried the whole burden themselves. In addition, administrators periodically reviewed registrations to make sure that 10 accounts created at the same time belonged to 10 different users by coincidence, rather than to a single spammer.

Clearly, such a system is rather ineffective. First, there is an unacceptably long delay between identifying a spammer and banning them. Second, technical support staff could spend their working time more productively. So we started looking for other options.

Possible solutions to the problem

Registration with identity documents

This would scare off spammers, but it would also make onboarding much harder for respectable clients. In addition, the support staff would have to review every registration, which is hardly the most pleasant job. And an uploaded JPG file is no guarantee that a real document exists.

Bottom line: we decided against a "papers, please" approach and rejected this option.

Block outgoing mail for all clients

We could have warned all customers that we do not support sending mail at all. But that would have been strange. Users who don't send spam would lose standard, familiar functionality. They couldn't even maintain normal business correspondence and would certainly be unhappy about it.

Bottom line: we didn't want to deprive clients of obviously necessary capabilities and force them to look for workarounds elsewhere.

Build a mail gateway

A mail gateway is a server through which all mail passes. It can analyze messages and decide whether a given message is spam. For an infrastructure provider like Selectel, the right way to do this would be to offer it as a service. But such a solution requires a separate development team and ongoing support and development.

Bottom line: the creation of such a service was not in the company's plans at the time of the decision.

Use a third party service

We also considered this option, but found more than one argument against it. First, integrating with a third-party service is a non-trivial technical task. Second, we would become dependent on an external service. Finally, it would raise the price of our services, because we would have to factor in the cost of the external service, which we naturally wanted to avoid.

Bottom line: using an external service would have raised the cost of our services, and we could not accept that.

Having rejected all of the above options, we decided to build our own antispam system.

50 shades of a spammer

If we want to independently identify spammers among users, we need to determine by what criteria to do this.

It is worth paying attention to the user's behavioral characteristics and analyzing traffic:

→ date of account registration. If an account was registered 5 minutes ago and has already caught the attention of antispam, it was most likely created exclusively for spam.

→ mailbox name. People usually give their email addresses meaningful names (the company name or the sender's surname). If the name is meaningless and looks suspicious, that is a fairly strong argument in favor of a ban.

→ payment method. If it is a disposable card or PayPal, the account is also suspicious.

→ geolocation of the IP address at registration. The overwhelming majority of Selectel's clients are from Russia and the CIS countries. If a new user registers from sunny Brazil or Morocco (countries that traditionally produce the most spammers), that is cause for concern. You can also check the country code of the phone number against the country obtained from IP geolocation.

→ username. If the username looks like someone ran their fingers randomly across the keyboard, that's suspicious, you'll agree.
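The "random keyboard" check on usernames and mailbox names can be approximated with simple letter statistics. The sketch below is our own illustration, not the filter Selectel actually uses: the function name `looksLikeKeyboardMash`, the consonant-run length of 5 and the 20% vowel threshold are all assumptions.

```go
package main

import (
	"fmt"
	"strings"
)

// looksLikeKeyboardMash is a hypothetical heuristic, not Selectel's actual
// filter. It flags a name whose Latin letters contain a long run of
// consonants or very few vowels, which is typical of random key presses.
func looksLikeKeyboardMash(name string) bool {
	const vowels = "aeiouy"
	letters, vowelCount, run, maxRun := 0, 0, 0, 0
	for _, r := range strings.ToLower(name) {
		if r < 'a' || r > 'z' {
			// Digits, punctuation and non-Latin characters end a consonant run.
			run = 0
			continue
		}
		letters++
		if strings.ContainsRune(vowels, r) {
			vowelCount++
			run = 0
			continue
		}
		run++
		if run > maxRun {
			maxRun = run
		}
	}
	if letters == 0 {
		return false
	}
	// Both thresholds are illustrative assumptions.
	return maxRun >= 5 || float64(vowelCount)/float64(letters) < 0.2
}

func main() {
	for _, name := range []string{"ivanov", "acme-mail", "xkqzvbtr"} {
		fmt.Printf("%s: %v\n", name, looksLikeKeyboardMash(name))
	}
}
```

A heuristic like this only yields a penalty signal, never a verdict on its own; it also ignores non-Latin names entirely, so a real filter would need more (for example, character n-gram statistics).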

I should add that we ruled out L7 traffic analysis right away: it would mean opening every email and "reading" its contents. A large, influential company like Google might be able to afford that, running semantic analysis of mail content backed by NDAs and lawyers. That option doesn't suit us.

Information at the L4 level, however, is something we can work with: it is impersonal and boils down to a few metrics. We can look at the number of destination addresses that mail is sent to from our IP addresses and at the volume of data transmitted. Naturally, spammers tend to have a very large database of recipient addresses.

In the end, we decided that these criteria taken together are enough to make a well-grounded decision about whether we are dealing with a spammer.

Solution concept

Account rating

We decided to automate user assessment based on the criteria above using a rating model, a kind of "trust index". We don't read mail; we weigh envelopes and analyze metrics of the public traffic leaving the cloud.

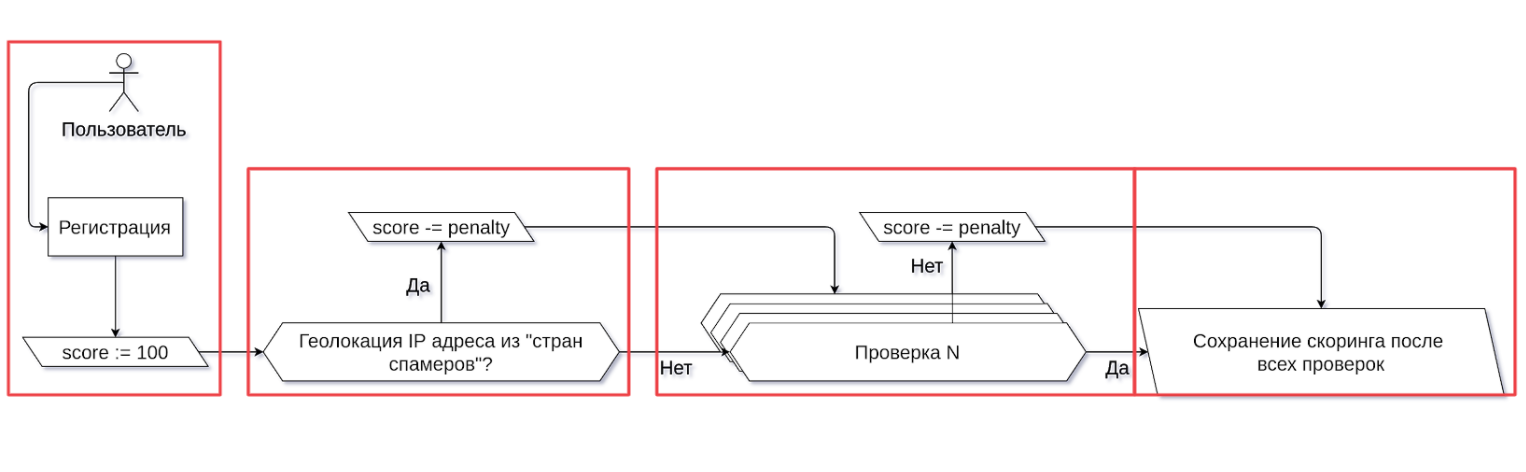

Here's how it works. When a user signs up, they are assigned the highest possible rating: 100 points. Initially, we trust everyone and assume that all our clients will behave normally and not send spam.

Then the user goes through a chain of checks. Each failed check is reflected in the user's final rating as a penalty. We set the penalty sizes based on our experience with spammers.

First, we usually look at the geolocation of the IP address: whether it belongs to a country that most spammers come from. We keep a list of such countries and update it periodically. Then we run the new user through the rest of the filters mentioned above. The result is the account's final score, which we store for later use by the antispam service.
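The rating model can be sketched as "start at 100, subtract a penalty per failed check". This is a minimal illustration under assumptions: the `Signup` fields, the country list and every penalty size below are invented, since the actual values come from Selectel's experience and are not published. Account age is left out because at signup it is always zero; it becomes relevant later, when an incident occurs.

```go
package main

import "fmt"

// Signup holds signals gathered at registration. Field names are
// hypothetical, not Selectel's actual schema.
type Signup struct {
	IPCountry         string // country from IP geolocation at signup
	PhoneCountry      string // country derived from the phone number
	DisposablePayment bool   // disposable card or similar payment method
	SuspiciousName    bool   // e.g. a keyboard-mash username or mailbox name
}

// riskyCountries and the penalty sizes below are illustrative assumptions.
var riskyCountries = map[string]bool{"BR": true, "MA": true}

const maxRating = 100

// rating starts every account at full trust and subtracts a penalty for
// each failed check.
func rating(s Signup) int {
	r := maxRating
	if riskyCountries[s.IPCountry] {
		r -= 30
	}
	if s.PhoneCountry != "" && s.PhoneCountry != s.IPCountry {
		r -= 15
	}
	if s.DisposablePayment {
		r -= 20
	}
	if s.SuspiciousName {
		r -= 20
	}
	// Guard so that adding more penalties later cannot push the score below zero.
	if r < 0 {
		r = 0
	}
	return r
}

func main() {
	honest := Signup{IPCountry: "RU", PhoneCountry: "RU"}
	shady := Signup{IPCountry: "BR", PhoneCountry: "RU", DisposablePayment: true}
	fmt.Println(rating(honest), rating(shady)) // 100 35
}
```

Keeping the checks additive makes it easy to add a new filter or retune one penalty without touching the others; the stored number is what the antispam service later compares against its thresholds.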

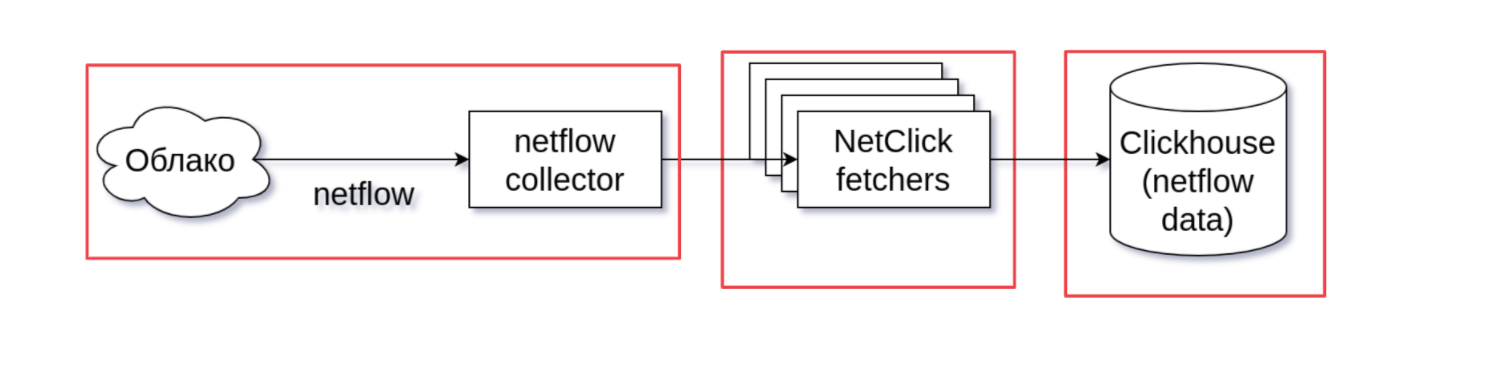

Weighing envelopes and checking IP addresses

Our network equipment can export public traffic metrics via the NetFlow protocol. These include the number of SMTP packets, SMTP bytes and SMTP streams, plus the number of destination IP addresses for each source address. All these metrics are collected by a NetFlow collector, which lightly reformats them for convenience. Auxiliary services called NetClick fetchers then load them into ClickHouse, where antispam can use them.
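To make the four metrics concrete, here is a minimal sketch of rolling individual flow records up into per-source totals. It is not the NetFlow collector or NetClick code: `FlowRecord` and `SMTPMetrics` are hypothetical types, SMTP is assumed to mean destination port 25, and the flow count in one window stands in for the number of SMTP streams.

```go
package main

import "fmt"

// FlowRecord is a simplified, hypothetical view of one exported NetFlow record.
type FlowRecord struct {
	SrcIP, DstIP string
	DstPort      uint16
	Packets      uint64
	Bytes        uint64
}

// SMTPMetrics holds the four per-source metrics named above.
type SMTPMetrics struct {
	Packets uint64 // SMTP packets
	Bytes   uint64 // SMTP bytes
	Flows   int    // SMTP flows seen in the window (a proxy for streams)
	DstIPs  int    // distinct destination IP addresses
}

// aggregate groups SMTP flows (destination port 25, an assumption) by source IP.
func aggregate(records []FlowRecord) map[string]SMTPMetrics {
	metrics := make(map[string]SMTPMetrics)
	seen := make(map[string]map[string]struct{}) // source -> set of destinations
	for _, rec := range records {
		if rec.DstPort != 25 {
			continue
		}
		if seen[rec.SrcIP] == nil {
			seen[rec.SrcIP] = make(map[string]struct{})
		}
		seen[rec.SrcIP][rec.DstIP] = struct{}{}

		m := metrics[rec.SrcIP]
		m.Packets += rec.Packets
		m.Bytes += rec.Bytes
		m.Flows++
		m.DstIPs = len(seen[rec.SrcIP])
		metrics[rec.SrcIP] = m
	}
	return metrics
}

func main() {
	records := []FlowRecord{
		{"203.0.113.10", "198.51.100.1", 25, 40, 30000},
		{"203.0.113.10", "198.51.100.2", 25, 12, 8000},
		{"203.0.113.10", "198.51.100.2", 25, 10, 7000},
		{"203.0.113.10", "198.51.100.9", 443, 500, 600000}, // not SMTP, ignored
	}
	// Prints {Packets:62 Bytes:45000 Flows:3 DstIPs:2}
	fmt.Printf("%+v\n", aggregate(records)["203.0.113.10"])
}
```

Note that the destination count is a set size rather than a sum: two flows to the same mail server count once, which is exactly what separates "many recipients" from "a lot of mail to a few".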

Gotoh

Selectel developers have a small tradition: we name our in-house services after characters from the Hunter x Hunter universe. Hence the name, in honor of the manga character Gotoh.

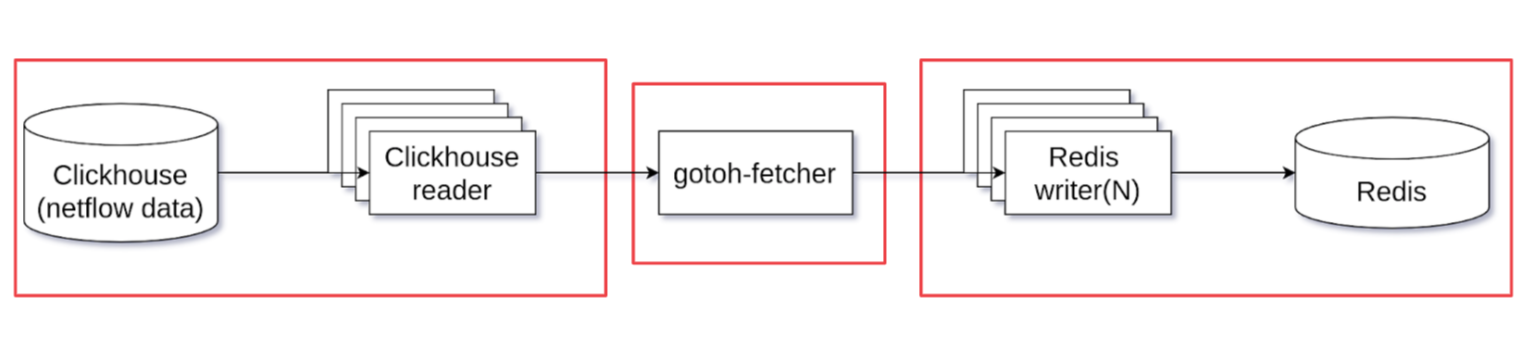

The antispam service is written in Go and consists of two parts. The first, Gotoh-fetcher, pulls the traffic metrics that arrive via NetFlow using several ClickHouse readers. The data then goes to the Gotoh-fetcher core, where it is converted into a more convenient format and handed to the Redis writer, which stores it in Redis. That concludes Gotoh-fetcher's work.
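The reader → core → writer flow maps naturally onto goroutines and channels. The sketch below only mirrors that shape: slices stand in for the ClickHouse readers, a map stands in for Redis, and the `Row` type and the `gotoh:<ip>:<metric>` key format are invented for illustration.

```go
package main

import (
	"fmt"
	"sort"
	"strconv"
	"sync"
)

// Row stands in for one metric row read from ClickHouse (hypothetical shape).
type Row struct {
	SrcIP  string
	Metric string
	Value  uint64
}

// KV is the formatted key/value pair handed to the writer.
type KV struct{ Key, Value string }

// reader simulates one ClickHouse reader by emitting preloaded rows.
func reader(rows []Row, out chan<- Row, wg *sync.WaitGroup) {
	defer wg.Done()
	for _, r := range rows {
		out <- r
	}
}

// core converts rows into the key/value format the writer expects.
func core(in <-chan Row, out chan<- KV) {
	defer close(out)
	for r := range in {
		out <- KV{Key: "gotoh:" + r.SrcIP + ":" + r.Metric, Value: strconv.FormatUint(r.Value, 10)}
	}
}

// writer stores pairs in a map that stands in for Redis.
func writer(in <-chan KV, store map[string]string, done chan<- struct{}) {
	for kv := range in {
		store[kv.Key] = kv.Value
	}
	close(done)
}

// runFetcher wires several readers into one core and one writer.
func runFetcher(batches [][]Row) map[string]string {
	rows := make(chan Row)
	pairs := make(chan KV)
	done := make(chan struct{})
	store := make(map[string]string)

	var wg sync.WaitGroup
	for _, b := range batches {
		wg.Add(1)
		go reader(b, rows, &wg)
	}
	// Close rows once every reader has finished, so core can exit.
	go func() {
		wg.Wait()
		close(rows)
	}()
	go core(rows, pairs)
	go writer(pairs, store, done)
	<-done // the writer has drained everything
	return store
}

func main() {
	store := runFetcher([][]Row{
		{{"203.0.113.10", "bytes", 5000000}, {"203.0.113.10", "packets", 9000}},
		{{"203.0.113.20", "dst_ips", 340}},
	})
	keys := make([]string, 0, len(store))
	for k := range store {
		keys = append(keys, k)
	}
	sort.Strings(keys)
	for _, k := range keys {
		fmt.Println(k, store[k])
	}
}
```

Each stage closes its output when its input is exhausted, so shutdown propagates down the pipeline without extra signaling; only the fan-in from several readers needs a `sync.WaitGroup`.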

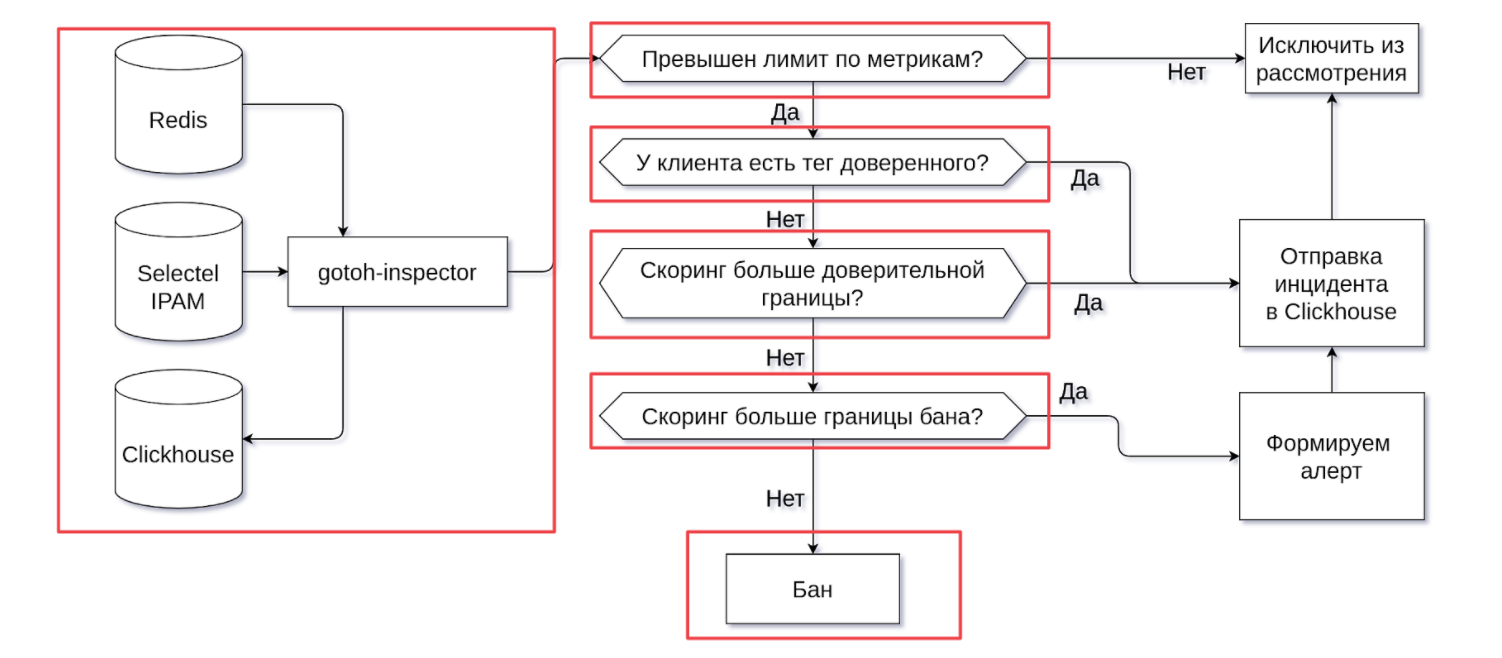

Next, Gotoh-inspector, which lives up to its name, gets to work. It plays the role of decision-maker, the judge. Where does Gotoh-inspector get its input? It reads from Redis the data that Gotoh-fetcher stored there.

After receiving the data, our "inspector" runs through its checks. First, it checks whether the limit for any metric has been exceeded. If not, further checks make no sense, and Gotoh-inspector drops this piece of data from consideration.
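The first gate can be sketched as a per-metric comparison that reports which limits were crossed. The limit values below are hypothetical, not Selectel's. The example input is the "many small letters" case: plenty of packets but a modest byte volume, so only the packet limit fires.

```go
package main

import "fmt"

// Metrics holds one source address's traffic figures for a time window.
type Metrics struct {
	Packets, Bytes, Flows, DstIPs uint64
}

// exceeded returns the names of all metrics above their limits; an empty
// result means the inspector can drop this piece of data.
func exceeded(m, limit Metrics) []string {
	var over []string
	if m.Packets > limit.Packets {
		over = append(over, "packets")
	}
	if m.Bytes > limit.Bytes {
		over = append(over, "bytes")
	}
	if m.Flows > limit.Flows {
		over = append(over, "flows")
	}
	if m.DstIPs > limit.DstIPs {
		over = append(over, "dst_ips")
	}
	return over
}

func main() {
	// Hypothetical per-window limits, not Selectel's real values.
	limits := Metrics{Packets: 50000, Bytes: 100 << 20, Flows: 100, DstIPs: 500}
	// Many small messages: lots of packets, modest byte volume.
	smallLetters := Metrics{Packets: 80000, Bytes: 40 << 20, Flows: 20, DstIPs: 60}
	fmt.Println(exceeded(smallLetters, limits)) // [packets]
}
```

Returning the list of crossed metrics, rather than a single bool, keeps the information needed to attribute each alert to a specific metric.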

If at least one metric is over its limit, we first check whether the client has a trusted tag. This tag was introduced specifically to tell spammers apart from users who send legitimate mail, just in large volumes. A user can get a trusted tag only after talking to Selectel specialists.

We have to make sure that we are dealing with someone from a real company who is simply sending a lot of ordinary business mail. If the trusted tag is present, we record an incident in ClickHouse and stop considering this piece of data. If the client has no tag, the "investigation" continues.

Next we look at the rating, and this is where the fun begins. The score has two thresholds. The first is the confidence limit. If the checks gave the account a good rating, one that clears this limit, we simply send the incident to ClickHouse and forget about it. There is nothing else to do with a well-scored user.

If the score is below the confidence limit, we check how far it is from the second threshold, the ban limit. Two thresholds make the assessment fairer: a user with a good score is never banned, while for an account whose rating raises questions we also look at the number of incidents associated with it.

If an account falls into the intermediate zone, with a rating below the confidence limit but above the ban limit, the system sends an alert to the on-duty engineer's chat. The engineer then manually checks whether the account or virtual machine is sending spam.

If the rating falls below the ban limit, things are simple: the account is banned, because we have enough reason to believe we are dealing with a violator.
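The inspector's order of checks (limits, trusted tag, confidence limit, ban limit) fits in a single switch. This is a simplified sketch, not Gotoh-inspector's code: the `Verdict` values, the threshold numbers in the example and the handling of ties exactly at a threshold are assumptions, and the incident count that the article mentions for questionable accounts is left out.

```go
package main

import "fmt"

// Verdict is what the inspector does with one piece of data.
type Verdict int

const (
	Ignore      Verdict = iota // no metric over its limit
	LogIncident                // record the incident in ClickHouse only
	AlertDuty                  // ask the on-duty engineer to check manually
	Ban                        // block the account
)

func (v Verdict) String() string {
	return [...]string{"ignore", "log incident", "alert duty", "ban"}[v]
}

// Account carries the two inputs the inspector looks at.
type Account struct {
	Trusted bool // trusted tag granted after talking to specialists
	Rating  int  // score stored at signup
}

// decide follows the order of checks described above. Treating a score equal
// to the confidence limit as good, and one equal to the ban limit as a ban,
// is an assumption.
func decide(acc Account, overLimit bool, confidenceLimit, banLimit int) Verdict {
	switch {
	case !overLimit:
		return Ignore
	case acc.Trusted, acc.Rating >= confidenceLimit:
		return LogIncident
	case acc.Rating > banLimit:
		return AlertDuty
	default:
		return Ban
	}
}

func main() {
	// Hypothetical thresholds: confidence limit 70, ban limit 30.
	for _, r := range []int{90, 50, 20} {
		fmt.Println(r, decide(Account{Rating: r}, true, 70, 30))
	}
}
```

With these thresholds the loop prints one line per rating band: a good score only logs an incident, a middling one alerts the on-duty engineer, and a low one leads to a ban.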

Result

That is how we built our simple antispam system. The natural question is: did things get better?

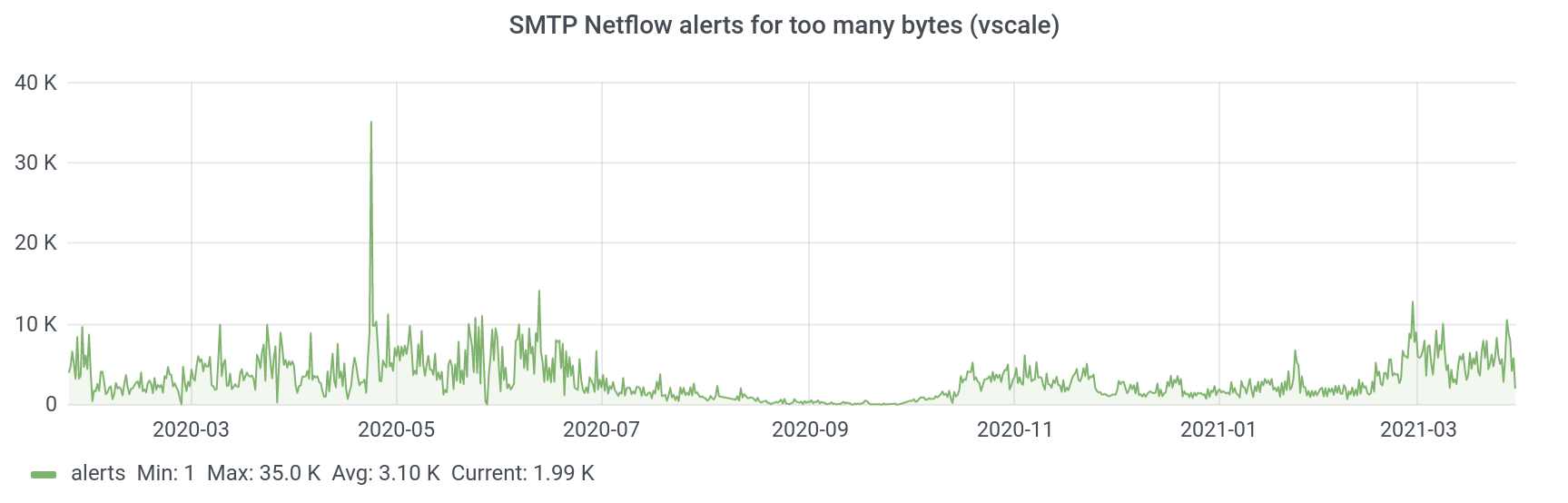

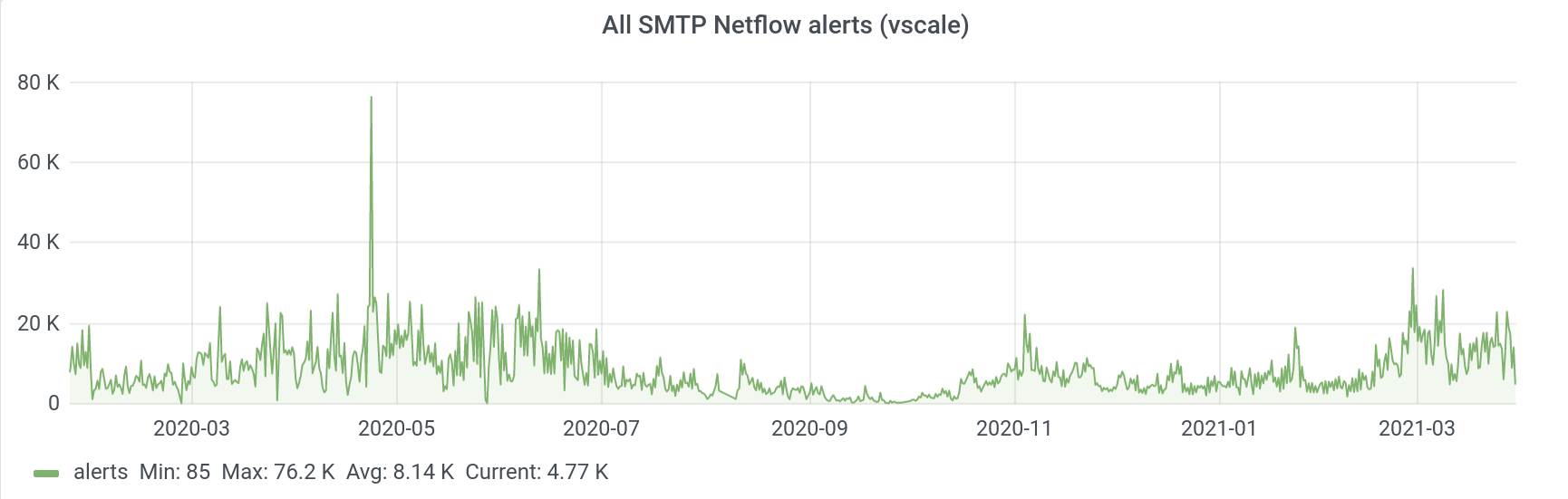

The chart above shows the number of alerts since the service launched in early 2020. At the peak, there were more than 60,000 alerts.

With manual antispam, we simply could not have processed that much information; a lot of data slipped past us. Now we can actually work with this data and analyze it.

That chart shows the total number of alerts across all four traffic metrics we analyze. Now let's look at the graph for each metric separately.

Number of bytes transferred

This metric generates the most alerts. Its graph closely resembles the previous one, because these alerts make up almost the entire picture of spam alerts. The logic is simple: the more mail an account sends, the sooner it comes to the attention of antispam and the more alerts it generates.

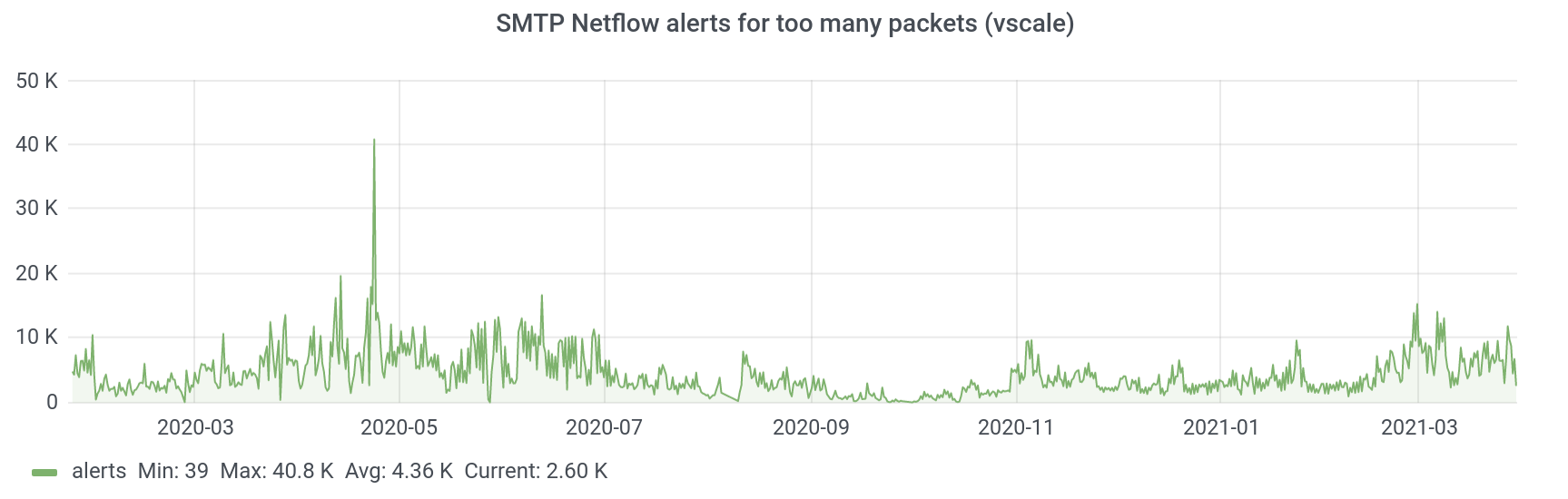

Number of SMTP packets

This metric is related to the previous one. So why analyze bytes and packets separately? Because there have been cases where an account triggered an alert on packet count but not on byte count. For example, a spam account may send many small messages, each in a separate packet; in that case, the alert is generated by the number of packets.

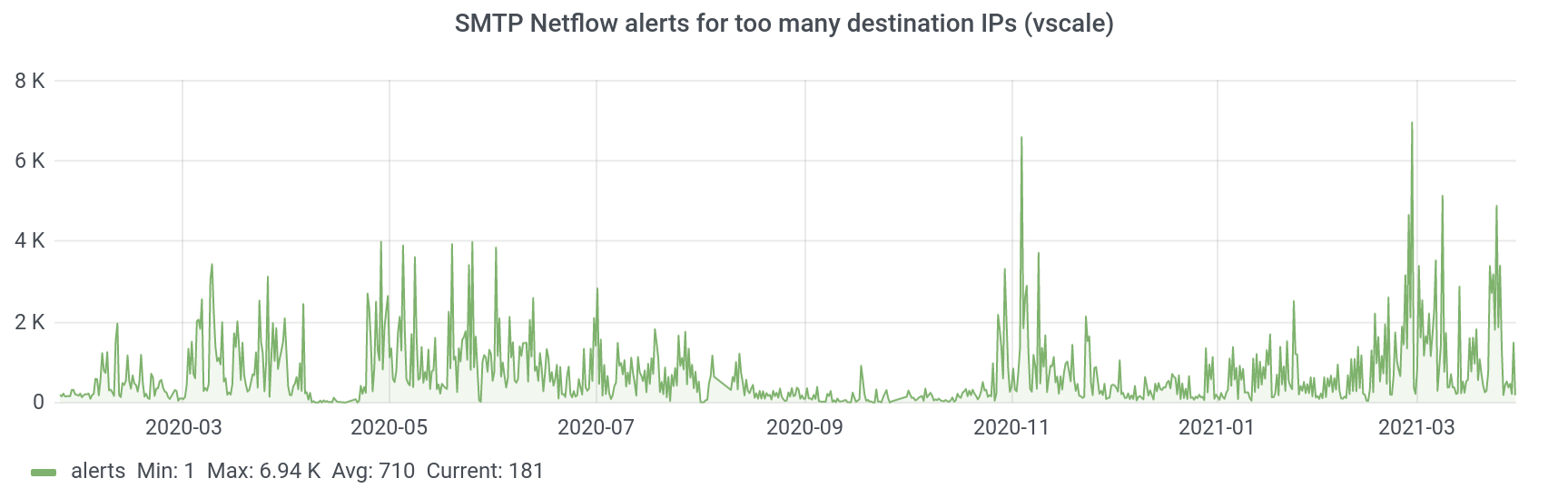

Number of destination IP addresses

This graph is harder to interpret, but it can serve as an additional source of information. Each account organizes spamming differently: some send mail to 10 or 100 different addresses, while others send to the same 10 addresses and never trip antispam on this metric.

Number of SMTP streams open simultaneously

This is the least "popular" metric: alerts on it are rare. Where the previous graphs had thousands on the Y axis, this one has hundreds. Apparently spammers play it safe here and avoid sending mail over a large number of open connections. Although the graph does not look very informative at first glance, it actually helps us better understand how spam may be sent.
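"Open simultaneously" is a different quantity from "flows per window": it is the peak overlap of connection lifetimes. Assuming each flow record carries start and end timestamps, a sweep over start and end events computes it. The `Conn` type and `peakConcurrent` are hypothetical names for illustration, not part of the described service.

```go
package main

import (
	"fmt"
	"sort"
)

// Conn is one SMTP connection's lifetime in seconds; End is exclusive.
type Conn struct{ Start, End int64 }

// peakConcurrent returns the largest number of connections open at once,
// using a sweep over start (+1) and end (-1) events.
func peakConcurrent(conns []Conn) int {
	type event struct {
		t     int64
		delta int
	}
	events := make([]event, 0, 2*len(conns))
	for _, c := range conns {
		events = append(events, event{c.Start, 1}, event{c.End, -1})
	}
	sort.Slice(events, func(i, j int) bool {
		if events[i].t != events[j].t {
			return events[i].t < events[j].t
		}
		// At the same instant, process closes before opens.
		return events[i].delta < events[j].delta
	})
	cur, peak := 0, 0
	for _, e := range events {
		cur += e.delta
		if cur > peak {
			peak = cur
		}
	}
	return peak
}

func main() {
	conns := []Conn{{0, 10}, {5, 15}, {10, 20}, {12, 14}}
	fmt.Println(peakConcurrent(conns)) // 3
}
```

Sorting closes before opens at equal timestamps means a connection that ends exactly when another starts is not counted as overlapping, which matches the exclusive `End` convention.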

Conclusion

Of course, the system can be improved, and we plan to do so. But even now it works quite effectively.

For example, we have never banned a respectable user. And if that ever happens (say, someone has a university project involving mailing lists), everything is reversible. If your Selectel account has been banned (the reason is shown in your control panel), just write to technical support. We will look at what lowered your rating and how you can avoid it in the future. This is not a lifetime ban.

I also talked about our antispam system at Selectel DevTalks. If you want to watch the talk, here is the link.