Graph neural networks are a way of applying classical neural network models to graph data. Graphs lack the regular structure of images (where each pixel has 8 neighbors) or texts (a sequence of words), so for a long time they remained out of reach of the classical neural models that are widespread in machine learning and artificial intelligence. Most graph vectorization models (which construct vector representations of the vertices of a graph) were rather slow and relied on matrix factorization or spectral graph decomposition. In 2015-16, more efficient random walk models (DeepWalk, LINE, node2vec, HOPE) appeared. However, they also had limitations: when constructing a vector model of the graph, they made no use of the additional features that can be stored at vertices or edges. The emergence of graph neural networks was a logical continuation of research in the field of graph embeddings and made it possible to unify the previous approaches under a single framework.

What they are for and how they work

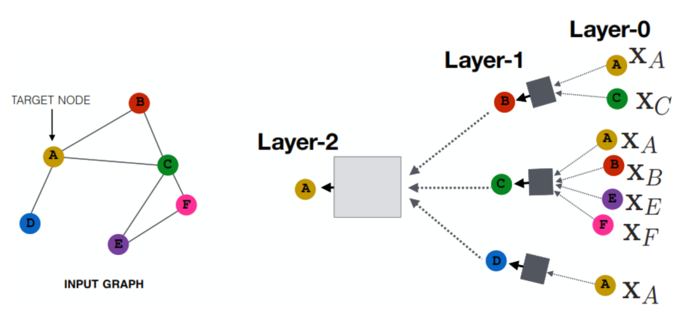

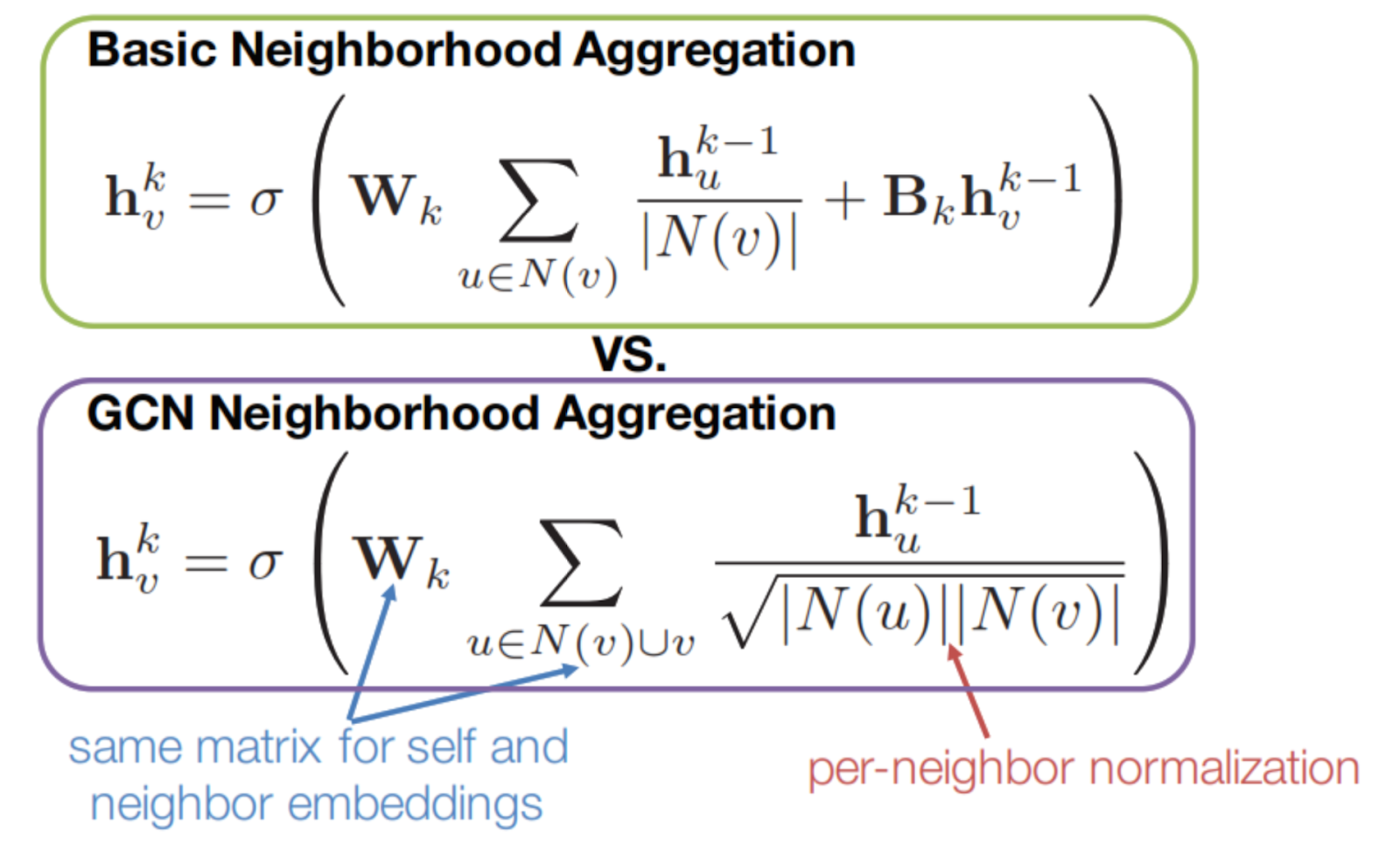

One layer of a graph neural network is an ordinary fully connected neural network layer, except that for each vertex the weights are applied not to all of the input data but only to the representations of that vertex's neighbors in the graph, together with the vertex's own representation from the previous layer. The weights for the neighbors and for the vertex itself can be given by a common weight matrix or by two separate ones. Normalization can be added to speed up convergence, and the non-linear activation functions may vary, but the general construction remains similar. Graph convolutional networks got their name from this aggregation of information from neighbors, although graph attention networks (GAT) and the inductive learning model GraphSAGE are in fact much closer to this definition.
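The layer described above can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions: the function name `gnn_layer`, mean aggregation over neighbors, two separate weight matrices, and the ReLU activation are choices made for the example, not a reference implementation of any particular model.

```python
import numpy as np

def gnn_layer(features, adjacency, w_self, w_neigh):
    """One illustrative graph layer: average the neighbors, then apply a dense transform."""
    # Number of neighbors per vertex; clamp to 1 so isolated vertices do not divide by zero.
    degree = adjacency.sum(axis=1, keepdims=True)
    neighbor_mean = adjacency @ features / np.maximum(degree, 1)
    # Separate weight matrices for the vertex itself and for its aggregated neighbors.
    hidden = features @ w_self + neighbor_mean @ w_neigh
    # ReLU non-linearity (an assumption; other activations work the same way).
    return np.maximum(hidden, 0.0)

# Toy path graph 0 - 1 - 2 with two input features per vertex.
adjacency = np.array([[0, 1, 0],
                      [1, 0, 1],
                      [0, 1, 0]], dtype=float)
features = np.array([[1.0, 0.0],
                     [0.0, 1.0],
                     [1.0, 1.0]])

rng = np.random.default_rng(0)
w_self = rng.normal(size=(2, 4))   # hypothetical weights, 2 inputs -> 4 hidden units
w_neigh = rng.normal(size=(2, 4))

out = gnn_layer(features, adjacency, w_self, w_neigh)
print(out.shape)  # (3, 4): one 4-dimensional representation per vertex
```

Stacking several such layers lets each vertex receive information from vertices several hops away. Using a single shared matrix instead of `w_self` and `w_neigh` corresponds to the "common weight matrix" variant mentioned above.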

Applications

Recommender systems

Graphs arise naturally from user interactions with products on e-commerce platforms. As a result, many companies use graph neural networks to build recommender systems. Usually, a graph is used to model the interactions of users with products, embeddings are learned with a properly chosen negative sampling scheme, and the results are ranked to select personalized product offers, which are shown to specific users in real time. One of the first services with such a mechanism was Uber Eats: the GraphSAGE neural network selects recommendations for food and restaurants.
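To make the "embeddings, negative sample, ranking" pipeline more concrete, here is a minimal sketch. It assumes dot-product scoring, uniform negative sampling, and a pairwise (BPR-style) ranking loss; the names `sample_negative`, `pairwise_ranking_loss`, and `recommend` are hypothetical, and the embeddings are hard-coded rather than produced by a graph neural network. Production systems such as the ones mentioned here are certainly more elaborate.

```python
import numpy as np

def sample_negative(num_items, seen, rng):
    """Uniformly sample an item the user has NOT interacted with.

    Assumes at least one unseen item exists, otherwise this loops forever.
    """
    while True:
        candidate = int(rng.integers(num_items))
        if candidate not in seen:
            return candidate

def pairwise_ranking_loss(user_vec, pos_item_vec, neg_item_vec):
    """BPR-style loss: the interacted item should outscore the sampled negative."""
    margin = user_vec @ pos_item_vec - user_vec @ neg_item_vec
    return np.log1p(np.exp(-margin))  # equals -log(sigmoid(margin))

def recommend(user_vec, item_matrix, seen, k):
    """Rank all items by dot-product score and return the top k unseen ones."""
    scores = (item_matrix @ user_vec).astype(float)
    for item in seen:
        scores[item] = -np.inf  # never recommend what the user already has
    return np.argsort(-scores)[:k].tolist()

# Toy embeddings (in practice these would come from the trained model).
user_vec = np.array([1.0, 0.0])
item_matrix = np.array([[0.9, 0.1],
                        [0.2, 0.8],
                        [0.5, 0.5],
                        [-0.3, 0.4]])
seen = {0}

rng = np.random.default_rng(0)
negative = sample_negative(len(item_matrix), seen, rng)
loss = pairwise_ranking_loss(user_vec, item_matrix[0], item_matrix[negative])
print(recommend(user_vec, item_matrix, seen, k=2))  # [2, 1]
```

During training, the loss would be minimized over many (user, interacted item, sampled negative) triples; at serving time only the ranking step is needed.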

While the graphs for food recommendations are relatively small because of geographic constraints, some companies run graph neural networks on graphs with billions of connections. For example, the Chinese giant Alibaba has deployed graph embeddings and graph neural networks for billions of users and products. Just building such graphs is an engineering nightmare. But thanks to the AliGraph pipeline, a graph of 400 million nodes can be built in just five minutes. Impressive. AliGraph supports efficient distributed graph storage, optimized sampling operators, and a number of in-house graph neural networks. This pipeline is now used for recommendations and personalized search across the company's numerous products.

Pinterest proposed the PinSage model, which efficiently selects neighbors using personalized PageRank and updates vertex embeddings by aggregating information from those neighbors. Its successor, PinnerSage, can already work with multiple embeddings per user to take different tastes into account. These are just a couple of notable examples in the area of recommender systems. You can also read about Amazon's exploration of knowledge graphs and graph neural networks, or Fabula AI's use of graph neural networks to detect fake news. But even without these, it is clear that graph neural networks show promising results when there is a significant signal from user interactions.
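Neighbor selection by personalized PageRank can be approximated with random walks with restart: the vertices visited most often from a given start vertex are treated as its most important neighbors. The sketch below illustrates that general idea only. The function name `top_neighbors`, the adjacency-list format, and the parameter values are assumptions made for this example, not details of Pinterest's system.

```python
import random
from collections import Counter

def top_neighbors(graph, start, k, num_steps=10_000, restart_prob=0.3, seed=0):
    """Approximate personalized PageRank from `start` with a random walk with restart.

    `graph` maps each vertex to a list of adjacent vertices.
    Returns up to k vertices ordered by how often the walk visited them.
    """
    rng = random.Random(seed)
    visits = Counter()
    current = start
    for _ in range(num_steps):
        # Jump back to the start vertex with probability restart_prob,
        # or when the walk reaches a vertex with no outgoing edges.
        if rng.random() < restart_prob or not graph[current]:
            current = start
            continue
        current = rng.choice(graph[current])
        if current != start:
            visits[current] += 1
    return [vertex for vertex, _ in visits.most_common(k)]

# Toy graph with made-up vertex names.
graph = {
    "pin_a": ["pin_b", "pin_c"],
    "pin_b": ["pin_a", "pin_c", "pin_d"],
    "pin_c": ["pin_a", "pin_b"],
    "pin_d": ["pin_b", "pin_e"],
    "pin_e": ["pin_d"],
}
print(top_neighbors(graph, "pin_a", k=2))
```

Because the walk keeps returning to the start vertex, nearby and well-connected vertices accumulate the most visits, so the selected neighborhood is not limited to direct neighbors and can be capped at a fixed size `k` regardless of vertex degree.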

Combinatorial optimization

Combinatorial optimization solutions are at the heart of many important products in finance, logistics, energy, science, and electronics design. Most of these problems are described using graphs, and over the last century a lot of effort has gone into creating more efficient algorithmic solutions. However, the machine learning revolution has given us new, compelling approaches.

The Google Brain team used graph neural networks to optimize the power consumption, area and performance of chips for new hardware such as the Google TPU. A computer chip can be represented as a graph of memory and logic components, each with its own coordinates and type. Determining the location of each component while respecting constraints on placement density and routing congestion is still a laborious process, a work of art for electrical engineers. Combining the graph model with policy learning and reinforcement learning makes it possible to find optimized chip placements and to create better-performing chips than those designed by humans.

Another approach integrates the machine learning model into existing solvers. For example, a team led by M. Gasse proposed a graph network that learns branch-and-bound variable selection policies, a critical operation in mixed-integer linear program (MILP) solvers. The learned representations aim to minimize the solver's running time and demonstrate a good trade-off between inference speed and solution quality.

In a more recent collaboration between DeepMind and Google, graph networks are used in two key subproblems solved by MILP solvers: joint variable assignment and bounding the objective value. The proposed neural network-based approach turned out to be 2-10 times faster than existing solvers on huge datasets, including those used by Google for production packing and planning systems. If you are interested in this area, we can recommend a couple of recent surveys (1, 2) that discuss the combination of graph neural networks, machine learning, and combinatorial optimization in much more depth.

Computer vision

Objects in the real world are deeply interconnected, so images of these objects can be successfully processed using graph neural networks. For example, the content of an image can be described by a scene graph: a set of objects in a picture together with their relationships. Scene graphs are used to retrieve images, understand and reason about their content, generate captions, answer visual questions, and generate images. These graphs can greatly improve the performance of your models.

One of Facebook's papers describes how objects from the popular COCO dataset can be placed on a canvas with given positions and sizes, and how a scene graph is created from this information. The graph neural network uses it to compute embeddings of the objects, from which a convolutional neural network in turn creates object masks, bounding boxes and outlines. End users can simply add new nodes to the graph (by defining the relative position and size of the nodes) so that the neural networks generate images containing these objects.

Another source of graphs in computer vision is the matching of two related images. This is a classic problem that used to be solved with hand-crafted descriptors. Magic Leap, a 3D graphics company, created an architecture based on graph neural networks called SuperGlue. This architecture allows real-time video matching for 3D scene reconstruction, place recognition, and simultaneous localization and mapping (SLAM). SuperGlue consists of an attention-based graph neural network. It learns representations of the image keypoints, which are then passed to an optimal transport layer for matching. On modern GPUs the model can run in real time and can be integrated into SLAM systems. More details about the combination of graphs and computer vision are described in these studies: 1, 2.

Physics and chemistry

Representing interactions between particles or molecules as graphs and predicting the properties of new materials and substances with graph neural networks makes it possible to solve various problems in the natural sciences. For example, through the Open Catalyst project, Facebook and CMU are looking for new ways to store renewable solar and wind energy. One possible solution is to convert this energy through chemical reactions into other fuels, say, hydrogen. But this requires new catalysts that drive the chemical reactions at a high rate, and the methods known today, such as density functional theory (DFT), are very expensive. The authors of the project posted the largest collection of catalysts, DFT relaxations and baselines for graph neural networks. The developers hope to find new low-cost molecular simulations that complement the current expensive simulations, which run for days, with efficient estimates of energy and intermolecular forces that are computed within milliseconds.

Researchers at DeepMind have also used graph neural networks to emulate the dynamics of complex particle systems such as water and sand. By predicting the relative motion of each particle at each step, one can plausibly recreate the dynamics of the entire system and learn more about the laws governing this motion. For example, this is how they are trying to solve the most interesting of the unsolved problems in solid state theory: the glass transition. Graph neural networks not only make it possible to emulate the dynamics during the transition, but also help to better understand how particles affect each other depending on time and distance.

The American physics laboratory Fermilab is working on using graph neural networks at the Large Hadron Collider to process millions of data samples and find those that may be associated with the discovery of new particles. The authors want to implement graph neural networks in field-programmable gate arrays (FPGAs) and integrate them into the data acquisition processors, so that graph neural networks can be used remotely from anywhere in the world. Read more about their application in high energy physics in this study.

Drug development

Pharmaceutical companies are actively seeking new methods of drug development, competing fiercely with each other and spending billions of dollars on research. In biology, graphs can be used to represent interactions at different levels. For example, at the molecular level, edges between nodes denote bonds between atoms in a molecule, or interactions between amino acid residues in a protein. On a larger scale, graphs can represent interactions between proteins and RNA or metabolic products. Depending on the level of abstraction, graphs can be used for target identification, molecular property prediction, high-throughput screening, new drug design, protein design, and drug repurposing.

Probably the most promising result of the use of graph neural networks in this area is the work by researchers at MIT, published in Cell in 2020. They applied a deep learning model called Chemprop, which predicted the antibiotic properties of molecules: inhibition of the growth of E. coli. After training on just 2,500 molecules from an FDA-approved library, Chemprop was applied to a larger dataset, including the Drug Repurposing Hub, which contained the molecule Halicin, renamed in honor of the AI HAL 9000 from the film "2001: A Space Odyssey". It is noteworthy that until then Halicin had been studied only in relation to the treatment of diabetes, because its structure is very different from that of known antibiotics. But experiments in vitro and in vivo have shown that Halicin is a broad-spectrum antibiotic. Extensive comparison with strong neural network baselines highlighted the importance of the features learned by graph neural networks in discovering Halicin. Beyond the practical role of this work, the Chemprop architecture is interesting in its own right: unlike many graph neural networks, it contains 5 layers and 1600 hidden dimensions, which is much more than the typical parameters of graph neural networks for such tasks. Hopefully, this is only one of many AI discoveries still to come in medicine. Read more about this direction here and here.

When graph neural networks became a trend

Graph embeddings peaked in 2018, when the structural embedding and graph neural network models proposed in 2016 had been tested in many practical applications and had shown high performance, the most famous example being the PinSage model used in the recommendations of the social network Pinterest. Since then, research on this topic has grown exponentially, and there are more and more applications in areas where earlier methods could not effectively take the relationships between objects into account. It is noteworthy that machine learning automation and the search for new efficient neural network architectures have also received a new impetus thanks to graph neural networks.

Are they a trend in Russia too?

Unfortunately, Russia in most cases lags behind modern research in the field of artificial intelligence. The number of articles at international conferences and in leading journals is an order of magnitude smaller than the number of articles by scientists from the United States, Europe and China, and financial support for research in new areas meets resistance in the ossified environment of academics who are stuck in approaches from the last century and award grants on the principle of gerontocratic nepotism rather than real achievements. As a result, at leading conferences such as TheWebConf, ICDM, WSDM, KDD and NIPS, the names of Russian scientists are mainly affiliated with Western universities, which reflects the brain drain trend as well as serious competition from countries that are advanced in AI development, especially China.

If you look at computer science conferences with the highest A* rating according to CORE, for example conferences on high performance computing, the average grant size behind articles accepted to the main track is $1 million, which is 15-30 times more than the largest programs of the Russian Science Foundation. In such conditions, research carried out in large companies with R&D divisions is, in fact, the only driver of the search for new approaches based on graph neural networks.

In Russia, the theory of graph neural networks is being studied at the Higher School of Economics under my leadership, and there are also groups at Skoltech and MIPT. Applied research is carried out at ITMO and Kazan Federal University, as well as at Sber's AI Laboratory and in R&D projects at JetBrains, Mail.ru Group and Yandex.

On the world stage, the drivers are Twitter, Google, Amazon, Facebook, Pinterest.

Fleeting or long-term trend?

Like all trends, the fashion for graph neural networks has given way to transformers, with their large architectures that describe all possible dependencies in unstructured data but produce models that cost millions of dollars to train and are available only to mega-corporations. Graph neural networks have not only successfully taken their place as a standard for machine learning on structured data, but have also proven to be an effective means of building structural attention in related fields, showing high efficiency in multiple-instance learning and metric learning problems. I am sure that it is thanks to graph neural networks that we will see new discoveries in materials science, pharmacology and medicine. Perhaps there will be new, more efficient models for big data that are able to transfer knowledge between different graph datasets. Models will overcome the problems of applicability to graphs whose structure runs contrary to the similarity of features. In general, though, this area of machine learning has turned into an independent science, and the time to dive into it is right now: this is a rare chance to participate in the development of a new field, both in science and in industrial applications.

If you want to improve your skills in machine learning and study graph neural networks, we are waiting for you in our MADE Big Data Academy.