Who is this article for?

Have you ever been handed a program whose dependency tree looks like a tangled circuit board?

What dependency management looks like

No problem, you think; surely the developer has kindly provided an installation script to get everything working. So you run the script, and the shell immediately fills with error messages. "It worked on my machine" is the usual answer when you ask the developer for help.

Docker solves this problem by providing almost trivial portability to dockerized applications. In this article, I'll show you how to quickly dockerize your Python applications so they can be easily shared with anyone who has Docker.

In particular, we'll look at scripts that need to run as a background process.

GitHub and Docker repositories

If you prefer to read the code directly, see the GitHub and Docker repositories where this code is hosted.

But ... why Docker?

Containerization can be compared to packing your software into a shipping container: the container gives the shipping company (in our case, any host machine) a standard interface for handling the software, whatever is inside.

Application containerization is widely regarded as the gold standard for portability.

General Docker / Containerization Framework

Containerization (especially with Docker) opens up tremendous possibilities for your software. A properly containerized (for example, dockerized) application can be deployed at scale via Kubernetes or a cloud provider's scale sets. And yes, we will talk about this in the next article.

Our application

There won't be anything too complicated in it: we are again working with a simple script that monitors changes in a directory (since I work on Linux, this is /tmp). Logs will be pushed to stdout, which is important if we want them to appear in docker logs (more on that later).
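As a minimal sketch of the stdout point (this is not the code from main.py; the logger name and message format are my own assumptions), the standard logging module can be pointed at stdout like this. A StreamHandler flushes after every record, so lines should not sit in a buffer before reaching the container's output.

```python
import logging
import sys


def make_stdout_logger(name="directory-monitor"):
    """Build a logger that writes to stdout (the name is a hypothetical choice)."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    # Attach the handler only once, even if this function is called again.
    if not logger.handlers:
        handler = logging.StreamHandler(sys.stdout)
        handler.setFormatter(
            logging.Formatter("%(asctime)s %(levelname)s %(message)s")
        )
        logger.addHandler(handler)
    return logger


log = make_stdout_logger()
log.info("watching %s", "/tmp")
```

If you use plain print instead, passing flush=True or setting the PYTHONUNBUFFERED environment variable serves the same purpose, because Python buffers stdout when it is not attached to a terminal.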

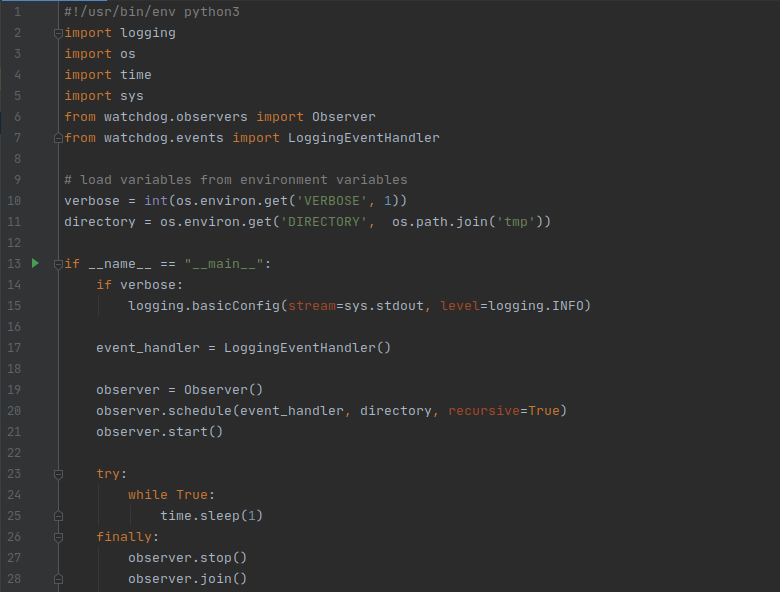

main.py: A simple file monitoring application

This program will run indefinitely.
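The actual main.py is only shown as an image, so here is a rough stand-in rather than the original code. It assumes simple polling with the standard library only (the original relies on one third-party dependency), and the function names snapshot, diff_snapshots and monitor are hypothetical.

```python
import os
import time


def snapshot(directory):
    """Map each entry name in `directory` to its last-modified time."""
    result = {}
    with os.scandir(directory) as entries:
        for entry in entries:
            try:
                result[entry.name] = entry.stat().st_mtime
            except FileNotFoundError:
                # The entry vanished between listing and stat; skip it.
                continue
    return result


def diff_snapshots(old, new):
    """Return (created, deleted, modified) name lists between two snapshots."""
    created = sorted(new.keys() - old.keys())
    deleted = sorted(old.keys() - new.keys())
    modified = sorted(n for n in new.keys() & old.keys() if new[n] != old[n])
    return created, deleted, modified


def monitor(directory, interval=1.0):
    """Poll `directory` forever and print every change to stdout."""
    previous = snapshot(directory)
    while True:
        time.sleep(interval)
        current = snapshot(directory)
        created, deleted, modified = diff_snapshots(previous, current)
        changes = (("created", created), ("deleted", deleted), ("modified", modified))
        for kind, names in changes:
            for name in names:
                # flush=True so the line is not held back by stdout buffering.
                print(f"{kind}: {os.path.join(directory, name)}", flush=True)
        previous = current
```

To run it the way the container would, call monitor(os.environ.get("DIRECTORY", "/tmp")) at the bottom of the file. Polling is the simplest approach to reason about; an event-based watcher reacts faster but needs an extra library.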

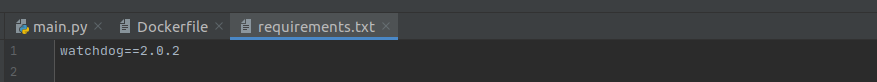

As usual, we have a requirements.txt file with dependencies, this time with only one:

requirements.txt

Create Dockerfile

In my previous article, we scripted the installation process in a Makefile, which made it very easy to share. This time we'll do something similar, but in Docker.

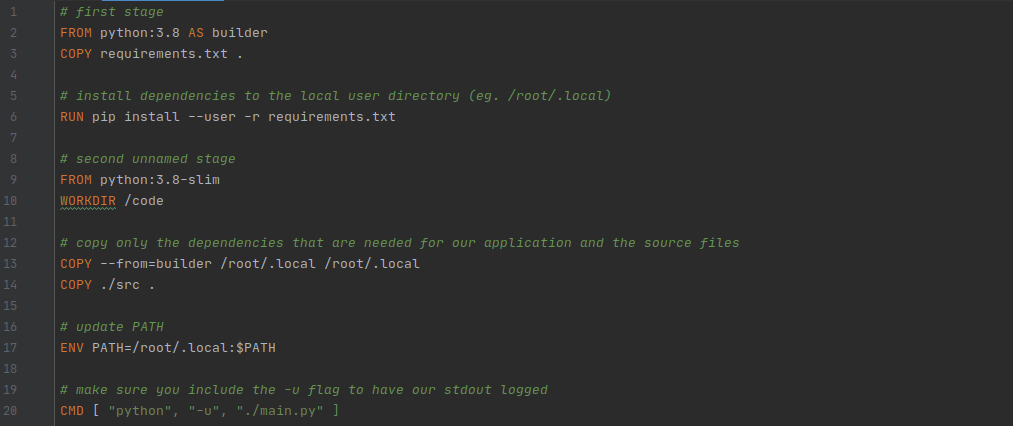

Dockerfile

We don't need to go into the details of how a Dockerfile is structured and how it works; there are more detailed tutorials about that.

In short, the Dockerfile does the following: we start from a base image containing the full Python interpreter and its packages, then install the dependencies (line 6), start a new minimal image (line 9), and copy the dependencies and code into it (lines 13-14). This is called a multi-stage build; in our case it reduced the size of the finished image from 1 GB to 200 MB. Finally, we set an environment variable (line 17) and the command to execute (line 20).

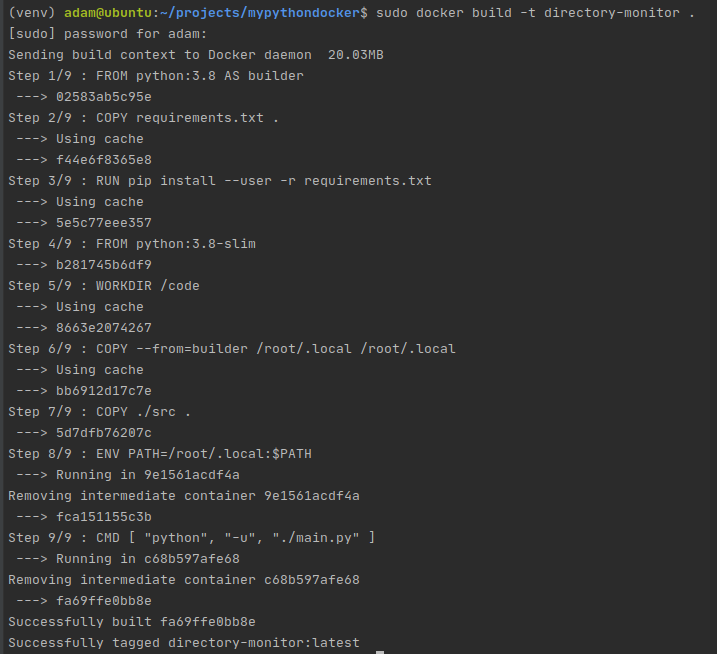

Building the image

Having finished with the Dockerfile, we simply run the following command from our project directory:

sudo docker build -t directory-monitor .

Putting together the image

Running the image

Once the build completes, the fun begins.

One of the great things about Docker is that it provides a standardized interface. If you design your program well, then when you hand it to someone else you only need to tell them to learn Docker (if they don't know it yet), rather than teaching them the inner workings of your program.

Want to see what I mean?

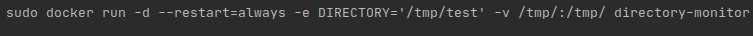

The command to run the program looks like this:

docker run -d --restart=always -e DIRECTORY='/tmp/test' -v /tmp/:/tmp/ directory-monitor

There is a lot to explain here, so let's break it down into parts:

-d - run the container in detached (background) mode rather than in the foreground

--restart=always - if the container crashes, Docker restarts it. We can recover from crashes, hurray!

-e DIRECTORY='/tmp/test' - pass the directory to monitor through an environment variable. (We could also design our Python program to read command-line arguments and pass the tracked directory that way.)

-v /tmp/:/tmp/ - mount the host's /tmp directory at /tmp inside the container. This is important: any directory we want to track MUST be visible to the processes inside the container, and the mount is what makes it visible.

directory-monitor - the name of the image to run
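The alternative mentioned for the -e flag, reading the directory from command-line arguments, could look roughly like the sketch below. It is an illustration and not part of the original main.py: the --directory option and both function names are hypothetical, and the /tmp fallback is an assumption based on the directory used in this article.

```python
import argparse
import os


def resolve_directory(argv=None, environ=None):
    """Pick the directory to watch: CLI option first, then DIRECTORY, then /tmp."""
    environ = os.environ if environ is None else environ
    parser = argparse.ArgumentParser(description="Monitor a directory for changes.")
    parser.add_argument(
        "--directory",  # hypothetical option name
        default=environ.get("DIRECTORY", "/tmp"),
        help="directory to monitor (defaults to $DIRECTORY, then /tmp)",
    )
    # argv=None makes argparse read sys.argv[1:], as in a normal script run.
    return parser.parse_args(argv).directory


def directory_is_visible(path):
    """Return True if `path` exists as a directory from this process's view.

    Inside a container this is False when the host directory was not mounted
    with -v (and nothing exists at that path in the image).
    """
    return os.path.isdir(path)
```

With this in place, the tracked directory could be appended after the image name in the run command instead of being passed with -e, provided the image starts the script in a way that forwards extra arguments to it. Checking directory_is_visible at startup gives a clear error early when the -v mount was forgotten.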

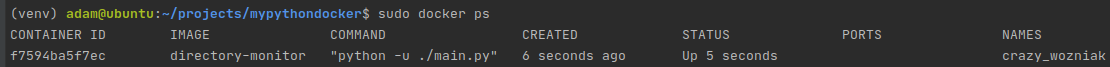

After launching the image, you can check its state with the docker ps command:

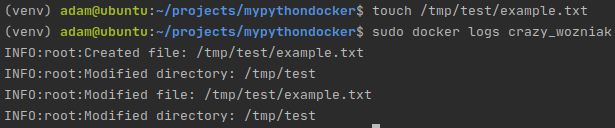

Docker ps output

Docker generates crazy names for running containers because people don't remember hash values very well. In this case, the name crazy_wozniak refers to our container.

Now, since we are tracking /tmp/test on my local machine, if I create a new file in this directory, it should show up in the container logs:

Docker logs demonstrate that the application is working correctly

That's it, your program is now dockerized and running on your machine. Next, we need to solve the problem of transferring the program to other people.

Share the program

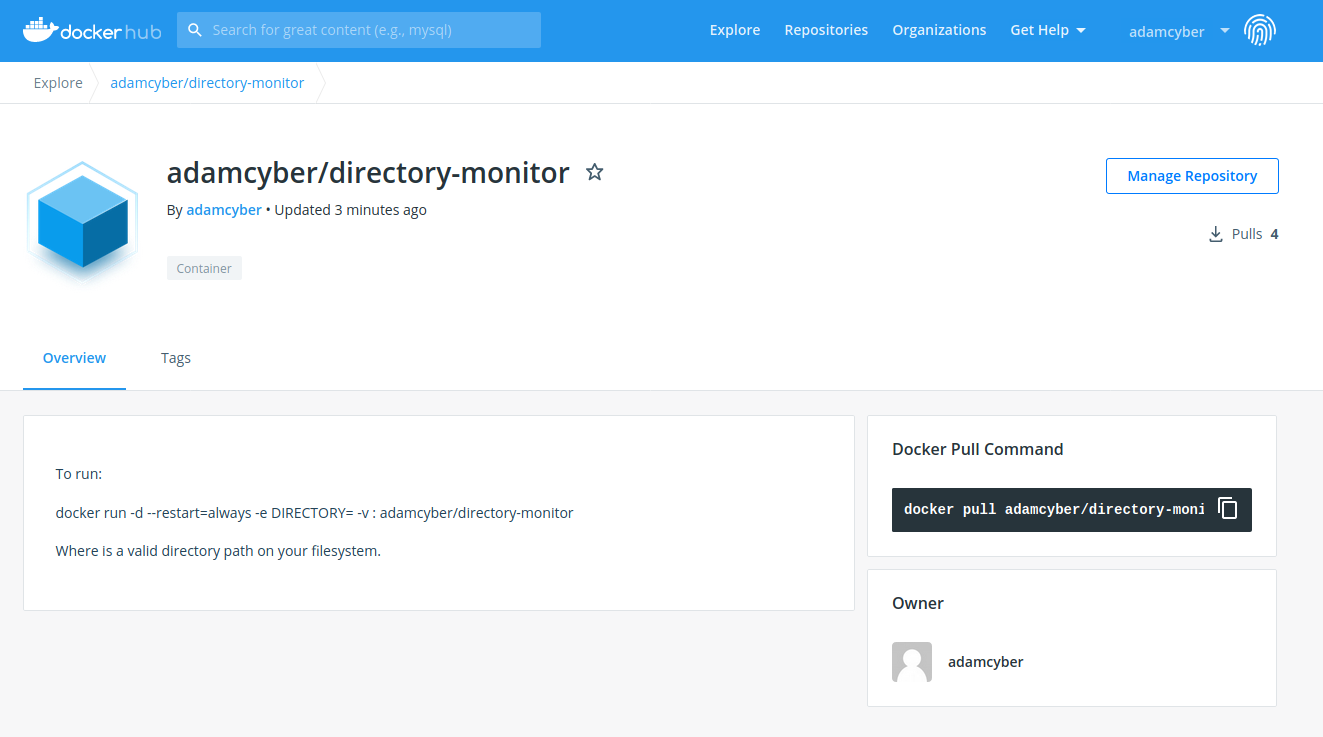

Your dockerized program can be useful to your colleagues, your friends, your future self, and anyone else in the world, so we need to make it easy to distribute. The ideal solution for this is Docker Hub.

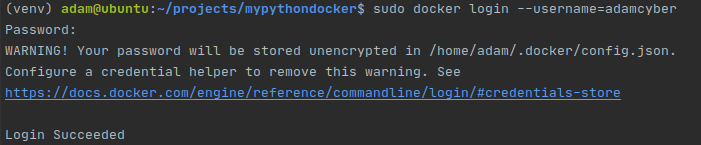

If you don't have an account yet, register, and then log in from the CLI:

Log in to Dockerhub

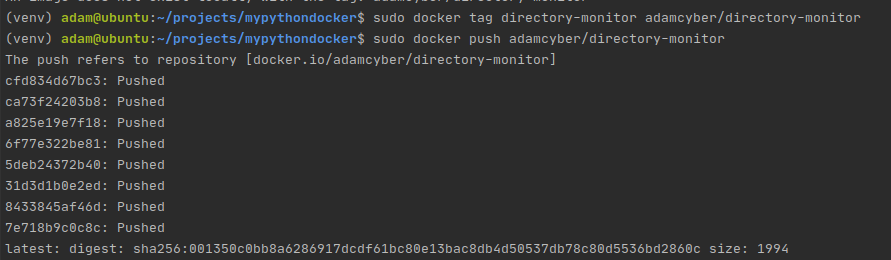

Next, tag the newly created image and push it to your account.

Tag and push the image

Now the image is in your docker hub account.To

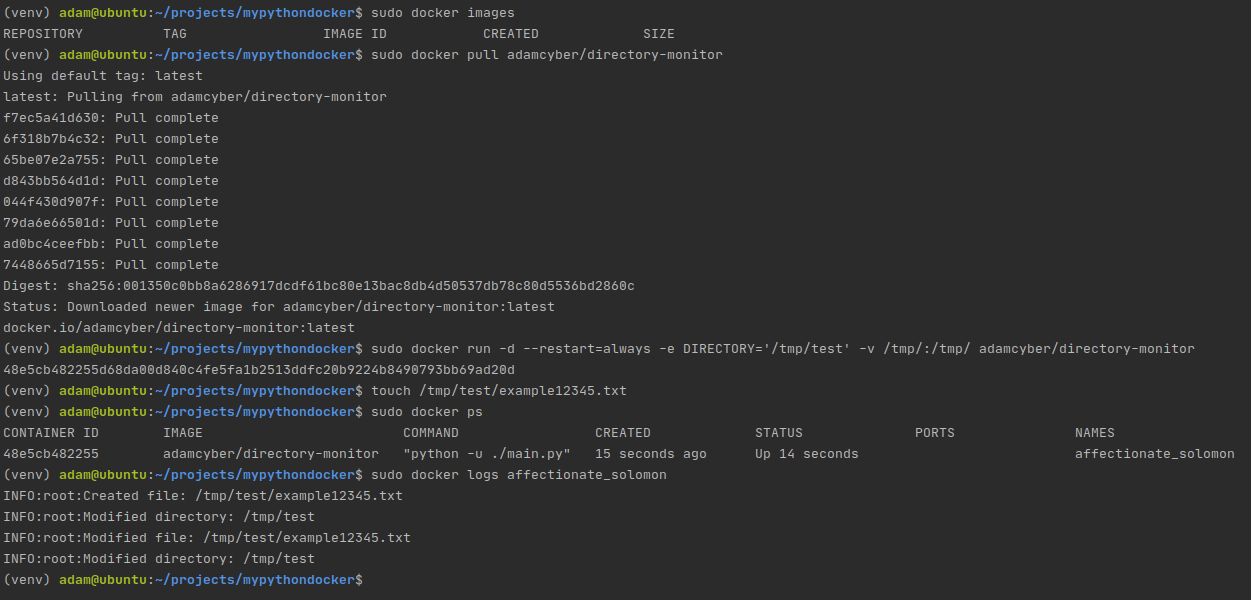

make sure everything works, let's try to pull this image and use it in end-to-end testing of all the work we have done:

Testing our docker image end-to-end.

This entire process took only 30 seconds.

What's next?

Hopefully I've been able to convince you of the amazing practicality of containerization. Docker will stay with us for a long time, and the sooner you master it, the more benefits you will get.

Docker is all about reducing complexity. Our example was a simple Python script, but you can use this tutorial to build images of arbitrary complexity, with dependency trees that resemble spaghetti, and none of those difficulties will affect the end user.

