Another, perhaps the most curious, example is the 2014 story of the French railway operator SNCF and its huge order for 2,000 trains. The team that drew up the technical requirements personally measured the platforms at several dozen stations. Wanting to increase comfort, they made the trains as wide as possible, right up to the platform edge. But they took their measurements around Paris, and only during testing did they learn that at many regional stations the platforms are closer to the tracks. The price of the mistake was the modernization of the entire infrastructure for hundreds of millions of euros. If only they had had MDM with station characteristics...

Add to this a huge number of exchange and banking errors, where incorrect payment details, figures or the value of shares being placed led to billions of dollars in losses or even bankruptcy.

This article continues the article "Master data and integration" and covers in more detail the quality control of data, primarily master data. It will be of particular interest to IT executives, architects and integrators, as well as to anyone who works in a fairly large company.

Contents

1. Dictionary, types of business data: master data, regulatory reference information, operational data.

2. Briefly about what errors are.

3. Architecture of DQS solutions.

4. Technical and non-technical methods of dealing with errors:

4.1. NSI.

4.2. Master data.

4.3. Operational data.

5. What to do when none of the above has helped - implement DQS.

6. And how to share responsibility?

If you are already familiar with the terminology and problems, skip straight to Part 3, on the DQS architecture.

1. Dictionary, types of business data

For a couple of decades now, IT evangelists have been telling us that data is the new oil and that every business depends more and more on the information it possesses. Analytics and data departments are appearing not only in IT companies but also in industries far removed from IT.

The examples of General Electric and Boeing have already become a cliché: they create "digital" subsidiaries and earn money on the huge amount of information collected from the owners of their equipment, such as aircraft, turbines and power plants. This data allows them to increase equipment reliability, predict possible failures (saving a great deal on potential damage) and, ultimately, simply save people's lives!

The amount of data keeps growing, and it grows nonlinearly with the business, outpacing it. Every growing company, at a certain stage of its development (roughly level 6-7 on the scale from the previous article), runs into problems with incorrect data, and there are always a few cases where these errors turn out to be quite costly.

The typical data growth curve is almost always exponential.

In the course of business, three types of data are of particular importance to the company:

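- regulatory reference information (NSI): classifiers and reference data maintained outside the company, primarily by the state, such as currency exchange rates;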

- master data: the company's core entities, such as customers and counterparties, employees and products;

- operational (aka transactional) data: the fact that a specific product was sold to a specific client, invoices and acceptance certificates, completed courses, courier orders and taxi rides, depending on what your company does.

If NSI is the supporting skeleton and master data the veins and arteries, then operational data is the blood flowing through them.

The types of business data need to be distinguished because each calls for its own approach to handling errors; more on this below.

2. Briefly about what errors are

Errors are inevitable: they arise always and everywhere and seem to reflect the chaotic nature of the universe itself. You can consider them something bad and get upset about them, but think about it: errors are at the heart of evolution! Each new species is the previous one with a few random errors in its DNA; it is just that the consequences of these errors turned out to be useful under certain conditions.

The main types of errors a business suffers from:

- the human factor: all kinds of typos, mixed-up fields and misplaced information, forgotten or accidentally skipped actions and steps during data entry (do you also have 50 fields in your customer card?). Statistically, this is the most likely type of error, so its frequency and impact may turn out to be the greatest. Fortunately, the largest number of methods have been invented to combat it;

- incompleteness: some of the information a process needs is simply missing;

- deliberate errors. An employee deliberately transferred several million to himself and disappeared. This is, of course, an extreme example, a crime, but there are many steps on the way to it. For example, a customer in CRM is given an undeservedly high discount, or the price of an item is set below cost.

The third type is the domain of the information security service, which has its own methods; here we will work in depth with the human factor and incompleteness.

3. Architecture of DQS solutions

DQM: data quality management.

DQS: data quality [management] system.

Before talking about data quality management systems as such (DQS is not so much a specific piece of software as an approach to working with data), let me describe the IT architecture.

Usually, by the time the issue of data quality management arises, the IT landscape is as follows:

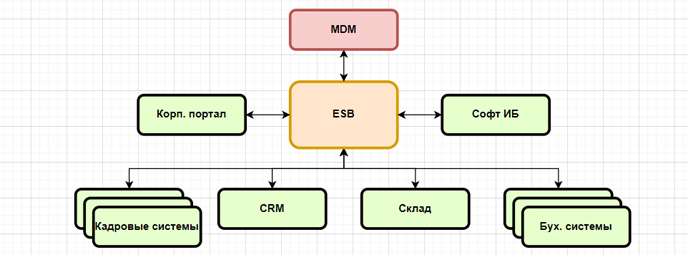

(diagram from the previous article)

Here MDM is the system for maintaining master data and NSI, and ESB is the single enterprise service bus. A common situation is that not all data and information flows between systems have yet been brought into a common loop, and some systems still communicate with each other directly. This needs to be worked out, otherwise a number of processes will remain a "blind spot" for DQS.
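
A simple way to find such blind spots is to keep an inventory of integration flows and mark whether each one passes through the bus. The sketch below assumes such a hand-maintained inventory; the `Flow` class and the system names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Flow:
    """One integration flow between two systems (hypothetical inventory entry)."""
    source: str
    target: str
    via_esb: bool  # True if the exchange goes through the enterprise service bus


def blind_spots(flows: list[Flow]) -> list[Flow]:
    """Flows that bypass the bus and therefore stay invisible to a DQS attached to it."""
    return [flow for flow in flows if not flow.via_esb]


inventory = [
    Flow("CRM", "MDM", via_esb=True),
    Flow("MDM", "ERP", via_esb=True),
    Flow("ERP", "Billing", via_esb=False),  # legacy point-to-point link
]

for flow in blind_spots(inventory):
    print(f"{flow.source} -> {flow.target}: not covered by DQS")
```

Each flow reported here is a candidate for rerouting through the ESB before DQS can see it.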

Traditionally, DQS is first connected to the MDM system, since managing the quality of master data is considered a higher priority than managing operational data. Later, however, it is included in the common data bus as one of the process stages, or exposes its "services" as an API. In concrete figures, the volume of data differs roughly tenfold between the first and the second scheme, or by one level on the scale from the previous article.
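
To make the two connection schemes concrete, here is a minimal sketch of DQS as one reusable set of rules: the same `check` call can first be made by MDM before saving a record and later be placed in a bus route or behind an API. The class, rule and field names are hypothetical and do not describe any particular product.

```python
from typing import Callable, Optional

# A rule takes a record and returns an error message, or None if the record passes.
Rule = Callable[[dict], Optional[str]]


def required(field: str) -> Rule:
    """Build a rule that rejects a missing or blank field."""
    def check(record: dict) -> Optional[str]:
        value = record.get(field)
        if value is None or str(value).strip() == "":
            return f"{field} is required"
        return None
    return check


class DataQualityService:
    """Minimal DQS: rules grouped by entity type, applied to incoming records."""

    def __init__(self) -> None:
        self.rules: dict[str, list[Rule]] = {}

    def register(self, entity: str, rule: Rule) -> None:
        self.rules.setdefault(entity, []).append(rule)

    def check(self, entity: str, record: dict) -> list[str]:
        """Return all violations; an empty list means the record may pass."""
        errors = []
        for rule in self.rules.get(entity, []):
            message = rule(record)
            if message is not None:
                errors.append(message)
        return errors


dqs = DataQualityService()
dqs.register("counterparty", required("name"))
dqs.register("counterparty", required("tax_id"))
print(dqs.check("counterparty", {"name": "ACME", "tax_id": ""}))
```

Keeping the rules in one place is what lets the service move from the MDM-only scheme to the bus scheme without rewriting them.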

4. Technical and non-technical methods of dealing with errors

The next sentence contains the saddest thought of this article: there is no silver bullet. There is no button or system that you install and the errors disappear. In general, there is no simple and unambiguous solution to this complex problem. What works great for one type of data or dataset will be useless for another.

The good news, however, is that the set of technical and organizational methods described below will dramatically reduce errors. Companies implementing the DQM approach reduce the number of detected errors by 50-500 times. The specific figure depends on a reasonable balance between effect, cost and usability.

4.1. Reference information.

For regulatory reference information (essentially, state classifiers) there is a maximally categorical solution, and it is universal: never maintain reference data yourself! Never, under any circumstances!

Reference data must always, without exception, be loaded from external sources; your main task is to implement this loading and set up prompt monitoring for failures.
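
A minimal sketch of such loading with failure monitoring, assuming the official source is wrapped in a `fetch` function you supply (stubbed here) and that alerts go to any callback. `ReferenceTable`, `refresh` and `is_stale` are hypothetical names; the idea is that a failed load keeps the last good snapshot and raises an alert instead of inviting someone to type the values in by hand.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta
from typing import Callable, Optional

Fetch = Callable[[date], dict]  # returns {code: value} from the official source


@dataclass
class ReferenceTable:
    """Local copy of an external reference; refreshed by the loader, never edited by hand."""
    name: str
    rows: dict = field(default_factory=dict)
    loaded_for: Optional[date] = None


def refresh(table: ReferenceTable, fetch: Fetch, day: date,
            alert: Callable[[str], None]) -> bool:
    """Load a fresh snapshot; on any failure keep the previous data and raise an alert."""
    try:
        rows = fetch(day)
        if not rows:
            raise ValueError("empty snapshot")
        if any(value <= 0 for value in rows.values()):
            raise ValueError("non-positive value in snapshot")
    except Exception as exc:  # network errors, parse errors, failed sanity checks
        alert(f"{table.name}: load for {day} failed: {exc}")
        return False
    table.rows = dict(rows)
    table.loaded_for = day
    return True


def is_stale(table: ReferenceTable, today: date,
             max_age: timedelta = timedelta(days=1)) -> bool:
    """Monitoring check: stale if never loaded or older than max_age."""
    return table.loaded_for is None or today - table.loaded_for > max_age


def official_source(day: date) -> dict:
    return {"USD/RUB": 75.0}  # illustrative number, not a real rate


def broken_source(day: date) -> dict:
    return {}  # simulates an outage or an empty response


alerts: list[str] = []
rates = ReferenceTable("exchange_rates")
refresh(rates, official_source, date(2021, 4, 20), alerts.append)
refresh(rates, broken_source, date(2021, 4, 22), alerts.append)
print(rates.rows)                          # the previous snapshot is kept
print(alerts)
print(is_stale(rates, date(2021, 4, 22)))  # True: the data is two days old
```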

As a result of these measures, nobody in your company should ever even think of entering, say, yesterday's dollar/ruble exchange rate manually. It should only ever be selected from reference tables loaded from official sources.

This point is so categorical because implementing it removes almost all errors in reference data. While errors in master data cannot be eliminated completely, in NSI this approach can bring the number of errors down to one or two a year, and those will no longer be your mistakes but errors in the state data.

4.2. Master data

The main strategy for master data may sound paradoxical: turn it into reference data!
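
One way to express this strategy in code: the core attributes of a master record are pulled from an authoritative external registry by key, and manual input is limited to company-specific attributes. In this sketch a plain dict stands in for such a registry; `create_employee`, `person_id` and the field names are hypothetical.

```python
from typing import Callable, Optional

# Attributes owned by the authoritative source (hypothetical set): never typed in by hand.
SOURCE_OWNED = ("full_name", "birth_date")

Lookup = Callable[[str], Optional[dict]]


def create_employee(person_id: str, manual: dict, lookup: Lookup) -> dict:
    """Build an employee record whose core attributes come from an external registry."""
    typed_by_hand = sorted(set(manual) & set(SOURCE_OWNED))
    if typed_by_hand:
        raise ValueError(f"must not be entered manually: {typed_by_hand}")
    official = lookup(person_id)
    if official is None:
        raise LookupError(f"{person_id} not found in the authoritative source")
    record = {"person_id": person_id}
    record.update({key: official[key] for key in SOURCE_OWNED})
    record.update(manual)  # only company-specific attributes, e.g. department
    return record


# A dict stands in for the external registry in this sketch.
registry = {"123": {"full_name": "Ivan Petrov", "birth_date": "1990-01-31"}}
print(create_employee("123", {"department": "Sales"}, registry.get))
```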

A natural continuation of this story is electronic HR document management: electronic employment records, electronic sick-leave certificates and so on, which significantly reduce the workload of HR officers. Ultimately, this allows one HR officer to serve 1000+ employees instead of 200-300.

In addition, all employees automatically receive electronic signature keys and can use them both in internal business processes and in document exchange with clients.

Information about debts, criminal records and the like is openly available via the APIs of the corresponding government services; integrating with them is extremely simple and will allow your company to close a large number of risks at once.

4.3. Operational data

There are more approaches here. The first is similar to the previous one: connect external sources of information.
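
As an illustration, here is a sketch of cross-checking an operational record (an invoice) against data loaded from external sources instead of trusting what was typed in. The invoice fields, the rates table and the registry snapshot are all hypothetical stand-ins with made-up values.

```python
from decimal import Decimal

# Stand-ins for data loaded from external sources (illustrative values only).
OFFICIAL_RATES = {("USD", "2021-04-20"): Decimal("75.50")}
REGISTRY_STATUS = {"7700000001": "active", "7700000002": "liquidated"}


def check_invoice(invoice: dict) -> list[str]:
    """Cross-check an operational record against external data instead of trusting input."""
    errors = []
    if REGISTRY_STATUS.get(invoice["counterparty_id"]) != "active":
        errors.append("counterparty is not active in the external registry")
    rate = OFFICIAL_RATES.get((invoice["currency"], invoice["date"]))
    if rate is None:
        errors.append("no official rate for this currency and date")
    else:
        # Recompute the ruble amount at the official rate, rounded to kopecks.
        expected = (invoice["amount"] * rate).quantize(Decimal("0.01"))
        if invoice["amount_rub"] != expected:
            errors.append(f"amount_rub {invoice['amount_rub']} differs from {expected}")
    return errors


invoice = {"counterparty_id": "7700000002", "currency": "USD", "date": "2021-04-20",
           "amount": Decimal("100.00"), "amount_rub": Decimal("7500.00")}
print(check_invoice(invoice))
```

`Decimal` is used rather than `float` so that the monetary comparison is exact.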

Of course, it will not be possible to quickly find the necessary sources of information for every process; search and analysis will be required. The sources may also turn out to be paid, in which case the pros and cons have to be weighed, but the approach works and has been repeatedly tested in practice.

Information (data) is the new oil, and every state strives to obtain as much information as possible about its subjects, including businesses, and about all the processes they take part in.

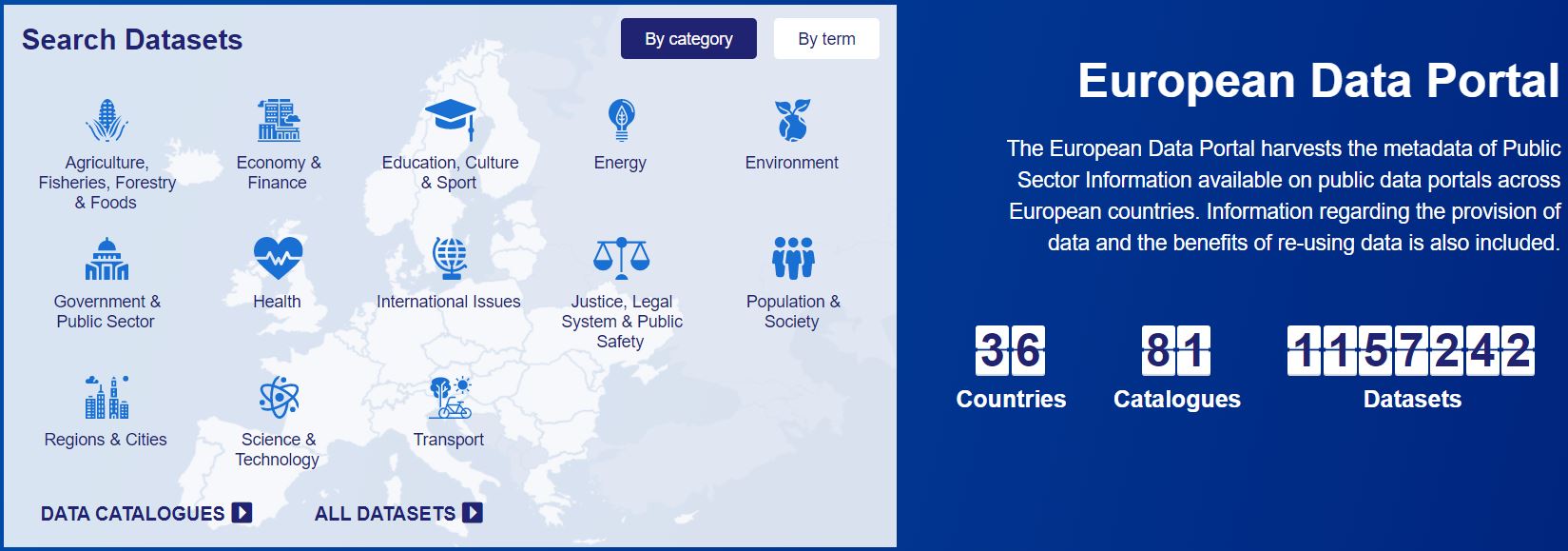

It is hard even to imagine what information the state collects. I can only say that, at the time of writing, about 20 thousand datasets are published on the Russian open data portal. And Russia is only at the beginning of this path: the similar European Union portal offers more than a million open datasets!

www.europeandataportal.eu/en

"So where is DQS in all this?" an attentive reader will ask.

Nowhere, so far.

All of the above are, in fact, standard tools and methods for organizing business processes with a minimum number of errors.

5. What to do when none of the above has helped - implement DQS

Sun Tzu teaches that the best battle is the one avoided.

The situation with the implementation of DQS is somewhat similar.

Your task is to turn as much of your master data, and even operational data, into reference data as possible; in some industries, especially in services, this is almost 100% achievable. This is most true of banking, which is why business processes there are far more automated than in many other sectors.

Nevertheless, if the battle cannot be avoided, you need to prepare for it as thoroughly as possible.

At what level of company development should DQS be introduced? As a DQM process, at level 4-5 (earlier than MDM systems!); as an organizationally separate function, at level 7-8.

5.1. DQM as a process

If your company has an accounting or HR system, you already have a DQM process in some form. All these systems have a built-in set of rules for input data: for example, a mandatory, strictly formatted date of birth for employees and a mandatory name for counterparties.
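
Rules of this kind are easy to express as small functions. The sketch below assumes a DD.MM.YYYY date format and adds a coarse "no more than a hundred years ago" plausibility check; the function names and messages are hypothetical.

```python
from datetime import date, datetime


def check_birth_date(text: str, today: date) -> list[str]:
    """Strict DD.MM.YYYY format plus plausibility (coarse, year-based 100-year limit)."""
    try:
        born = datetime.strptime(text, "%d.%m.%Y").date()
    except ValueError:  # wrong layout or an impossible date such as 31.02
        return ["birth date must be a valid date in DD.MM.YYYY format"]
    if born > today:
        return ["birth date is in the future"]
    if today.year - born.year > 100:
        return ["birth date is more than 100 years ago"]
    return []


def check_counterparty_name(name: str) -> list[str]:
    """A counterparty must have a non-blank name."""
    return [] if name and name.strip() else ["counterparty name is required"]


print(check_birth_date("31.01.1990", date(2021, 4, 20)))
print(check_birth_date("1990-01-31", date(2021, 4, 20)))
print(check_counterparty_name("   "))
```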

Your task at this stage is to build the DQM process. It goes as follows:

- come up with a rule;

- check the rule for applicability and adequacy, and test it on real cases;

- develop regulations for applying the rule, communicate them to users and justify them;

- put the rule into production;

- monitor attempts to circumvent the rule.

If you have already implemented MDM in the company, the steps from the second onward should not cause any particular difficulties; this is routine, systematic work.
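
The steps above can be sketched as a rule object that carries its own test cases, refuses to go into production if it fails them, and counts rejections per user as a simple signal for monitoring attempts to get around it. The `DqRule` structure and the phone-number rule are hypothetical examples, not a prescribed design.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class DqRule:
    """A data quality rule that carries its own test cases (hypothetical structure)."""
    name: str
    check: Callable[[str], bool]  # True means the value is acceptable
    must_accept: list[str]
    must_reject: list[str]
    in_production: bool = False
    rejections: Counter = field(default_factory=Counter)

    def self_test(self) -> bool:
        """Test the rule on known cases before users ever see it."""
        return (all(self.check(v) for v in self.must_accept)
                and not any(self.check(v) for v in self.must_reject))

    def promote(self) -> None:
        """Put the rule into production only if it passes its own cases."""
        if not self.self_test():
            raise RuntimeError(f"rule {self.name!r} failed its test cases")
        self.in_production = True

    def apply(self, user: str, value: str) -> bool:
        """Check a value and count rejections per user as a circumvention signal."""
        accepted = self.check(value)
        if not accepted:
            self.rejections[user] += 1
        return accepted


phone = DqRule(
    name="phone: 11 digits",
    check=lambda v: v.isdigit() and len(v) == 11,
    must_accept=["79001234567"],
    must_reject=["", "+7 900 123-45-67", "123"],
)
phone.promote()
for attempt in ["123", "1234", "79001234567"]:
    phone.apply("operator_1", attempt)
print(phone.in_production, phone.rejections.most_common(1))
```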

The greatest difficulty here lies in coming up with new rules.

5.2. Rules

If, for an entity such as a full name, your imagination stops at a mandatory first name and surname, and for a date at a "no more than a hundred years ago" check, do not be discouraged!

There is a great technique for developing new rules to check even the most unimaginable data. You do not need to be a genius to master it; as practice shows, any novice system or business analyst, and even the operators who enter master data, can do it.

In essence, it is a step-by-step script that takes the definition of your data as input and produces a set of rules for all occasions as output. The technique, known as the taxonomy of dirty data, was developed by a group of researchers in the early 2000s.
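
The "definition in, rules out" idea can be illustrated with a small generator. This is not the taxonomy itself, only a sketch of the input/output shape: `FieldSpec` and its properties (required, maximum length, pattern, dictionary of allowed values) are hypothetical choices.

```python
import re
from dataclasses import dataclass
from typing import Callable, Optional

Check = Callable[[str], Optional[str]]  # returns an error text or None


@dataclass
class FieldSpec:
    """Definition of a field: the input of the script."""
    name: str
    required: bool = False
    max_length: Optional[int] = None
    pattern: Optional[str] = None         # regular expression the whole value must match
    allowed_values: Optional[set] = None  # dictionary of permitted values


def rules_from_spec(spec: FieldSpec) -> list[Check]:
    """The output of the script: one check per property stated in the definition."""
    rules: list[Check] = []
    if spec.required:
        rules.append(lambda v: None if v.strip() else f"{spec.name}: missing value")
    if spec.max_length is not None:
        rules.append(lambda v: f"{spec.name}: longer than {spec.max_length}"
                     if len(v) > spec.max_length else None)
    if spec.pattern is not None:
        rules.append(lambda v: f"{spec.name}: unexpected format"
                     if v and not re.fullmatch(spec.pattern, v) else None)
    if spec.allowed_values is not None:
        rules.append(lambda v: f"{spec.name}: {v!r} not in dictionary"
                     if v and v not in spec.allowed_values else None)
    return rules


def validate(spec: FieldSpec, value: str) -> list[str]:
    return [msg for rule in rules_from_spec(spec) if (msg := rule(value)) is not None]


city = FieldSpec("city", required=True, max_length=30,
                 pattern=r"[A-Za-z -]+", allowed_values={"Moscow", "Kazan"})
print(validate(city, "Moscow"))  # []
print(validate(city, "Mosc0w"))
```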

The essence of the approach, along with practical examples, is set out in their systematic article, which, fortunately, has already been published in translation here on Habr: habr.com/ru/post/548164

If data quality is not an empty phrase for you, then after a thoughtful reading of that article you will find yourself in a state close to nirvana :)

Example #6. Strong typing. If a reference uses the "date" data type, the structure of the date should be as explicit as possible. If you decided to save operators two seconds and made a "__.__.__" template with a "day, month, year" hint, you can be sure that on the very first day you will see entries like "18.04.21", "21.04.18" and "04.18.21".

A good way to enter a date is three fields with explicit labels (day, month, year) and an automatic jump to the next field after two digits are entered in each. If you have ever paid for something online with a card, you know what I mean.
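
The sketch below shows why the template is dangerous: it lists every valid date a "__.__.__" string could mean under three plausible readings, and contrasts that with three explicitly labelled fields. The reading labels and function names are illustrative.

```python
from datetime import date, datetime

# Three plausible readings of a two-digit "__.__.__" date.
READINGS = {
    "day.month.year": "%d.%m.%y",
    "year.month.day": "%y.%m.%d",
    "month.day.year": "%m.%d.%y",
}


def interpretations(text: str) -> dict[str, date]:
    """Every valid date a '__.__.__' string could mean; more than one means ambiguity."""
    found = {}
    for reading, fmt in READINGS.items():
        try:
            found[reading] = datetime.strptime(text, fmt).date()
        except ValueError:
            pass  # this reading produces an impossible date, e.g. month 18
    return found


def date_from_fields(day: int, month: int, year: int) -> date:
    """Three explicitly labelled fields leave each number exactly one meaning."""
    return date(year, month, day)  # still raises ValueError for impossible dates


for entry in ["18.04.21", "21.04.18", "04.18.21"]:
    print(entry, interpretations(entry))
print(date_from_fields(day=18, month=4, year=2021))
```

"18.04.21" and "21.04.18" each have two valid readings, so no rule applied after the fact can tell which date the operator meant.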

Example #7. Forbidden characters in as many fields as possible, plus dictionary checks. For example, if we are talking about education (or position), the classifiers of specialties did not help and you let the user enter data in a text field, then at least prohibit periods, quotes and free-standing dashes there (the list is not exhaustive). An example of information whose quality improves this way: "Doctor of Technical Sciences", "DTN", "Dr. Tech. sciences", etc.
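
A sketch of both halves of this example: an input-time check that rejects periods, quotes and free-standing dashes (a hyphen inside a word such as "Rostov-on-Don" stays allowed), and a dictionary that maps existing spellings to one canonical value. The character list and the `DEGREES` dictionary are hypothetical and deliberately incomplete.

```python
import re
from typing import Optional

# Periods, quotes and free-standing dashes; a hyphen inside a word stays allowed.
FORBIDDEN = re.compile(r'[."«»]|(?<!\S)-(?!\S)')

# Hypothetical dictionary: known spellings mapped to one canonical value.
DEGREES = {
    "doctor of technical sciences": "Doctor of Technical Sciences",
    "dtn": "Doctor of Technical Sciences",
    "dr tech sciences": "Doctor of Technical Sciences",
}


def has_forbidden_chars(value: str) -> bool:
    """Input-time check for a free-text field."""
    return FORBIDDEN.search(value) is not None


def normalize_degree(value: str) -> Optional[str]:
    """Map an existing free-text entry to its canonical form, or None if unknown."""
    # Replace punctuation with spaces, ignore case, collapse repeated whitespace.
    key = " ".join(re.sub(r"[^\w\s]", " ", value).casefold().split())
    return DEGREES.get(key)


print(has_forbidden_chars("Dr. Tech. sciences"), has_forbidden_chars("Rostov-on-Don"))
print(normalize_degree("Dr. Tech. sciences"), normalize_degree("PhD"))
```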

6. And how to share responsibility for DQS?

In matters of management and responsibility, there are no right answers; rather, everything depends on specific teams and individuals. A rocket engineer might be a chief accountant, an artist might be a finance director, and a primary school teacher might be a security chief.

The question of responsibility for the DQM process is actually part of a more general one: who is responsible for data quality in the company? Traditionally, business users and the IT department are at odds over this question.

The business often opens the dialogue with "we noticed an error in your master data system".

The IT service, in turn, believes that its job is to keep the systems running smoothly, and that the specific data business users enter into them is the business's responsibility.

Setting up a working DQM process and launching DQS is a compromise that satisfies both parties. The task for IT and analysts is to develop as many data input rules and constraints as possible to minimize the risk of errors.

The business's stance usually stems from a lack of transparency in DQM processes. However, once it comes down to a clear demonstration of the error, the position softens, and agreement can be reached when the consequences are demonstrated to the person who enters the primary data.

An amazing example of both motivation and visualization of the consequences of errors is given in the article habr.com/ru/post/347838. In that example, an IT service with strong business analysis competencies is responsible for the DQM process. Moreover, the DQM competencies themselves are not difficult and can be developed by any analyst within a couple of months.

Another example, interesting because its DQM process also covers business process quality management, is given in the article habr.com/ru/company/otus/blog/526174.

Conclusions

The general conclusions of this article are paradoxical.

If your company is asking "who is responsible for data quality?", you have fallen into a trap. There is no correct answer, because the question itself is wrong. If you go down this path, you will eventually realize that the only fitting answer ("everyone") gives you nothing in practice.

The correct approach is to split the question into two blocks.

The first block is building DQM as a process, implementing DQS and developing rules (not ad hoc, but as an ongoing process). This block lives where analytical functions are strong, usually in IT, but not necessarily.

The second block, the entry of the primary data itself, is where decisions about specific data are made, not at random but on the basis of all the rules. Thus, implementing DQS is an important step toward becoming a data-driven company.

I invite you to the discussion!