Nowadays, hardly any project can do without text analysis and processing, and Python happens to have a wide range of libraries and frameworks for NLP tasks. The tasks range from the fairly trivial, such as sentiment and emotion analysis or named entity recognition (NER), to the more interesting: bots, comparing dialogues in support chats to check whether your tech support or sales staff follow their text scripts, or post-processing text after speech-to-text.

A huge number of tools are available for solving NLP problems. Here is a short list of those:

CoreNLP

NLTK

TextBlob

spaCy

Spark NLP

As you may have guessed, this article focuses on the last one, since it covers almost everything the libraries above can do. It offers both free pre-trained models and paid, highly specialized ones, for example for healthcare.

To run Spark NLP you need Java 8, which is required by the Apache Spark framework that Spark NLP runs on. For experiments on a server or local machine, plan for at least 16 GB of RAM. It is better to install everything on a Linux distribution (difficulties may arise on macOS); personally, I chose an Ubuntu instance on AWS.

    apt-get -qy install openjdk-8-jdk
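Since these versions of Spark NLP expect Java 8, it can be worth checking which Java the machine actually picks up before going further. Below is a small sketch of such a check; the helper names `parse_java_major` and `installed_java_major` are made up for illustration, and the parsing assumes the usual `java -version` output format (`1.8.0_292` for Java 8, `11.0.11` for newer releases).

```python
import re
import shutil
import subprocess
from typing import Optional


def parse_java_major(version_output: str) -> Optional[int]:
    """Extract the major Java version from `java -version` output.

    Java 8 and older report themselves as "1.8.0_292" (the major version is
    the second number); Java 9+ report "11.0.11" (the first number).
    """
    match = re.search(r'version "(\d+)(?:\.(\d+))?', version_output)
    if match is None:
        return None
    first = int(match.group(1))
    second = match.group(2)
    if first == 1 and second is not None:
        return int(second)
    return first


def installed_java_major() -> Optional[int]:
    """Return the major version of the `java` found on PATH, or None."""
    if shutil.which("java") is None:
        return None
    # `java -version` writes to stderr, so merge it into stdout.
    proc = subprocess.run(
        ["java", "-version"],
        stdout=subprocess.PIPE,
        stderr=subprocess.STDOUT,
        universal_newlines=True,
    )
    return parse_java_major(proc.stdout)


if __name__ == "__main__":
    major = installed_java_major()
    if major != 8:
        print(f"Expected Java 8, found: {major}")
```

If several Java versions are installed, the one found first on `PATH` is the one this check reports.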

You also need to install Python 3 and the related libraries:

    apt-get -qy install build-essential python3 python3-pip python3-dev gnupg2
    pip install nlu==1.1.3
    pip install pyspark==2.4.7
    pip install spark-nlp==2.7.4

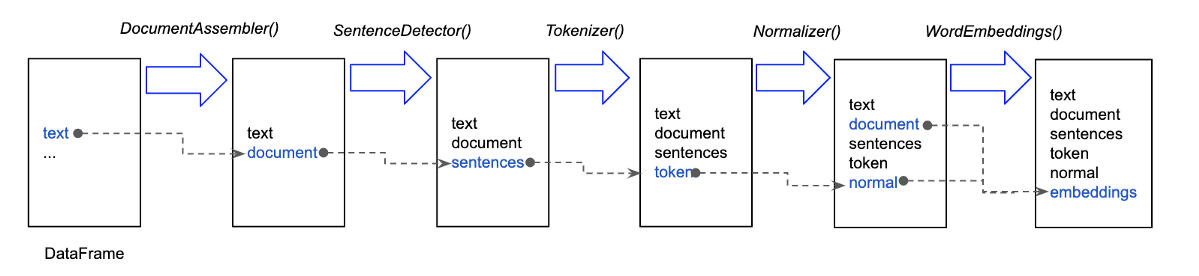

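The example can be tried in Colab. Spark NLP works on the principle of pipelines: the text passes through the stages listed in the pipeline, and each stage works with the results of the previous ones.

An example pipeline (from the Colab notebook):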

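    import sparknlp
    from pyspark.ml import Pipeline
    from sparknlp.annotator import BertEmbeddings, NerConverter, NerDLModel, Tokenizer
    from sparknlp.base import DocumentAssembler

    # Start a Spark session with Spark NLP available (needed to download models)
    spark = sparknlp.start()

    documentAssembler = DocumentAssembler() \
        .setInputCol('text') \
        .setOutputCol('document')

    tokenizer = Tokenizer() \
        .setInputCols(['document']) \
        .setOutputCol('token')

    embeddings = BertEmbeddings.pretrained(name='bert_base_cased', lang='en') \
        .setInputCols(['document', 'token']) \
        .setOutputCol('embeddings')

    ner_model = NerDLModel.pretrained('ner_dl_bert', 'en') \
        .setInputCols(['document', 'token', 'embeddings']) \
        .setOutputCol('ner')

    ner_converter = NerConverter() \
        .setInputCols(['document', 'token', 'ner']) \
        .setOutputCol('ner_chunk')

    # Stages run in this order; each one reads the columns produced before it
    nlp_pipeline = Pipeline(stages=[
        documentAssembler,
        tokenizer,
        embeddings,
        ner_model,
        ner_converter
    ])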
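The pipeline object by itself is only a description of the stages; to get entities out of it, it has to be fitted and applied to some text. Here is a minimal sketch of one common way to do that, assuming a Spark session created with `sparknlp.start()` and the `nlp_pipeline` defined above. The function name `extract_entities` is invented for illustration.

```python
def extract_entities(nlp_pipeline, spark, texts):
    """Fit the pipeline and return the recognized entity chunks per text.

    Assumes `spark` is a session created by sparknlp.start() and that the
    last stage of the pipeline writes to the 'ner_chunk' column.
    """
    # Imported lazily so this module loads even without spark-nlp installed.
    from sparknlp.base import LightPipeline

    # All stages are pretrained, so fitting on an empty frame is enough.
    empty_df = spark.createDataFrame([[""]]).toDF("text")
    model = nlp_pipeline.fit(empty_df)

    # LightPipeline runs the fitted stages on plain Python strings,
    # which avoids building a DataFrame for every short text.
    light = LightPipeline(model)
    return [light.annotate(text)["ner_chunk"] for text in texts]


# Usage (requires Java, pyspark and spark-nlp to be installed):
#   import sparknlp
#   spark = sparknlp.start()
#   extract_entities(nlp_pipeline, spark, ["Marty arrived on October 28, 1955."])
```

For large datasets, calling `model.transform(df)` on a Spark DataFrame with a `text` column is the more natural choice; the light variant is aimed at short, individual texts.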

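documentAssembler - turns the raw text into a Document annotation, the format the following stages work with

tokenizer - splits the document into tokens

embeddings - computes BERT embeddings for each token

ner_model - assigns an entity label to each token. For example: October 28, 1955 = DATE

ner_converter - combines the labeled tokens into whole entities, so that October 28, 1955 comes out as a single chunk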
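To make the last two stages more concrete: the NER model labels each token separately, and the converter glues consecutive labels of the same entity back into one chunk. The pure-Python sketch below imitates that merging step on hand-written tags. It illustrates the idea under the assumption of IOB-style tags (`B-DATE`, `I-DATE`, `O`); it is not Spark NLP's actual implementation, and `merge_iob` is a made-up name.

```python
from typing import List, Tuple


def merge_iob(tokens: List[str], tags: List[str]) -> List[Tuple[str, str]]:
    """Merge per-token IOB tags into (chunk_text, entity_label) pairs."""
    chunks: List[Tuple[str, str]] = []
    current_tokens: List[str] = []
    current_label = None

    def flush() -> None:
        # Close the chunk being built, if there is one.
        nonlocal current_tokens, current_label
        if current_tokens:
            chunks.append((" ".join(current_tokens), current_label))
        current_tokens, current_label = [], None

    for token, tag in zip(tokens, tags):
        if tag.startswith("B-"):
            # A B- tag always starts a new chunk.
            flush()
            current_tokens, current_label = [token], tag[2:]
        elif tag.startswith("I-") and current_label == tag[2:]:
            # An I- tag with the same label continues the open chunk.
            current_tokens.append(token)
        else:
            # "O", or an I- tag that does not continue the open chunk.
            flush()
    flush()
    return chunks


tokens = ["Born", "on", "October", "28", ",", "1955"]
tags = ["O", "O", "B-DATE", "I-DATE", "I-DATE", "I-DATE"]
print(merge_iob(tokens, tags))
```

Joining tokens with spaces is a simplification, so the comma ends up separated from the day number in this toy version.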

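If you do not want to assemble the pipeline by hand and dig into the details of Spark NLP, the developers of Spark NLP (johnsnowlabs) offer a higher-level library on top of Spark NLP, NLU, which reduces the same task to a few lines: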

    import nlu

    # `text` is the string to analyze
    pipeline = nlu.load('ner')
    result = pipeline.predict(
        text, output_level='document'
    ).to_dict(orient='records')

The result holds the NER output as a list of records, one per document.

Note that both ways of obtaining named entities need some time to initialize Apache Spark, preload the models, and establish a connection between the Python interpreter and Spark via pyspark. You therefore do not want to rerun the code above 10-100 times; instead, load the pipelines once in advance and then process each text with a plain predict call. In my case, I initialize the pipelines I need when the Celery workers are initialized.

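    import logging

    import nlu
    from celery.signals import worker_process_init

    # PipelineRegistry is the author's own helper class (its code is not shown here).
    # Pipelines are loaded once per worker process and then reused by every task.
    pipeline_registry = PipelineRegistry()


    def get_pipeline_registry():
        pipeline_registry.register('sentiment', nlu.load('en.sentiment'))
        pipeline_registry.register('ner', nlu.load('ner'))
        pipeline_registry.register('stopwords', nlu.load('stopwords'))
        pipeline_registry.register('stemmer', nlu.load('stemm'))
        pipeline_registry.register('emotion', nlu.load('emotion'))
        return pipeline_registry


    # Celery sends this signal once in every worker process as it starts
    @worker_process_init.connect
    def init_worker(**kwargs):
        logging.info("Initializing pipeline_factory...")
        get_pipeline_registry()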
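The `PipelineRegistry` class used above is not shown in the article. One possible shape for it is sketched below, purely as an assumption: a thin wrapper over a dictionary. Only `register` appears in the original code; `get`, the membership check, and the error handling are guesses at what a task would need.

```python
from typing import Any, Dict


class PipelineRegistry:
    """Keeps already-loaded pipelines by name so tasks can reuse them."""

    def __init__(self) -> None:
        self._pipelines: Dict[str, Any] = {}

    def register(self, name: str, pipeline: Any) -> None:
        # Store the loaded pipeline; registering the same name again replaces it.
        self._pipelines[name] = pipeline

    def get(self, name: str) -> Any:
        # Fail loudly if a task asks for a pipeline that was never preloaded.
        try:
            return self._pipelines[name]
        except KeyError:
            raise KeyError(f"Pipeline {name!r} is not registered") from None

    def __contains__(self, name: str) -> bool:
        return name in self._pipelines


# A stand-in object replaces nlu.load(...) so the sketch runs without Spark.
registry = PipelineRegistry()
registry.register("ner", object())
print("ner" in registry)
```

Inside a Celery task, the call would then look something like `pipeline_registry.get('ner').predict(text)`, which reuses the pipeline loaded when the worker started instead of loading it again.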

This way, you can solve NLP tasks without headaches and with a minimum of effort.