While trying to implement reverse image search for my site, I came across the huge world of image search. Below are brief descriptions and use cases for some of the reverse / similar image search approaches.

Dataset used

Perceptual hash

[Colab]

Detailed description of how phash works

From the images, we create hashes of a given length. The smaller the Hamming distance between two hashes, the more similar the images.

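The naive approach is a linear search: compare the query hash with every hash in the database and return the N closest images (or all images within a distance threshold).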
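A minimal sketch of hash comparison, assuming hashes are stored as Python integers. The function names, the 16-bit toy hashes, and the file names are made up for illustration; this is not the code from the notebook.

```python
import heapq

def hamming_distance(a: int, b: int) -> int:
    """Number of differing bits between two hashes stored as integers."""
    return bin(a ^ b).count("1")

def linear_search(query: int, hashes: dict, top_n: int = 3, threshold=None):
    """Scan every stored hash; return up to top_n (distance, image_id) pairs, closest first."""
    scored = ((hamming_distance(query, h), image_id) for image_id, h in hashes.items())
    if threshold is not None:
        # Optionally keep only hashes within the given Hamming distance.
        scored = (pair for pair in scored if pair[0] <= threshold)
    return heapq.nsmallest(top_n, scored)

# Hypothetical 16-bit hashes; real perceptual hashes are usually 64 bits or longer.
database = {
    "cat.jpg": 0b1010101010101010,
    "cat_resized.jpg": 0b1010101010101011,
    "dog.jpg": 0b0101010101010101,
}
query_hash = 0b1010101010101110
results = linear_search(query_hash, database, top_n=2)
```

Every query touches every stored hash, so the cost grows linearly with the size of the collection.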

Can we do better? Yes! A vantage-point tree takes O(n log n) to build and O(log n) to search.
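To make the idea concrete, here is a small pure-Python sketch of a vantage-point tree over integer hashes with Hamming distance as the metric. It is an illustration written for this section, not the `vptree` package from PyPI and not the implementation from the gist; the class and method names are assumptions.

```python
import random

def hamming(a: int, b: int) -> int:
    return bin(a ^ b).count("1")

class VPTree:
    """Vantage-point tree over integer hashes (illustrative, nearest neighbour only)."""

    def __init__(self, points, rng=None):
        rng = rng or random.Random(0)
        points = list(points)
        # Pick a random vantage point and remove it from the remaining points.
        self.vp = points.pop(rng.randrange(len(points)))
        self.radius = 0
        self.inside = self.outside = None
        if not points:
            return
        dists = [hamming(self.vp, p) for p in points]
        self.radius = sorted(dists)[len(dists) // 2]  # median distance
        inner = [p for p, d in zip(points, dists) if d < self.radius]
        outer = [p for p, d in zip(points, dists) if d >= self.radius]
        if inner:
            self.inside = VPTree(inner, rng)
        if outer:
            self.outside = VPTree(outer, rng)

    def nearest(self, query, best=None):
        """Return (distance, point) of the stored point closest to query."""
        d = hamming(query, self.vp)
        if best is None or d < best[0]:
            best = (d, self.vp)
        # Visit the side that contains the query first.
        if d < self.radius:
            first, second = self.inside, self.outside
        else:
            first, second = self.outside, self.inside
        if first is not None:
            best = first.nearest(query, best)
        # By the triangle inequality, the other side can only hold a closer
        # point if the current search radius reaches across the boundary.
        if second is not None and abs(d - self.radius) <= best[0]:
            best = second.nearest(query, best)
        return best

rng = random.Random(42)
hashes = [rng.getrandbits(64) for _ in range(500)]
tree = VPTree(hashes)
query = hashes[0] ^ 0b111  # a stored hash with three bits flipped
dist, match = tree.nearest(query)
```

The pruning step is what saves work: whole subtrees are skipped when they cannot contain anything closer than the best match found so far. How much is actually skipped depends on the data and on how far the nearest match is from the query.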

, , , vantage-point tree , for. , 100 . , ... , vptree . ? , vantage point tree PyPI, 1 - vptree. , - . vp-tree javascript . for-loop , vptree 10 . - , top N , . , vp-tree , . gist

- vp-tree, . , . / vp-tree c / , /.

{transformation_name}

- . - , "" .

https://habr.com/ru/post/205398/

https://habr.com/ru/post/211773/

: phash , preview/thumbnail. .

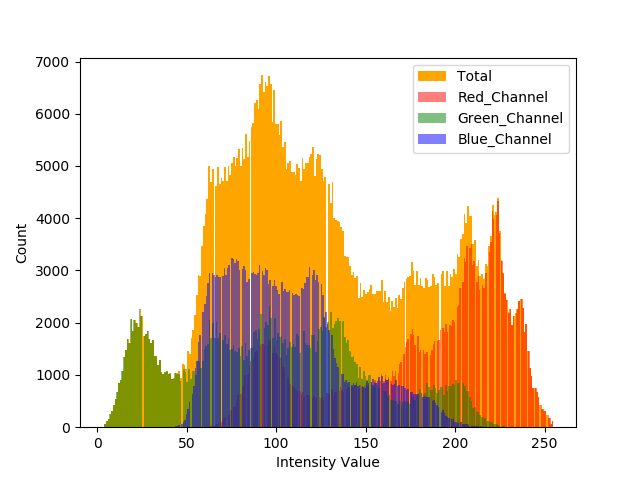

RGB Histogram

[Colab]

RGB , , , .

: . , .

After flatten, each image is described by a vector of 4096 values.
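The figure of 4096 is consistent with a 3D colour histogram that uses 16 bins per channel (16 × 16 × 16 = 4096). The sketch below shows that computation in plain Python. The function name, the normalization step, and the toy pixels are assumptions made for illustration; in practice a library routine would do this on real image arrays.

```python
def rgb_histogram(pixels, bins_per_channel=16):
    """Flattened, normalized 3D colour histogram of (r, g, b) tuples with values 0-255."""
    bin_width = 256 // bins_per_channel
    hist = [0.0] * bins_per_channel ** 3
    count = 0
    for r, g, b in pixels:
        # Row-major index into a bins x bins x bins cube, i.e. the flattened position.
        index = ((r // bin_width) * bins_per_channel + g // bin_width) * bins_per_channel + b // bin_width
        hist[index] += 1
        count += 1
    # Normalize (an assumption) so that images of different sizes are comparable.
    return [v / count for v in hist] if count else hist

# Hypothetical 2x2 image: three pure-red pixels and one pure-blue pixel.
pixels = [(255, 0, 0), (255, 0, 0), (255, 0, 0), (0, 0, 255)]
features = rgb_histogram(pixels)
```

Here `features` has 4096 entries, with all the mass in the "pure red" and "pure blue" bins.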

k-nearest neighbor search, .

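Exact search is slow, so it is better to use approximate nearest neighbor search. With hnswlib, an implementation of Hierarchical Navigable Small World graphs, search time dropped from 50-70ms to 0.4ms.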

.

approximate nearest neighbor search - https://habr.com/ru/company/mailru/blog/338360/

,

, phash

( 16 RGB 4096)

,

SIFT/SURF/ORB

[SIFT Colab]

SIFT.

, SURF ORB. SIFT - Root SIFT.

:

```python
# RootSIFT: L1-normalize each descriptor, then take the element-wise square root
descs /= (descs.sum(axis=1, keepdims=True) + eps)
descs = np.sqrt(descs)
```

SIFT ~5 .

: SIFT features, Brute-Force Matcher(cv2.BFMatcher), matches.

:

SIFT , ,

:

( , python)

NN features

[Colab ResNet50] [Colab CLIP]

. . ResNet50 - 2048. "" ResNet50, knn . .

```python
model = ResNet50(weights='imagenet', include_top=False, input_shape=(224, 224, 3), pooling='max')
```

Another option is CLIP: its encode_image method returns a vector of length 512.

CLIP c , - , 224 aspect ratio, Center Crop, . .
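The resize to 224 with preserved aspect ratio followed by a center crop can be sketched as pure geometry. The helper below is hypothetical and only computes sizes and the crop box; exact rounding differs between image libraries.

```python
def resize_and_center_crop_box(width, height, size=224):
    """Return ((new_w, new_h), (left, top, right, bottom)) for resize + center crop."""
    # Scale so that the shorter side becomes `size`, keeping the aspect ratio.
    scale = size / min(width, height)
    new_w = max(size, round(width * scale))
    new_h = max(size, round(height * scale))
    # Take a size x size window from the middle of the resized image.
    left, top = (new_w - size) // 2, (new_h - size) // 2
    return (new_w, new_h), (left, top, left + size, top + size)

resized, box = resize_and_center_crop_box(640, 480)
```

For a 640x480 image the resized width is 299 pixels and only the middle 224 are kept, so about a quarter of the width never reaches the model.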

. t-SNE.

t-SNE ResNet50 (10100x10100 7.91MB)

t-SNE CLIP (10100x10100 7.04MB)

Features CLIP . , CLIP , , .

:

approximate nearest neighbor search

:

features GPU

CLIP text search

[Colab CLIP]

CLIP:

CLIP , , . knn search features , features . .

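```python
# Assumes `clip`, `torch`, `model` and `device` are already set up as in the Colab notebook.
text_tokenized = clip.tokenize(["a picture of a windows xp wallpaper"]).to(device)
with torch.no_grad():
    text_features = model.encode_text(text_tokenized)
    # L2-normalize so that a dot product with normalized image features is the cosine similarity
    text_features /= text_features.norm(dim=-1, keepdim=True)
```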
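Once the text features are normalized, searching comes down to comparing them with the stored image features. The sketch below ranks images by cosine similarity in plain Python. The 3-dimensional vectors, file names, and function names are made up for illustration; real CLIP features are much longer and would normally be searched with a kNN index.

```python
import math

def normalize(vec):
    """Scale a vector to unit L2 norm."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]

def rank_by_cosine(text_vec, image_vecs, top_n=2):
    """Return (similarity, image_id) pairs, most similar first."""
    q = normalize(text_vec)
    scored = [
        (sum(a * b for a, b in zip(q, normalize(v))), image_id)
        for image_id, v in image_vecs.items()
    ]
    return sorted(scored, reverse=True)[:top_n]

# Hypothetical toy features standing in for encode_image / encode_text outputs.
image_features = {
    "bliss.jpg": [0.9, 0.1, 0.0],
    "city.jpg": [0.0, 1.0, 0.2],
    "forest.jpg": [0.6, 0.5, 0.1],
}
text_query = [1.0, 0.0, 0.0]
ranking = rank_by_cosine(text_query, image_features)
```

Because text and image features live in the same space, the same ranking code works whether the query vector comes from a text prompt or from another image.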

GitHub repository with all notebooks