I build models for Kaggle cases, study other people's solutions, and get inspired. Every article on how to integrate a model into a web project gives me, a student and junior frontend developer, an overload of complex information, when all I want is to "borrow" any cool model and quickly plug it into my service. My hands were itching to come up with a universal algorithm, and a solution turned up quickly.

Let's get started. Step 1

I want to keep the format that most Kaggle models come in, so that later it is easy to borrow someone else's code of any complexity without having to understand it. I write the bot in Python 3.9 using the pyTelegramBotAPI library, and to bridge .py and .ipynb files I use the ipynb package.

So, install the dependencies:

pip install pyTelegramBotAPI
pip install ipynb

Go to Kaggle and pick a model you like. I'll start with the classic Titanic - Machine Learning from Disaster case, borrow this solution (Titanic Random Forest: 82.78%), and drag it into the bot project.

Install the dependencies that came along with the notebook:

pip install <>

Building the bot. Step 2

Create a new file and import our libs into it:

import telebot

from ipynb.fs.defs.ml import is_user_alive

In ipynb.fs.defs.ml, ml is the name of the notebook file (ml.ipynb), and is_user_alive is the function we import from it.
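To make the import above less magical, here is a rough illustration of the idea behind a definitions-only import: read the notebook's code cells and execute only the imports, functions and classes, skipping everything else. This is a simplified sketch using only the standard library, not the ipynb package's actual implementation; the tiny in-memory notebook and the load_definitions helper are made up for the example.

```python
import ast
import json

# A tiny stand-in for ml.ipynb: one cell defines a function, another runs code.
notebook_json = json.dumps({
    "cells": [
        {"cell_type": "markdown", "source": ["# Titanic"]},
        {"cell_type": "code",
         "source": ["def is_user_alive(user_data):\n", "    return 1\n"]},
        {"cell_type": "code",
         "source": ["print('training...')  # top-level statement\n"]},
    ]
})


def load_definitions(raw_notebook):
    """Execute only imports, functions and classes found in the code cells."""
    notebook = json.loads(raw_notebook)
    namespace = {}
    keep = (ast.Import, ast.ImportFrom, ast.FunctionDef, ast.ClassDef)
    for cell in notebook["cells"]:
        if cell["cell_type"] != "code":
            continue
        tree = ast.parse("".join(cell["source"]))
        # Drop every top-level statement that is not a definition or import.
        tree.body = [node for node in tree.body if isinstance(node, keep)]
        exec(compile(tree, "<notebook>", "exec"), namespace)
    return namespace


defs = load_definitions(notebook_json)
print(defs["is_user_alive"]([]))  # the print('training...') cell never runs
```

This is why the model code has to live inside a function: anything left at the top level of the notebook is not executed by this kind of import.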

Create the bot and pass it the token (you get it from @BotFather):

bot = telebot.TeleBot('token')
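If the project ends up in a public repository, it may be safer not to hardcode the token. A minimal sketch, assuming the token is stored in an environment variable; the variable name TELEGRAM_BOT_TOKEN and the load_token helper are hypothetical and not part of the original project:

```python
import os


def load_token(env_var="TELEGRAM_BOT_TOKEN"):
    """Read the bot token from the environment instead of the source code."""
    token = os.environ.get(env_var, "").strip()
    if not token:
        raise RuntimeError(f"Set the {env_var} environment variable first")
    return token


# Usage in the bot file (assumes telebot is imported as above):
# bot = telebot.TeleBot(load_token())
```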

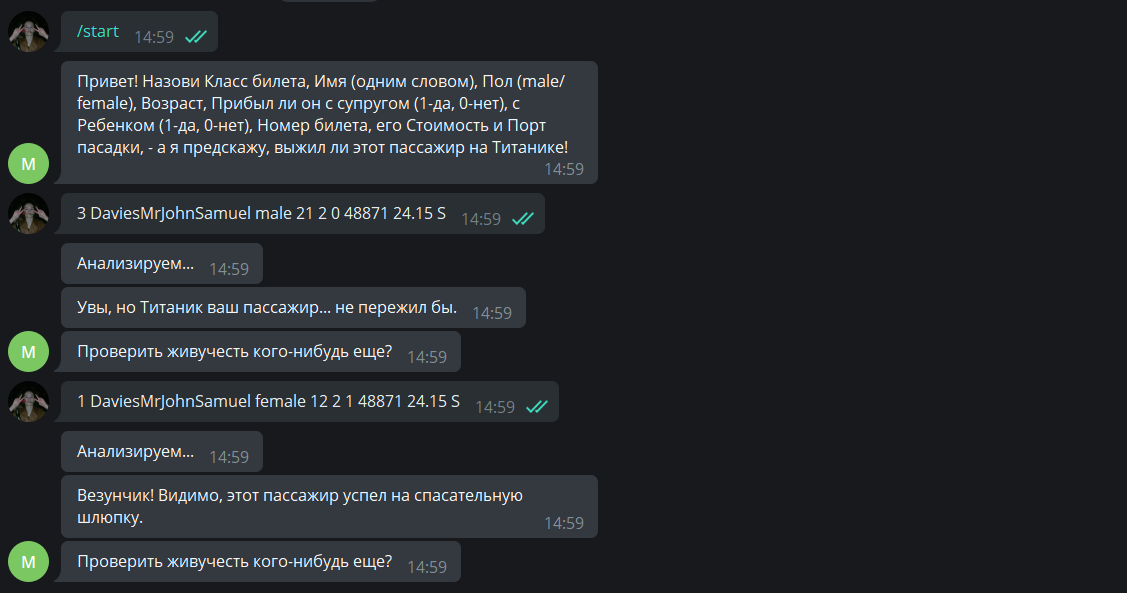

Write a handler for the /start command that greets the user and explains what data to send.

@bot.message_handler(commands=['start'])

def welcome(message):

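    # Field list and order are assumed from the columns of the Titanic test.csv
    bot.send_message(message.chat.id, 'Hi! Send, separated by spaces: ticket class (1-3), name, '
                     'sex (male/female), age, siblings/spouses aboard (1-yes, 0-no), '
                     'parents/children aboard (1-yes, 0-no), ticket number, fare, '
                     'port of embarkation, and I will tell you whether you would survive!')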

Now handle the user's message and turn it into a row for the model:

@bot.message_handler(content_types=['text'])

def answer(message):

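    bot.send_message(message.chat.id, '…')
    passenger_data = message.text.split()
    # Prepend 0 as the first column
    passenger_data.insert(0, 0)
    passenger_data.insert(9, ',')
    # Wrap the name in quotes; + builds a string, commas would build a tuple
    passenger_data[2] = '"' + passenger_data[2] + '"'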

Note that this code relies on the message containing exactly 9 values separated by spaces.
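Since the handler splits the message on whitespace and trusts the result, a small check up front can save a confusing traceback later. A minimal sketch, assuming nine values are expected; the split_fields helper and the sample input are made up for illustration:

```python
EXPECTED_FIELDS = 9  # assumed number of values the user has to send


def split_fields(text, expected=EXPECTED_FIELDS):
    """Split a message on whitespace and check the number of values."""
    fields = text.split()
    if len(fields) != expected:
        raise ValueError(f"expected {expected} values, got {len(fields)}")
    return fields


# Example with made-up input values:
sample = "3 Smith male 22 1 0 A/5-21171 7.25 S"
print(split_fields(sample))

try:
    split_fields("3 Smith male")
except ValueError as error:
    print(error)
```

In the bot, the ValueError could be caught inside answer and turned into a message asking the user to try again.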

Step 3

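In the notebook, press Ctrl+F, find all the #%% cell separators and remove them, so that the code can be wrapped in a single function: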

<imports>

def is_user_alive(user_data):
    <the notebook code>

First of all, inside the function, append the user's row to the test data:

    # needs `import csv` and `import os` among the notebook imports
    with open(os.path.join('input', 'test.csv'), 'a', newline='') as fp:
        wr = csv.writer(fp, dialect='excel')
        wr.writerow(user_data)
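The append step can be tried in isolation before touching the real dataset. A small sketch on a throwaway file; the append_row helper and the three-column header are hypothetical and only there to keep the demo short:

```python
import csv
import os
import tempfile


def append_row(csv_path, row):
    """Append one row to an existing CSV file."""
    # newline='' lets the csv module control line endings itself
    with open(csv_path, "a", newline="") as fp:
        csv.writer(fp, dialect="excel").writerow(row)


# Demo on a temporary file with made-up columns
with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "test.csv")
    with open(path, "w", newline="") as fp:
        csv.writer(fp).writerow(["PassengerId", "Name", "Age"])
    append_row(path, [0, "Smith, Mr. John", 22])
    with open(path, newline="") as fp:
        rows = list(csv.reader(fp))

print(rows[-1])
```

Two things worth noticing: csv.writer quotes a field that contains a comma on its own, and the existing file should end with a newline, otherwise the appended row gets glued to the last line.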

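Find the predictions variable in the notebook. At the end of the function, return the result for the last row, which is the passenger we just added: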

    return predictions['Survived'].iloc[-1]

Step 4

Back in answer: the function returns just 0 or 1… Let's turn that into a human-readable reply:

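    answer = is_user_alive(passenger_data)
    # 1 means the model predicts survival, 0 means it does not
    # (the reply wording here is illustrative)
    if int(answer) == 1:
        bot.send_message(message.chat.id, 'Congratulations! You would have survived.')
    elif int(answer) == 0:
        bot.send_message(message.chat.id, 'Sorry… you would not have survived.')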
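The mapping from prediction to reply can also live in a small pure function, which is easy to test without Telegram. A minimal sketch; format_reply and the reply texts are hypothetical:

```python
def format_reply(prediction):
    """Turn the model's 0/1 prediction into a human-readable reply."""
    value = int(prediction)
    if value == 1:
        return "Congratulations! You would have survived."
    if value == 0:
        return "Sorry… you would not have survived."
    # Anything else means the model returned something unexpected.
    raise ValueError(f"unexpected prediction: {prediction!r}")


print(format_reply(1))
print(format_reply("0"))
```

Inside answer this would shrink the branching to a single bot.send_message(message.chat.id, format_reply(answer)) call.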

Create a function that offers to check someone else, and call it from answer with message as the argument:

def do_again(message):
    bot.send_message(message.chat.id, 'Want to check someone else?')

We start polling:

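# needs `import time` at the top of the file
while True:
    try:
        bot.polling(none_stop=True)
    except Exception:
        # wait a little, then restart polling after any error
        time.sleep(5)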
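The restart loop can be written as a reusable helper and tried without a real bot. A sketch under stated assumptions: run_with_retries, its parameters and the flaky_poll stand-in are made up for illustration, and unlike the loop above it stops once the polling function returns normally.

```python
import time


def run_with_retries(poll, delay=5.0, max_failures=None, sleep=time.sleep):
    """Call poll() until it returns; wait `delay` seconds after each failure."""
    failures = 0
    while True:
        try:
            poll()
            return failures  # poll() finished without an exception
        except Exception as error:
            failures += 1
            print(f"polling failed ({error!r}), retrying in {delay}s")
            if max_failures is not None and failures >= max_failures:
                raise  # give up after too many failures
            sleep(delay)


# Stand-in for bot.polling: fails twice, then succeeds.
attempts = []


def flaky_poll():
    attempts.append(1)
    if len(attempts) < 3:
        raise ConnectionError("network down")


failures = run_with_retries(flaky_poll, delay=0, sleep=lambda seconds: None)
print(failures)
```

With the real bot the call would look like run_with_retries(lambda: bot.polling(none_stop=True)). Printing the error before sleeping makes it easier to see why the bot keeps restarting.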

Result

That's it! Pretty simple, right?

If not, you can watch the video version:

Code: https://github.com/freakssha/ml-bot-titanic

This is a speedrun for itchy hands: it is not optimized and could be improved a lot, but I don't know how yet. If you know how to do that without losing simplicity and versatility, please write to me!