Google's Belgian data center is fully powered by its own solar power plant, and the equipment is cooled with water from a nearby canal: economy and energy efficiency are top-notch. Source: Google

Twenty-five years have passed since data centers began to be built on a mass scale, and the market is still far from saturated: global investment in data center construction grows by about 15% every year. At the same time, data centers are becoming more powerful and more expensive. Construction and operating costs feed directly into the price of digital services such as hosting, cloud storage and computing, so everyone has an interest in making data centers around the world more efficient and economical. What can be done to reduce the cost of data center services?

High technology with high energy consumption

According to Nature, data centers around the world consume about 200 TWh per year, with 140 TWh of that coming from US data centers. Six years ago, data centers consumed only 91 TWh, so consumption has grown by an impressive factor of about 2.2 (roughly 120%). Today data centers consume more electricity than the whole of Ukraine (122 TWh), Argentina (129 TWh), Sweden (134 TWh) or Poland (150 TWh).

Data centers may mean cloud computing rather than smokestacks and hazardous waste, but they still leave a serious carbon footprint: approximately 0.3% of global emissions, which is a lot. This is because the share of green energy in the main countries hosting data centers is very small (11% in the US and 26% in China, including hydropower), so data centers are powered by gas- and coal-fired power plants. As a result, environmentalists and European governments are unhappy about rising greenhouse gas emissions, grid operators are unhappy about the growing load and the need to upgrade their networks, and data center owners are unhappy about maintenance costs.

Electricity is one of the largest items in data center operating costs and can account for up to half of them. Data center energy efficiency is assessed with the PUE (Power Usage Effectiveness) coefficient: the ratio of the total electricity consumed by the facility to the electricity consumed by the IT equipment. A PUE of 1.0 is the ideal and is unattainable; you can only strive for it, since servers always come with cooling and monitoring systems that also need power. Cooling alone can consume 50-80% as much energy as the IT equipment, or even more, so the average PUE worldwide is now 1.67 (for every unit of energy that goes to the servers, 0.67 units go to other equipment). With an integrated approach, PUE can in the best cases be reduced to 1.05-1.10, which translates into colossal savings on electricity.
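To make the PUE definition concrete, here is a minimal sketch that computes it from an IT load and a breakdown of the remaining consumers. The 1,000 kW IT load and the overhead figures are hypothetical, chosen so that the result matches the world-average 1.67 quoted above.

```python
def pue(it_kw: float, overhead_kw: dict) -> float:
    """Power Usage Effectiveness: total facility power divided by IT equipment power."""
    if it_kw <= 0:
        raise ValueError("IT power must be positive")
    total_kw = it_kw + sum(overhead_kw.values())
    return total_kw / it_kw


if __name__ == "__main__":
    # Hypothetical breakdown for a facility with 1,000 kW of IT load.
    overhead = {
        "cooling": 550.0,
        "UPS and distribution losses": 100.0,
        "lighting and monitoring": 20.0,
    }
    print(f"PUE = {pue(1000.0, overhead):.2f}")  # PUE = 1.67
```

With an empty overhead dictionary the function returns exactly 1.0, the unattainable ideal; every kilowatt spent on cooling or lost in conversion pushes the value up.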

But to achieve such savings, you will have to follow almost all the recommendations on our checklist. We will go from simple to complex: first, measures for upgrading existing equipment; then a more ambitious reorganization of the data center; and finally some dreaming about relocating or building new data centers with the latest trends and the most modern technologies in mind.

According to a survey of data center owners, many would like to improve the energy efficiency, cooling, layout or location of their data centers. Source: Solar Thermal Magazine

UPS upgrade to minimize losses

To provide power redundancy and to protect expensive data center equipment from power surges and other grid problems, data centers use online UPSs with zero transfer time to battery. Uninterrupted server operation comes at the price of higher power consumption: double conversion (AC to DC and back to AC) improves the quality of the output voltage, but losses are inevitable, and you pay for them too. UPS efficiency depends directly on load: the higher the load (within the UPS rating), the higher the efficiency. Current online double-conversion UPSs reach 85-95% efficiency under high load, while badly outdated models may manage a dismal 40-50%. That is, 15% to 50% of the electricity is lost in conversion, yet it still shows up on the bill. For older UPSs, low efficiency at low load is an especially acute problem.
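To see how much energy is lost in conversion, the sketch below turns a UPS efficiency figure into annual losses. It assumes a constant load and a single fixed efficiency, whereas real efficiency varies with load as described above; the 500 kW load is hypothetical.

```python
HOURS_PER_YEAR = 8760


def ups_input_kw(output_kw: float, efficiency: float) -> float:
    """Power drawn from the grid to deliver output_kw through a UPS of given efficiency."""
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    return output_kw / efficiency


def annual_loss_kwh(output_kw: float, efficiency: float) -> float:
    """Energy dissipated inside the UPS over a year at constant load."""
    return (ups_input_kw(output_kw, efficiency) - output_kw) * HOURS_PER_YEAR


if __name__ == "__main__":
    load_kw = 500  # hypothetical constant IT load behind the UPS
    for eff in (0.50, 0.85, 0.95, 0.99):
        print(f"{eff:.0%}: {annual_loss_kwh(load_kw, eff):,.0f} kWh lost per year")
```

At 50% efficiency the UPS wastes as much power as it delivers, so the grid draw doubles; at 99% the losses shrink to about one percent of the load.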

The most advanced online UPS systems can reach up to 99% efficiency during normal operation. That is why upgrading the UPS fleet can dramatically reduce data center maintenance costs and significantly improve (lower) PUE. One example that recently caught our eye is Eaton's UPS lineup. For small data centers, there are the compact Eaton 9SX / 9PX units rated at 5/6/8/11 kVA (the 9PX series has a DB15 port for parallel operation of two UPSs). These UPSs are up to 98% efficient in high-efficiency mode and up to 95% efficient in double-conversion mode. Each module is completely self-contained and can be installed either vertically or in a 19-inch rack. To reduce battery maintenance costs, the 9SX / 9PX uses Eaton ABM charge management technology, which extends battery life by 50%. Instead of trickle charging, ABM monitors the charge level and recharges the batteries only when needed.
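The difference between trickle charging and charging "only when needed" can be illustrated with a simple hysteresis controller. This is a hypothetical simplification, not Eaton's actual ABM algorithm; the thresholds, self-discharge rate and charge rate are assumed values.

```python
from dataclasses import dataclass


@dataclass
class HysteresisCharger:
    """Toy 'charge only when needed' controller (hypothetical, not Eaton's ABM).

    Rests until the state of charge falls below `start_below`, then charges
    until it reaches `stop_at`. Thresholds are illustrative assumptions.
    """
    start_below: float = 0.90
    stop_at: float = 1.00
    charging: bool = False

    def step(self, charge_level: float) -> bool:
        """Return True if the charger should be on for this time step."""
        if self.charging and charge_level >= self.stop_at:
            self.charging = False  # full: stop and let the battery rest
        elif not self.charging and charge_level < self.start_below:
            self.charging = True   # drifted too low: start recharging
        return self.charging


def charger_on_steps(steps: int, self_discharge: float, charge_rate: float,
                     charger: HysteresisCharger) -> int:
    """Simulate a battery on standby (no load) and count steps with the charger on.

    A trickle charger would, by contrast, be on for every step.
    """
    level, on_steps = 1.0, 0
    for _ in range(steps):
        if charger.step(level):
            level = min(1.0, level + charge_rate)
            on_steps += 1
        else:
            level -= self_discharge
    return on_steps


if __name__ == "__main__":
    on = charger_on_steps(1000, self_discharge=0.01, charge_rate=0.05,
                          charger=HysteresisCharger())
    print(f"charger on for {on} of 1000 steps")
```

The battery spends most of its time resting instead of being held under constant charge, which is the general idea behind charge-on-demand schemes.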

For modern large data centers, whose owners care more about uninterrupted operation than about saving every ruble, the Power Xpert 9395P tower UPS is worth a look. It is 99% efficient in ESS energy-saving mode. The top model of the 9395P series is rated at 1200 kVA.

Updating power distribution units

Something as seemingly mundane as power distribution units (PDUs) can also drive up power consumption. A PDU's purpose is simple: monitoring the voltage and supplying power to the equipment. At the planning stage it is tempting to choose cheap outlet strips without remote management, but undetected phase overloads can then lead to power outages, and even UPSs will not help here.

Cheap PDUs also have low efficiency. Modern, more expensive smart models come with a high-quality transformer that meets the demanding TP-1 energy efficiency standard. TP-1 certification means roughly 2-3% higher PDU efficiency than conventional models, which seems small but adds up to pleasant savings over a year. In addition, modern PDUs simplify cable management and reduce the amount of wiring, which improves airflow. Yes, TP-1 certified PDUs are naturally more expensive, but they pay for themselves in about 6 years, while their service life is at least 20 years.
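The "pays for itself in about 6 years" logic is a simple payback calculation: the price premium divided by the yearly savings. The sketch below shows the arithmetic; the load, efficiencies, tariff and price premium are all hypothetical inputs, not figures from the article.

```python
HOURS_PER_YEAR = 8760


def annual_savings(load_kw: float, eff_old: float, eff_new: float,
                   price_per_kwh: float) -> float:
    """Money saved per year by feeding the same load through a more efficient PDU."""
    grid_kw_old = load_kw / eff_old
    grid_kw_new = load_kw / eff_new
    return (grid_kw_old - grid_kw_new) * HOURS_PER_YEAR * price_per_kwh


def simple_payback_years(extra_cost: float, yearly_savings: float) -> float:
    """Years until the price premium is recovered (no discounting, constant load)."""
    if yearly_savings <= 0:
        return float("inf")
    return extra_cost / yearly_savings


if __name__ == "__main__":
    # All inputs are hypothetical: 20 kW load, 95% -> 97.5% efficiency,
    # 6 rubles/kWh, 170,000 rubles extra for the TP-1 model.
    yearly = annual_savings(20.0, 0.95, 0.975, 6.0)
    print(f"saves {yearly:,.0f} rubles/year, "
          f"payback {simple_payback_years(170_000, yearly):.1f} years")
```

With these made-up numbers, a 2.5-point efficiency gain pays back in about six years; a higher tariff or heavier load shortens that period proportionally.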

Replacing lighting with LED

Compared to servers or cooling systems, lighting accounts for only about 1% of a data center's electricity consumption. That is modest, but upgrading the lighting pays off not only in electricity bills but also in lamp replacement costs.

Many legacy data centers are still lit with energy-saving mercury lamps, which are inferior to LEDs in everything except, perhaps, price. Mercury lamps last about 5 times less than LED lamps. An LED luminaire's brightness drops to the critical level of 70% of its original output after 50,000 hours, after which it has to be replaced. An energy-saving lamp degrades to the same level in just 10,000 hours, a little over a year of continuous operation. Thus, one replacement of LED sources corresponds to up to five replacements of mercury lamps!

LED brightness can also be easily adjusted, reducing power consumption depending on the time of day. And to produce the same illumination, LED lamps need one third less energy than energy-saving lamps.
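The lamp arithmetic above can be checked in a few lines. The sketch uses the article's figures (50,000 vs 10,000 hours to 70% brightness, one third less energy for LEDs) and assumes the lights run 24/7; the 40 W fixture wattage is hypothetical.

```python
import math

HOURS_PER_YEAR = 8760


def lamps_needed(horizon_hours: float, life_hours: float) -> int:
    """Lamps used over the horizon, counting the first one, if each is replaced at end of life."""
    return math.ceil(horizon_hours / life_hours)


def yearly_energy_kwh(watts: float, hours_per_year: float = HOURS_PER_YEAR) -> float:
    """Energy of one fixture running the given number of hours per year."""
    return watts * hours_per_year / 1000


if __name__ == "__main__":
    horizon = 50_000  # hours: one LED lifetime, as in the article
    print("fluorescent lamps:", lamps_needed(horizon, 10_000))  # 5
    print("LED lamps:", lamps_needed(horizon, 50_000))          # 1
    print(f"10,000 h of 24/7 use = {10_000 / HOURS_PER_YEAR:.2f} years")  # 1.14
    cfl_watts = 40.0                      # hypothetical fixture wattage
    led_watts = cfl_watts * (1 - 1 / 3)   # same light for one third less energy
    saved = yearly_energy_kwh(cfl_watts) - yearly_energy_kwh(led_watts)
    print(f"{saved:.1f} kWh saved per fixture per year")
```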

Using free cooling

The best resource is one that is free and renewable. For cooling servers, outdoor air and groundwater are exactly such a resource. Instead of spending huge sums running chillers, it is much cheaper to cool equipment with cold from outside. Free cooling, in which cold filtered outside air or water enters the cooling system, is rightly considered one of the best ways to reduce data center costs. By abandoning air conditioners in favor of free cooling, PUE can be reduced from about 1.8 to 1.1.
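What a drop from PUE 1.8 to 1.1 means in kilowatt-hours and money depends on the IT load and the tariff. A minimal sketch, assuming a constant 1 MW IT load and a hypothetical tariff of 5 rubles per kWh:

```python
HOURS_PER_YEAR = 8760


def facility_energy_kwh(it_load_kw: float, pue: float) -> float:
    """Yearly facility energy at constant IT load: IT energy multiplied by PUE."""
    return it_load_kw * HOURS_PER_YEAR * pue


def free_cooling_savings(it_load_kw: float, pue_before: float, pue_after: float,
                         price_per_kwh: float) -> tuple:
    """Return (kWh saved per year, money saved per year) when PUE drops."""
    saved_kwh = (facility_energy_kwh(it_load_kw, pue_before)
                 - facility_energy_kwh(it_load_kw, pue_after))
    return saved_kwh, saved_kwh * price_per_kwh


if __name__ == "__main__":
    # Assumed: 1 MW of IT load, hypothetical tariff of 5 rubles per kWh.
    kwh, rub = free_cooling_savings(1_000, 1.8, 1.1, 5.0)
    print(f"{kwh:,.0f} kWh and {rub:,.0f} rubles saved per year")
```

Under these assumptions the facility saves about 6.1 GWh a year, roughly 39% (0.7 / 1.8) of its original consumption, while the IT load stays the same.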

When it comes to free cooling, the Scandinavian countries are beyond competition. In Sweden, where everyone is passionate about making everything environmentally friendly, heat from data centers is used to heat water for home heating systems. The Irish harness the island winds, which blow across the country even in summer, for free cooling, and the Danes pump icy groundwater, which stays equally cold in winter and summer, into their cooling systems.

Google is especially proud that by using free cooling in its European data centers, the company saves water that would otherwise have gone to power plants generating electricity for chillers (if it had any). Source: Google

The climate of Russia's populated central part, not to mention the north-west, pleases free cooling supporters (and disappoints everyone else) with an exceptionally small number of hot days. According to the Russian Hydrometeorological Center, in central Russia the temperature exceeds +22 °C only 3.8% of the time in a year, and the average annual temperature in St. Petersburg is just +5.6 °C. For comparison, in Arizona, where Apple has built a huge data center that also uses free cooling, the temperature rarely drops below +6 °C even in January, and the annual average is above +16 °C. As a result, for at least a quarter of the year Apple has to run power-hungry chillers to cool its 120,000 m² data center.
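A first estimate of how often a site can rely on free cooling is the share of hours when the outdoor temperature stays at or below a threshold. The sketch uses the article's +22 °C figure as that threshold, which is an assumption (the real limit depends on the cooling design and the allowed server inlet temperature), and a short made-up temperature series; in practice you would feed it a year of hourly weather data.

```python
def free_cooling_share(hourly_temps_c, threshold_c=22.0):
    """Fraction of hours in which outdoor air is at or below the threshold."""
    temps = list(hourly_temps_c)
    if not temps:
        raise ValueError("no temperature data")
    cool_hours = sum(1 for t in temps if t <= threshold_c)
    return cool_hours / len(temps)


def chiller_hours_per_year(share_free: float, hours_per_year: int = 8760) -> float:
    """Hours per year when free cooling alone is not enough."""
    return (1 - share_free) * hours_per_year


if __name__ == "__main__":
    sample = [18, 21, 23, 25, 19, 15, 22, 24]  # made-up hourly readings, °C
    print(free_cooling_share(sample))  # 0.625
    # Article's figure for central Russia: above +22 °C only 3.8% of the year.
    print(round(chiller_hours_per_year(1 - 0.038)))  # 333
```

For comparison, the "at least a quarter of the year" quoted for Arizona corresponds to about 2,190 chiller hours.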

This does not mean it is time to settle the virgin lands of Siberia. Although Russia's climate favors the most efficient use of free cooling, that is where the advantages of the country's north for data center construction end. The drawbacks, however, are plentiful: poor transport access, a shortage of highly qualified staff, and the poor state of telecommunications and power networks.

Nevertheless, the first steps toward new data centers in the north have already been taken: Petrozavodsk State University has begun deploying a very serious data center that aims to capture 20% of the Russian cloud storage market. The Karelian weather is expected to cut operating costs by about 40%. University staff and graduates solve the staffing problem, and the proximity to St. Petersburg solves the question of connecting to a traffic exchange point.

One of Yandex's data centers is located in cold Finland. Thanks to free cooling, its PUE was reduced to 1.1.

Calling AI for help

Monitoring and managing the cooling of a large data center is very laborious, and there is a high risk of overcooling one area while overlooking another. Cooling efficiency depends on many changing factors: the weather outside, open windows in the premises, air humidity, the condition of the cooling towers, the load on the equipment. People cannot track hundreds or thousands of parameters in real time around the clock, so cooling management has recently been entrusted to artificial intelligence.

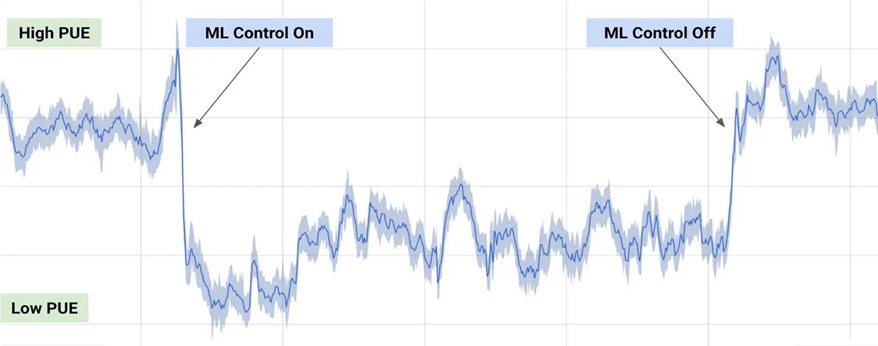

In 2014, Google acquired DeepMind, known for AlphaGo, the first program to beat a professional Go player. Based on DeepMind's work, a software system was built for Google's data centers that not only monitors sensor readings and load but also draws conclusions from the accumulated history of observations and incoming weather forecasts. With AI in place, the energy spent on cooling Google's data centers dropped by an impressive 40%, while all the equipment stayed the same.

Results of DeepMind's AI in a Google data center: when machine learning control was switched on, the system reduced the energy used for cooling. Source: DeepMind

Individual AI-based solutions are also used in Sberbank's data center in Skolkovo. Since the data center uses free cooling 330 days a year, the system monitors the outside temperature and the weather forecast, optimizes cooling energy consumption accordingly, and even decides when to supply additional cold air if it is about to get warmer.
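To illustrate how a forecast can drive cooling decisions, here is a very simplified rule-based stand-in: each forecast hour is mapped to free cooling, mixed mode or chillers, and pre-cooling starts shortly before free cooling stops being enough. This is not Sberbank's or DeepMind's system (those rely on models trained on many sensors); the mode names, thresholds and one-hour lead time are hypothetical.

```python
from enum import Enum


class Mode(Enum):
    FREE = "free cooling only"
    MIXED = "free cooling with chiller top-up"
    CHILLER = "chillers only"


def plan_cooling(forecast_c, free_max=18.0, mixed_max=24.0):
    """Pick a cooling mode for each forecast hour (thresholds are assumptions)."""
    plan = []
    for t in forecast_c:
        if t <= free_max:
            plan.append(Mode.FREE)
        elif t <= mixed_max:
            plan.append(Mode.MIXED)
        else:
            plan.append(Mode.CHILLER)
    return plan


def hours_needing_chillers(plan):
    """Hours in which chillers must run at least partially."""
    return sum(1 for mode in plan if mode is not Mode.FREE)


def precool_start_hours(plan, lead=1):
    """Hours at which to start pre-cooling, `lead` hours before free cooling stops being enough."""
    starts = []
    for i in range(1, len(plan)):
        if plan[i] is not Mode.FREE and plan[i - 1] is Mode.FREE:
            starts.append(max(0, i - lead))
    return starts


if __name__ == "__main__":
    forecast = [12, 15, 19, 25, 20, 14]  # made-up hourly forecast, °C
    plan = plan_cooling(forecast)
    print([m.name for m in plan])  # ['FREE', 'FREE', 'MIXED', 'CHILLER', 'MIXED', 'FREE']
    print(hours_needing_chillers(plan), precool_start_hours(plan))  # 3 [1]
```

A learning system would replace the fixed thresholds with predictions based on the observed history, but the decision structure (forecast in, cooling plan out) stays the same.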

The American company LitBit went even further, offering an AI "employee" for data centers that diagnoses the facility's condition from visual and acoustic data. The AI analyzes the sound and vibration emitted by the equipment and compares them with reference signatures to help detect degrading components.
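Comparing equipment sound against a reference signature can be sketched with the Goertzel algorithm, which measures signal power at a few chosen frequencies. This is a hypothetical illustration, not LitBit's method: the monitored frequencies, sample rate and 2x change threshold are assumptions, and the "recordings" are synthetic sine waves.

```python
import math


def goertzel_power(samples, sample_rate, freq):
    """Signal power near `freq` (nearest DFT bin) via the Goertzel algorithm."""
    n = len(samples)
    k = round(n * freq / sample_rate)
    coeff = 2.0 * math.cos(2.0 * math.pi * k / n)
    s_prev = s_prev2 = 0.0
    for x in samples:
        s = x + coeff * s_prev - s_prev2
        s_prev2, s_prev = s_prev, s
    power = s_prev ** 2 + s_prev2 ** 2 - coeff * s_prev * s_prev2
    return max(0.0, power)  # clamp tiny negative values caused by rounding


def signature(samples, sample_rate, freqs):
    """Acoustic fingerprint: power at each monitored frequency."""
    return {f: goertzel_power(samples, sample_rate, f) for f in freqs}


def anomalies(reference, current, ratio=2.0, eps=1e-9):
    """Frequencies whose power changed by more than `ratio` times vs the reference."""
    flagged = []
    for f, ref_p in reference.items():
        change = (current[f] + eps) / (ref_p + eps)
        if change > ratio or change < 1.0 / ratio:
            flagged.append(f)
    return flagged


def synth(tones, sample_rate, n):
    """Synthetic test signal: sum of (frequency, amplitude) sine waves."""
    return [sum(a * math.sin(2 * math.pi * f * i / sample_rate) for f, a in tones)
            for i in range(n)]


if __name__ == "__main__":
    rate, n, watched = 8000, 800, [100, 500, 1200]
    healthy = synth([(100, 1.0), (500, 0.5)], rate, n)
    worn = synth([(100, 1.0), (500, 0.5), (1200, 0.8)], rate, n)  # new high-pitched whine
    ref = signature(healthy, rate, watched)
    print(anomalies(ref, signature(worn, rate, watched)))  # [1200]
```

Goertzel is used here instead of a full FFT because only a handful of frequencies are watched; a real system would learn its reference signatures from recordings of healthy equipment.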

Using modular data centers

Building or expanding a data center is not only expensive but also slow, largely because a permanent structure has to be designed and the project has to pass through the whole chain of required approvals. It takes 9-12 months from signing the contract to commissioning a compact data center of 200-300 m², and that assumes the paperwork does not get stuck with any of the authorities that issue building permits.

The concept of modular data centers, building blocks from which a data center can be quickly assembled like a construction set, emerged in the mid-2000s. Sun is considered the pioneer here with its Sun Modular Datacenter: a data center inside a standard shipping container that holds eight 40U racks of servers and networking equipment plus a cooling system. Deploying a Sun Modular Datacenter cost about 1% of building a traditional data center, it could easily be moved from site to site, and the only connections it needed were electricity, water and network.

The Sun Modular Datacenter used by archive.org in 2009. Source: NapoliRoma / Wikimedia Commons

The market now offers modular data centers in a wide variety of designs, not only as containers. Modules are considered the most efficient way to build or expand a data center quickly, without unnecessary costs and lengthy approvals. They take up less space and use it more efficiently thanks to pre-planned layouts, consume less power and often have better cooling, and can easily be moved closer to sources of cheap electricity (near large power plants). So before planning to build or expand a data center, take a look at ready-made modules.

Powering the data center from renewable energy sources

Compared to Europe, Russia has an important economic advantage that makes it a very attractive place for data centers: extremely cheap electricity. In some regions it costs as little as 3 rubles per kWh, versus about 23 rubles per kWh in Germany and 9 rubles per kWh on average in the USA. Plus, Russian authorities do not yet treat the environment as scrupulously as those in the Scandinavian countries. Therefore, switching data centers to autonomous power from renewable sources is not yet on our agenda.
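To put the tariff gap in perspective, the sketch below estimates the yearly electricity bill of the same facility at the three prices quoted above. The constant 1 MW IT load and the world-average PUE of 1.67 are assumptions for the calculation; real tariffs also vary by contract and time of day.

```python
HOURS_PER_YEAR = 8760

TARIFFS_RUB_PER_KWH = {
    "Russia (cheapest regions)": 3.0,
    "USA (average)": 9.0,
    "Germany": 23.0,
}


def annual_bill_rub(it_load_kw: float, pue: float, tariff: float) -> float:
    """Yearly electricity cost of the whole facility at constant IT load."""
    return it_load_kw * HOURS_PER_YEAR * pue * tariff


if __name__ == "__main__":
    for region, tariff in TARIFFS_RUB_PER_KWH.items():
        bill = annual_bill_rub(1_000, 1.67, tariff)
        print(f"{region}: {bill / 1e6:.1f} million rubles per year")
```

The bill scales linearly with the tariff, so the same facility costs about 7.7 times more to power in Germany than in the cheapest Russian regions.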

In the West, however, data center owners are forced to at least declare that they are minimizing their carbon footprint, offsetting emissions from data center operations by financing environmental initiatives. This, together with high electricity prices, is pushing large companies to switch their large data centers to autonomous power. Abroad, the high upfront costs pay off quickly and benefit the company's image.

Apple, one of the pioneers of green technology, was the first to switch its data centers to electricity from its own solar and wind farms. It went so far that in 2016 the company applied for permission to sell surplus generation from its American solar power plants with capacities of 90 and 130 MW.

Autonomous power is useful in areas far from powerful grids. For example, the Icelandic Verne Global data center is built near several geothermal power plants. Although the company does not own them, they are environmentally friendly, renewable and very stable energy sources.

In Germany, green energy already accounts for more than half of all generation. Unfortunately, the share of renewable energy in Russia hovers around 4%, two-thirds of it hydropower. But for new data centers, it does no harm to lay the groundwork for the future by considering autonomous power from a solar or wind plant.

Saving is never superfluous

The world's largest and most expensive data center belongs to the telecom giant China Telecom. It covers 994,000 m² and cost $3 billion to build. The largest Russian data center was built by Sberbank in Skolkovo. It is 30 times smaller than the Chinese giant, at only 33,000 m². But thanks to well-executed free cooling, AI, and heating the premises with waste heat from the equipment, it saves about 100 million rubles a year on maintenance. Given the size of China Telecom's data center, you can be sure that every possible way to cut costs has been applied there too. However absurd and insignificant a cost-cutting measure may seem, over a year it can bring very noticeable savings, proportional to the size of the data center.