More and more often we communicate with gadgets by voice. Well-known assistants like Alexa and Siri have been joined by in-car voice interfaces such as Apple CarPlay and Android Auto. There are even applications that identify users by their voice biometrics. So what if you could also build new products with voice commands?

This is where speech programming comes in: an approach to software development in which developers write code with their voice instead of a keyboard and mouse. Spoken commands manipulate the code, and developers can define new commands of their own to maintain and automate their workflow.

Speech programming is not as easy as it might seem: there is complex, multilayered technology behind it. The Serenade voice coding app has its own built-in speech recognition engine. Unlike Google's solution, which recognizes everyday spoken language, Serenade's engine is designed to work with code. As soon as the programmer speaks the text of the program, Serenade passes it to its natural language processing (NLP) engine, whose machine learning models are trained to detect common programming tokens and translate them into syntactically correct code.
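
The two-stage pipeline described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Serenade's implementation: the speech engine is stubbed out as any function from audio bytes to a transcript, and the `SPOKEN_TOKENS` table and the `to_code` and `voice_to_code` functions are hypothetical names that cover only a handful of tokens.

```python
from typing import Callable

# Stage 1 (stubbed): a speech engine maps raw audio to a text transcript.
# A real engine runs trained models; here it is any callable with this shape.
SpeechEngine = Callable[[bytes], str]

# Stage 2: hypothetical table of spoken programming tokens and their symbols.
SPOKEN_TOKENS = {
    ("open", "paren"): "(",
    ("close", "paren"): ")",
    ("equals",): "=",
    ("plus",): "+",
    ("comma",): ",",
}
# Try longer spoken phrases first so "open paren" is matched as one token.
ORDERED_TOKENS = sorted(SPOKEN_TOKENS.items(), key=lambda kv: len(kv[0]), reverse=True)
NO_SPACE_BEFORE = {")", ","}
NO_SPACE_AFTER = {"("}


def to_code(transcript: str) -> str:
    """Translate a transcript of spoken tokens into a line of code."""
    words = transcript.lower().split()
    tokens: list[str] = []
    i = 0
    while i < len(words):
        for phrase, symbol in ORDERED_TOKENS:
            if tuple(words[i:i + len(phrase)]) == phrase:
                tokens.append(symbol)
                i += len(phrase)
                break
        else:
            tokens.append(words[i])  # anything else passes through as an identifier
            i += 1
    # Join tokens with single spaces, except just inside parentheses and before commas.
    code = ""
    for tok in tokens:
        if code and tok not in NO_SPACE_BEFORE and code[-1] not in NO_SPACE_AFTER:
            code += " "
        code += tok
    return code


def voice_to_code(audio: bytes, engine: SpeechEngine) -> str:
    """Run the two stages in sequence: speech recognition, then translation."""
    return to_code(engine(audio))
```

For example, with a stub engine that returns "total equals open paren a plus b close paren", `voice_to_code` yields `total = (a + b)`. Keeping the engine behind a plain callable means the translation stage can be tested without any audio at all.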

In 2020, Serenade raised $2.1 million in a seed round. The company was founded a year earlier, after its founder was diagnosed with carpal tunnel syndrome.

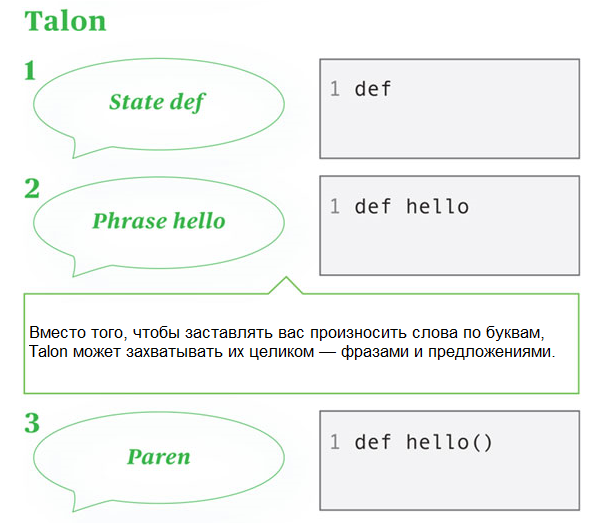

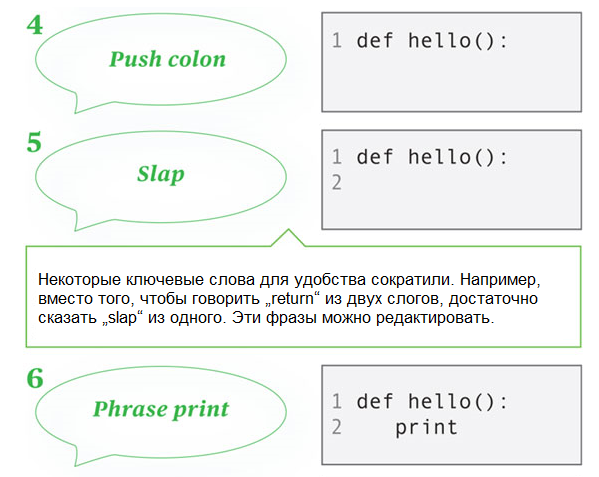

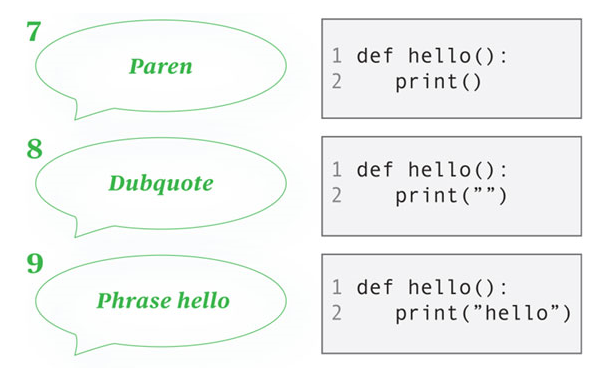

In 2017, Ryan Hileman also left his job as a programmer because of pain in his hands and started building Talon, a platform for working on a computer without a keyboard. “The idea behind Talon is to completely replace the keyboard and mouse for everyone,” he says.

Talon combines several components: speech recognition, eye tracking, and noise recognition. Its speech recognition is based on Facebook's open-source wav2letter toolkit, to which Hileman added commands for generating code. Eye tracking and noise recognition let the user emulate a mouse: eye movements steer the cursor around the screen, and a click is registered when the user makes a short popping sound with the mouth:

“This sound is easy to make. It takes little effort and can be recognized with low latency, so it is a fast, nonverbal way to click the mouse that doesn't strain your voice.”

- Talon creator Ryan Hileman

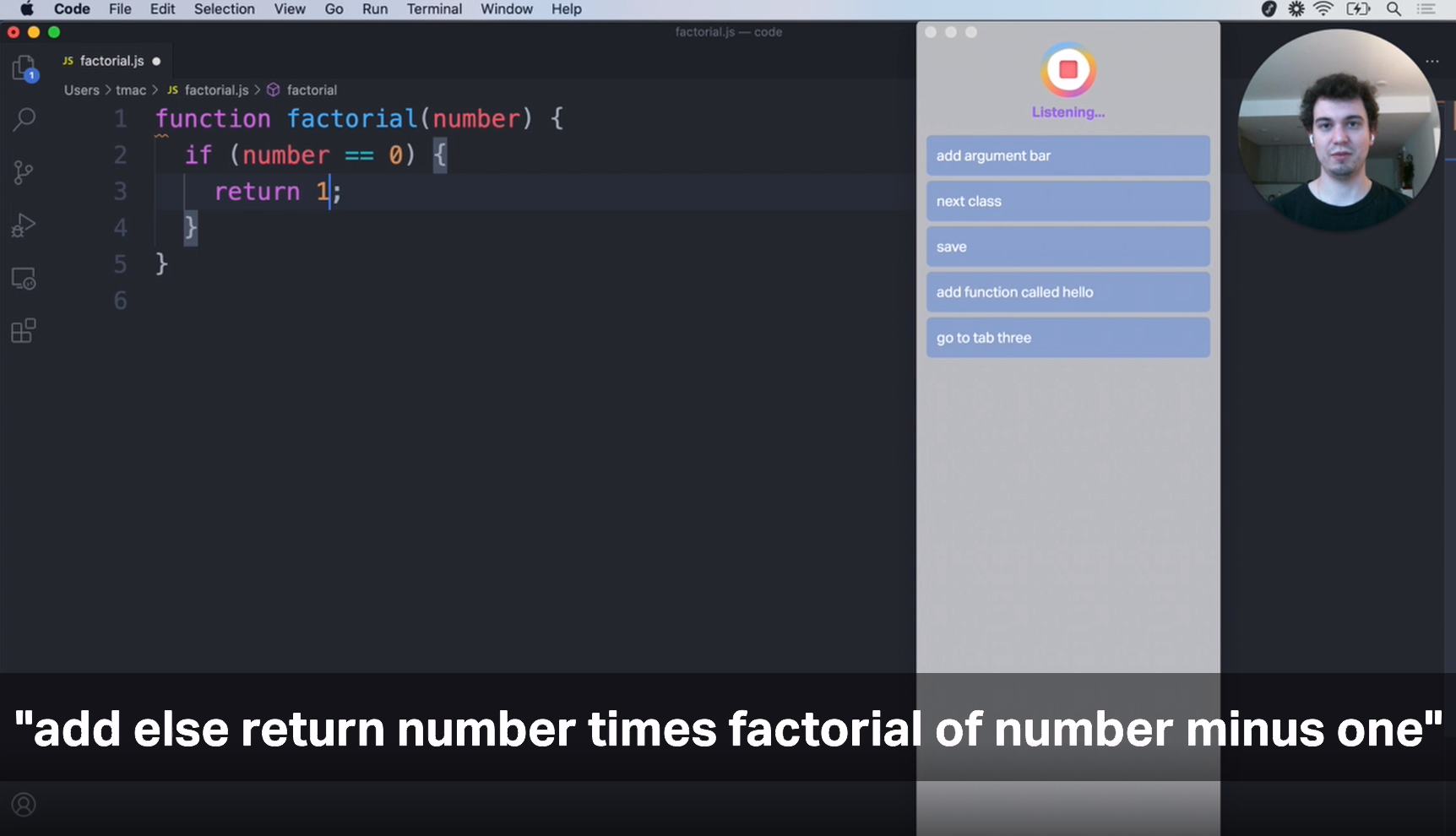

In 2019, Emily Shea demonstrated what working in Talon looks like. To an outsider, programming in this environment sounds like a conversation in a foreign language. Her video is full of voice commands such as "slap" (press Enter), "undo" (delete), "spring 3" (go to the third line of the file), and "phrase name op equals snake extract word paren mad", which produces the line of code `name = extract_word(m)`.
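
To see how a command string like the last one can become code, here is a toy interpreter in Python. It is a sketch under explicit assumptions, not Talon's actual grammar or API: `phrase` inserts the following words separated by spaces, `op equals` inserts ` = `, `snake` joins the following words with underscores, `paren` opens a bracket that is assumed to be closed automatically at the end, and `mad` stands for the letter m. The `transcribe` function and the `ALPHABET` and `OPERATORS` tables are hypothetical.

```python
# Hypothetical subset of a spoken alphabet: one word per letter.
ALPHABET = {"air": "a", "bat": "b", "mad": "m"}
# Hypothetical operator words, spoken after the prefix "op".
OPERATORS = {"equals": " = ", "plus": " + "}
# Command words; a run of free-form words after "phrase" or "snake" stops at any of these.
KEYWORDS = {"phrase", "snake", "op", "paren"} | set(ALPHABET)


def transcribe(utterance: str) -> str:
    """Turn a sequence of spoken command words into a line of code."""
    words = utterance.split()
    out: list[str] = []
    closers: list[str] = []  # brackets to close at the end of the utterance
    i = 0
    while i < len(words):
        word = words[i]
        if word in ("phrase", "snake"):
            # Collect free-form words until the next command word.
            separator = " " if word == "phrase" else "_"
            i += 1
            run = []
            while i < len(words) and words[i] not in KEYWORDS:
                run.append(words[i])
                i += 1
            out.append(separator.join(run))
        elif word == "op":
            if i + 1 >= len(words) or words[i + 1] not in OPERATORS:
                raise ValueError("'op' must be followed by a known operator word")
            out.append(OPERATORS[words[i + 1]])
            i += 2
        elif word == "paren":
            # Assumption: the bracket is auto-closed, as many editors do.
            out.append("(")
            closers.append(")")
            i += 1
        elif word in ALPHABET:
            out.append(ALPHABET[word])
            i += 1
        else:
            raise ValueError(f"unknown command word: {word!r}")
    out.extend(reversed(closers))
    return "".join(out)
```

With these rules, `transcribe("phrase name op equals snake extract word paren mad")` returns `name = extract_word(m)`. Because free-form runs stop at the next command word, `snake extract word` ends exactly where `paren` begins.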

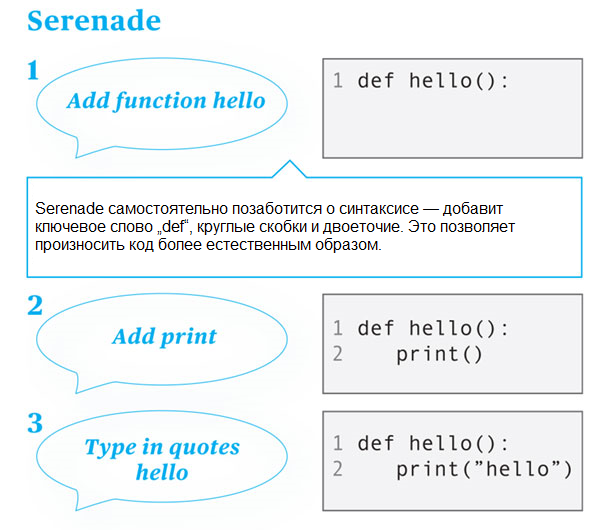

Programming in Serenade is more natural. You can say "delete import" to remove the import statement at the top of the file, or "build" to start a build. Saying "add function factorial" creates a function named factorial in JavaScript. The app takes care of the syntax, including the `function` keyword, parentheses, and curly braces, so you don't have to dictate every symbol.
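
The idea that the tool, not the speaker, supplies the syntax can be illustrated with a small command dispatcher. This Python sketch is hypothetical and does not reflect Serenade's internals: `apply_command` handles only "add function NAME" and "delete import", treats the file as a list of JavaScript source lines, and assumes that multi-word names should become camelCase identifiers.

```python
def apply_command(command: str, lines: list[str]) -> list[str]:
    """Apply a spoken editing command to a file held as a list of lines.

    Returns a new list; the input list is not modified.
    """
    words = command.lower().split()
    if len(words) >= 3 and words[:2] == ["add", "function"]:
        # Spoken words become a camelCase identifier: "compute total" -> computeTotal.
        name = words[2] + "".join(w.capitalize() for w in words[3:])
        # The dispatcher, not the speaker, supplies the keyword, parentheses and braces.
        return lines + [f"function {name}() {{", "}"]
    if words == ["delete", "import"]:
        # Remove the first import statement, which normally sits at the top of the file.
        for i, line in enumerate(lines):
            if line.lstrip().startswith("import "):
                return lines[:i] + lines[i + 1:]
        return list(lines)
    raise ValueError(f"unsupported command: {command!r}")
```

Calling `apply_command("add function factorial", [])` returns the two lines `function factorial() {` and `}`: the speaker said three words, and the sketch filled in the rest.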

Serenade's models are trained to work with audio from a built-in laptop microphone, although a good external microphone is ideal because it helps cut out background noise.

If you plan to use Talon with eye tracking, you will need an eye tracker, although the environment works fine without one. Open-source voice coding platforms such as Aenea and Caster are free, but they rely on the Dragon speech recognition engine, which is sold separately. However, Caster also supports the open-source Kaldi speech recognition toolkit and Windows Speech Recognition, which comes preinstalled with Windows.

Its advocates see clear advantages:

"Describing in words what you want to do is much easier than using the keyboard: just say 'move these three lines down' or 'duplicate this method'."

- Serenade Labs co-founder Tommy MacWilliam

Speech programming lets people with injuries or chronic illnesses keep working in the field they love. “Being able to use your voice and take your hands out of the equation has made controlling the computer more flexible,” says Emily Shea. Voice programming could also lower the barrier to entry into software development.

"If people can think about a program in a logical and understandable way, machine learning can take over the job of turning a person's thoughts into syntactically correct code."

- Serenade Labs co-founder Tommy MacWilliam

Speech programming is still in its infancy. Its widespread adoption depends on how hard it will be for software engineers to give up the keyboard and mouse. Coding without these devices also opens the door to brain-computer interfaces that could turn human thoughts into code, or even into ready-made software.