Let's move on to basic concepts.

Software testing is the verification that a program's actual behavior matches its expected behavior, carried out on a finite set of tests chosen in a defined way.

The purpose of testing is to verify that the software meets the requirements, to build confidence in its quality, and to find errors before the users of the program do.

Why is software testing required?

- Testing helps confirm that the software performs as required.

- It reduces the number of coding cycles by identifying problems early. Detecting problems earlier in development keeps resources well used and prevents cost increases.

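The seven principles of testing:

- Principle 1 - Testing shows the presence of defects. Testing can show that defects are present, but it cannot prove that there are none.

- Principle 2 - Exhaustive testing is impossible. Testing every combination of inputs and preconditions is not feasible except in trivial cases, so tests are chosen based on risks and priorities.

- Principle 3 - Early testing. Testing activities should start as early as possible in the development life cycle.

- Principle 4 - Defect clustering. Most defects tend to be concentrated in a small number of modules.

- Principle 5 - Pesticide paradox. If the same tests are repeated over and over, they eventually stop finding new defects, so tests need to be reviewed and updated regularly.

- Principle 6 - Testing is context dependent. Testing is done differently in different contexts. For example, safety-critical software is tested differently from an e-commerce site.

- Principle 7 - Absence-of-errors fallacy. The absence of found defects during testing does not always mean that the product is ready for release. The system should also be user-friendly and meet the user's expectations and needs.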
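A rough calculation shows why principle 2 (exhaustive testing is impossible) holds even for small programs. The sketch below counts the input combinations of a made-up form. The field names, the value counts, and the rate of 1,000 tests per second are all assumptions chosen for illustration.

```python
import math

# Hypothetical input form: each entry is the number of distinct values a field accepts.
# All field names and sizes are illustrative assumptions.
FIELD_VALUE_COUNTS = {
    "age": 130,              # integers 0..129
    "country": 195,          # one of 195 country codes
    "newsletter_opt_in": 2,  # checkbox
    "pin_code": 10 ** 6,     # six decimal digits
}


def total_combinations(value_counts: dict[str, int]) -> int:
    """Return the number of distinct input combinations across all fields."""
    return math.prod(value_counts.values())


def days_to_run(combinations: int, tests_per_second: int = 1000) -> float:
    """Return how many days it takes to run every combination once."""
    seconds = combinations / tests_per_second
    return seconds / (60 * 60 * 24)


if __name__ == "__main__":
    combos = total_combinations(FIELD_VALUE_COUNTS)
    print(f"{combos:,} combinations, about {days_to_run(combos):,.0f} days at 1,000 tests/s")
```

Under these assumptions a four-field form already yields 50.7 billion combinations, which is why testing works with a finite, deliberately chosen set of tests.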

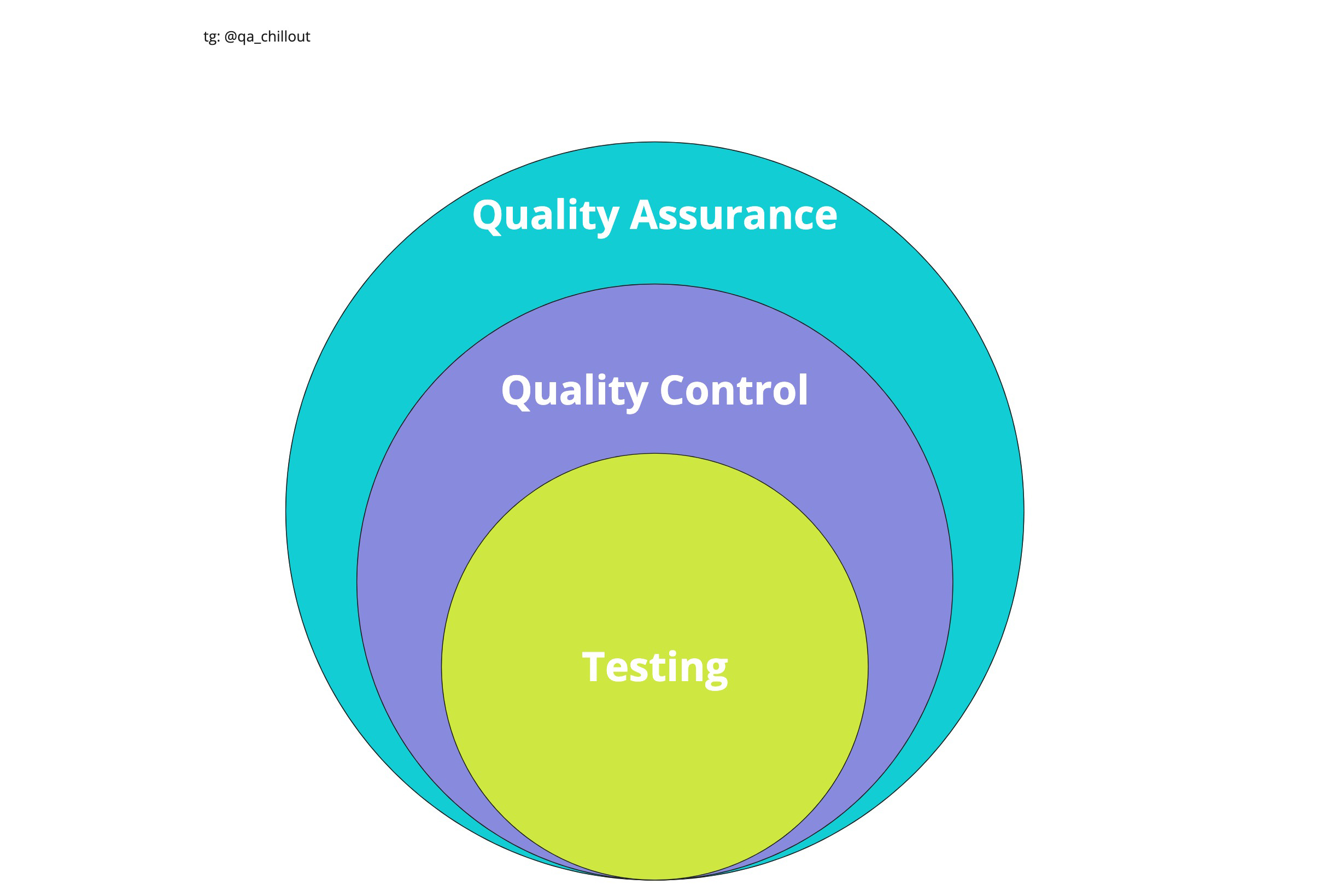

Quality Assurance (QA) and Quality Control (QC) are often treated as interchangeable terms, but they are different, even though in practice the two processes have some similarities.

QC (Quality Control) is product quality control: the analysis of test results and of the quality of new versions of the released product.

Quality control tasks include:

- checking software readiness for release;

- verifying that the product meets the requirements and the quality standards of the project.

QA (Quality Assurance) is product quality assurance: exploring ways to change and improve the development process and communication in the team. Testing is only one aspect of quality assurance.

Quality assurance objectives include:

- verification of technical characteristics and software requirements;

- risk assessment;

- scheduling tasks to improve product quality;

- preparation of documentation, test environment and data;

- testing;

- analysis of test results, as well as drawing up reports and other documents.

Verification and validation are two concepts closely related to testing and quality assurance processes. Unfortunately, they are often confused, although the differences between them are quite significant.

Verification is the process of evaluating a system to determine whether the results of the current development phase satisfy the conditions formulated at the start of that phase.

Validation determines whether the developed software meets the user's expectations, needs, and requirements for the system.

The documentation that is used on software development projects can be roughly divided into two groups:

- Project documentation - includes everything related to the project as a whole.

- Product documentation - the part of the project documentation, singled out separately, that relates directly to the application or system being developed.

Testing stages:

- Product analysis

- Working with requirements

- Developing a test strategy and planning quality control procedures

- Creation of test documentation

- Prototype testing

- Main testing

- Stabilization

- Operation

Software development stages are the stages that a development team goes through before the program becomes available to a wide range of users.

The software product goes through the following stages:

- analysis of project requirements;

- design;

- implementation;

- product testing;

- deployment and support.

Requirements

Requirements are a specification (description) of what needs to be implemented, without detailing the technical side of the solution.

Quality criteria for requirements:

- Correctness - each requirement must accurately describe the desired functionality.

- Verifiability - a requirement should be formulated so that there is a way to verify unambiguously whether it has been fulfilled.

- Completeness - each requirement must contain all the information the developer needs to implement the functionality.

- Unambiguity - the requirement is written without non-obvious abbreviations or vague wording and allows only one interpretation.

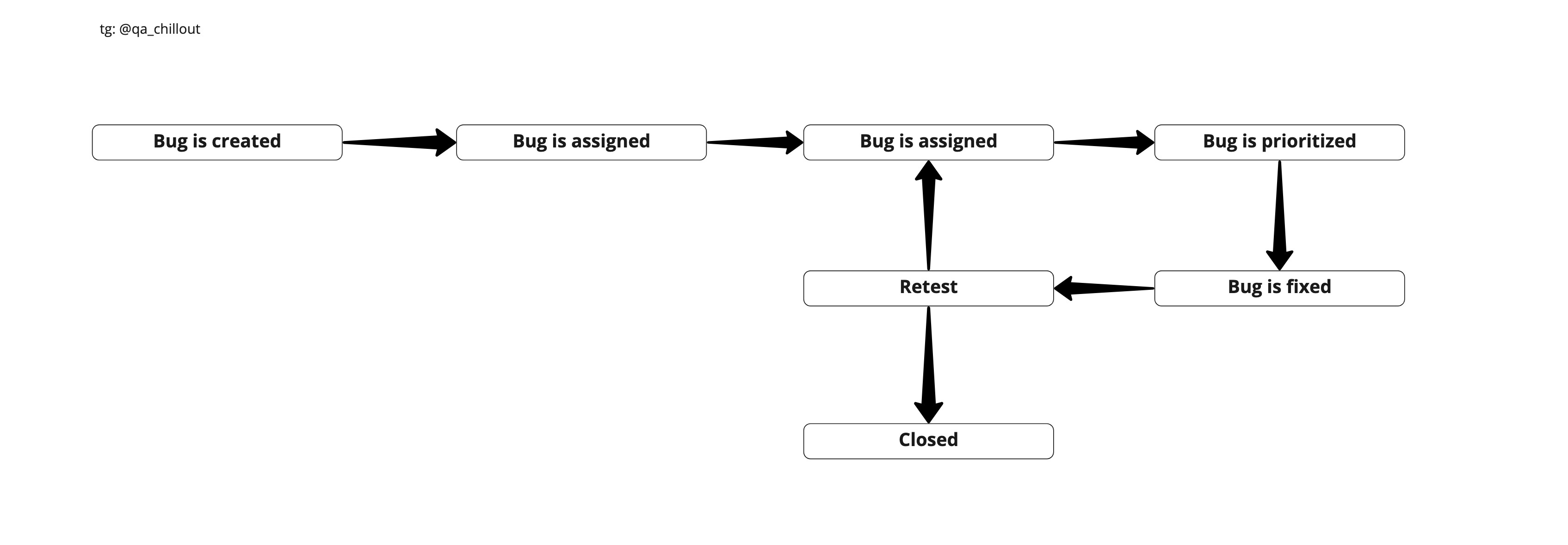

A defect (bug) is a deviation of the actual result from the expected one.

A bug report is a document that describes the situation that led to the discovery of a defect, including its causes and the expected result.

Defect report attributes:

- Unique identifier (ID) - assigned automatically by the system.

- Summary (topic, short description) - the essence of the defect stated briefly, following the rule "What? Where? When?"

- Description - a broader description of the essence of the defect (specified optionally).

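- Steps to reproduce - a sequential description of the actions that led to the defect. Describe them in as much detail as possible, including the specific input values.

- Actual result - what actually happened after the steps were performed.

- Expected result - what should have happened according to the requirements.

- Attachments - supporting files such as screenshots or logs.

- Severity - the degree of damage the defect causes.

- Priority - how quickly the defect should be fixed.

- Status - the current state of the defect in its life cycle.

- Environment - the environment in which the defect was found.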
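The attributes of a defect report map naturally onto a structured record. The sketch below is one possible representation, not the schema of any particular bug tracker. The class name, field names, the `missing_fields` helper, and the sample data are all illustrative assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class BugReport:
    """A defect report record; every field name here is an illustrative assumption."""
    report_id: str
    summary: str
    steps_to_reproduce: list[str]
    actual_result: str
    expected_result: str
    severity: str
    priority: str
    environment: str
    status: str = "Open"
    description: str = ""  # optional, as noted in the attribute list
    attachments: list[str] = field(default_factory=list)

    def missing_fields(self) -> list[str]:
        """Return the names of required fields that were left empty."""
        required = {
            "summary": self.summary,
            "actual_result": self.actual_result,
            "expected_result": self.expected_result,
            "environment": self.environment,
        }
        missing = [name for name, value in required.items() if not value.strip()]
        if not self.steps_to_reproduce:
            missing.append("steps_to_reproduce")
        return missing


# Hypothetical report used only to show the record in use.
report = BugReport(
    report_id="BUG-1",
    summary="Login button is disabled on the sign-in page after a failed attempt",
    steps_to_reproduce=["Open the sign-in page", "Enter a wrong password", "Submit"],
    actual_result="The Login button stays disabled",
    expected_result="The Login button becomes active again",
    severity="S3",
    priority="P2",
    environment="Chrome on Windows, test environment",
)
print(report.missing_fields())
```

A check like `missing_fields` is a cheap way to reject reports that cannot be reproduced because the steps or the expected result were never written down.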

Severity vs Priority

Severity shows the degree of damage that the defect causes to the project. Severity is set by the tester.

Defect severity grading:

- Blocker (S1) - a blocking error that renders the application inoperative, so that further work with the system under test or its key functions becomes impossible. Testing of a significant part of the functionality is not possible at all.

- Critical (S2)

- Major (S3)

- Minor (S4)

- Trivial (S5) - almost always a GUI defect, such as a typo in the text or a mismatched font or shade. It can also be a poorly reproducible error that does not concern business logic, a problem with third-party libraries or services, or a problem that has no effect on the overall quality of the product.

Priority indicates how quickly the defect should be fixed. Priority is set by the manager, team lead, or customer.

Defect priority grading:

- P1 (High) - the error must be fixed as soon as possible because its presence is critical to the project.

- P2 (Medium) - the error must be fixed. Its presence is not critical, but it does need to be resolved.

- P3 (Low)
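Severity and priority are independent scales: a defect can be severe but not urgent, or trivial but urgent. The sketch below encodes the S1-S5 and P1-P3 levels as enums and orders a work queue by priority first, then severity. The sample defects and the `triage_order` helper are hypothetical, and the sort rule is only one possible team convention.

```python
from enum import IntEnum


class Severity(IntEnum):
    BLOCKER = 1   # S1
    CRITICAL = 2  # S2
    MAJOR = 3     # S3
    MINOR = 4     # S4
    TRIVIAL = 5   # S5


class Priority(IntEnum):
    HIGH = 1    # P1
    MEDIUM = 2  # P2
    LOW = 3     # P3


def triage_order(defects):
    """Sort (title, severity, priority) tuples by priority, then by severity."""
    return sorted(defects, key=lambda defect: (defect[2], defect[1]))


# Hypothetical defects: note that severity and priority do not move together.
DEFECTS = [
    ("Crash in a rarely used legacy export", Severity.CRITICAL, Priority.LOW),
    ("Typo in the company name on the home page", Severity.TRIVIAL, Priority.HIGH),
    ("Checkout button does nothing", Severity.BLOCKER, Priority.HIGH),
]

for title, severity, priority in triage_order(DEFECTS):
    print(f"P{priority.value} / S{severity.value}: {title}")
```

In this example the typo is fixed before the crash, because the person who sets priority judged it more urgent even though its severity is the lowest.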

Issue types:

- Epic

- Story

- Task

- Sub-task

- Bug

Environments:

- Development environment (Development Env)

- Test environment (Test Env)

- Integration environment (Integration Env)

- Preview / pre-production environment (Preview, Preprod Env) - used, among other things, for end-to-end testing and User Acceptance Testing (UAT).

- Production environment (Production Env)

Software release stages:

- Pre-Alpha

- Alpha

- Beta

- Release Candidate (RC): based on beta test feedback, changes are made to the software and the bug fixes are tested. At this point no drastic changes to functionality are wanted, only checks for bugs. An RC may also be released to the public.

- Release: everything works, and the software is released to the public.

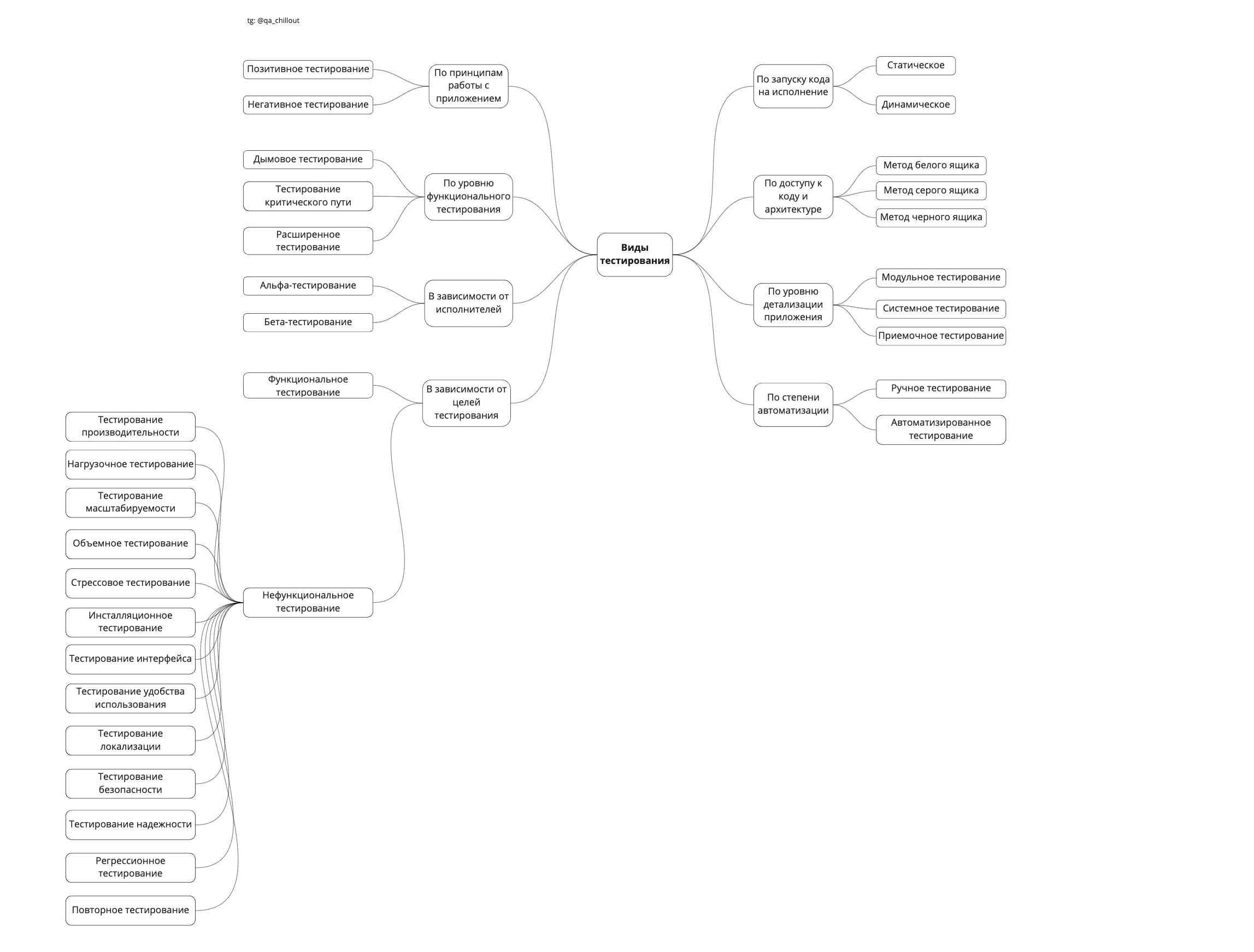

The main types of software testing

A type of testing is a set of activities aimed at testing the specified characteristics of a system or its part, based on specific goals.

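- Classification by whether the code is executed:

  - Static testing - the code is not executed. Requirements, documentation, and source code are reviewed and analyzed.

  - Dynamic testing - the code is executed and the behavior of the running program is checked.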

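- Classification by access to the code and architecture:

  - White box testing

  - Gray box testing - a combination of the White Box and Black Box approaches.

  - Black box testing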

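- Classification by level of detail:

  - Unit (component) testing - checks individual units of code (functions, subprograms, subroutines, classes) in isolation.

  - Integration testing - checks the interaction between units or components.

  - System testing - checks the system as a whole.

  - Acceptance testing - checks that the system is ready to be accepted by the customer or end users.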

- Classification by importance of the functions under test:

  - Smoke test

  - Critical path test

  - Extended test

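- Classification by purpose:

  - Functional testing - checks what the system does, that is, whether its functions behave as required.

  - Non-functional testing - checks how the system works: characteristics such as performance, usability, and security.

  - Performance testing - evaluates how fast and how stably the system responds under a given workload.

  - Load testing - evaluates the behavior of the system under the expected load.

  - Scalability testing - evaluates how well the system copes as the load or the amount of resources grows.

  - Volume testing - evaluates the behavior of the system as the amount of processed or stored data grows.

  - Stress testing - evaluates the behavior of the system at or beyond the limits of the expected load, or with reduced resources.

  - Installation testing - checks that the software installs, updates, and uninstalls correctly.

  - GUI/UI testing - checks the graphical user interface.

  - Usability testing - checks how easy and convenient the product is to use.

  - Localization testing - checks the adaptation of the product to a particular language and region.

  - Security testing - checks how well the system protects data and resists unauthorized access.

  - Reliability testing - checks that the system performs its functions without failure under stated conditions for a stated period of time.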

- Regression testing - testing of previously tested functionality after making changes to the application code, to make sure that these changes did not introduce errors in areas that have not been changed.

- Re-testing / confirmation testing - testing during which test scripts that detected errors during the last run are executed to confirm the success of fixing these errors.
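The difference between re-testing and regression testing is easiest to see on a single fix. In the sketch below, `apply_discount` is a hypothetical function under test, and the earlier defect (a 100% discount raising an error) is an assumed scenario made up for illustration.

```python
def apply_discount(price: float, percent: float) -> float:
    """Return the price reduced by the given percentage.

    Assumed earlier defect: percent == 100 raised an error instead of returning 0.
    """
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (100 - percent) / 100, 2)


def retest_fixed_defect() -> None:
    """Re-testing (confirmation): repeat the exact scenario that failed before the fix."""
    assert apply_discount(50.0, 100) == 0.0


def regression_suite() -> None:
    """Regression testing: behavior that the fix was not supposed to change."""
    assert apply_discount(200.0, 25) == 150.0
    assert apply_discount(99.99, 0) == 99.99
    try:
        apply_discount(10.0, 101)
    except ValueError:
        pass
    else:
        raise AssertionError("expected ValueError for percent > 100")


if __name__ == "__main__":
    retest_fixed_defect()
    regression_suite()
    print("fix confirmed, no regressions found")
```

The confirmation test targets only the reported scenario, while the regression suite guards the surrounding behavior, so both are run after the fix.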

Test design is the stage of software testing at which test cases are designed and created.

Test design techniques:

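- Equivalence partitioning - input data is divided into classes whose members are expected to be processed in the same way, and one representative (or a few) from each class is tested.

- Boundary value testing - checks the values at the boundaries of equivalence classes (ranges).

- Pairwise testing - tests are built so that every pair of parameter values is covered at least once.

- State-transition testing - checks how the system moves between states in response to events.

- Decision table testing - tests are derived from a table that maps combinations of conditions to expected actions.

- Exploratory testing - test design, test execution, and learning about the product happen at the same time.

- Domain analysis testing - test values are chosen by analyzing the ranges (domains) of several interacting input variables together.

- Use case testing - tests are derived from use cases (scenarios of interaction between an actor and the system).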
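Two of the techniques in this list, equivalence partitioning and boundary value testing, can be shown on one small rule. The sketch below assumes a hypothetical validation rule (ages 18 to 65 inclusive are accepted). The function name and the chosen values are illustrative.

```python
def is_valid_age(age: int) -> bool:
    """Hypothetical rule under test: accepted ages are 18..65 inclusive."""
    return 18 <= age <= 65


# Equivalence partitioning: one representative value per class.
EQUIVALENCE_CASES = [
    (10, False),  # class "below the range"
    (40, True),   # class "inside the range"
    (80, False),  # class "above the range"
]

# Boundary value testing: values on and next to each boundary.
BOUNDARY_CASES = [
    (17, False), (18, True), (19, True),
    (64, True), (65, True), (66, False),
]


def run_cases(cases) -> list[int]:
    """Return the inputs whose actual result differs from the expected one."""
    return [value for value, expected in cases if is_valid_age(value) != expected]


if __name__ == "__main__":
    failed = run_cases(EQUIVALENCE_CASES + BOUNDARY_CASES)
    print("failed inputs:", failed)
```

Nine values stand in for every possible integer here: three classes give the broad coverage, and the six boundary values target the places where off-by-one mistakes such as `<` instead of `<=` usually hide.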

White box testing is a software testing method that assumes the tester knows the internal structure, design, and implementation of the system.

According to ISTQB, white box testing is:

- testing based on an analysis of the internal structure of a component or system;

- test design based on the white box technique - a procedure for writing or selecting test cases based on an analysis of the internal structure of a system or component.

Why White Box? For the tester, the program under test is a transparent box whose contents are fully visible.

Gray box testing is a software testing method that combines the White Box and Black Box approaches. That is, the internal structure of the program is only partially known.

Black box testing — also known as specification-based testing or behavior testing — is a testing technique that relies solely on the external interfaces of the system under test.

According to ISTQB, black box testing is:

- testing, both functional and non-functional, which does not involve knowledge of the internal structure of a component or system;

- test design based on the black box technique - a procedure for writing or selecting test cases based on an analysis of the functional or non-functional specification of a component or system without knowing its internal structure.
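The two approaches lead to different test cases for the same code. In the sketch below, `shipping_cost` and its specification are hypothetical. The black-box cases are derived from the specification text alone, while the white-box cases are chosen by reading the implementation so that each branch is exercised.

```python
def shipping_cost(weight_kg: float, express: bool) -> float:
    """Hypothetical function under test.

    Assumed specification: orders up to 1 kg cost 5.0, heavier orders cost 5.0
    plus 2.0 per additional kg, and express delivery doubles the cost.
    """
    if weight_kg <= 0:
        raise ValueError("weight must be positive")
    if weight_kg <= 1:
        cost = 5.0
    else:
        cost = 5.0 + 2.0 * (weight_kg - 1)
    return cost * 2 if express else cost


# Black-box cases: derived only from the specification, without reading the code.
BLACK_BOX_CASES = [
    ((0.5, False), 5.0),
    ((3.0, False), 9.0),
    ((3.0, True), 18.0),
]

# White-box cases: chosen by reading the code so that each cost branch runs.
WHITE_BOX_CASES = [
    ((1.0, False), 5.0),  # `weight_kg <= 1` branch, exactly on the boundary
    ((1.5, False), 6.0),  # `else` branch
    ((1.0, True), 10.0),  # express side of the conditional expression
]


def failures(cases):
    """Return the argument tuples whose actual result differs from the expected one."""
    return [args for args, expected in cases if shipping_cost(*args) != expected]


def rejects_non_positive_weight() -> bool:
    """White-box check for the guard clause at the top of the function."""
    try:
        shipping_cost(0, False)
    except ValueError:
        return True
    return False
```

A gray-box tester would sit in between, for example knowing that a weight threshold exists without seeing how the cost is computed.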

Test Documentation

A test plan is a document that describes the entire scope of testing work: the object under test, the strategy, the schedule, the criteria for starting and ending testing, the equipment and special knowledge required, and a risk assessment with options for resolving the risks.

The test plan should answer the following questions:

- What should be tested?

- What will you test?

- How will you test?

- When will you test?

- What are the criteria for starting testing?

- What are the criteria for ending testing?

The main points of the test plan, as listed in the IEEE 829 standard:

a) Test plan identifier;

b) Introduction;

c) Test items;

d) Features to be tested;

e) Features not to be tested;

f) Approach;

g) Item pass / fail criteria;

h) Suspension criteria and resumption requirements;

i) Test deliverables;

j) Testing tasks;

k) Environmental needs;

l) Responsibilities;

m) Staffing and training needs;

n) Schedule;

o) Risks and contingencies;

p) Approvals.

A checklist is a document that describes what should be tested. The level of detail in a checklist can vary widely.

Most often a checklist contains only actions, without expected results. It is less formalized than a test case.

A test case is an artifact that describes a set of steps, specific conditions and parameters required to test the implementation of a function under test or a part of it.

Test case attributes:

- Preconditions - a list of actions that bring the system into a state suitable for the main check, or a list of conditions whose fulfillment indicates that the system is in such a state.

- Steps - a list of actions that move the system from one state to another in order to obtain a result from which it can be concluded whether the implementation meets the requirements.

- Expected result - the result that should actually be obtained.
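The three attributes carry over directly to automated tests. The sketch below uses Python's standard `unittest` module. The `ShoppingCart` class is a minimal hypothetical system under test, invented only to show where preconditions, steps, and the expected result go.

```python
import unittest


class ShoppingCart:
    """Minimal hypothetical system under test."""

    def __init__(self) -> None:
        self.items: dict[str, int] = {}

    def add(self, name: str, quantity: int = 1) -> None:
        self.items[name] = self.items.get(name, 0) + quantity

    def total_quantity(self) -> int:
        return sum(self.items.values())


class AddItemTestCase(unittest.TestCase):
    def setUp(self) -> None:
        # Preconditions: an empty cart exists.
        self.cart = ShoppingCart()

    def test_adding_same_item_twice_accumulates_quantity(self) -> None:
        # Steps: add the same item twice.
        self.cart.add("book")
        self.cart.add("book", quantity=2)
        # Expected result: a single cart line with a quantity of 3.
        self.assertEqual(self.cart.items, {"book": 3})
        self.assertEqual(self.cart.total_quantity(), 3)


if __name__ == "__main__":
    unittest.main()
```

Keeping the preconditions in `setUp` means every test method starts from the same known state, which is the purpose of the Preconditions attribute in a written test case.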

Summary

Try to understand the definitions rather than memorize them. And if you have a question, you can always ask us in the @qa_chillout Telegram channel.