Despite the digitalization of everything and everyone, at a time when humanity is on the verge of creating a neurointerface, when AI has become commonplace, the classical task of obtaining data from a scan / picture is still relevant.

Good day. My name is Aleksey. I work as a programmer in a company selling equipment. I had my own best practices for recognizing and loading data into an accounting program, and it was just the managers who manually entered dozens of pages of pdf documents that could not be easily transferred to EDF. I invited them to try my solution.

Initially, ABBYY Cloud was used for recognition, but it is not free, and the trial mode is not long enough. I decided to write my API in python, where all the power of the free tesseracta is used. The problem is that tesseract is text recognition, and it does not define a table, it turns out to be a little useful mess. Just the day before I read the article https://vc.ru/ml/139816-povyshenie-kachestva-raspoznavaniya-skanov-dokumentov-s-tablicami-s-pomoshchyu-vychisleniya-koordinat-yacheek, where all table cells are obtained using openCV, each cell is run through tesseract and thus correct data can be obtained. I decided to try this method. About what happened, and there will be a post.

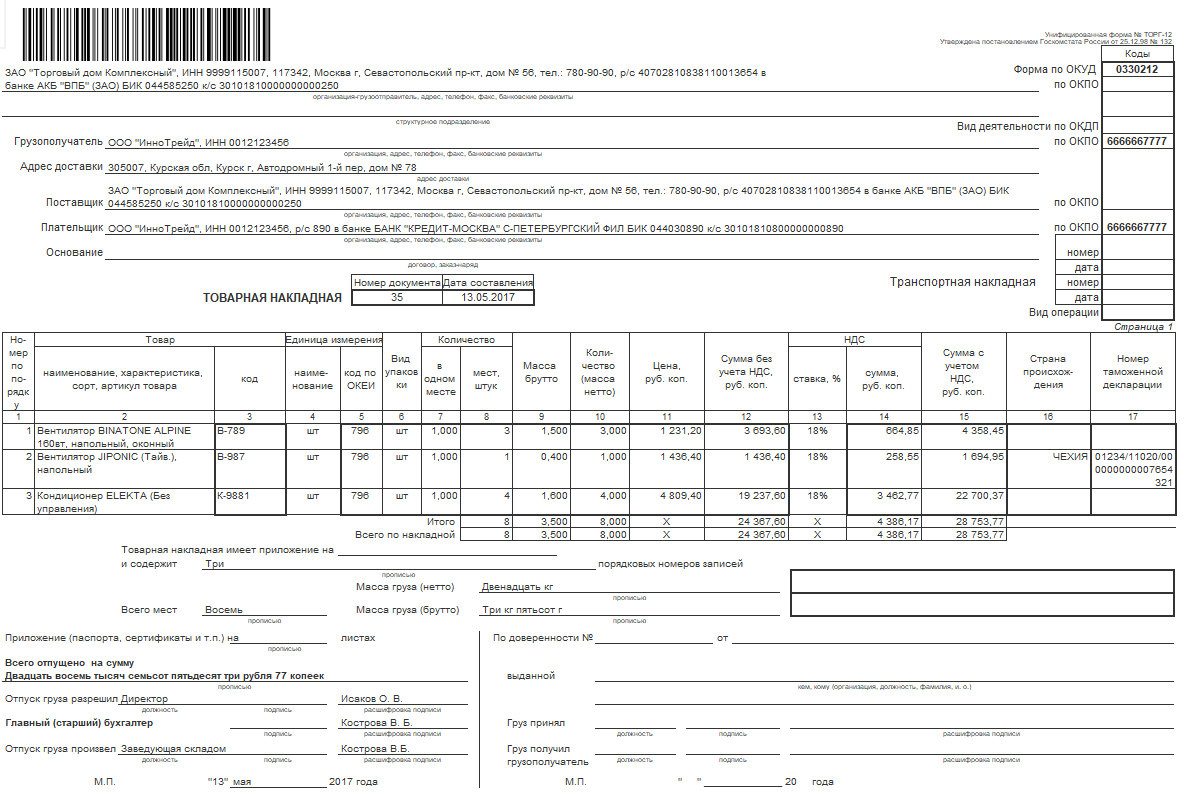

For the test, I took from the demo base 1c TORG-12. This form has a rather complex structure, a lot of tables, a lot of text, a lot of data. Just what you need.

pdf , gostscript . ImageMagick, - . cmd , gostscript .

, openCV , QR-. pyzbar.

, . , . , , . - .

clahe = cv2.createCLAHE(clipLimit=50, tileGridSize=(50, 50))

lab = cv2.cvtColor(img, cv2.COLOR_BGR2LAB)

l, a, b = cv2.split(lab)

l2 = clahe.apply(l)

lab = cv2.merge((l2, a, b))

img2 = cv2.cvtColor(lab, cv2.COLOR_LAB2BGR)

gray = cv2.cvtColor(img2, cv2.COLOR_BGR2GRAY)

ret, thresh = cv2.threshold(gray, 75, 255, cv2.THRESH_BINARY_INV )

kernel = np.ones((2, 2), np.uint8)

obr_img = cv2.erode(thresh, kernel, iterations=1)

obr_img = cv2.GaussianBlur(obr_img, (3,3), 0)

, . , . 5 , delta.

contours, hierarchy = cv2.findContours(obr_img, cv2.RETR_TREE, cv2.CHAIN_APPROX_TC89_L1)

coordinates = []

ogr = round(max(img.shape[0], img.shape[1]) * 0.005)

delta = round(ogr/2 +0.5)

ind = 1;

for i in range(0, len(contours)):

l, t, w, h = cv2.boundingRect(contours[i])

if (h > ogr and w > ogr):

#

#

#

#

#

#

#

#

coordinates.append((0, ind, 0, l, t, w, h, ''))

ind = ind + 1

, . sqlite3 coordinates. . , hierarchy, , . .

, . - . , .

, , - . , , . . , , . , , , , , , .

2 :

. , . , . . , , , , . - . . , , . . . . / 2*. . , - , . .

. . 4 . , , "". "" , , . - , 4 , .

. . tesseract , 3 , . , "-". "-", "---00", . .

text1 = pytesseract.image_to_string(image[t1:t2,l1:l2], lang=lang, config='--psm 6')

text2 = pytesseract.image_to_string(image[t1:t2,l1:l2], lang=lang, config='')

text3 = pytesseract.image_to_string(image[t1+round(delta/2):t2-round(delta/2),l1+round(delta/2):l2-round(delta/2)], lang=lang, config='--psm 7')

text1 = text1.replace("\n", " ")

text2 = text2.replace("\n", " ")

text3 = text3.replace("\n", " ")

text1 = re.sub(' *[^ \(\)--\d\w\/\\\.\-,:; ]+ *', ' ', text1)

text2 = re.sub(' *[^ \(\)--\d\w\/\\\.\-,:; ]+ *', ' ', text2)

text3 = re.sub(' *[^ \(\)--\d\w\/\\\.\-,:; ]+ *', ' ', text3)

while text1.find(' ')!=-1:

text1 = text1.replace(' ',' ')

while text2.find(' ') != -1:

text2 = text2.replace(' ', ' ')

while text3.find(' ') != -1:

text3 = text3.replace(' ', ' ')

. , . , . , -, , , . , , . , , , . , , ? , 2 , . . , , , . , ; ; , . .

. . 4 . "". , , . .

, , . , . API JSON, 1 . , . . . 1 pdf 20 , . , Tesserocr Pytesseract, .

https://github.com/Trim891/API. PyCharm "", GitHub, *.py requirements.txt. , , , , , ; " , ", , , - ; , , 2 . .

PS There are a lot of comments in the files, a lot of unnecessary things and, in general, shit-code is a creative mess. It was all for internal use, there was no time to dress up =)