But we found a way out: now you can't commit to the repository without running tests. At least not without being noticed and held to account.

Testing system

The first thing we need is a testing system. We have already described it here. Recall that the same code has to run both on the CI server and locally, so that maintenance stays simple. Ideally, a project should be able to set parameters for the common tests, or better still, extend them with its own. Of course, it didn't come out polished right away.

Stage one: it runs, but it hurts. Python code is straightforward, but utilities such as CppCheck, Bloaty, optipng, and our own internal workarounds and reinvented wheels are not. To run them, we need executables for every platform our colleagues work on (macOS, Windows, and Linux). At this stage, all the necessary binaries lived in the repository, and the test system settings held the relative path to the binaries folder.

<CppCheck bin_folder="utils/cppcheck">...</CppCheck>

This poses several problems:

- on the project side, the repository has to store extra files, because every developer's machine needs them. Naturally, this makes the repository larger.

- when something goes wrong, it is hard to tell which version of a utility the project has, or whether the folder has the required structure.

- where do the binaries come from? Build them yourself? Download them from the Internet?

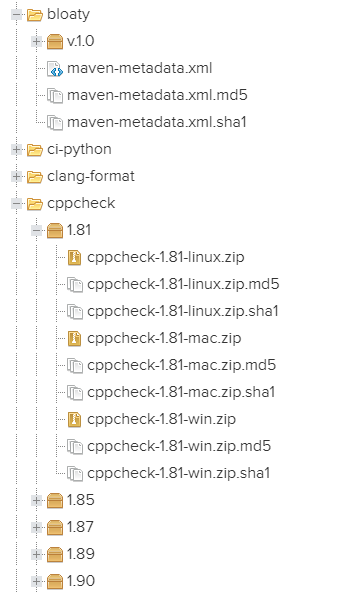

Stage two: putting the utilities in order. What if we took all the necessary utilities out and collected them in a single repository? The idea is that the server holds prebuilt utilities for all the required platforms, and they are versioned too. We were already using Sonatype Nexus, so we went to the neighboring department and agreed on hosting the files. The result is this structure:

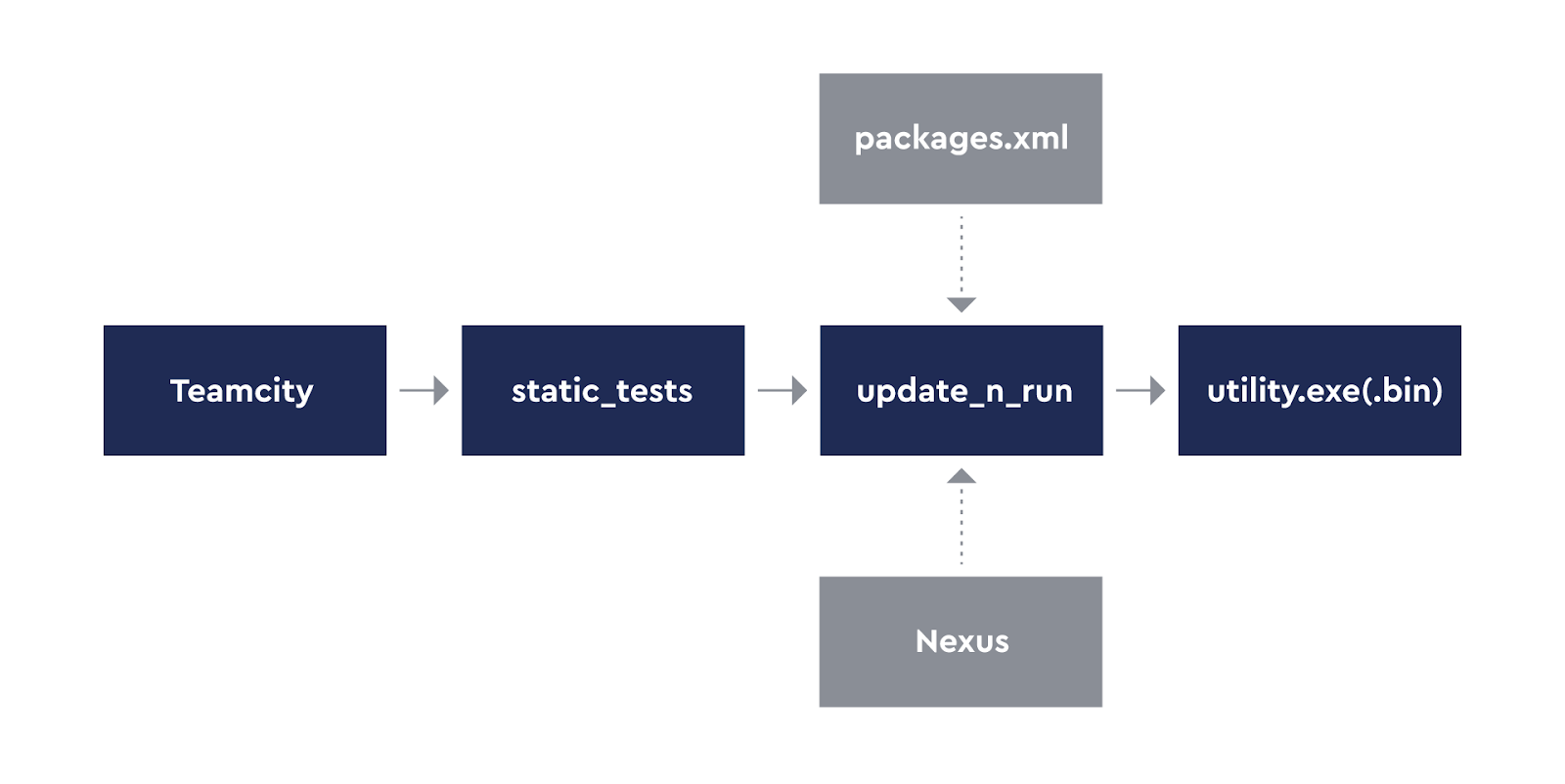

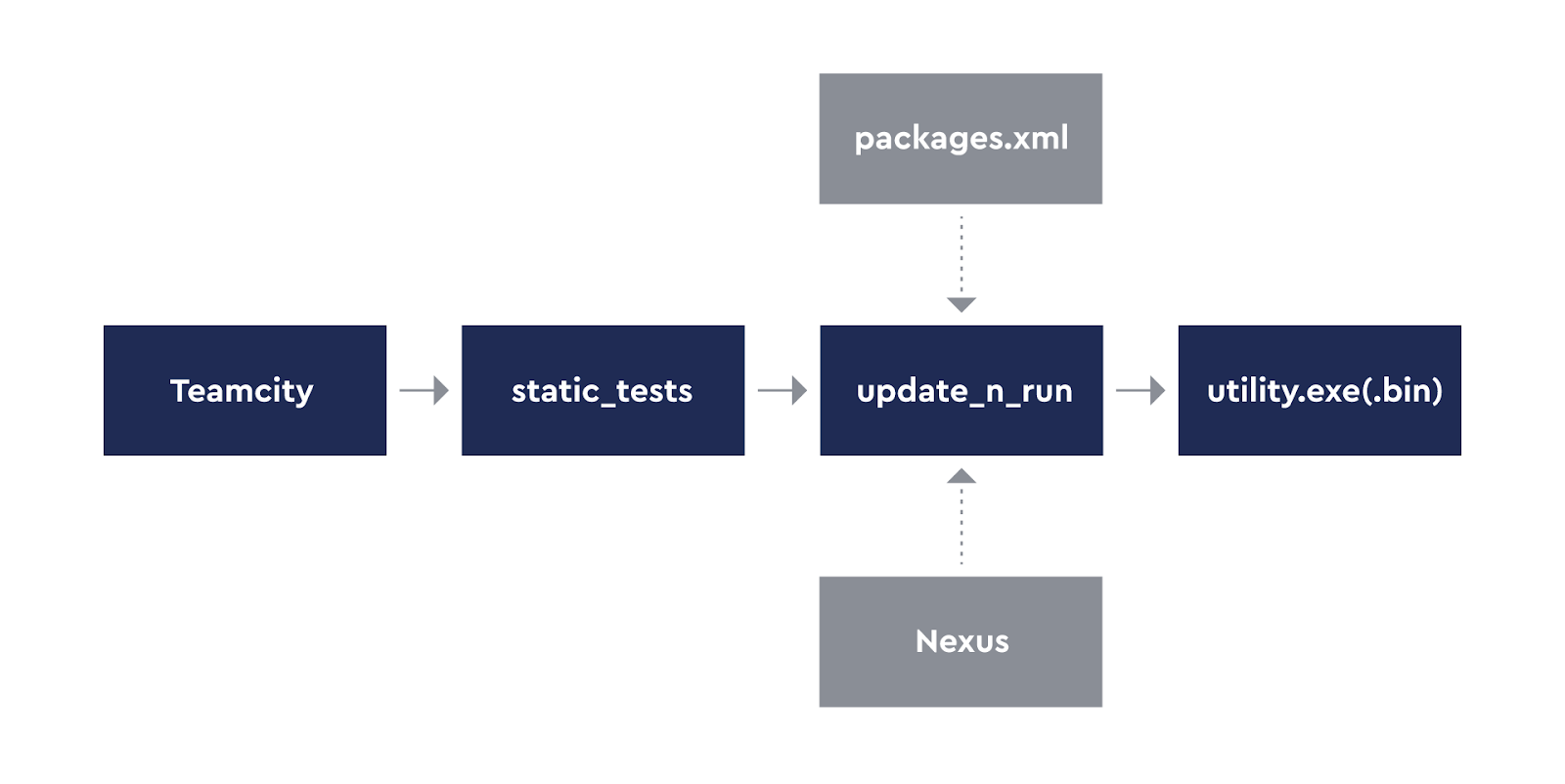

To launch them, we need a script that knows the secret address of the binaries, can download them, and can run the right one for the current platform with the given parameters.

With the implementation details omitted:

```python
from __future__ import annotations  # ToolInfo is only used in annotations

import os
import platform
import shutil
import subprocess
import xml.etree.ElementTree as etree

# ToolInfo and g_server_interface are defined elsewhere (omitted here).


def get_tools_info(project_tools_xml, available_tools_xml):
    # Parse the available tools first and fill the dictionary
    root = etree.parse(available_tools_xml).getroot()
    tools = {}
    # Parse the xml and find the currently installed version
    ...
    return tools


def update_tool(tool_info: ToolInfo):
    # Nothing to do if the required version is already installed
    if tool_info.current_version == tool_info.needed_version:
        return
    if tool_info.needed_version not in tool_info.versions:
        raise RuntimeError(f'Tool "{tool_info.tool_id}" has no version "{tool_info.needed_version}"')
    # Remove the old version before downloading the required one
    if os.path.isdir(tool_info.output_folder):
        shutil.rmtree(tool_info.output_folder)
    g_server_interface.download(tool_id=tool_info.tool_id,
                                version=tool_info.needed_version,
                                output_folder=tool_info.output_folder)


def run_tool(tool_info: ToolInfo, tool_args):
    # "windows", "darwin" or "linux": the same tags as in the server-side xml
    system_name = platform.system().lower()
    tool_bin = tool_info.exe_infos[system_name].executable
    full_path = os.path.join(tool_info.output_folder, tool_bin)
    command = [full_path] + tool_args
    try:
        print(f'Run tool: "{tool_info.tool_id}" with commands: "{" ".join(tool_args)}"')
        output = subprocess.check_output(command)
        print(output.decode(errors="replace"))
    except Exception as e:
        print(f'Fail with: {e}')
        return 1
    return 0


def run(project_tools_xml, available_tools_xml, tool_id, tool_args):
    tools = get_tools_info(project_tools_xml=project_tools_xml,
                           available_tools_xml=available_tools_xml)
    tool_info = tools[tool_id]
    update_tool(tool_info)
    return run_tool(tool_info, tool_args)
```

On the server, we added a file describing the utilities. Its address never changes, so the first thing the script does is fetch it and see what is in stock. Leaving out the details, it lists the package names and, for each platform, the path to the executable inside the package.

XML on the server

```xml
<?xml version='1.0' encoding='utf-8'?>
<Tools>
    <CppCheck>
        <windows executable="cppcheck.exe" />
        <darwin executable="cppcheck" />
        <linux executable="cppcheck" />
    </CppCheck>
</Tools>
```
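Here is a minimal sketch of how a runner might read this file and pick the executable for the current machine. It assumes only the per-platform executable path matters. The real script also tracks versions, and `parse_available_tools` and `executable_for_current_platform` are hypothetical names.

```python
import platform
import xml.etree.ElementTree as ET


def parse_available_tools(xml_text: str) -> dict:
    """Map each tool to {platform_tag: executable}."""
    root = ET.fromstring(xml_text)
    tools = {}
    for tool in root:  # every child of <Tools> is one tool, e.g. <CppCheck>
        tools[tool.tag] = {exe.tag: exe.get("executable") for exe in tool}
    return tools


def executable_for_current_platform(tools: dict, tool_id: str) -> str:
    # platform.system() returns "Windows", "Darwin" or "Linux";
    # lower-cased, it matches the tags used in the XML.
    return tools[tool_id][platform.system().lower()]
```

Keying the file by `platform.system().lower()` means adding a platform only requires a new tag in the XML, with no change to the script.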

The project, in turn, gets a file describing what it needs.

Project XML


```xml
<?xml version='1.0' encoding='utf-8'?>
<Tools>
    <CppCheck version="1.89" />
</Tools>
```
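The following sketch reads the project file and checks each requested version against what the server offers. The server-side file above does not list versions, so the shape of `available_versions` is an assumption. `parse_project_tools` and `check_versions` are hypothetical helpers.

```python
import xml.etree.ElementTree as ET


def parse_project_tools(xml_text: str) -> dict:
    """Return {tool_id: required_version}, e.g. {"CppCheck": "1.89"}."""
    root = ET.fromstring(xml_text)
    return {tool.tag: tool.get("version") for tool in root}


def check_versions(required: dict, available_versions: dict) -> list:
    """Return human-readable problems; an empty list means everything is available."""
    problems = []
    for tool_id, version in required.items():
        versions = available_versions.get(tool_id)
        if versions is None:
            problems.append(f'Tool "{tool_id}" is not available on the server')
        elif version not in versions:
            problems.append(f'Tool "{tool_id}" has no version "{version}"')
    return problems
```

Collecting all problems instead of failing on the first one means a single run reports every missing tool at once.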

For example, this command runs CppCheck with the --version argument:

```
python -m utility_runner --available-source D:\Playrix\![habr]\gd_hooks\available_source.xml --project-tools D:\Playrix\![habr]\gd_hooks\project\project_tools.xml --run-tool CppCheck -- --version
```
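The `--` in this command separates the runner's own options from the arguments forwarded to the tool. Here is a sketch of how such a command line could be parsed. It assumes the three options shown are all the runner takes, and `parse_cli` is a hypothetical name. The argv is split manually before calling `argparse`, so nothing after `--` is ever interpreted as a runner option.

```python
import argparse


def parse_cli(argv):
    """Options before the first "--" belong to the runner; the rest go to the tool."""
    if "--" in argv:
        split = argv.index("--")
        own_args, tool_args = argv[:split], argv[split + 1:]
    else:
        own_args, tool_args = argv, []

    parser = argparse.ArgumentParser(prog="utility_runner")
    parser.add_argument("--available-source", required=True)
    parser.add_argument("--project-tools", required=True)
    parser.add_argument("--run-tool", required=True)
    args = parser.parse_args(own_args)
    args.tool_args = tool_args  # forwarded to the tool untouched
    return args
```

The parsed values map directly onto the `run(project_tools_xml, available_tools_xml, tool_id, tool_args)` function shown earlier.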


Git hooks

Hooks are scripts (bash scripts, in our case) that git runs on certain events, for example when committing or pushing.

We use these:

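- pre-commit runs before the commit is created. If it exits with a non-zero code, the commit is aborted.

- prepare-commit-msg runs after git prepares the default commit message, before the editor opens.

- commit-msg receives the path to the file with the commit message. It can check or edit the message, and a non-zero exit aborts the commit.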

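Hooks live in the .git/hooks folder, which git does not track, so they have to be installed on every developer's machine, whether it runs Windows or Mac. On Windows, Git for Windows runs hooks through the bash that ships with it (git-bash).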

The hooks folder is not always at .git/hooks, so instead of hard-coding the path, we ask git:

git rev-parse

```bash
git rev-parse --git-path hooks
```

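In a regular repository this prints .git/hooks. In a worktree, .git is a file rather than a folder, and the command points to the hooks folder of the main repository, which all worktrees share. In a submodule, it points to the submodule's own folder inside the parent repository's .git/modules.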
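Here is a minimal installer sketch built on that command. It assumes hooks are installed by writing a script file into the folder git reports. `hooks_dir` and `install_hook` are hypothetical names, not the authors' installer.

```python
import os
import stat
import subprocess


def hooks_dir(repo_dir: str) -> str:
    """Ask git where hooks live instead of assuming <repo>/.git/hooks."""
    out = subprocess.check_output(
        ["git", "rev-parse", "--git-path", "hooks"], cwd=repo_dir, text=True
    ).strip()
    # The path may be relative to the directory git ran in (e.g. ".git/hooks");
    # os.path.join keeps it unchanged if git printed an absolute path.
    return os.path.abspath(os.path.join(repo_dir, out))


def install_hook(repo_dir: str, name: str, script: str) -> str:
    """Write a hook script (e.g. name="pre-commit") and make it executable."""
    target_dir = hooks_dir(repo_dir)
    os.makedirs(target_dir, exist_ok=True)
    path = os.path.join(target_dir, name)
    with open(path, "w", newline="\n") as f:  # LF endings so bash accepts the script
        f.write(script)
    mode = os.stat(path).st_mode
    os.chmod(path, mode | stat.S_IXUSR | stat.S_IXGRP | stat.S_IXOTH)
    return path
```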


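The tests run in pre-commit, but the result has to end up in the commit message, which pre-commit cannot change. So the hooks pass the result through a file:

- pre-commit runs the checks and saves the result to a temporary file, pre-commit-tmp;

- commit-msg reads the pre-commit result from pre-commit-tmp.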
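Here is a sketch of this handoff, combined with the [+]/[-] marker described later. The "passed"/"failed" file contents, the helper names, and skipping the marker when no result exists are all assumptions. Git runs hooks from the root of the working tree, so the relative pre-commit-tmp path resolves there. Lines starting with "#" are treated as git comments, assuming the default comment character.

```python
import os
import sys

RESULT_FILE = "pre-commit-tmp"  # hypothetical: relative to the repository root


def save_result(passed: bool, result_path: str = RESULT_FILE) -> None:
    """pre-commit side: record whether the checks passed."""
    with open(result_path, "w") as f:
        f.write("passed" if passed else "failed")


def add_marker(message: str, marker: str) -> str:
    """Append the marker to the last non-empty, non-comment line."""
    lines = message.split("\n")
    for i in range(len(lines) - 1, -1, -1):
        # Comment lines are stripped by git after the hook, so skip them.
        if lines[i].strip() and not lines[i].startswith("#"):
            lines[i] = f"{lines[i]} {marker}"
            return "\n".join(lines)
    return message  # empty message: let git reject it


def mark_commit_message(msg_path: str, result_path: str = RESULT_FILE) -> None:
    """commit-msg side: read the pre-commit result and append [+] or [-]."""
    if not os.path.exists(result_path):
        return  # the checks never ran: leave the message unmarked
    with open(result_path) as f:
        passed = f.read().strip() == "passed"
    os.remove(result_path)  # the result belongs to this commit only
    with open(msg_path) as f:
        message = f.read()
    with open(msg_path, "w") as f:
        f.write(add_marker(message, "[+]" if passed else "[-]"))


if __name__ == "__main__":
    # git calls commit-msg with one argument: the path to the message file
    mark_commit_message(sys.argv[1])
```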


But how do we launch all this?

At first, we wrote a multi-page guide on which croissants are tastier and which Python to install. But remember the game designers and the scrambled eggs? Something always got burnt: either Python of the wrong bitness, or 2.7 instead of 3.7. Multiply all that by the two platforms our users work on: Windows and Mac. (Our Linux users are either gurus who set everything up themselves, quietly tapping away to the sound of a tambourine, or the problem passed them by.)

We solved the issue radically: we built Python of the required version and bitness ourselves. To the question "how do we install it and where do we store it?", the answer was: Nexus! The only catch is that we don't have Python yet to run the Python script we wrote for running utilities from Nexus.

This is where bash comes in! It's not that scary, and even pleasant once you get used to it. And it works everywhere: on Unix it's already there, and on Windows it comes with git-bash (our only requirement for the local system). The installation algorithm is very simple:

- Download the prebuilt Python archive for the required platform. The easiest way is curl, which is available almost everywhere (even on Windows).

Download python

```bash
mkdir -p "$PYTHON_PRIMARY_DIR"
curl "$PYTHON_NEXUS_URL" --output "$PYTHON_PRIMARY_DIR/ci_python.zip" --insecure || exit 1
```

- Unzip it and create a virtual environment linked to the downloaded binary. Don't repeat our mistake: remember to pin the virtualenv version.

```bash
echo "Unzip python..."
unzip "$PYTHON_PRIMARY_DIR/ci_python.zip" -d "$PYTHON_PRIMARY_DIR" > "unzip.log"
rm -f "$PYTHON_PRIMARY_DIR/ci_python.zip"
echo "Create virtual environment..."
"$PYTHON_EXECUTABLE" -m pip install virtualenv==16.7.9 --disable-pip-version-check --no-warn-script-location
```

- If you need any libraries from lib/*, copy them yourself: virtualenv doesn't take care of that.

- Install all the required packages. We agreed with the projects that each one has a ci/required.txt file listing all its dependencies in pip's requirements format.

Installing dependencies

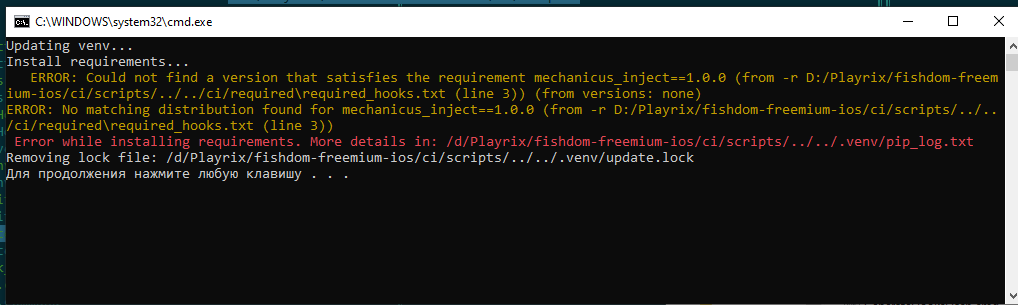

```bash
OUT_FILE="$VENV_DIR/pip_log.txt"
"$PYTHON_VENV_EXECUTABLE" -m pip install -r "$REQUIRED_FILE" >> "$OUT_FILE" 2>&1
result=$?
if [[ "$result" != "0" ]]; then
    var2=$(grep ERROR "$OUT_FILE")
    echo "$(tput setaf 3)" "$var2" "$(tput sgr 0)"
    echo -e "\e[1;31m" "Error while installing requirements. More details in: $OUT_FILE" "\e[0m"
    result=$ERR_PIP
fi
exit $result
```

required.txt example

```
pywin32==225;sys_platform == "win32"
cryptography==3.0.0
google-api-python-client==1.7.11
```

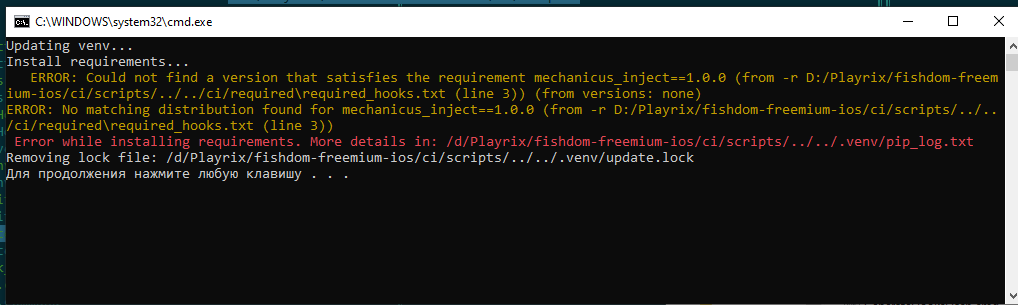

When users report a problem, they usually attach a screenshot of the console with the errors. To make our lives easier, we not only store the output of the last pip install run, but also added some color: errors from the log are printed in color straight to the console. Long live grep!

How it looks

At first glance, a virtual environment may seem unnecessary. After all, we have already downloaded a separate binary into a separate directory, and even if our system is deployed to several folders, each of them has its own binaries. But! virtualenv has an activate script that lets you call python as if it were installed globally. This isolates script execution and makes launching easier.

Imagine that you need to run a batch file that runs a Python script, which in turn runs another Python script. This is not a made-up example: this is how post-build events are executed when building an application. Without virtualenv, you would have to compute the necessary paths on the fly everywhere, but with activate we simply call python everywhere. More precisely, vpython: our own wrapper that makes it easier to run Python both from the console and from scripts. The wrapper checks whether we are already in an activated environment and whether we are running on TeamCity (where our virtual environment is already in place), and prepares the environment along the way.

vpython.cmd

```bat
set CUR_DIR=%~dp0
set "REPO_DIR=%CUR_DIR%\."

rem VIRTUAL_ENV is the variable from activate.bat and is set automatically
rem TEAMCITY - if we are running from agent we need no virtualenv activation
if "%VIRTUAL_ENV%"=="" IF "%TEAMCITY%"=="" (
    set RETURN=if_state
    goto prepare
    :if_state
    if %ERRORLEVEL% neq 0 (
        echo [31m Error while prepare environment. Run ci\PrepareAll.cmd via command line [0m
        exit /b 1
    )
    call "%REPO_DIR%\.venv\Scripts\activate.bat"
    rem special variable to check if venv activated from this script
    set VENV_FROM_CURRENT=true
)

rem Run simple python and forward args to it
python %*
SET result=%errorlevel%

if "%VENV_FROM_CURRENT%"=="true" (
    call "%REPO_DIR%\.venv\Scripts\deactivate.bat"
    set CI_VENV_RUN=
    set VENV_FROM_CURRENT=
)

:eof
exit /b %result%

:prepare
setlocal
set RUN_FROM_SCRIPT=true
call "%REPO_DIR%\ci\PrepareEnvironment.cmd" > NUL
endlocal
goto %RETURN%
```
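For comparison, here is the same decision logic as a Python sketch. It does not reproduce the cmd script exactly. Instead of calling activate.bat and deactivate.bat in the current console, it builds an activated-like environment and passes it only to the child process, so there is nothing to deactivate afterwards. That environment sets VIRTUAL_ENV, puts the venv's scripts folder first on PATH, and drops PYTHONHOME, which is roughly what activate does. The sketch also skips the PrepareEnvironment.cmd step. `build_env` and `vpython` are hypothetical names.

```python
import os
import shutil
import subprocess
import sys


def build_env(env, repo_dir, windows=(os.name == "nt")):
    """Return the environment python should run in, mirroring the checks in vpython.cmd."""
    # Already inside an activated venv, or on a TeamCity agent: use the environment as is.
    if env.get("VIRTUAL_ENV") or env.get("TEAMCITY"):
        return dict(env)
    # Otherwise do roughly what activate does: put the venv's scripts folder first on PATH.
    venv_dir = os.path.join(repo_dir, ".venv")
    scripts_dir = os.path.join(venv_dir, "Scripts" if windows else "bin")
    new_env = dict(env)
    new_env["VIRTUAL_ENV"] = venv_dir
    new_env["PATH"] = scripts_dir + os.pathsep + env.get("PATH", "")
    new_env.pop("PYTHONHOME", None)
    return new_env


def vpython(args, repo_dir):
    env = build_env(os.environ, repo_dir)
    # Resolve "python" with the new PATH explicitly: subprocess does not
    # necessarily use the PATH from env for the lookup (it doesn't on Windows).
    python = shutil.which("python", path=env.get("PATH")) or "python"
    return subprocess.call([python, *args], env=env)


if __name__ == "__main__":
    sys.exit(vpython(sys.argv[1:], os.path.dirname(os.path.abspath(__file__))))
```

Because child processes inherit `env`, nested scripts that call plain `python` also find the venv interpreter first. This is the property the batch-file chain above relies on.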

Tanakan, or don't forget to run the tests

We have solved the problem of forgetting to run tests, but even a single script can be overlooked. So we made a pill for forgetfulness. It has two parts.

When our system runs, it modifies the commit message and marks it as "approved". For the label, we didn't overthink it: we append [+] or [-] to the end of the commit message.

A script on the server parses commit messages, and if it does not find the coveted characters, it creates a task for the author. This is the simplest and most elegant solution. Non-printable characters would not be obvious. Server-side hooks require a different GitHub plan, and nobody will buy premium for a single feature. Going through the commit history, looking for the marker, and creating a task is transparent and fairly cheap.
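The server-side check can be sketched as a filter over the commit history. It assumes the commits have already been fetched (for example from git log or the GitHub API) and that task creation is handled elsewhere. `Commit` and `unmarked_commits` are hypothetical names.

```python
import re
from typing import Iterable, List, NamedTuple

# [+] or [-] at the very end of the message (trailing whitespace allowed)
MARKER = re.compile(r"\[[+-]\]\s*$")


class Commit(NamedTuple):
    sha: str
    author: str
    message: str


def unmarked_commits(commits: Iterable[Commit]) -> List[Commit]:
    """Commits without [+] or [-] at the end: the hooks did not run for them."""
    return [c for c in commits if not MARKER.search(c.message)]
```

Each commit returned would become a task assigned to `commit.author`.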

Yes, you can type the marker by hand, but are you sure you won't break the build on the server? And if you do... well, the bald man from Homescapes is already coming for you.

The bottom line

It is hard to track how many errors the hooks have caught, because those errors never reach the server. We only have a subjective impression that there are many more green builds. There is a downside, though: committing now takes quite a long time. In some cases it can take up to 10 minutes, but that's a separate story about optimization.