Disclaimer: my name is Eric Burygin. I have worked as a tester for a long time and I teach students on the "Test Engineer" course, so it may look as if a tester simply wants to hand part of his work over to the developers. In fact, the approach described here has both pros and cons, so the article is, among other things, open to debate. I would be glad to see opinions from both developers and testers in the comments.

If developers write the tests, you can solve several problems at once, for example:

- Noticeably speed up the release cycle.

- Take load off the testing team.

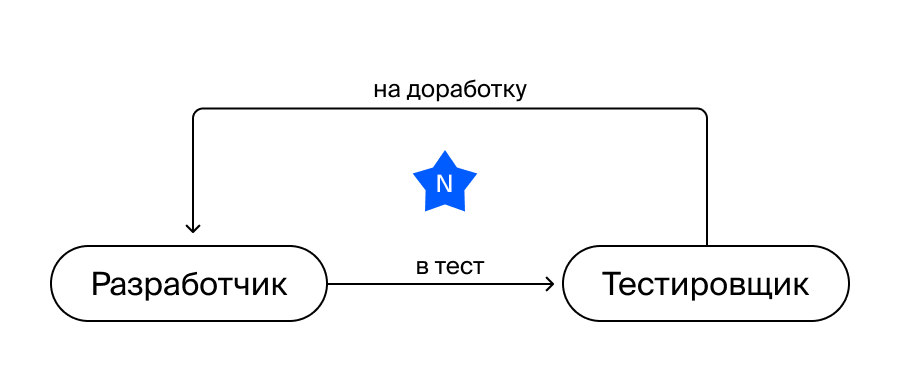

In most teams, the process looks something like this:

1. The developer creates new features and refines existing ones.

2. The tester tests all of this and writes test cases.

3. The automation engineer, living up to the job title, automates everything according to the test cases written in step 2.

Everything seems to be simple.

But there are weaknesses in this paradigm.

Let's say a developer has finished a feature and handed it over for testing. But the feature turns out to be not medium rare but frankly raw. The task gets reopened and needs additional fixes, and there can be anywhere from one to N such iterations, depending on the size of the feature, its complexity, its impact on related processes, and the conscientiousness of the developer. It also depends on how your development processes are set up in general: how carefully pull requests are reviewed, and whether the application is even launched before it is sent for testing.

In short, there are plenty of variables.

Once the task is tested and ready for release, the testers need to write test cases covering all of the functionality. Then comes regression / smoke testing and, finally, the release.

After receiving the written test cases, the automation engineer covers the functionality with tests. There is a fairly high probability that the task will land in an existing queue, so the tests will be written with a delay.

- "We just need more developers"

Alas, that is not a panacea. Rather the opposite: the more developers you have in this scheme, the heavier the load on testing. As a result, either the release cycle gets longer or the testing team itself has to grow.

And this, by the domino principle, increases the load on the automation engineers, who have to process more and more test cases coming to them from testing. The situation is mirrored: either the time needed to cover features with tests grows, or the automation headcount does.

There are usually two testers and one automation engineer for every eight developers. At the same time, automation is not directly involved in the release cycle; rather, it sits alongside it. So the question arises: how do you make the described processes more efficient without losing quality?

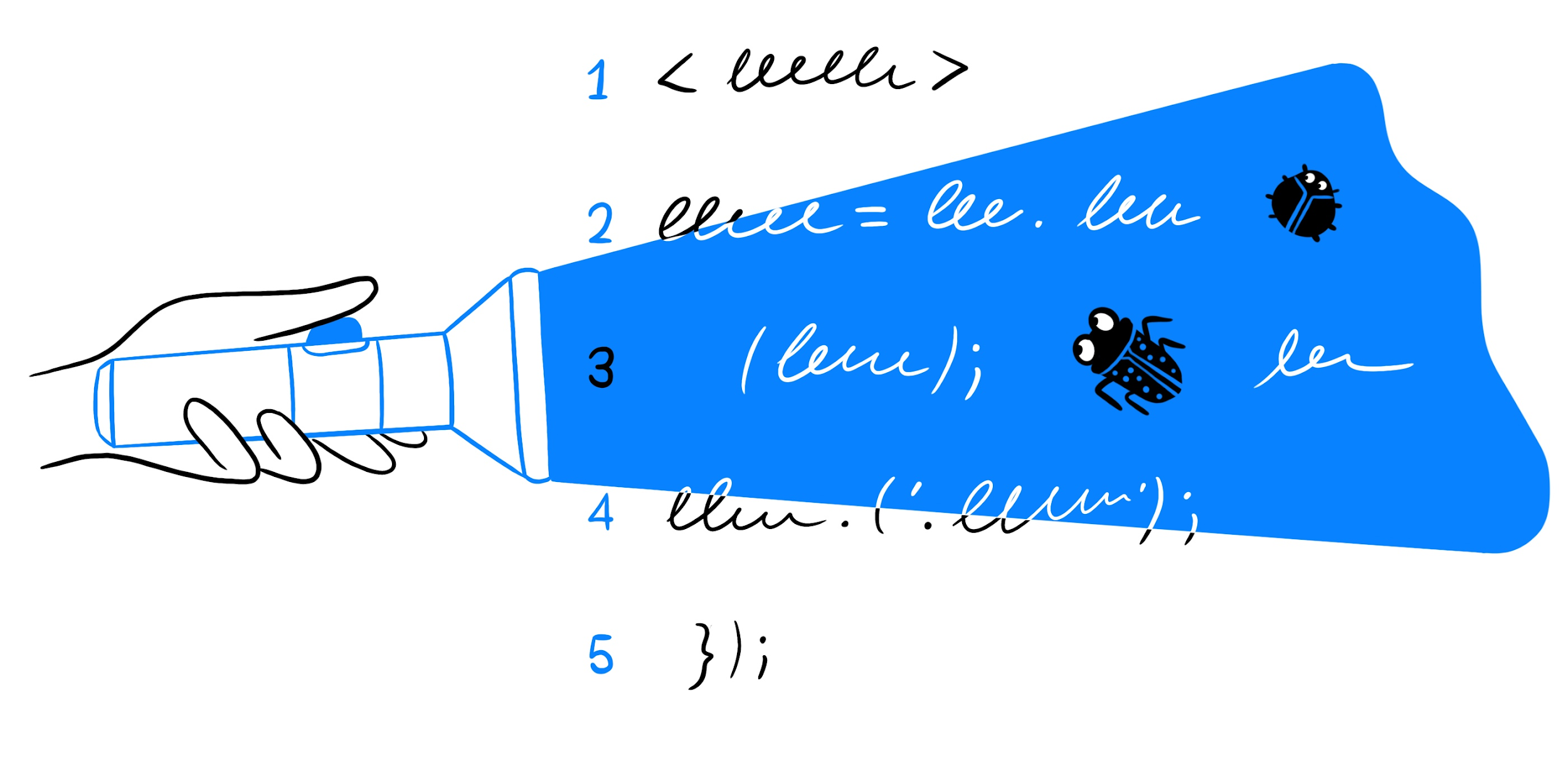

Let's try to move the automation stage from third place to first, to the development stage.

What happens

You immediately get a good set of advantages:

- developers write tests while writing the feature itself, which significantly improves its quality;

- the load on testing decreases: testers now only need to look at the test results and assess whether the task is sufficiently covered by tests;

- manual regression is no longer needed in this scheme.
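As a minimal sketch of what "tests written together with the feature" could look like: the function `apply_discount` and its checks below are hypothetical, invented purely for illustration, and stand in for whatever feature and test framework your team actually uses.

```python
# Hypothetical feature: everything here is an illustration, not a real project API.

def apply_discount(price: float, percent: float) -> float:
    """Return the price after applying a percentage discount."""
    if price < 0:
        raise ValueError("price must not be negative")
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


# Tests written by the same developer, in the same change as the feature.

def test_regular_discount():
    assert apply_discount(200.0, 25) == 150.0


def test_zero_discount_keeps_price():
    assert apply_discount(99.99, 0) == 99.99


def test_invalid_percent_is_rejected():
    try:
        apply_discount(100.0, 120)
    except ValueError:
        return
    raise AssertionError("expected ValueError for percent > 100")


def run_tests() -> dict:
    """Run every test and return {test name: "passed" | "failed"}."""
    tests = [test_regular_discount, test_zero_discount_keeps_price,
             test_invalid_percent_is_rejected]
    results = {}
    for test in tests:
        try:
            test()
            results[test.__name__] = "passed"
        except AssertionError:
            results[test.__name__] = "failed"
    return results


if __name__ == "__main__":
    for name, status in run_tests().items():
        print(f"{name}: {status}")
```

The point of the sketch is only that the checks arrive in the same change as the code they check, so the tester receives the feature together with evidence of what has already been verified.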

What about testers?

Even in the updated paradigm, the tester remains the testing expert: it is he who reviews both the quality and the completeness of the autotest coverage of a particular feature and analyzes complex and unusual problems. But now, thanks to the reduced load, the tester frees up part of his time and can work on processes.
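One way to picture that review step: the tester keeps a list of acceptance criteria for the feature and checks which of them the developer's tests claim to cover. The data shapes and names below (`find_uncovered`, the criteria IDs, the test names) are assumptions made for the example, not part of any real tool.

```python
def find_uncovered(criteria: dict, tests: dict) -> list:
    """Return IDs of acceptance criteria that no test claims to cover.

    criteria: criterion ID -> human-readable description
    tests:    test name -> list of criterion IDs the test covers
    """
    covered = {cid for ids in tests.values() for cid in ids}
    return sorted(cid for cid in criteria if cid not in covered)


# Hypothetical acceptance criteria for a discount feature.
criteria = {
    "AC-1": "A valid discount reduces the price",
    "AC-2": "A zero discount leaves the price unchanged",
    "AC-3": "A discount above 100% is rejected",
    "AC-4": "A negative price is rejected",
}

# Hypothetical mapping declared by the developer alongside the tests.
tests = {
    "test_regular_discount": ["AC-1"],
    "test_zero_discount_keeps_price": ["AC-2"],
    "test_invalid_percent_is_rejected": ["AC-3"],
}

uncovered = find_uncovered(criteria, tests)
for cid in uncovered:
    print(f"Not covered: {cid} - {criteria[cid]}")
```

A check like this only tells you that a criterion has no test at all; whether an existing test actually verifies the criterion well is still a judgment the tester has to make.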

At the same time, you need to understand that manual testing will not go away: there will always be something that is either impossible to automate or not worth automating.

So, on to the new paradigm. Cool? Yes, you could implement it right now. If you can do two things.

- Sell this idea to the developers. Not every developer will want to write tests right away: it can be boring, or he simply does not want to. After all, what do you have testers for?

- Sell this idea to the managers, because, for all its advantages, it increases the development time of each feature.

Here are the disadvantages that may await you.

- Most developers just don't know how to test, because they are developers, not testers. And that's okay. You can either teach them how to test, which is not a trivial task, or simply write test cases for them, which de facto breaks the process itself.

- At the start, automation will take more time, because there is no test code base, no infrastructure, and no established approaches yet: the task is new.

- Testing will need clear reports. But keep in mind: even the clearest report cannot always be read correctly right away.

- Not every task can be covered with tests easily and quickly. In some cases you will spend more time on the tests than on implementing the feature itself.

- It will be difficult to deliver large-scale tasks together with their tests, because that takes a lot of time.

- For those same large and complex tasks, testers will still have to set aside time simply to dig into them, because there is no other way to check the correctness of the tests the developers wrote.
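On the reporting point in the list above, here is a rough sketch of the kind of summary a tester might want to read. The result format and the `summarize` helper are assumptions for illustration; real test runners produce their own report formats.

```python
def summarize(results: dict) -> str:
    """Build a short plain-text report from {test name: (status, message)}.

    status is expected to be "passed" or "failed"; message explains a failure.
    """
    failed = {name: msg for name, (status, msg) in results.items()
              if status == "failed"}
    lines = [f"Total: {len(results)}, passed: {len(results) - len(failed)}, "
             f"failed: {len(failed)}"]
    # List each failure with its message: that is what the reader has to act on.
    for name in sorted(failed):
        lines.append(f"FAILED {name}: {failed[name]}")
    return "\n".join(lines)


# Hypothetical results of one test run.
results = {
    "test_regular_discount": ("passed", ""),
    "test_zero_discount_keeps_price": ("passed", ""),
    "test_negative_price_is_rejected": ("failed", "expected ValueError, got -5.0"),
}

report = summarize(results)
print(report)
```

Putting the totals on the first line and the failures right after them is one possible design choice: the reader sees at a glance whether anything needs attention before reading the details.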

What to do?

Basically, each problem has a solution.

- Developers don't know how to test. → You can consult them in the early stages to help them understand.

The approach, with all its pros and cons, has a right to exist. And if you also set up the processes correctly, it will help you speed up the release cycle without inflating the headcount (: