Neuron

In this article, you will learn the basics of how an artificial neuron works. In the following articles, we will cover the basics of neural networks and write a simple neural network in Python.

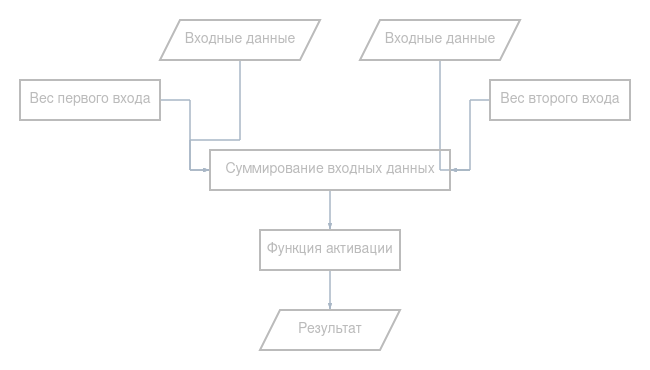

A neural network is made up of interconnected neurons, so the neuron is its basic building block. A neuron performs only two actions: first, it multiplies each input by its weight, sums the products and adds the bias; second, it passes the result through an activation function.

Let's look more closely at the input data, weights, biases and the activation function.

Input data is the data a neuron receives from other neurons or directly from the user.

A weight is assigned to each input of the neuron; initially the weights are random numbers. During training, the values of the weights and biases change. The input data fed to the neuron is multiplied by the weights.
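As a small illustration, the sketch below draws random initial weights for a hypothetical three-input neuron and checks that multiplying each input by its weight and summing the products gives the same number as `np.dot`. The input values and the seed are arbitrary assumptions made only for this example.

```python
import numpy as np

np.random.seed(0)  # arbitrary seed so the sketch is reproducible

inputs = np.array([0.5, -1.0, 2.0])            # hypothetical input data
weights = np.random.normal(size=inputs.size)   # random initial weights, one per input

# Multiply each input by its weight and add up the products...
manual_sum = sum(i * w for i, w in zip(inputs, weights))
# ...which is the same number np.dot computes in a single call
dot_sum = np.dot(weights, inputs)

print(manual_sum, dot_sum)
```

The two values may differ in the last few floating-point digits because the additions can happen in a different order, which is why comparing them with a tolerance is safer than exact equality.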

Each neuron also has a bias; like the weights, the initial biases are random numbers. The bias shifts the weighted sum up or down, which makes the neural network easier and faster to train.
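To see what the bias does, the sketch below keeps hypothetical inputs and weights fixed and changes only the bias `b`. With these values the weighted sum is exactly 0, so the value passed to the sigmoid equals `b`, and a larger bias moves the output up.

```python
import numpy as np

def sig(x):
    # sigmoid activation: 1 / (1 + e^(-x))
    return 1 / (1 + np.exp(-x))

inputs = np.array([1.0, 2.0])      # hypothetical input data
weights = np.array([0.5, -0.25])   # hypothetical weights: 0.5*1.0 + (-0.25)*2.0 = 0.0

outputs = {}
for b in (-2.0, 0.0, 2.0):
    x = np.dot(weights, inputs) + b   # weighted sum plus bias
    outputs[b] = sig(x)
    print(f"b = {b:+.1f}  ->  output = {outputs[b]:.3f}")
```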

The activation function we will use in our neural network is called the sigmoid. Its formula is:

$$f(x) = \frac{1}{1 + e^{-x}}$$

This function maps any number from -∞ to +∞ into the range (0, 1).
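A quick way to see this behaviour is to evaluate the sigmoid at a few points. The sample points below are arbitrary: large negative inputs give values close to 0, large positive inputs give values close to 1, and 0 maps to exactly 0.5.

```python
import numpy as np

def sig(x):
    # sigmoid: 1 / (1 + e^(-x))
    return 1 / (1 + np.exp(-x))

xs = np.array([-10.0, -1.0, 0.0, 1.0, 10.0])   # arbitrary sample points
ys = sig(xs)   # np.exp works element-wise, so sig accepts whole arrays

for x, y in zip(xs, ys):
    print(f"sig({x:+.0f}) = {y:.5f}")
```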

Described mathematically, the work of a neuron comes down to 2 formulas:

$$x = \sum_{j} i_j w_j + b$$

$$f(x) = \frac{1}{1 + e^{-x}}$$

Notation:

f(x) - activation function

x - the sum of the products of the inputs and their weights, plus the bias

i - array of input data

w - array of weights

b - bias

j - index of an input and of its corresponding weight
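As a worked example with hypothetical numbers, take a neuron with two inputs $i = (2, 3)$, weights $w = (0.4, -0.1)$ and bias $b = 0.2$, and plug them into $x = \sum_j i_j w_j + b$ and $f(x) = \frac{1}{1 + e^{-x}}$:

$$
\begin{aligned}
x &= i_1 w_1 + i_2 w_2 + b = 2 \cdot 0.4 + 3 \cdot (-0.1) + 0.2 = 0.7 \\
f(x) &= \frac{1}{1 + e^{-0.7}} \approx 0.668
\end{aligned}
$$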

Now let's write a neuron in Python.

We will need the numpy library. To install it on Linux or Windows, run:

```shell
pip install numpy
```

First, import numpy:

```python
import numpy as np
```

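Create a Neuron class. Its constructor initializes the weights and the bias with random numbers:

```python
class Neuron:
    def __init__(self, number_of_weights=1):
        # one random weight per input, drawn from a standard normal distribution
        self.w = np.random.normal(size=number_of_weights)
        # a single random bias for the neuron
        self.b = np.random.normal()
```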
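A quick check of what the constructor creates: for a neuron with three inputs, `w` holds three random weights and `b` is a single random number. The class is repeated here so the snippet runs on its own, and the seed is an arbitrary assumption added only to make the random values reproducible.

```python
import numpy as np

class Neuron:
    def __init__(self, number_of_weights=1):
        self.w = np.random.normal(size=number_of_weights)  # one weight per input
        self.b = np.random.normal()                        # one bias per neuron

np.random.seed(42)      # arbitrary seed, only for reproducibility
neuron = Neuron(3)
print(neuron.w.shape)   # (3,)
print(neuron.w, neuron.b)
```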

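Next, add the activate method to the class. It multiplies the inputs by the weights, sums the products, adds the bias and passes the result to the activation function:

```python
    def activate(self, inputs):
        # weighted sum of the inputs plus the bias
        x = np.dot(self.w, inputs) + self.b
        # apply the sigmoid activation function
        return sig(x)
```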

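The activate method calls the sig function, which we have not defined yet. Let's write it:

```python
def sig(x):
    # sigmoid: maps any real number into the range (0, 1)
    return 1 / (1 + np.exp(-x))
```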
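Because `np.exp` is applied element by element, `sig` works on a single number as well as on a whole array. One thing worth knowing: for very negative `x`, `np.exp(-x)` overflows to infinity and NumPy emits a `RuntimeWarning`, although the result still comes out as 0.0. The sketch below shows both cases; wrapping the call in `np.errstate` to silence the warning is a choice made for this example, not something the neuron code requires.

```python
import numpy as np

def sig(x):
    return 1 / (1 + np.exp(-x))

print(sig(0.0))                     # a single number
print(sig(np.array([-1.0, 1.0])))   # an array, element by element

# np.exp(1000.0) overflows to inf, so 1 / (1 + inf) gives 0.0;
# np.errstate suppresses the overflow warning for this call only
with np.errstate(over="ignore"):
    print(sig(-1000.0))
```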

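We will keep the sig function in a separate file, Math.py. Since sig uses numpy, Math.py imports it as well:

```python
import numpy as np
```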

Save the Neuron class in the file Neuron.py, and import the contents of Math.py into Neuron.py:

```python
from Math import *
```

As a result, we have 2 files:

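Neuron.py:

```python
import numpy as np

# brings in the sig function defined in Math.py
from Math import *


class Neuron:
    def __init__(self, number_of_weights=1):
        # random initial weights (one per input) and a random initial bias
        self.w = np.random.normal(size=number_of_weights)
        self.b = np.random.normal()

    def activate(self, inputs):
        # weighted sum of the inputs plus the bias, passed through the sigmoid
        x = np.dot(self.w, inputs) + self.b
        return sig(x)
```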

Math.py:

```python
import numpy as np


def sig(x):
    return 1 / (1 + np.exp(-x))
```
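To try the finished neuron, one could create it with three weights and feed it three inputs. The sketch below repeats `sig` and `Neuron` in a single snippet so it runs without the two files. The input values are hypothetical, and overwriting the weights and bias by hand is an assumption made purely for illustration, so the result can be checked against the formula.

```python
import numpy as np

def sig(x):
    return 1 / (1 + np.exp(-x))

class Neuron:
    def __init__(self, number_of_weights=1):
        self.w = np.random.normal(size=number_of_weights)
        self.b = np.random.normal()

    def activate(self, inputs):
        x = np.dot(self.w, inputs) + self.b
        return sig(x)

inputs = [1.0, 0.5, -1.5]          # hypothetical input data

neuron = Neuron(3)
random_output = neuron.activate(inputs)   # random weights: some value in (0, 1)
print(random_output)

# Set the parameters by hand so the result is predictable:
# x = 1.0*0.2 + 0.5*(-0.4) + (-1.5)*0.1 + 0.3 = 0.15
neuron.w = np.array([0.2, -0.4, 0.1])
neuron.b = 0.3
fixed_output = neuron.activate(inputs)
print(fixed_output)
```

Whatever the random weights are, the sigmoid keeps the output between 0 and 1; with the hand-picked parameters the output is simply the sigmoid of 0.15.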
