23 times

more targeted emails sent by the neural network than by trigger-based mailings

8.5 times

more email marketing revenue under last-click attribution

2 times

fewer unsubscribes

17 times

more opens

Below we will share our experience and tell you:

- why we decided to use the LSTM neural network model to predict the email sending date instead of the gradient boosting algorithm;

- how the LSTM works;

- what data the neural network uses for training;

- what neural network architecture we used and what difficulties we encountered;

- what results were achieved and how they were assessed.

Why we abandoned gradient boosting in favor of LSTM

Email newsletters help inform customers about new products, reactivate churning customers, and show personalized recommendations. The best send date differs for each customer: one shops on weekends, so Saturday is the best day to email them; another has just bought a cat house, so it is worth emailing them as soon as possible with advice on cat food. A neural network helped us determine the best date for sending an email and anticipate the customer's needs.

At first we used standard algorithms. For a whole year, we engineered features from the history of customer actions and trained gradient boosting on them to predict the best date for sending emails. For instance, we:

- calculated how many days pass between one purchase and the next;

- tried classification on these features to predict the probability that an email should be sent on a given day;

- tried to infer users' interests from their place of residence in order to increase the likelihood of opens and clicks.

But this model did not give a stable positive result across all projects: it could not find complex patterns in user behavior and did not bring in enough revenue.

When we were about to abandon both the algorithm and the idea of predicting the send date, we decided to try something exotic and train an LSTM neural network for this task. LSTMs are usually used for text analysis and, less often, for analyzing stock prices in financial markets, but we had not seen them used for marketing. And the LSTM worked.

What is LSTM

LSTM (Long Short-Term Memory) is a recurrent neural network architecture that is widely used in natural language processing.

Let's look at how an LSTM works using machine translation as an example. The characters of the text are fed to the network one at a time, and at the output we want a translation into another language. To translate the text, the network must keep information not only about the current character but also about those that came before it. An ordinary feed-forward network does not remember what it was shown earlier, so it cannot translate a whole word or text. An LSTM, on the other hand, has special memory cells that store useful information, so it produces a result based on everything it has seen and translates the text taking all the characters in the words into account. Over time, the network can clear cells and forget information that is no longer needed.

The same principle turned out to be important for predicting user actions. The neural network took into account the entire history of actions and produced relevant results - for example, it determined the best date for sending an email.

Internal structure of a single LSTM layer

A single LSTM layer is built from addition (+), multiplication (×), sigmoid (σ), and hyperbolic tangent (tanh) operations.
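For reference, these four operations combine as follows in the standard textbook formulation of an LSTM cell. The symbols are not taken from our model: $x_t$ is the input at step $t$, $h_t$ the output, $c_t$ the memory cell, and $W$, $b$ the learned weights and biases.

```latex
\begin{align}
f_t &= \sigma(W_f [h_{t-1}, x_t] + b_f) \\
i_t &= \sigma(W_i [h_{t-1}, x_t] + b_i) \\
\tilde{c}_t &= \tanh(W_c [h_{t-1}, x_t] + b_c) \\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t \\
o_t &= \sigma(W_o [h_{t-1}, x_t] + b_o) \\
h_t &= o_t \odot \tanh(c_t)
\end{align}
```

The forget gate $f_t$ is what lets the network clear memory cells: when it is close to 0, the old cell content $c_{t-1}$ is dropped.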

What data is used by the neural network

To learn to predict the best send date, the neural network analyzes historical data. The input sequence contains the time elapsed between actions and 9 types of tokens:

- buying a cheap product,

- buying a mid-priced product,

- buying an expensive product,

- viewing a cheap product,

- viewing a mid-priced product,

- viewing an expensive product,

- receiving an email,

- opening an email,

- clicking any element inside an email.
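A minimal sketch of how raw events could be mapped to these nine tokens. The price boundaries and the `email_click` name are assumptions made for illustration; the other token names follow the pattern of the examples in this article.

```python
from typing import Optional

# Hypothetical price boundaries: where the tiers actually split is not stated.
CHEAP_MAX = 20.0
EXPENSIVE_MIN = 100.0


def price_tier(price: float) -> str:
    """Bucket a price into one of three tiers."""
    if price <= CHEAP_MAX:
        return "cheap"
    if price >= EXPENSIVE_MIN:
        return "expensive"
    return "medium"


def make_token(action: str, price: Optional[float] = None) -> str:
    """Map a raw event to one of the nine tokens."""
    if action in ("buy", "view"):
        if price is None:
            raise ValueError("buy and view events need a price")
        return f"{action}_{price_tier(price)}"
    if action in ("email_show", "email_open", "email_click"):
        return action
    raise ValueError(f"unknown action: {action}")


print(make_token("view", 50.0))
print(make_token("email_open"))
```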

A typical input sequence looks like this:

(view_medium, 0.5, view_cheap, 24, buy_cheap)

A user with this sequence viewed a mid-priced product, viewed a cheap product half an hour later, and bought a cheap product a day after that.
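The sequence above alternates tokens and time gaps. A small sketch of reading it, assuming the gaps are in hours (0.5 is half an hour, 24 is a day); the `parse_sequence` helper is hypothetical:

```python
from typing import List, Tuple, Union


def parse_sequence(flat: List[Union[str, float]]) -> List[Tuple[str, float]]:
    """Turn [token, gap, token, ...] into (token, hours since the first event) pairs."""
    events = []
    elapsed = 0.0
    for i, item in enumerate(flat):
        if i % 2 == 0:
            events.append((str(item), elapsed))  # even positions hold action tokens
        else:
            elapsed += float(item)  # odd positions hold the gap to the next action
    return events


sequence = ["view_medium", 0.5, "view_cheap", 24, "buy_cheap"]
events = parse_sequence(sequence)
for token, hours in events:
    print(f"{hours:5.1f} h  {token}")
```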

The last five user actions are the target variable: the neural network learns to predict them.
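As a sketch, splitting a user's history into model input and target could look like this. Time gaps are left out for brevity, and the history itself is made up for illustration.

```python
from typing import List, Tuple

N_TARGET = 5  # the last five actions form the target


def split_history(actions: List[str], n_target: int = N_TARGET) -> Tuple[List[str], List[str]]:
    """Split a chronological list of action tokens into (input, target)."""
    if len(actions) <= n_target:
        raise ValueError("history is too short to leave any input")
    return actions[:-n_target], actions[-n_target:]


# Hypothetical history, oldest action first.
history = [
    "view_cheap", "view_medium", "email_show",
    "email_show", "email_open", "view_cheap", "view_medium", "buy_medium",
]
model_input, target = split_history(history)
print(model_input)
print(target)
```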

What architecture of the neural network was used

The first attempts to train the neural network were unsuccessful: it overfit and always predicted only that an email would be sent, never other actions such as opening an email or making a purchase. Since customers receive emails far more often than they open them or buy something, “receiving an email” is the most frequent token. The network scored well on metrics, although the real result was negative: there is no point in an algorithm that always says the customer will receive an email and nothing else.

For example, take a sequence of three "receiving an email" tokens and one "purchase" token. The network processes it and predicts four "receiving an email" tokens. It is right in 3 cases out of 4, and the customer does indeed receive an email, but such a prediction is useless. The main task is to predict when a customer will open an email and make a purchase.
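The arithmetic behind this example, as a short sketch: a predictor that always outputs the most frequent token scores 75% accuracy here while never finding the purchase. The `accuracy` and `recall` helpers are written for illustration only.

```python
from collections import Counter
from typing import List


def accuracy(predicted: List[str], actual: List[str]) -> float:
    """Share of positions where the predicted token matches the actual one."""
    return sum(p == a for p, a in zip(predicted, actual)) / len(actual)


def recall(predicted: List[str], actual: List[str], token: str) -> float:
    """Share of actual occurrences of `token` that were predicted correctly."""
    relevant = [p for p, a in zip(predicted, actual) if a == token]
    if not relevant:
        raise ValueError(f"{token} never occurs in the actual sequence")
    return sum(p == token for p in relevant) / len(relevant)


actual = ["email_show", "email_show", "email_show", "buy_cheap"]
majority = Counter(actual).most_common(1)[0][0]
predicted = [majority] * len(actual)  # always predict the most frequent token

print(accuracy(predicted, actual))
print(recall(predicted, actual, "buy_cheap"))
```

This is why a per-token metric such as recall on purchase tokens is more informative here than overall accuracy.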

After testing several architectures and training approaches, we found one that worked.

As usual for Seq2Seq models, the network consists of two parts: an encoder and a decoder. The encoder is small and consists of embedding and LSTM layers, while the decoder also uses self-attention and dropout. In training, we use teacher forcing: the decoder is usually fed the true previous token, and only sometimes its own previous prediction, as input for the next step.

The encoder encodes the input sequence into a vector that holds the information about the user's actions that the network considers important. The decoder, in turn, decodes that vector into a sequence, which is the network's prediction.
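The teacher-forcing step can be sketched without any deep learning library. Here `step_fn` is a stand-in for one decoder step, and `teacher_forcing_ratio` is a hypothetical parameter: the probability of feeding the true token rather than the model's own prediction. Neither name comes from our training code.

```python
import random
from typing import Callable, List


def decode_with_teacher_forcing(
    step_fn: Callable[[str], str],
    start_token: str,
    target: List[str],
    teacher_forcing_ratio: float,
    rng: random.Random,
) -> List[str]:
    """Run the decoder for len(target) steps and return its predictions."""
    prev = start_token
    predictions = []
    for true_token in target:
        pred = step_fn(prev)
        predictions.append(pred)
        # Feed the ground truth with the given probability, else the model's own output.
        prev = true_token if rng.random() < teacher_forcing_ratio else pred
    return predictions


def toy_step(prev_token: str) -> str:
    """Stand-in for a decoder step: always predicts the most frequent token."""
    return "email_show"


target = ["email_open", "view_cheap", "buy_cheap"]
print(decode_with_teacher_forcing(toy_step, "<start>", target, 0.5, random.Random(0)))
```

Mixing in the model's own predictions during training exposes the decoder to the kind of inputs it will see at inference, when no ground truth is available.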

Getting a prediction using an LSTM network

Training time: the model was trained for about a day on a Tesla V100 GPU and reached a ROC-AUC of 0.74 at the end of training.

How the LSTM model works with real data (inference)

To apply the model to a user and find out whether it is worth sending them an email, we build a vector from their most recent actions and run it through the neural network. Suppose the network's response is:

(email_show, 10, email_open, 0.5, view_cheap, 0.5, view_medium, 15, buy_medium)

The model predicts not only actions but also how much time will pass between them. We cut off all events that happen more than a day from now. We will process them the next day, because by then new information about the customer's actions may have appeared and will need to be taken into account. We get the following sequence:

(email_show, 10, email_open, 0.5, view_cheap, 0.5)

There is a view token in the sequence, so an email will be sent to the user today.

It is important to send an email only if the sequence contains a view or purchase token, not just an email-receipt token, so that the network does not reproduce the trigger mailings it has memorized. If views and purchases are not taken into account, we may get a sequence made up only of email-receipt tokens, and then the network will duplicate the marketer's trigger settings instead of predicting an email open or a purchase.
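The decision rule described in this section can be sketched as follows. Two things are assumptions here: that the gaps are in hours, and that the one-day window is measured cumulatively from the first predicted event. The exact cut-off rule may treat borderline events differently from the truncated example above. Under this reading, `buy_medium` falls 26 hours out and is cut.

```python
from typing import List, Union

HORIZON_HOURS = 24.0  # "a day", assuming gaps are in hours
SEND_PREFIXES = ("view_", "buy_")  # tokens that justify sending an email


def truncate_to_horizon(flat: List[Union[str, float]], horizon: float = HORIZON_HOURS) -> List[str]:
    """Keep the tokens whose cumulative predicted time is within the horizon."""
    kept = []
    elapsed = 0.0
    for i, item in enumerate(flat):
        if i % 2 == 1:  # odd positions hold the gap to the next action
            elapsed += float(item)
            if elapsed > horizon:
                break
        else:
            kept.append(str(item))
    return kept


def should_send_today(tokens: List[str]) -> bool:
    """Send only if a view or purchase is predicted, not just email receipts."""
    return any(t.startswith(SEND_PREFIXES) for t in tokens)


predicted = ["email_show", 10, "email_open", 0.5, "view_cheap", 0.5, "view_medium", 15, "buy_medium"]
kept = truncate_to_horizon(predicted)
print(kept, should_send_today(kept))
```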

How the result was evaluated

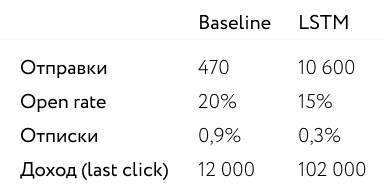

To check the model's performance, we ran A/B tests. As a baseline, we used an algorithm that calculates the average time between a user's purchases and sends an email once that time has passed. One half of the users received emails based on the baseline's decisions, the other half based on the model's predictions. The A/B tests were conducted on the customer bases of the pet shops Beethoven and Staraya Farm.
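A minimal sketch of such a baseline. The function names and purchase dates are made up, and details such as how users with a single purchase are handled are assumptions.

```python
from datetime import date, timedelta
from typing import List, Optional


def average_gap_days(purchases: List[date]) -> Optional[float]:
    """Average number of days between consecutive purchases, or None if fewer than two."""
    if len(purchases) < 2:
        return None
    ordered = sorted(purchases)
    gaps = [(later - earlier).days for earlier, later in zip(ordered, ordered[1:])]
    return sum(gaps) / len(gaps)


def baseline_should_send(purchases: List[date], today: date) -> bool:
    """Send once the user's average purchase gap has passed since the last purchase."""
    avg = average_gap_days(purchases)
    if avg is None:
        return False  # assumption: no email without at least two purchases
    return today >= max(purchases) + timedelta(days=avg)


# Hypothetical purchase history with gaps of 10 and 20 days.
purchases = [date(2021, 1, 1), date(2021, 1, 11), date(2021, 1, 31)]
print(average_gap_days(purchases))
print(baseline_should_send(purchases, date(2021, 2, 15)))
```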

The test lasted two weeks and reached statistical significance. The neural network learned to find 23 times more users who should be sent an email, while the open rate fell by only 5% and the absolute number of opens grew 17 times.
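One way to read these figures together, assuming one email per selected user: if emails grow 23 times and opens grow 17 times, the model group's open rate is 17/23 of the baseline's.

```python
emails_multiplier = 23  # growth in users who were sent an email
opens_multiplier = 17   # growth in the absolute number of opens

# Open rate = opens / emails, so the ratio of open rates is the ratio of the multipliers.
relative_open_rate = opens_multiplier / emails_multiplier
print(f"{relative_open_rate:.2f}")
```

A ratio of roughly 0.74 suggests that the 5% drop is measured in percentage points rather than as a relative change. This is an inference from the two multipliers, not a figure reported in the test.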

A/B test results for the LSTM neural network model and conclusions

So the experiment of replacing the algorithm with a neural network was a success. The LSTM model proved to be a suitable tool for predicting the best email send date. We have learned from our own experience that there is no need to be afraid of using non-standard models to solve routine problems.

Sergey Yudin, ML-developer, author