You've just downloaded a game you pre-ordered. The installation finishes, and you launch it. Everything seems fine: the game flies along at 60 FPS. Or at least that's what the frame counter in your GPU overlay tells you. But something is wrong. You move the mouse back and forth and notice that the game is... stuttering.

How is this possible? What kind of game stutters at 60 FPS?

It may seem absurd until you run into it yourself. If you have experienced this kind of stutter, you probably already hate it.

This is not lag, and it is not a low frame rate. This is stuttering, and it happens at a high frame rate on an ideal, ultra-fast setup.

What is it, where did it come from and is there a way to get rid of it? Let's figure it out now.

Since the first arcade machines appeared in the 70s, video games have run at 60 FPS. It is usually taken for granted that a game should run at the same rate as the display. Only after 3D games became popular did we have to face and accept lower frame rates. Back in the 90s, when "3D cards" (now called "GPUs") began to replace software rendering, people played at 20 frames per second, and 35 FPS was already considered good enough for serious online competition.

Now we have super-fast machines that can certainly run at 60 FPS. And yet... it seems more people are unhappy with performance than ever. How is this possible?

It's not that games aren't fast enough. It's that they stutter even when performance is high.

If you browse the gaming forums, you will probably see something like this in the headlines:

PC gamers often complain that games stutter even when there are no frame rate issues.

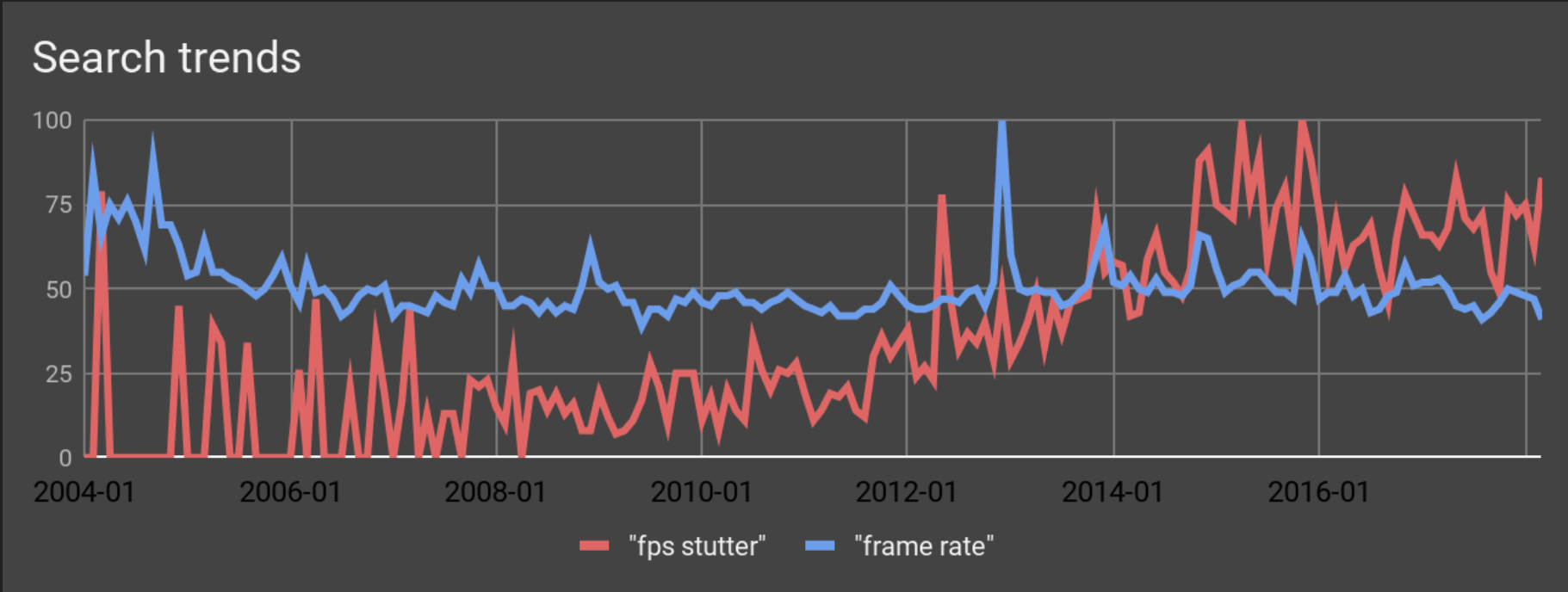

One might assume these are isolated cases, but Google search statistics dispel that assumption:

Over the past 5 years, stuttering has become (relatively) more of a problem than performance.

(Note that these are relative values. It's not that people search for information about stuttering more often than about frame rate in general. While the number of searches about frame rate stays the same, searches about stuttering are becoming more common, especially lately.)

A decade of searching for the cause of stuttering

The patient is definitely alive. Its heart just skips a beat every now and then.

The author first encountered this problem around 2003 while working on Serious Sam 2. People started reporting that screen and mouse movement was not smooth, even when testing on an empty level. This was accompanied by a very specific pattern in the frame rate graph, which the development team called the "heartbeat".

The first thought was that a bug had crept into the code somewhere, but no one could find it. The problem seemed to appear and disappear at random, when restarting the game or restarting the computer... and as soon as you changed any performance setting, it disappeared. Then you could change the setting back, and everything kept working perfectly. A ghost problem.

Clearly, Sam wasn't the only game affected. Other games showed the same problem, which suggested that something was wrong with the drivers. But the stuttering did not depend on the GPU manufacturer, and it occurred with different APIs (OpenGL, DirectX 9, DirectX 11...). The only common factor was that the stuttering appeared here and there, on some machines and in some game scenes.

As new games were released, the problem kept appearing and disappearing. Only some users were affected, and technical support could do no more than suggest changing some performance settings, which sometimes helped and sometimes didn't; you never knew.

Then, one fine winter day in early 2013, the people at Croteam found another instance of the problem, one that could be reproduced fairly consistently, this time on one of the levels in Serious Sam 3. They fiddled with that scene for a long time until it suddenly dawned on them. It was so simple that it was no wonder it had escaped everyone's notice for a decade.

By changing just one simple option in the game engine, they were able to make the problem go away. However, it immediately became clear that a real solution would require much more time and effort, and not just from one team but from the entire gaming ecosystem: GPU driver developers, API maintainers, OS vendors, everyone.

What has been happening all this time

This is what it looks like when a game stutters even at 60 FPS. You may have experienced something similar in any modern game, and the first thing you probably thought was that the game was poorly optimized. Well, let's put that theory to the test.

If a game is "too slow", then at some points it cannot render a frame fast enough, and the monitor has to show the previous frame again. So if we record a 60 FPS video of it, the video should contain "dropped frames": moments where the next frame was not ready in time, so the same frame was shown twice.

However, the stutter is only visible when you play the whole animation. If you stepped through it frame by frame, you would not find a single dropped frame.

How is this possible?

Let's take a closer look. Below is a side-by-side comparison of a perfectly smooth video and a stuttering one:

Six consecutive frames with accurate timing. Top: correctly timed frames; bottom: stuttering frames.

You can see two things here. First, they really do run at the same speed: whenever a new frame appears at the top (correct), a new frame also appears at the bottom (stuttering). Second, for some reason they seem to move a little differently: there is a noticeable "gap" in the middle of the image that alternately grows and shrinks.

The most attentive readers may notice another curious detail: the bottom video, which is supposedly "slower", is actually "ahead" of the correct one. Strange, isn't it?

If we look at several consecutive frames and their timing, we can see something else interesting: the first two frames are perfectly in sync, but in the third frame the tree in the "slower" video is significantly ahead of its counterpart in the "correct" video (circled in red). You can also see that this frame clearly took longer (circled in yellow).

Wait, wait... but if the video is “slower” and the frame “took longer”, how can it be ahead?

To follow the rest of the explanation, you first need to understand how modern games and other 3D applications generally handle animation and rendering.

A brief history of frame sync

A long time ago, in a galaxy far, far away... when developers created the first video games, they usually designed them around the exact frame rate of the display. In NTSC regions, where TVs run at 60 Hz, this meant 60 frames per second, and in PAL/SECAM regions, where TVs run at 50 Hz, 50 frames per second.

Most games were very simple concepts running on fixed hardware, usually an arcade machine or a well-known "home microcomputer" such as the ZX Spectrum, C64, Atari ST, Amstrad CPC 464, Amiga, etc. By building and testing a game for a specific machine and a specific frame rate, the developer could be 100% sure that the frame rate would never drop anywhere.

Object speeds were also stored in per-frame units. That is, you needed to know not how many pixels per second a character would move, but how many pixels per frame. For example, in Sonic The Hedgehog for the Sega Genesis, this speed is 16 pixels per frame. Many games even had separate versions for the PAL and NTSC regions, with animations hand-drawn specifically for 50 and 60 FPS, respectively. In fact, running at any other frame rate was simply impossible.
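To make per-frame units concrete, here is a minimal Python sketch of a frame-locked update. It is not taken from any real game's code; the function names are hypothetical, and the 16 pixels per frame value is the Sonic example above. Because one update equals one displayed frame, the on-screen speed in pixels per second depends entirely on the display rate.

```python
def advance_frame_locked(x_px: int, speed_px_per_frame: int) -> int:
    """Move an object by a fixed number of pixels per displayed frame.

    There is no notion of elapsed time here: one call == one frame.
    """
    return x_px + speed_px_per_frame


def pixels_per_second(speed_px_per_frame: int, fps: int) -> int:
    """Apparent on-screen speed when the game is locked to `fps`."""
    return speed_px_per_frame * fps


if __name__ == "__main__":
    x = 0
    for _ in range(60):  # one second of frames on a 60 Hz (NTSC) display
        x = advance_frame_locked(x, 16)
    print(x)                          # 960
    print(pixels_per_second(16, 60))  # 960 on a 60 Hz display
    print(pixels_per_second(16, 50))  # 800 on a 50 Hz display
```

With no adjustment, the same frame-locked logic simply runs about 17% slower on a 50 Hz display (800 versus 960 pixels per second).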

As games began to run on more varied devices over time, including PCs with ever-expanding and upgraded hardware, it became impossible to know exactly what frame rate a game would run at. This was compounded by the games themselves becoming more complex and unpredictable. This is especially noticeable in 3D games, where scene complexity can vary widely, sometimes even depending on what the players do. For example, everyone loves to shoot at stacks of fuel barrels, setting off a colorful chain of explosions... and an inevitable drop in frame rate. But since it's fun, no one really minds.

Therefore, it is difficult to predict how long it will take to model and render one frame. (Please note that on modern consoles we can assume that the hardware is fixed, but the games themselves are still quite unpredictable and complex.)

If you cannot be sure what frame rate the game will run at, you need to measure the current frame rate and constantly adapt the game's physics and animation speed to it. If one frame takes 1/60 of a second (16.67 ms) and your character runs at 10 m/s, it moves 1/6 of a meter each frame. But if a frame suddenly starts taking 1/30 of a second (33.33 ms), you have to move the character 1/3 of a meter per frame (twice as far) so that it keeps moving at the same apparent speed.
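The arithmetic above fits in a few lines of Python. This sketch uses exact fractions so that the 1/6 m and 1/3 m values come out exactly; the function name is hypothetical.

```python
from fractions import Fraction


def distance_per_frame(speed_m_per_s, frame_time_s):
    """Distance to move in one frame so the apparent speed stays constant."""
    return speed_m_per_s * frame_time_s


if __name__ == "__main__":
    speed = 10  # m/s, as in the example above
    at_60 = distance_per_frame(speed, Fraction(1, 60))
    at_30 = distance_per_frame(speed, Fraction(1, 30))
    print(at_60)       # 1/6 (meters per frame at 60 FPS)
    print(at_30)       # 1/3 (meters per frame at 30 FPS)
    print(60 * at_60)  # 10  (meters per second either way)
    print(30 * at_30)  # 10
```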

How is this done? Typically, the game records the time at the start of each frame and takes the difference between consecutive frames. It is a fairly simple method, and it works really well.
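A minimal Python sketch of this classic approach follows. `FrameTimer` and `run_frames` are hypothetical names, and the clock is injectable so the logic can be checked with fake timestamps. By default it uses `time.perf_counter()`, Python's high-resolution timer.

```python
import time


class FrameTimer:
    """Measures the time between the starts of consecutive frames."""

    def __init__(self, clock=time.perf_counter):
        self._clock = clock
        self._last = None

    def tick(self) -> float:
        """Call once at the start of each frame; returns delta time in seconds."""
        now = self._clock()
        dt = 0.0 if self._last is None else now - self._last
        self._last = now
        return dt


def run_frames(timer, frames, speed_m_per_s, position_m=0.0):
    """A bare-bones game loop: advance the simulation by the measured dt."""
    for _ in range(frames):
        dt = timer.tick()
        position_m += speed_m_per_s * dt
        # ... render and submit the frame to the GPU here ...
    return position_m


if __name__ == "__main__":
    fake_stamps = iter([0.0, 0.5, 1.0])  # frame start times in seconds
    timer = FrameTimer(clock=lambda: next(fake_stamps))
    print(run_frames(timer, 3, speed_m_per_s=10.0))  # 10.0
```

The important detail is what is being measured: the moment the CPU *starts* a frame, not the moment that frame reaches the screen.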

Or rather, it used to work very well. Back in the 90s, when 35 FPS was considered a high frame rate, people were more than happy with it. But at that time, the graphics card was not such a significant part of the PC, and the CPU controlled everything that happened on the screen. If you didn't have a 3D accelerator, the CPU even drew everything itself, so it knew exactly when each frame would hit the screen.

The situation today

Over time, GPUs became more and more complex, and inevitably more and more "asynchronous". This means that when the CPU tells the GPU to draw something, the GPU simply stores that command in a buffer, so the CPU can keep working while the GPU renders. Ultimately, this leads to a situation where the CPU tells the GPU that a frame has ended, and the GPU just records this in its queue without treating it as a priority, because it is still processing previously issued commands. It will show the frame on the screen only when it has finished everything that was submitted before it.
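To see why CPU-side timestamps can jitter even when the display is perfectly steady, here is a toy Python model of a CPU feeding an asynchronous frame queue. It is a hypothetical simplification, not how any real driver works. It assumes GPU rendering cost can be ignored, the display shows at most one new frame per 60 Hz refresh, the CPU may run at most `max_queued` frames ahead, and when the CPU has to wait, the OS may wake it up a little late (`wake_jitter_ms`).

```python
import math

VSYNC_MS = 1000.0 / 60.0  # assumed 60 Hz display refresh period


def simulate(cpu_work_ms, wake_jitter_ms, max_queued=2):
    """Toy model of a CPU submitting frames to an asynchronous display queue.

    Returns (cpu_start_times, display_times) in milliseconds.
    """
    starts, displays = [], []
    prev_end = 0.0
    for i, (work, jitter) in enumerate(zip(cpu_work_ms, wake_jitter_ms)):
        start = prev_end
        if i >= max_queued:
            # Queue is full: wait until frame i - max_queued has been shown,
            # then wake up `jitter` ms late because of OS scheduling.
            start = max(start, displays[i - max_queued] + jitter)
        end = start + work
        # First refresh at or after the moment the frame is ready...
        slot = math.ceil(end / VSYNC_MS) * VSYNC_MS
        # ...but never the same refresh as the previous frame.
        if displays:
            slot = max(slot, displays[-1] + VSYNC_MS)
        starts.append(start)
        displays.append(slot)
        prev_end = end
    return starts, displays


if __name__ == "__main__":
    starts, displays = simulate([5.0] * 8, [0, 0, 0, 8.0, 0, 0, 0, 0])
    cpu_deltas = [b - a for a, b in zip(starts, starts[1:])]
    display_deltas = [b - a for a, b in zip(displays, displays[1:])]
    print("CPU frame-start deltas (ms):", [round(d, 2) for d in cpu_deltas])
    print("Display deltas (ms):", [round(d, 2) for d in display_deltas])
```

In this run, every display delta is 16.67 ms, but after a short start-up phase the CPU frame-start deltas contain a 24.67 ms interval followed by an 8.67 ms one: a single late wake-up is enough to make the measured frame times swing while nothing reaches the screen late.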

So when the game tries to measure frame time by subtracting the timestamps at the start of two consecutive frames, the relevance of the result is, frankly... highly questionable. Let's go back to our example, where we had the following frames:

Six consecutive frames with precise timing. The top row is correct; the bottom row stutters.

In the first two frames, the frame time is 16.67 ms (1/60 of a second), and the camera moves by the same amount in both rows, so the trees are in sync. In the third frame of the bottom (stuttering) row, the game measured a frame time of 24.8 ms (more than 1/60 of a second), so it thinks the frame rate has dropped and rushes to catch up... only to find that the next frame took just 10.7 ms, which makes the camera slow down, and now the trees are more or less in sync again.
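The frame times above can be plugged into a short Python sketch that accumulates camera movement for both rows. The camera speed of 1 unit per second is an arbitrary assumption, chosen so the position difference reads directly as "milliseconds of camera travel".

```python
IDEAL_DT = 1 / 60  # seconds; the display really refreshes at 60 Hz

# Frame times the game *measured* in the example above (seconds).
measured_dts = [1 / 60, 1 / 60, 0.0248, 0.0107]


def camera_positions(dts, speed=1.0):
    """Cumulative camera position after each frame, moving at `speed` units/s."""
    positions, x = [], 0.0
    for dt in dts:
        x += speed * dt
        positions.append(x)
    return positions


ideal = camera_positions([IDEAL_DT] * len(measured_dts))
stutter = camera_positions(measured_dts)
for i, (a, b) in enumerate(zip(ideal, stutter), start=1):
    # Positive values: the stuttering camera is ahead of where it should be.
    print(f"frame {i}: ahead by {(b - a) * 1000:+.2f} ms of camera travel")
```

The stuttering camera is exactly in sync for frames 1 and 2, jumps about 8.1 ms ahead in frame 3, and falls back to about 2.2 ms ahead in frame 4: it is "ahead" precisely because the longer measured frame time made it move further, even though every frame was displayed on schedule.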

What's going on? The frame times the game measures fluctuate for various reasons, especially on a busy multitasking system like a PC. So at some points the game assumes the frame rate has dropped below 60 FPS and generates animation frames meant for a lower frame rate. But because the GPU works asynchronously, the frames still end up being displayed at a steady 60 frames per second.

This is stuttering: animation generated for a variable frame rate (the "heartbeat") but displayed at the actual, correct, fixed frame rate.

So, in essence, there is no problem: everything is running smoothly, and the game just doesn't know it.

This brings us back to what we mentioned at the beginning of the article. Once we have finally figured out the cause of the problem (even though we know it is only the illusion of a problem; there really is no problem, right?), we can apply the following magic pill.

What is this pill? In the Serious Engine, it is the setting sim_fSyncRate = 60. In simple terms, it means: "completely ignore all these shenanigans and pretend that we always measure a stable 60 frames per second." And it makes everything run smoothly, simply because everything was running smoothly from the start! The only reason stuttering appeared was that the timing used for animation was wrong.
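The idea behind the pill can be sketched in Python as follows. This is a hypothetical illustration of the concept, not the Serious Engine's actual implementation: `simulation_dt` is an invented name, and only the variable name sim_fSyncRate comes from the text. When a sync rate is set, the measured frame time is ignored and a fixed step is used instead.

```python
def simulation_dt(measured_dt: float, sync_rate: float = 0.0) -> float:
    """Pick the time step used to advance animation for the next frame.

    Hypothetical illustration of the idea behind sim_fSyncRate:
    if sync_rate > 0, ignore the measurement and use 1/sync_rate seconds.
    """
    if sync_rate > 0:
        return 1.0 / sync_rate
    return measured_dt


measured = [1 / 60, 1 / 60, 0.0248, 0.0107]  # jittery CPU-side measurements
steps = [simulation_dt(dt, sync_rate=60) for dt in measured]
print(steps)  # every step is 1/60 s, so the camera advances evenly
```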

Is that all?

So the solution is that simple?

Unfortunately, no. This only works in tests. If we stopped measuring the frame rate in real conditions and simply assumed it is always 60 FPS, then whenever it drops below 60 (and on a PC it will drop sooner or later, for whatever reason: programs running in the background, power saving or overheating protection, who knows), everything will slow down.
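The cost of always assuming 60 FPS is easy to quantify. In this Python sketch (the function name is hypothetical), each displayed frame advances the game by a fixed 1/60 s, so the game clock runs at the ratio of the real frame rate to the assumed one.

```python
def game_seconds_per_real_second(actual_fps: float, assumed_fps: float = 60.0) -> float:
    """Game time that passes per real second with a fixed 1/assumed_fps step.

    Each displayed frame advances the game by 1/assumed_fps seconds,
    and actual_fps frames are displayed per real second.
    """
    return actual_fps / assumed_fps


print(game_seconds_per_real_second(60))  # 1.0: correct speed
print(game_seconds_per_real_second(45))  # 0.75: everything moves at 75% speed
print(game_seconds_per_real_second(30))  # 0.5: slow motion at half speed
```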

So if we measure frame time, we get stuttering, and if we don't, at some point everything may slow down. What then?

The real solution would be to measure not the start / end time of rendering a frame, but the time when the image is shown on the screen.
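Here is a minimal Python sketch of the idea, under a big assumption: that the game somehow has a list of the times at which past frames actually appeared on screen. Vulkan's VK_GOOGLE_display_timing extension, for example, reports such past presentation times through `vkGetPastPresentationTimingGOOGLE`. Here they are simply passed in as plain numbers, and `dt_from_display_times` is a hypothetical name.

```python
def dt_from_display_times(display_times_s):
    """Delta time derived from when the last two frames were actually shown.

    `display_times_s` is assumed to be a list of timestamps (seconds) at which
    past frames reached the screen.
    """
    if len(display_times_s) < 2:
        return 0.0
    return display_times_s[-1] - display_times_s[-2]


REFRESH = 1 / 60
smooth = [i * REFRESH for i in range(5)]           # a new frame every refresh
dropped = [0.0, REFRESH, 2 * REFRESH, 4 * REFRESH]  # one refresh was missed
print(dt_from_display_times(smooth))   # about 1/60 s, however jittery the CPU was
print(dt_from_display_times(dropped))  # about 1/30 s, a real slowdown is detected
```

Unlike frame-start timestamps, this value stays constant while the display is steady, yet still grows when a frame genuinely misses a refresh, so it addresses both sides of the dilemma. A real engine would also have to cope with the fact that such timestamps arrive a few frames late; this sketch ignores that.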

But how can the game know when a frame is actually displayed on the screen?

It can't. At the moment, this is simply impossible.

Strange, but true. One would expect this to be a core feature of every graphics API. But no: the APIs have changed in every other respect, just not in this one. There is no way to know for sure when a frame will actually appear on the screen. You can find out when rendering finished, but that is not the same as display time.

Now what?

Well, it's not all that bad. Many people are actively working on proper frame timing support for various APIs. The Vulkan API already has an extension called VK_GOOGLE_display_timing that has demonstrated this concept in practice, but it is only available on a limited number of devices.

Work is underway to provide similar and better solutions in, I would like to believe, all major graphics APIs. When? It is hard to say, because the problem cuts deep into various OS subsystems.

However, we hope that it will soon become available to the wider public.

Various caveats and other details

This is the end of the main text. The sections below are "bonus features" that are largely independent of each other and of the text above.

"Compositor"

Is that a frosted glass effect? Yeah, that's why we have to have a compositor. Pretty important, isn't it?

Behind the scenes, a compositing window manager, also known as a compositor, is involved. This is a system now present in every OS that allows windows to be transparent and to have blurred backgrounds, shadows, and so on. Compositors can go further and show your program windows in 3D. To do this, the compositor takes control of the very last stage of the frame and decides what to do with it just before it reaches the monitor.

In some operating systems, the compositor can be disabled in full-screen mode. But this is not always possible, and even then, shouldn't we be able to run the game in windowed mode?

Power & Thermal Management VS Rendering Complexity

We must also take into account that modern CPUs and GPUs do not run at a fixed frequency: both have systems that adjust their clock speed up and down depending on load and current temperature. So the game cannot simply assume they will run at the same speed from frame to frame. On the other hand, the operating system and drivers cannot expect the game to do the same amount of work every frame. The communication between the two sides has to be designed with all of this in mind.

Couldn't we just ...

We couldn't. :) Measuring GPU time is often suggested as an alternative to display time. But this ignores the compositor and the fact that none of the GPU rendering timers are actually synchronized with the display refresh. For perfect animation, we really need to know exactly when the image was displayed, not when it finished rendering.