I wrote this article because it is the one I wish I had in front of me when I was deploying a cluster from the documentation. I should say right away that I am not a K8S expert, but I do have experience deploying production installations of DC/OS (an ecosystem based on Apache Mesos). For a long time K8S scared me off: as soon as you try to study it, you are bombarded with so many concepts and terms that your brain explodes.

However, I was given the task of setting up a fault-tolerant bare-metal cluster for a complex application, and this article is the result. In this tutorial I cover the following:

correct installation of K8S using kubeadm on nodes with multiple NICs;

implementation of a fault-tolerant Control Plane with shared IP and DNS name access;

implementation of an Ingress controller based on Nginx on dedicated nodes with access from the public network;

forwarding the K8S API to the public network;

access to the K8S Dashboard UI from the administrator's computer.

I did the installation on Ubuntu 18.04, so some of the steps may not work on your distribution. I also ran into specific problems with Keepalived and Pacemaker. The text may contain phrases such as "as I understand it ..." and "I still do not quite understand ...".

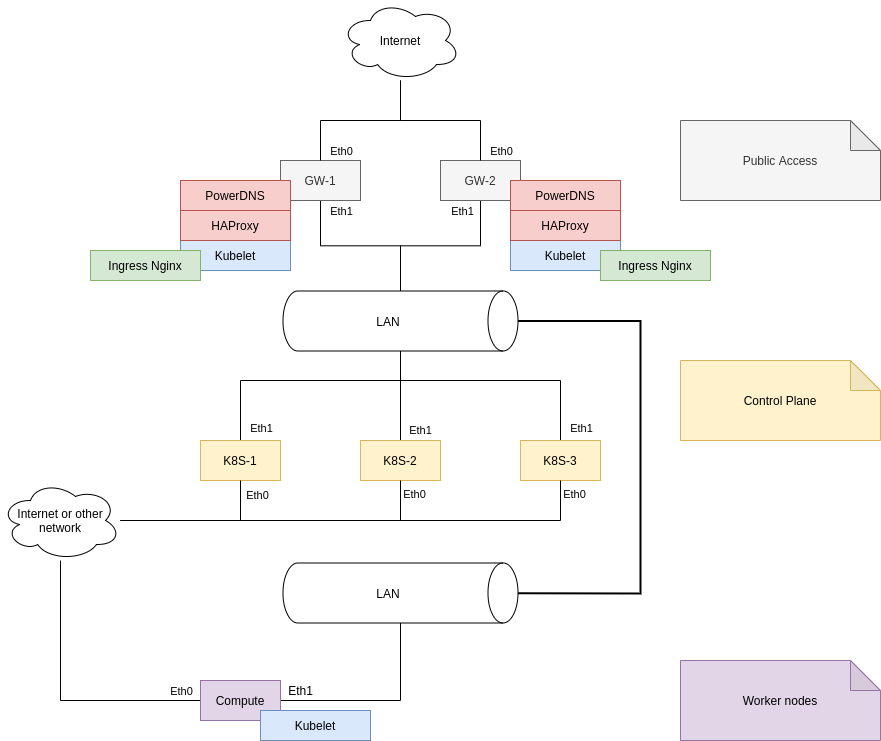

First, let's look at the node topology of the cluster in which we will be deploying K8S. This is a simplified topology with no unnecessary details.

A distinctive feature of my cluster is that every node has two network interfaces: eth0 always carries a public address, and eth1 carries an address from the 10.120.0.0/16 network.

, K8S , NIC. - .

, K8S NIC , , "" , , .

Kubeadm , IP- - , , "-", , .

Enterprise K8S, - , , , Ceph VLAN-.

, Ansible, playbook-, . , .

DNS server

For name resolution inside the cluster, pdns-recursor is installed on gw-1 and gw-2.

The pdns-recursor configuration on gw-1 and gw-2:

allow-from=10.120.0.0/16, 127.0.0.0/8

etc-hosts-file=/etc/hosts.resolv

export-etc-hosts=on

export-etc-hosts-search-suffix=cluster

The /etc/hosts.resolv file is generated by Ansible and looks like this:

# Ansible managed

10.120.29.231 gw-1 gw-1

10.120.28.23 gw-2 gw-2

10.120.29.32 video-accessors-1 video-accessors-1

10.120.29.226 video-accessors-2 video-accessors-2

10.120.29.153 mongo-1 mongo-1

10.120.29.210 mongo-2 mongo-2

10.120.29.220 mongo-3 mongo-3

10.120.28.172 compute-1 compute-1

10.120.28.26 compute-2 compute-2

10.120.29.70 compute-3 compute-3

10.120.28.127 zk-1 zk-1

10.120.29.110 zk-2 zk-2

10.120.29.245 zk-3 zk-3

10.120.28.21 minio-1 minio-1

10.120.28.25 minio-2 minio-2

10.120.28.158 minio-3 minio-3

10.120.28.122 minio-4 minio-4

10.120.29.187 k8s-1 k8s-1

10.120.28.37 k8s-2 k8s-2

10.120.29.204 k8s-3 k8s-3

10.120.29.135 kafka-1 kafka-1

10.120.29.144 kafka-2 kafka-2

10.120.28.130 kafka-3 kafka-3

10.120.29.194 clickhouse-1 clickhouse-1

10.120.28.66 clickhouse-2 clickhouse-2

10.120.28.61 clickhouse-3 clickhouse-3

10.120.29.244 app-1 app-1

10.120.29.228 app-2 app-2

10.120.29.33 prometeus prometeus

10.120.29.222 manager manager

10.120.29.187 k8s-cp

The Ansible template:

# {{ ansible_managed }}

{% for item in groups['all'] %}

{% set short_name = item.split('.') %}

{{ hostvars[item]['host'] }} {{ item }} {{ short_name[0] }}

{% endfor %}

10.120.0.1 k8s-cp
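To sanity-check what the template produces without running Ansible, the same transformation can be sketched in plain shell. This is only an illustration: the `render_hosts` name and the "IP NAME" input format are my assumptions, not part of the original playbook.

```shell
# Hypothetical helper: reads "IP NAME" pairs on stdin and prints
# "IP NAME SHORT_NAME", mirroring the Jinja2 loop in the template.
render_hosts() {
    awk 'NF >= 2 {
        split($2, parts, "[.]")   # short name = text before the first dot
        print $1, $2, parts[1]
    }'
}

# Example input; the .cluster suffix is only there to show the split.
printf '%s\n' '10.120.29.231 gw-1.cluster' '10.120.29.187 k8s-1' | render_hosts
```

When the inventory name has no domain part, the name and the short name come out identical, which is why every host appears twice per line in the generated file.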

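Since the nodes get their network settings, including DNS servers, via DHCP, name resolution has to be pointed at our own DNS servers. On Ubuntu 18.04 name resolution is handled by systemd-resolved, so it must be told to use gw-1 and gw-2. Configure this through systemd by creating /etc/systemd/network/0-eth0.network so that systemd-resolved picks up the right servers: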

[Match]

Name=eth0

[Network]

DHCP=ipv4

DNS=10.120.28.23 10.120.29.231

Domains=cluster

[DHCP]

UseDNS=false

UseDomains=false

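What this means: eth0 is configured via DHCP, but the DNS servers supplied by the DHCP server are ignored. Instead, 10.120.28.23 and 10.120.29.231 are used, and names are looked up in the *.cluster domain.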

, systemd-resolved

DHCP. , .

Check the result with systemd-resolve --status:

Global

DNSSEC NTA: 10.in-addr.arpa

16.172.in-addr.arpa

168.192.in-addr.arpa

17.172.in-addr.arpa

18.172.in-addr.arpa

19.172.in-addr.arpa

20.172.in-addr.arpa

21.172.in-addr.arpa

22.172.in-addr.arpa

23.172.in-addr.arpa

24.172.in-addr.arpa

25.172.in-addr.arpa

26.172.in-addr.arpa

27.172.in-addr.arpa

28.172.in-addr.arpa

29.172.in-addr.arpa

30.172.in-addr.arpa

31.172.in-addr.arpa

corp

d.f.ip6.arpa

home

internal

intranet

lan

local

private

test

Link 3 (eth1)

Current Scopes: none

LLMNR setting: yes

MulticastDNS setting: no

DNSSEC setting: no

DNSSEC supported: no

Link 2 (eth0)

Current Scopes: DNS

LLMNR setting: yes

MulticastDNS setting: no

DNSSEC setting: no

DNSSEC supported: no

DNS Servers: 10.120.28.23

10.120.29.231

DNS Domain: cluster

Now both ping gw-1 and ping gw-1.cluster should resolve to the node's IP address.

Disable swap. Kubernetes does not work with swap enabled, so turn it off:

sudo -- sh -c "swapoff -a && sed -i '/ swap / s/^/#/' /etc/fstab"

You can also remove the swap partition with fdisk.
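Before running sed -i against the real /etc/fstab, it may be worth seeing what the expression does on a throwaway copy. The sketch below does exactly that; the `comment_out_swap` name and the sample fstab entries are invented for illustration.

```shell
# Dry run of the sed expression from the swapoff command on a temporary
# file, so the real /etc/fstab stays untouched.
comment_out_swap() {
    # Prefix every line containing " swap " with "#".
    sed '/ swap / s/^/#/' "$1"
}

sample=$(mktemp)
cat > "$sample" <<'EOF'
UUID=aaaa-bbbb / ext4 defaults 0 1
/dev/sda2 none swap sw 0 0
EOF

comment_out_swap "$sample"
rm -f "$sample"
```

Note that the pattern only matches when the word swap is surrounded by spaces, so an fstab that separates fields with tabs would need a different pattern.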

. Flannel - K8S. , VXLAN

. .

, multicast

, VXLAN

multicast

-, . multicast

- , , VXLAN

, VXLAN

BGP . , Kubernetes - . , Flannel VXLAN

multicast. VXLAN+multicast

over VXLAN+multicast

over Ethernet

, VXLAN-

, Ethernet

multicast

- .

Add br_netfilter and overlay to /etc/modules.

Run modprobe br_netfilter && modprobe overlay to load them right away, without a reboot.

Add to /etc/sysctl.conf:

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

Apply the settings with sysctl -p.
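With many nodes it is easy to miss one of the three parameters on some host. A minimal check could look like the sketch below; the `check_sysctl_conf` function is hypothetical and only inspects a file, it does not query the running kernel.

```shell
# Hypothetical check: verifies that a sysctl-style file sets every
# parameter listed in this section to 1.
check_sysctl_conf() {
    file=$1
    missing=0
    for key in net.bridge.bridge-nf-call-ip6tables \
               net.bridge.bridge-nf-call-iptables \
               net.ipv4.ip_forward; do
        # Accept both "key = 1" and "key=1"; commented lines do not match.
        if ! grep -Eq "^[[:space:]]*${key}[[:space:]]*=[[:space:]]*1[[:space:]]*$" "$file"; then
            echo "missing or not 1: $key"
            missing=1
        fi
    done
    return "$missing"
}
```

On a node it would be called as check_sysctl_conf /etc/sysctl.conf; a non-zero exit status means at least one parameter is absent or not set to 1.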

Containerd

Install Containerd as the container runtime for Kubernetes (Docker itself is built on Containerd):

sudo apt-get update

sudo apt install containerd

sudo -- sh -c "mkdir -p /etc/containerd && containerd config default | tee /etc/containerd/config.toml"

sudo service containerd restart

Installing kubeadm, kubelet, kubectl

Install them following the official K8S instructions:

sudo apt-get update && sudo apt-get install -y apt-transport-https curl
curl -s https://packages.cloud.google.com/apt/doc/apt-key.gpg | sudo apt-key add -
cat <<EOF | sudo tee /etc/apt/sources.list.d/kubernetes.list
deb https://apt.kubernetes.io/ kubernetes-xenial main
EOF
sudo apt-get update
sudo apt-get install -y kubelet kubeadm kubectl
sudo apt-mark hold kubelet kubeadm kubectl

Control Plane

The K8S Control Plane will run on three nodes, k8s-{1,2,3}. On the first node, run:

kubeadm init --pod-network-cidr=10.244.0.0/16 \

--control-plane-endpoint=k8s-cp \

--apiserver-advertise-address=10.120.29.187

Flannel requires --pod-network-cidr=10.244.0.0/16. POD addresses in K8S will be allocated from this network.

--control-plane-endpoint string Specify a stable IP address or DNS name for the control plane.

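This is the DNS name or IP address through which every K8S node reaches the Control Plane. In my case it is k8s-cp, which will eventually resolve to 10.120.0.1 (see below; for now, during installation, k8s-cp points to the first Control Plane node: 10.120.29.187 k8s-cp).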

--apiserver-advertise-address

. api-server

, Etcd

, , . , kubeadm

, -, . , Etcd

, Etcd

, . .

, Flannel , , Control Plane ( IP- ).

, , .

, , Kubeadm

K8S . , Control Plane . , :

ps xa | grep -E '(kube-apiserver|etcd|kube-proxy|kube-controller-manager|kube-scheduler)'

Next, to use kubectl, set up its config:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Check that kubectl works by running kubectl get pods --all-namespaces. The output should look roughly like this:

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system etcd-k8s-1 1/1 Running 0 2d23h

kube-system kube-apiserver-k8s-1 1/1 Running 0 2d23h

kube-system kube-controller-manager-k8s-1 1/1 Running 1 2d23h

kube-system kube-scheduler-k8s-1 1/1 Running 1 2d23h

, 1 , , RESTARTS

, Running

.
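Scanning the STATUS and RESTARTS columns by eye gets tedious once the list grows. As a rough sketch, the output can be piped through a small filter; the `suspicious_pods` name and the default threshold of 3 restarts are arbitrary choices of mine.

```shell
# Hypothetical filter for `kubectl get pods --all-namespaces` output.
# Prints pods that are neither Running nor Completed, or that restarted
# more than max times (first argument, default 3).
suspicious_pods() {
    awk -v max="${1:-3}" 'NR > 1 && ($4 != "Running" && $4 != "Completed" || $5 + 0 > max) {
        print $1 "/" $2, $4, "restarts=" $5
    }'
}
```

Usage would be kubectl get pods --all-namespaces | suspicious_pods 5. The filter assumes the column order NAMESPACE, NAME, READY, STATUS, RESTARTS shown in the listing; an empty result means nothing stood out.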

Kubernetes needs a CNI plugin for POD networking. As the CNI I use Flannel. Install it:

kubectl apply -f https://github.com/coreos/flannel/raw/master/Documentation/kube-flannel.yml

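Then run kubectl get pods --all-namespaces and make sure the Flannel PODs are Running and their RESTARTS count is not growing. If something is wrong, look at the Flannel logs (substitute the name of your Flannel POD):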

kubectl logs -n kube-system kube-flannel-ds-xn2j9

Now join the remaining Control Plane nodes:

# ssh k8s-2

sudo kubeadm join k8s-cp:6443 --apiserver-advertise-address=10.120.28.37 --token tfqsms.kiek2vk129tpf0b7 --discovery-token-ca-cert-hash sha256:0c446bfabcd99aae7e650d110f8b9d6058cac432078c4fXXXXX6055b4bd --control-plane

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

# ssh k8s-3

sudo kubeadm join k8s-cp:6443 --apiserver-advertise-address=10.120.29.204 --token tfqsms.kiek2vk129tpf0b7 --discovery-token-ca-cert-hash sha256:0c446bfabcd99aae7e650d110f8b9d6058cac432078c4fXXXXXec6055b4bd --control-plane

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

The --token value is "short-lived"; once it has expired, generate a new one with kubeadm token create.
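The join commands for k8s-2 and k8s-3 differ only in the advertise address, so they are easy to get wrong when copied by hand. Below is a small sketch that assembles the command and rejects an obviously malformed token first. The function names are invented; the flags are the same ones used in the commands above, and the token pattern (6 characters, a dot, 16 characters, lowercase letters and digits) is the kubeadm bootstrap token format.

```shell
# Hypothetical helpers, not part of kubeadm itself.
valid_token() {
    printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}

# Arguments: endpoint, token, CA cert hash, this node's eth1 address.
build_cp_join() {
    if ! valid_token "$2"; then
        echo "malformed token: $2" >&2
        return 1
    fi
    printf 'kubeadm join %s:6443 --apiserver-advertise-address=%s --token %s --discovery-token-ca-cert-hash %s --control-plane\n' \
        "$1" "$4" "$2" "$3"
}

# Prints the command for k8s-2; sha256:HASH is a placeholder, not a real value.
build_cp_join k8s-cp tfqsms.kiek2vk129tpf0b7 sha256:HASH 10.120.28.37
```

The function only prints the command, so it can be reviewed before being run with sudo on the target node.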

Check that the Etcd cluster has formed:

kubectl get pods --all-namespaces | grep etcd

kube-system etcd-k8s-1 1/1 Running 0 2d23h

kube-system etcd-k8s-2 1/1 Running 1 2d22h

kube-system etcd-k8s-3 1/1 Running 1 2d22h

Everything should be Running. Here is the kubectl get pods --all-namespaces output for the full Control Plane:

NAME READY STATUS RESTARTS AGE

coredns-74ff55c5b-h2zjq 1/1 Running 0 2d23h

coredns-74ff55c5b-n6b49 1/1 Running 0 2d23h

etcd-k8s-1 1/1 Running 0 2d23h

etcd-k8s-2 1/1 Running 1 2d22h

etcd-k8s-3 1/1 Running 1 2d22h

kube-apiserver-k8s-1 1/1 Running 0 2d23h

kube-apiserver-k8s-2 1/1 Running 1 2d22h

kube-apiserver-k8s-3 1/1 Running 1 2d22h

kube-controller-manager-k8s-1 1/1 Running 1 2d23h

kube-controller-manager-k8s-2 1/1 Running 1 2d22h

kube-controller-manager-k8s-3 1/1 Running 1 2d22h

kube-flannel-ds-2f6d5 1/1 Running 0 2d3h

kube-flannel-ds-2p5vx 1/1 Running 0 2d3h

kube-flannel-ds-4ng99 1/1 Running 3 2d22h

kube-proxy-22jpt 1/1 Running 0 2d3h

kube-proxy-25rxn 1/1 Running 0 2d23h

kube-proxy-2qp8r 1/1 Running 0 2d3h

kube-scheduler-k8s-1 1/1 Running 1 2d23h

kube-scheduler-k8s-2 1/1 Running 1 2d22h

kube-scheduler-k8s-3 1/1 Running 1 2d22h

IP-, Control Plane.

Shared IP and DNS name

There are several options:

Keepalived;

Pacemaker;

kube-vip.

, . Keepalived

, - -, . , , - Ubuntu 18.04 VXLAN

-, Underlay. Tcpdump

VRRP

-, k8s-{1,2,3}

, IP- 3 , MASTER

-. debug , Keepalived

Pacemaker

. - Corosync

Pacemaker

, :

sudo systemctl enable corosync

sudo systemctl enable pacemaker

, , /etc/rc.local

.

In the end I set up the shared IP with kube-vip, which runs inside Kubernetes itself. Here is the kube-vip manifest for one Control Plane node:

apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  name: kube-vip-cp-k8s-1 # unique per node
  namespace: kube-system
spec:
  nodeName: k8s-1 # node name
  containers:
  - args:
    - start
    env:
    - name: vip_arp
      value: "true"
    - name: vip_interface
      value: eth1
    - name: vip_leaderelection
      value: "true"
    - name: vip_leaseduration
      value: "5"
    - name: vip_renewdeadline
      value: "3"
    - name: vip_retryperiod
      value: "1"
    - name: vip_address
      value: 10.120.0.1 # the shared IP
    image: plndr/kube-vip:0.3.1
    imagePullPolicy: Always
    name: kube-vip-cp
    resources: {}
    securityContext:
      capabilities:
        add:
        - NET_ADMIN
        - SYS_TIME
    volumeMounts:
    - mountPath: /etc/kubernetes/admin.conf
      name: kubeconfig
    - mountPath: /etc/ssl/certs
      name: ca-certs
      readOnly: true
  hostNetwork: true
  volumes:
  - hostPath:
      path: /etc/kubernetes/admin.conf
    name: kubeconfig
  - hostPath:
      path: /etc/ssl/certs
    name: ca-certs
status: {}

Apply the manifests for all three Control Plane nodes:

for i in 1 2 3; do kubectl apply -f cluster_config/vip-$i.yml; done
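The three manifests differ only in the node name, so they can be generated from one template instead of being edited by hand. This is just a sketch: the `__NODE__` placeholder and the `render_vip_manifests` function are my own invention, and the output file names follow the cluster_config/vip-N.yml layout used in the command above.

```shell
# Hypothetical generator: writes vip-1.yml .. vip-3.yml into outdir,
# replacing the __NODE__ placeholder in the template with k8s-1 .. k8s-3.
render_vip_manifests() {
    template=$1
    outdir=$2
    for i in 1 2 3; do
        sed "s/__NODE__/k8s-$i/g" "$template" > "$outdir/vip-$i.yml" || return 1
    done
}
```

In the template, the two lines that vary would read name: kube-vip-cp-__NODE__ and nodeName: __NODE__; a call such as render_vip_manifests vip.tpl.yml cluster_config then produces the three files.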

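This starts three PODs; one of them is elected leader and holds the address 10.120.0.1. Check that kube-vip has brought up the IP using arping: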

sudo arping 10.120.0.1

ARPING 10.120.0.1

42 bytes from 1e:01:17:00:01:22 (10.120.0.1): index=0 time=319.476 usec

42 bytes from 1e:01:17:00:01:22 (10.120.0.1): index=1 time=306.360 msec

42 bytes from 1e:01:17:00:01:22 (10.120.0.1): index=2 time=349.666 usec

kube-vip? IP, , , , .

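Now that kube-vip is running inside Kubernetes, go to gw-1 and gw-2 and update the k8s-cp entry in /etc/hosts.resolv:

10.120.0.1 k8s-cp

Restart pdns-recursor with sudo service pdns-recursor restart and check that k8s-cp now resolves to 10.120.0.1. Also check that kubectl still works through k8s-cp.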

Control Plane K8S. k8s-{1,2,3}

, , .

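Adding Worker nodes

The Worker nodes are gw-1 and gw-2, which will run Nginx Ingress, and a compute node (compute-1).

To join them, generate a join command: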

kubeadm token create --print-join-command

kubeadm join k8s-cp:6443 --token rn0s5p.y6waq1t6y2y6z9vw --discovery-token-ca-cert-hash sha256:0c446bfabcd99aae7e650d110f8b9d6058cac432078c4fXXXe22ec6055b4bd

# ssh gw-1

sudo kubeadm join k8s-cp:6443 --token rn0s5p.y6waq1t6y2y6z9vw --discovery-token-ca-cert-hash sha256:0c446bfabcd99aae7e650d110f8b9d6058cac432078c4fXXXe22ec6055b4bd

# ssh gw-2

...

# ssh compute-1

After that, kubectl get pods --all-namespaces should show the additional PODs, and kubectl get nodes should print:

kubectl get nodes
NAME        STATUS   ROLES                  AGE     VERSION
compute-1   Ready    compute                2d23h   v1.20.2
gw-1        Ready    gateway                2d4h    v1.20.2
gw-2        Ready    gateway                2d4h    v1.20.2
k8s-1       Ready    control-plane,master   2d23h   v1.20.2
k8s-2       Ready    control-plane,master   2d23h   v1.20.2
k8s-3       Ready    control-plane,master   2d23h   v1.20.2

, , . , .

Roles are assigned with labels:

kubectl label node gw-1 node-role.kubernetes.io/gateway=true

kubectl label node gw-2 node-role.kubernetes.io/gateway=true

kubectl label node compute-1 node-role.kubernetes.io/compute=true

# Remove a role label

kubectl label node compute-1 node-role.kubernetes.io/compute-
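Freshly joined worker nodes show up with `<none>` in the ROLES column until they are labeled. To see which nodes still need a label, the kubectl get nodes output can be filtered with a one-liner like the one below (the `unlabeled_nodes` name is hypothetical).

```shell
# Hypothetical filter for `kubectl get nodes` output: prints the names of
# nodes whose ROLES column is still "<none>".
unlabeled_nodes() {
    awk 'NR > 1 && $3 == "<none>" { print $1 }'
}
```

Usage would be kubectl get nodes | unlabeled_nodes; an empty result means every node already carries a role label.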

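Ingress

Now we can deploy Nginx Ingress on gw-1 and gw-2. Take the stock Nginx Ingress manifest and make the following changes:

run it with hostNetwork;

set the Deployment replica count to "2";

schedule it on nodes with the gateway role.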

hostNetwork

. , K8S - , API External IP, . , bare metal External IP :

kubectl get nodes --output wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

compute-1 Ready compute 3d v1.20.2 10.120.28.172 <none> Ubuntu 18.04.5 LTS 4.15.0-135-generic containerd://1.3.3

gw-1 Ready gateway 2d4h v1.20.2 10.120.29.231 <none> Ubuntu 18.04.5 LTS 4.15.0-135-generic containerd://1.3.3

gw-2 Ready gateway 2d4h v1.20.2 10.120.28.23 <none> Ubuntu 18.04.5 LTS 4.15.0-135-generic containerd://1.3.3

k8s-1 Ready control-plane,master 3d v1.20.2 10.120.29.187 <none> Ubuntu 18.04.5 LTS 4.15.0-135-generic containerd://1.3.3

k8s-2 Ready control-plane,master 2d23h v1.20.2 10.120.28.37 <none> Ubuntu 18.04.5 LTS 4.15.0-135-generic containerd://1.3.3

k8s-3 Ready control-plane,master 2d23h v1.20.2 10.120.29.204 <none> Ubuntu 18.04.5 LTS 4.15.0-135-generic containerd://1.3.3

, External IP API, Kubernetes . , .

GitHub, , metallb, . , Nginx Ingress, hostNetworking

, Ingress 0.0.0.0:443

, 0.0.0.0:80

.

As a result, the Nginx Ingress manifest looks like this:

apiVersion: v1

kind: Namespace

metadata:

name: ingress-nginx

labels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

---

# Source: ingress-nginx/templates/controller-serviceaccount.yaml

apiVersion: v1

kind: ServiceAccount

metadata:

labels:

helm.sh/chart: ingress-nginx-3.21.0

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 0.43.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: controller

name: ingress-nginx

namespace: ingress-nginx

---

# Source: ingress-nginx/templates/controller-configmap.yaml

apiVersion: v1

kind: ConfigMap

metadata:

labels:

helm.sh/chart: ingress-nginx-3.21.0

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 0.43.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: controller

name: ingress-nginx-controller

namespace: ingress-nginx

data:

---

# Source: ingress-nginx/templates/clusterrole.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

labels:

helm.sh/chart: ingress-nginx-3.21.0

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 0.43.0

app.kubernetes.io/managed-by: Helm

name: ingress-nginx

rules:

- apiGroups:

- ''

resources:

- configmaps

- endpoints

- nodes

- pods

- secrets

verbs:

- list

- watch

- apiGroups:

- ''

resources:

- nodes

verbs:

- get

- apiGroups:

- ''

resources:

- services

verbs:

- get

- list

- watch

- apiGroups:

- extensions

- networking.k8s.io # k8s 1.14+

resources:

- ingresses

verbs:

- get

- list

- watch

- apiGroups:

- ''

resources:

- events

verbs:

- create

- patch

- apiGroups:

- extensions

- networking.k8s.io # k8s 1.14+

resources:

- ingresses/status

verbs:

- update

- apiGroups:

- networking.k8s.io # k8s 1.14+

resources:

- ingressclasses

verbs:

- get

- list

- watch

---

# Source: ingress-nginx/templates/clusterrolebinding.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

labels:

helm.sh/chart: ingress-nginx-3.21.0

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 0.43.0

app.kubernetes.io/managed-by: Helm

name: ingress-nginx

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: ingress-nginx

subjects:

- kind: ServiceAccount

name: ingress-nginx

namespace: ingress-nginx

---

# Source: ingress-nginx/templates/controller-role.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: Role

metadata:

labels:

helm.sh/chart: ingress-nginx-3.21.0

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 0.43.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: controller

name: ingress-nginx

namespace: ingress-nginx

rules:

- apiGroups:

- ''

resources:

- namespaces

verbs:

- get

- apiGroups:

- ''

resources:

- configmaps

- pods

- secrets

- endpoints

verbs:

- get

- list

- watch

- apiGroups:

- ''

resources:

- services

verbs:

- get

- list

- watch

- apiGroups:

- extensions

- networking.k8s.io # k8s 1.14+

resources:

- ingresses

verbs:

- get

- list

- watch

- apiGroups:

- extensions

- networking.k8s.io # k8s 1.14+

resources:

- ingresses/status

verbs:

- update

- apiGroups:

- networking.k8s.io # k8s 1.14+

resources:

- ingressclasses

verbs:

- get

- list

- watch

- apiGroups:

- ''

resources:

- configmaps

resourceNames:

- ingress-controller-leader-nginx

verbs:

- get

- update

- apiGroups:

- ''

resources:

- configmaps

verbs:

- create

- apiGroups:

- ''

resources:

- events

verbs:

- create

- patch

---

# Source: ingress-nginx/templates/controller-rolebinding.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:

labels:

helm.sh/chart: ingress-nginx-3.21.0

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 0.43.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: controller

name: ingress-nginx

namespace: ingress-nginx

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: Role

name: ingress-nginx

subjects:

- kind: ServiceAccount

name: ingress-nginx

namespace: ingress-nginx

---

# Source: ingress-nginx/templates/controller-service-webhook.yaml

apiVersion: v1

kind: Service

metadata:

labels:

helm.sh/chart: ingress-nginx-3.21.0

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 0.43.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: controller

name: ingress-nginx-controller-admission

namespace: ingress-nginx

spec:

type: ClusterIP

ports:

- name: https-webhook

port: 443

targetPort: webhook

selector:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/component: controller

---

# Source: ingress-nginx/templates/controller-deployment.yaml

apiVersion: apps/v1

kind: DaemonSet

metadata:

labels:

helm.sh/chart: ingress-nginx-3.21.0

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 0.43.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: controller

node-role.kubernetes.io/gateway: "true"

name: ingress-nginx-controller

namespace: ingress-nginx

spec:

selector:

matchLabels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/component: controller

revisionHistoryLimit: 10

minReadySeconds: 0

template:

metadata:

labels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/component: controller

node-role.kubernetes.io/gateway: "true"

spec:

hostNetwork: true

dnsPolicy: ClusterFirst

containers:

- name: controller

image: k8s.gcr.io/ingress-nginx/controller:v0.43.0@sha256:9bba603b99bf25f6d117cf1235b6598c16033ad027b143c90fa5b3cc583c5713

imagePullPolicy: IfNotPresent

lifecycle:

preStop:

exec:

command:

- /wait-shutdown

args:

- /nginx-ingress-controller

- --election-id=ingress-controller-leader

- --ingress-class=nginx

- --configmap=$(POD_NAMESPACE)/ingress-nginx-controller

- --validating-webhook=:8443

- --validating-webhook-certificate=/usr/local/certificates/cert

- --validating-webhook-key=/usr/local/certificates/key

securityContext:

capabilities:

drop:

- ALL

add:

- NET_BIND_SERVICE

runAsUser: 101

allowPrivilegeEscalation: true

env:

- name: POD_NAME

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

- name: LD_PRELOAD

value: /usr/local/lib/libmimalloc.so

livenessProbe:

httpGet:

path: /healthz

port: 10254

scheme: HTTP

initialDelaySeconds: 10

periodSeconds: 10

timeoutSeconds: 1

successThreshold: 1

failureThreshold: 5

readinessProbe:

httpGet:

path: /healthz

port: 10254

scheme: HTTP

initialDelaySeconds: 10

periodSeconds: 10

timeoutSeconds: 1

successThreshold: 1

failureThreshold: 3

ports:

- name: http

containerPort: 80

protocol: TCP

- name: https

containerPort: 443

protocol: TCP

- name: webhook

containerPort: 8443

protocol: TCP

volumeMounts:

- name: webhook-cert

mountPath: /usr/local/certificates/

readOnly: true

resources:

requests:

cpu: 100m

memory: 90Mi

nodeSelector:

node-role.kubernetes.io/gateway: "true"

serviceAccountName: ingress-nginx

terminationGracePeriodSeconds: 300

volumes:

- name: webhook-cert

secret:

secretName: ingress-nginx-admission

---

# Source: ingress-nginx/templates/admission-webhooks/validating-webhook.yaml

# before changing this value, check the required kubernetes version

# https://kubernetes.io/docs/reference/access-authn-authz/extensible-admission-controllers/#prerequisites

apiVersion: admissionregistration.k8s.io/v1

kind: ValidatingWebhookConfiguration

metadata:

labels:

helm.sh/chart: ingress-nginx-3.21.0

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 0.43.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: admission-webhook

name: ingress-nginx-admission

webhooks:

- name: validate.nginx.ingress.kubernetes.io

matchPolicy: Equivalent

rules:

- apiGroups:

- networking.k8s.io

apiVersions:

- v1beta1

operations:

- CREATE

- UPDATE

resources:

- ingresses

failurePolicy: Fail

sideEffects: None

admissionReviewVersions:

- v1

- v1beta1

clientConfig:

service:

namespace: ingress-nginx

name: ingress-nginx-controller-admission

path: /networking/v1beta1/ingresses

---

# Source: ingress-nginx/templates/admission-webhooks/job-patch/serviceaccount.yaml

apiVersion: v1

kind: ServiceAccount

metadata:

name: ingress-nginx-admission

annotations:

helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade

helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded

labels:

helm.sh/chart: ingress-nginx-3.21.0

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 0.43.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: admission-webhook

namespace: ingress-nginx

---

# Source: ingress-nginx/templates/admission-webhooks/job-patch/clusterrole.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

name: ingress-nginx-admission

annotations:

helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade

helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded

labels:

helm.sh/chart: ingress-nginx-3.21.0

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 0.43.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: admission-webhook

rules:

- apiGroups:

- admissionregistration.k8s.io

resources:

- validatingwebhookconfigurations

verbs:

- get

- update

---

# Source: ingress-nginx/templates/admission-webhooks/job-patch/clusterrolebinding.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: ingress-nginx-admission

annotations:

helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade

helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded

labels:

helm.sh/chart: ingress-nginx-3.21.0

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 0.43.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: admission-webhook

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: ingress-nginx-admission

subjects:

- kind: ServiceAccount

name: ingress-nginx-admission

namespace: ingress-nginx

---

# Source: ingress-nginx/templates/admission-webhooks/job-patch/role.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: Role

metadata:

name: ingress-nginx-admission

annotations:

helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade

helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded

labels:

helm.sh/chart: ingress-nginx-3.21.0

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 0.43.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: admission-webhook

namespace: ingress-nginx

rules:

- apiGroups:

- ''

resources:

- secrets

verbs:

- get

- create

---

# Source: ingress-nginx/templates/admission-webhooks/job-patch/rolebinding.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:

name: ingress-nginx-admission

annotations:

helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade

helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded

labels:

helm.sh/chart: ingress-nginx-3.21.0

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 0.43.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: admission-webhook

namespace: ingress-nginx

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: Role

name: ingress-nginx-admission

subjects:

- kind: ServiceAccount

name: ingress-nginx-admission

namespace: ingress-nginx

---

# Source: ingress-nginx/templates/admission-webhooks/job-patch/job-createSecret.yaml

apiVersion: batch/v1

kind: Job

metadata:

name: ingress-nginx-admission-create

annotations:

helm.sh/hook: pre-install,pre-upgrade

helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded

labels:

helm.sh/chart: ingress-nginx-3.21.0

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 0.43.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: admission-webhook

namespace: ingress-nginx

spec:

template:

metadata:

name: ingress-nginx-admission-create

labels:

helm.sh/chart: ingress-nginx-3.21.0

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 0.43.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: admission-webhook

spec:

containers:

- name: create

image: docker.io/jettech/kube-webhook-certgen:v1.5.1

imagePullPolicy: IfNotPresent

args:

- create

- --host=ingress-nginx-controller-admission,ingress-nginx-controller-admission.$(POD_NAMESPACE).svc

- --namespace=$(POD_NAMESPACE)

- --secret-name=ingress-nginx-admission

env:

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

restartPolicy: OnFailure

serviceAccountName: ingress-nginx-admission

securityContext:

runAsNonRoot: true

runAsUser: 2000

---

# Source: ingress-nginx/templates/admission-webhooks/job-patch/job-patchWebhook.yaml

apiVersion: batch/v1

kind: Job

metadata:

name: ingress-nginx-admission-patch

annotations:

helm.sh/hook: post-install,post-upgrade

helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded

labels:

helm.sh/chart: ingress-nginx-3.21.0

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 0.43.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: admission-webhook

namespace: ingress-nginx

spec:

template:

metadata:

name: ingress-nginx-admission-patch

labels:

helm.sh/chart: ingress-nginx-3.21.0

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 0.43.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: admission-webhook

spec:

containers:

- name: patch

image: docker.io/jettech/kube-webhook-certgen:v1.5.1

imagePullPolicy: IfNotPresent

args:

- patch

- --webhook-name=ingress-nginx-admission

- --namespace=$(POD_NAMESPACE)

- --patch-mutating=false

- --secret-name=ingress-nginx-admission

- --patch-failure-policy=Fail

env:

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

restartPolicy: OnFailure

serviceAccountName: ingress-nginx-admission

securityContext:

runAsNonRoot: true

runAsUser: 2000

kubectl apply -f nginx-ingress.yaml

After that, the Nginx PODs should start on gw-1 and gw-2 and listen on ports 443 and 80:

kubectl get pods --all-namespaces | grep nginx

ingress-nginx ingress-nginx-admission-create-4mm9m 0/1 Completed 0 46h

ingress-nginx ingress-nginx-admission-patch-7jkwg 0/1 Completed 2 46h

ingress-nginx ingress-nginx-controller-b966cf6cd-7kpzm 1/1 Running 1 46h

ingress-nginx ingress-nginx-controller-b966cf6cd-ckl97 1/1 Running 0 46h

On gw-1 and gw-2:

sudo netstat -tnlp | grep -E ':(443|80)'

tcp 0 0 0.0.0.0:443 0.0.0.0:* LISTEN 2661/nginx: master

tcp 0 0 0.0.0.0:443 0.0.0.0:* LISTEN 2661/nginx: master

tcp 0 0 0.0.0.0:80 0.0.0.0:* LISTEN 2661/nginx: master

tcp 0 0 0.0.0.0:80 0.0.0.0:* LISTEN 2661/nginx: master

tcp6 0 0 :::443 :::* LISTEN 2661/nginx: master

tcp6 0 0 :::443 :::* LISTEN 2661/nginx: master

tcp6 0 0 :::80 :::* LISTEN 2661/nginx: master

tcp6 0 0 :::80 :::* LISTEN 2661/nginx: master

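You can now reach gw-1 and gw-2 on port 80 via their public addresses and get the Nginx page. From here, gw-1 and gw-2 can be put behind DNS, a CDN, and so on.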

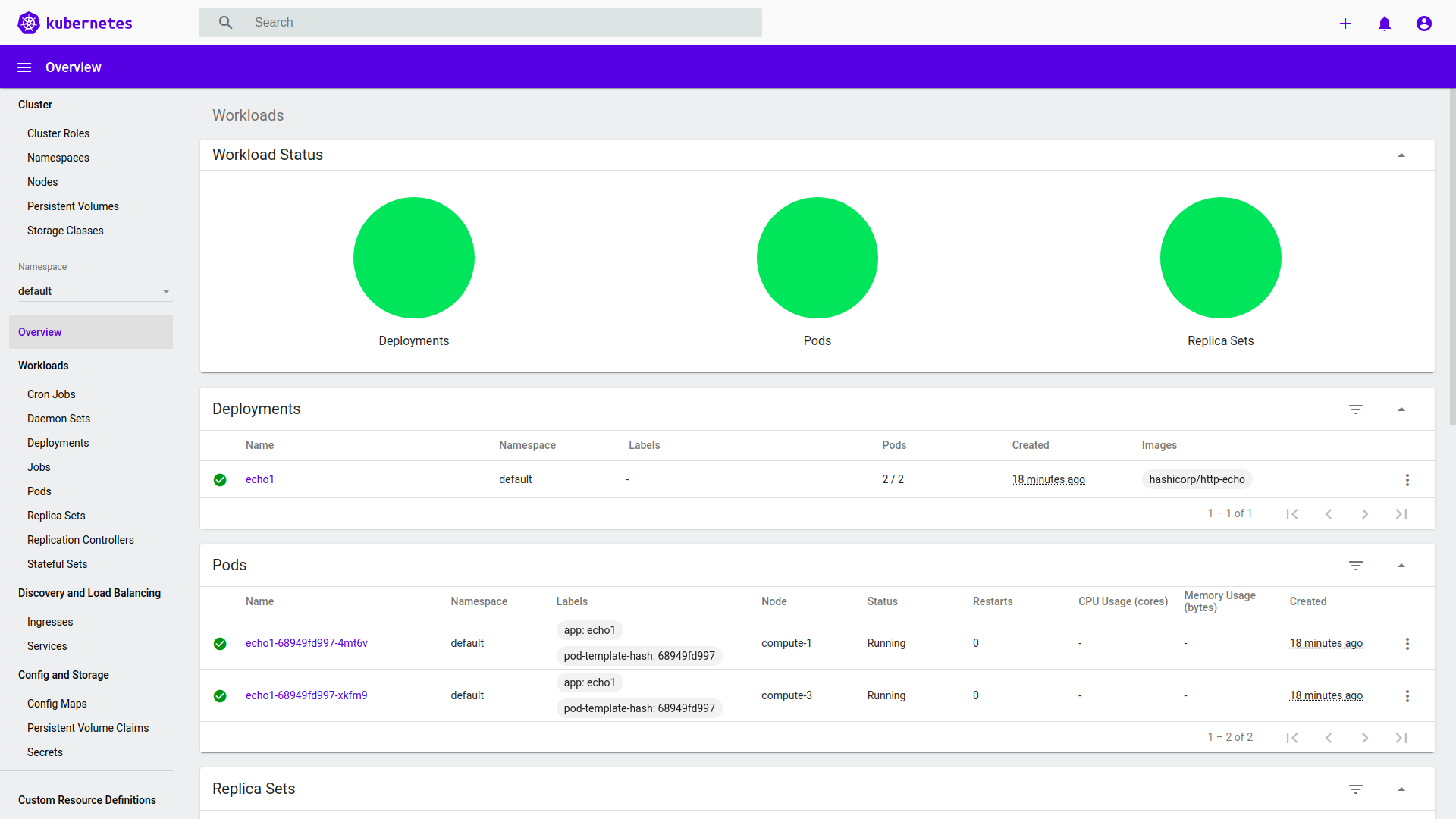

To test Ingress, deploy a test service behind it. Its manifest (echo1.yaml):

apiVersion: v1
kind: Service
metadata:
  name: echo1
spec:
  ports:
  - port: 80
    targetPort: 5678
  selector:
    app: echo1
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: echo1
spec:
  selector:
    matchLabels:
      app: echo1
  replicas: 2
  template:
    metadata:
      labels:
        app: echo1
    spec:
      containers:
      - name: echo1
        image: hashicorp/http-echo
        args:
        - "-text=echo1"
        ports:
        - containerPort: 5678

Apply it with kubectl apply -f echo1.yaml. Then create the Ingress rule (ingress-echo1.yaml):

apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: echo-ingress
spec:
  rules:
  - host: echo1.example.com
    http:
      paths:
      - backend:
          serviceName: echo1
          servicePort: 80

Apply it with kubectl apply -f ingress-echo1.yaml. Now, on your own machine, add echo1.example.com to /etc/hosts:

127.0.0.1 localhost

127.0.1.1 manager

# The following lines are desirable for IPv6 capable hosts

::1 localhost ip6-localhost ip6-loopback

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

X.Y.Z.C echo1.example.com

Requests to this name now go through Nginx Ingress. Check it with curl:

curl echo1.example.com

echo1

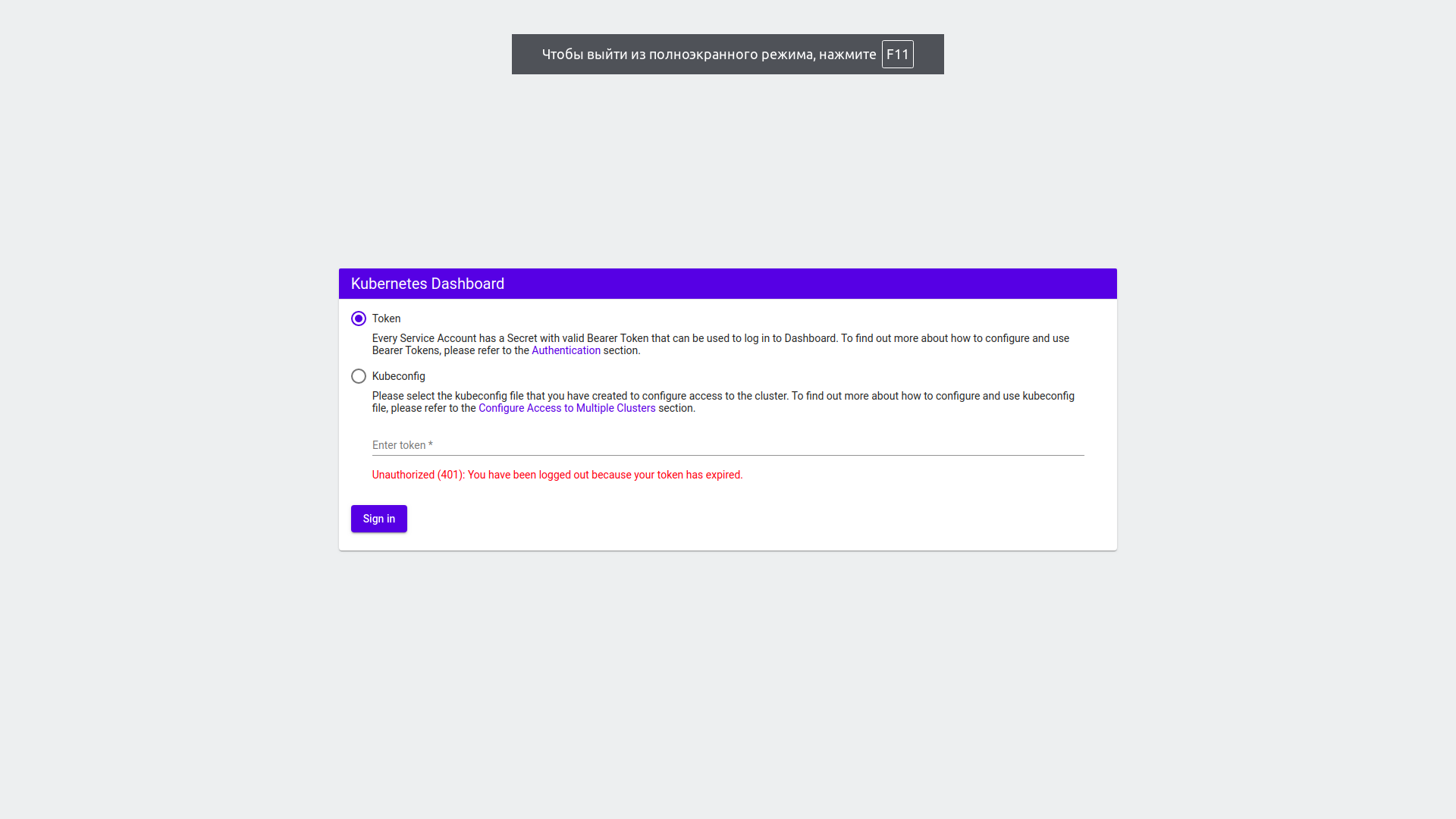

K8S Dashboard

Install the Dashboard:

kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.0/aio/deploy/recommended.yaml

Dashboard , , http

, localhost

. , - Dashboard Ingress. , Dashboard RBAC Kubernetes, Dashboard.

Create a user for the Dashboard. First, the service account:

cat <<EOF | kubectl apply -f -
apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kubernetes-dashboard
EOF

Then the role binding:

cat <<EOF | kubectl apply -f -
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kubernetes-dashboard
EOF

Now get the token for logging in to the Dashboard:

kubectl -n kubernetes-dashboard get secret $(kubectl -n kubernetes-dashboard get sa/admin-user -o jsonpath="{.secrets[0].name}") -o go-template="{{.data.token | base64decode}}"

If kubectl on your machine is configured through $HOME/.kube/config and k8s-cp is "visible" from it, you can run kubectl proxy and open the Dashboard at http://localhost:8001/api/v1/namespaces/kubernetes-dashboard/services/https:kubernetes-dashboard:/proxy/.

Otherwise, the Kubernetes API can be forwarded through HAProxy.

Forwarding the K8S API

Install haproxy on gw-1 and gw-2. In /etc/haproxy/haproxy.cfg, starting from the defaults section, put:

defaults

# mode is inherited by sections that follow

mode tcp

frontend k8s

# receives traffic from clients

bind :6443

default_backend kubernetes

backend kubernetes

# relays the client messages to servers

server k8s k8s-cp:6443

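The K8S API is now reachable from outside. On your own machine, add an entry to /etc/hosts that points k8s-cp at the public address of gw-1 or gw-2. kubectl should then work locally, so start kubectl proxy: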

kubectl proxy

Starting to serve on 127.0.0.1:8001

Open http://localhost:8001/api/v1/namespaces/kubernetes-dashboard/services/https:kubernetes-dashboard:/proxy/ to get to the K8S Dashboard.

To log in to the Dashboard, get the token:

kubectl -n kubernetes-dashboard get secret $(kubectl -n kubernetes-dashboard get sa/admin-user -o jsonpath="{.secrets[0].name}") -o go-template="{{.data.token | base64decode}}"

eyJhbGciOiJSUzI1NiIsImtpZCI6IlFkcGxwMTN2YlpyNS1TOTYtUnVYdsfadfsdjfksdlfjm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyLXRva2VuLWd6anprIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiJjM2RiOWFkMS0yYjdmLTQ3YTYtOTM3My1hZWI2ZjJkZjk0NTAiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZXJuZXRlcy1kYXNoYm9hcmQ6YWRtaW4tdXNlciJ9.ht-RyqLXY4UzBnxIzJcnQn8Kk2iHWZzKVeAjUhKkJ-vOAtyqBu50rExgiRWsEHWfJp1p9xN8sXFKg62FFFbHWftPf6PmwfcBK2lXPy6OL9OaooP17WJVn75KKXIZHPWLO9VmRXPB1S-v2xFuG_J8jHB8pZHJlFjp4zIXfB--QwhrxeoTt5zv3CfXCl1_VYgCoqaxRsa7e892-vtMijBo7EZfJiyuAUQr_TsAIDY3zOWFJeHbwPFWzL_1fF9Y2o3r0pw7hYZHUoI8Z-3hbfOi10QBRyQlNZTMFSh7Z38RRbV1tw2ZmMvgSQyHa9TZFy2kYik6VnugNB2cilamo_b7hg

Dashboard:

As I wrote at the beginning, I already had experience deploying container management systems based on Docker and Apache Mesos (DC/OS). Even so, the Kubernetes documentation struck me as complex, confusing, and fragmentary. To get to this result I went through a lot of third-party tutorials, GitHub issues and, of course, the Kubernetes documentation. I hope this guide saves you some time.