What is Airflow?

Apache Airflow is an advanced workflow manager and an indispensable tool in the modern data engineer's arsenal.

Airflow lets you define workflows as directed acyclic graphs (DAGs) of tasks. A set of command-line utilities performs complex operations on DAGs, and the user interface makes it easy to visualize pipelines running in production, monitor their progress, and troubleshoot problems when needed.

With Airflow you create, schedule, and monitor workflows programmatically. Its core abstraction is the DAG (Directed Acyclic Graph): a set of tasks, ideally idempotent, that Airflow runs at specified intervals.
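To make the DAG idea concrete, here is a minimal sketch in plain Python. It is not Airflow's own API: it only shows that tasks are nodes, dependencies are edges, and a valid run order is a topological order. The task names (`extract`, `transform`, `load`, `report`) are hypothetical, and `graphlib` requires Python 3.9 or newer.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
dependencies = {
    "extract": set(),
    "transform": {"extract"},
    "load": {"transform"},
    "report": {"load"},
}


def run_order(deps):
    """Return one valid execution order.

    Raises CycleError if the dependencies contain a cycle,
    i.e. if the graph is not actually acyclic.
    """
    return list(TopologicalSorter(deps).static_order())


order = run_order(dependencies)
print(order)
```

A scheduler such as Airflow applies the same principle: a task becomes eligible to run only after everything it depends on has finished.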

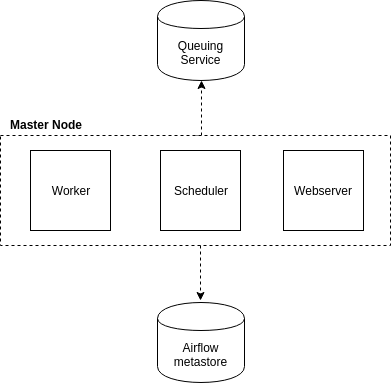

Cluster with one Airflow node

In a single-node Airflow cluster, all components (worker, scheduler, web server) are installed on a single node known as the "Master node". To scale a single-node cluster, Airflow must be configured with LocalExecutor. Workers pull tasks from an IPC (inter-process communication) queue, which scales well as long as resources are available on the Master node. To scale Airflow across multiple nodes, you need to enable CeleryExecutor.
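The pull model described above can be sketched in a few lines of Python. This is only an illustration under simplifying assumptions, not how LocalExecutor is implemented: threads stand in for worker processes, `queue.Queue` stands in for the IPC queue, and the function and task names are hypothetical.

```python
import queue
import threading


def run_tasks(task_names, num_workers=2):
    """Run each named task once using workers that pull from a shared queue."""
    tasks = queue.Queue()
    for name in task_names:
        tasks.put(name)

    done = []
    lock = threading.Lock()

    def worker():
        while True:
            try:
                name = tasks.get_nowait()  # pull the next task, if any
            except queue.Empty:
                return  # queue drained: this worker exits
            with lock:
                done.append(name)  # stand-in for actually executing the task

    workers = [threading.Thread(target=worker) for _ in range(num_workers)]
    for w in workers:
        w.start()
    for w in workers:
        w.join()
    return done


finished = run_tasks(["task_a", "task_b", "task_c", "task_d"])
print(sorted(finished))
```

Because workers take work only when they are free, adding workers increases throughput until the node runs out of CPU or memory. That limit is what moving to multiple nodes removes.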

Airflow single node architecture

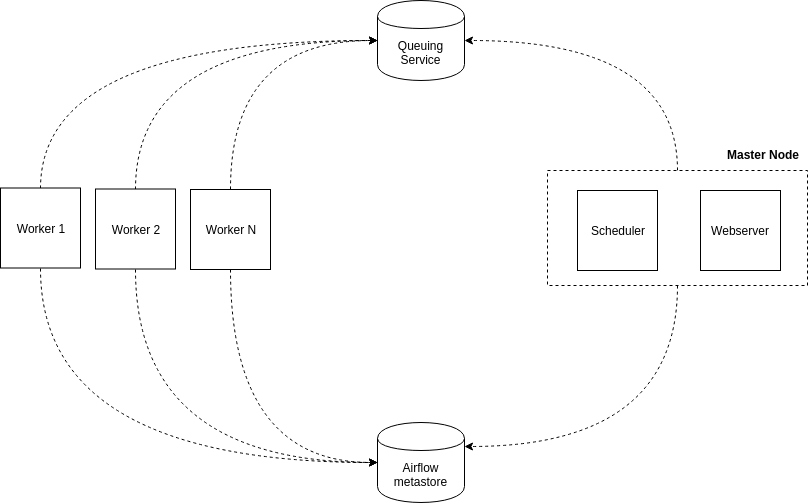

Airflow multinode cluster

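In a multi-node Airflow cluster, the Airflow components are distributed across several nodes. To use this architecture, Airflow must be configured with CeleryExecutor.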

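Celery backend for CeleryExecutor

CeleryExecutor requires a Celery backend to be configured for Airflow. Common choices for the Celery backend are Redis and RabbitMQ. RabbitMQ is a message broker: instead of the IPC queue used in the single-node setup, tasks are passed through RabbitMQ message queues, and Celery workers pick them up from those queues and execute them.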

Celery:

Celery is a distributed task queue. Airflow uses it to run tasks on multiple worker nodes, so Airflow can be scaled out by adding workers.

Installing and configuring Airflow with Celery:

Note: the steps below use CentOS 7 Linux.

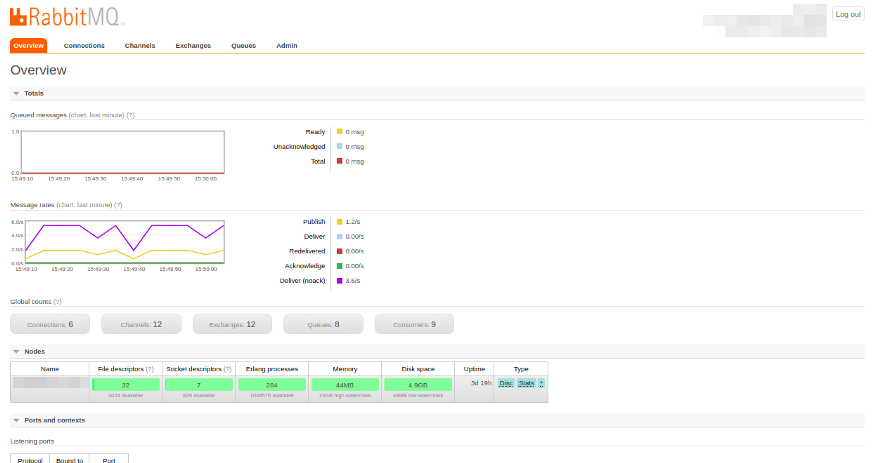

- Install RabbitMQ

yum install epel-release
yum install rabbitmq-server

- Enable and start the RabbitMQ server

systemctl enable rabbitmq-server.service
systemctl start rabbitmq-server.service

- Enable the RabbitMQ web management interface

rabbitmq-plugins enable rabbitmq_management

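The RabbitMQ management interface listens on port 15672.

The login and password used for the web console here are admin/admin. Note that a stock RabbitMQ installation creates only the guest/guest account, which can connect from localhost only.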

- Install the pyamqp transport for RabbitMQ and the PostgreSQL adapter

pip install pyamqp

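amqp:// is an alias that uses librabbitmq if it is available and py-amqp otherwise. Use pyamqp:// or librabbitmq:// if you want to specify exactly which transport to use. The pyamqp:// transport uses the amqp library (http://github.com/celery/py-amqp).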
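The transport name is simply the scheme of the broker URL. The sketch below splits such a URL into its parts using only the standard library, which can help when checking a connection string by eye. The helper name `describe_broker` and the host `rabbitmq.example.com` are hypothetical; the code only parses the string and does not contact a broker.

```python
from urllib.parse import urlsplit


def describe_broker(url):
    """Split a broker URL into transport, credentials, host, port and vhost."""
    parts = urlsplit(url)
    return {
        "transport": parts.scheme,   # e.g. amqp, pyamqp, librabbitmq
        "user": parts.username,
        "host": parts.hostname,
        "port": parts.port,
        "vhost": parts.path or "/",  # an empty path is treated here as the default vhost
    }


info = describe_broker("pyamqp://guest:guest@rabbitmq.example.com:5672/")
print(info)
```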

PostgreSQL adapter: psycopg2

Psycopg is a PostgreSQL adapter for Python.

pip install psycopg2

- Install Airflow.

pip install 'apache-airflow[all]'

Check the Airflow version:

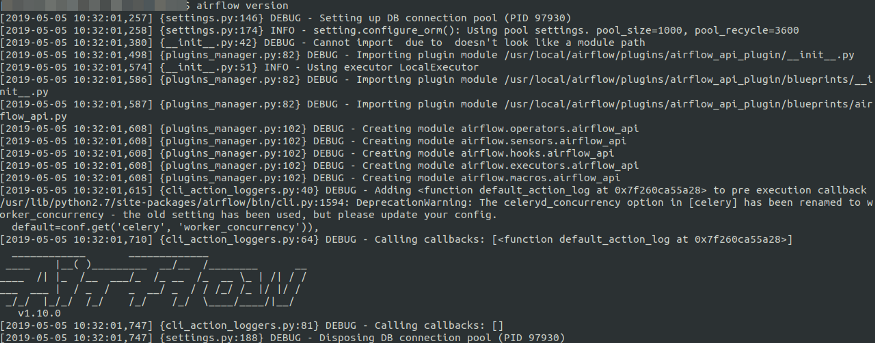

airflow version

This walkthrough uses Airflow v1.10.0.

airflow initdb

This command initializes the Airflow metadata database. Run it before running any DAGs.

- Install Celery

Celery must be installed on every node that runs an Airflow component.

pip install celery==4.3.0

Check the Celery version:

celery --version
4.3.0 (rhubarb)

- Edit airflow.cfg to use the Celery Executor.

executor = CeleryExecutor
sql_alchemy_conn = postgresql+psycopg2://airflow:airflow@{HOSTNAME}/airflow
broker_url = pyamqp://guest:guest@{RabbitMQ-HOSTNAME}:5672/
celery_result_backend = db+postgresql://airflow:airflow@{HOSTNAME}/airflow
dags_are_paused_at_creation = True
load_examples = False
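Before restarting anything, it can help to check that the edited file really contains the Celery settings. The sketch below does that with Python's `configparser`. It rests on some assumptions: `executor` is taken to live in a `[core]` section and `broker_url` and `celery_result_backend` in a `[celery]` section, the host names `db.example.com` and `rabbitmq.example.com` are placeholders, and `check_celery_settings` is a hypothetical helper, not part of Airflow.

```python
import configparser

# Sample configuration text; section placement and host names are assumptions.
SAMPLE_CFG = """
[core]
executor = CeleryExecutor
sql_alchemy_conn = postgresql+psycopg2://airflow:airflow@db.example.com/airflow
dags_are_paused_at_creation = True
load_examples = False

[celery]
broker_url = pyamqp://guest:guest@rabbitmq.example.com:5672/
celery_result_backend = db+postgresql://airflow:airflow@db.example.com/airflow
"""


def check_celery_settings(cfg_text):
    """Return a list of problems found in the Celery-related settings."""
    # interpolation=None keeps any '%' characters in URLs literal
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(cfg_text)

    problems = []
    if parser.get("core", "executor", fallback="") != "CeleryExecutor":
        problems.append("executor is not CeleryExecutor")
    if not parser.get("celery", "broker_url", fallback=""):
        problems.append("broker_url is missing")
    if not parser.get("celery", "celery_result_backend", fallback=""):
        problems.append("celery_result_backend is missing")
    return problems


problems = check_celery_settings(SAMPLE_CFG)
print(problems or "Celery settings look complete")
```

To check a real file, read its contents and pass the text to `check_celery_settings`.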

After making these changes in airflow.cfg, update the Airflow metadata with the command airflow initdb, and then restart Airflow.

- Start the Airflow web server and scheduler

# default port is 8080
airflow webserver -p 8000

# start the scheduler
airflow scheduler

Then start an Airflow worker on each worker node:

airflow worker

Once you have started the various Airflow services, you can open the Airflow web interface at:

http://<IP-ADDRESS/HOSTNAME>:8000

We use port 8000 here because it was passed to the webserver start command. Without the -p option, the web server listens on the default port 8080.

Yes! We have finished creating a cluster with the Airflow multinode architecture. :)