Systems for converting text queries to SQL

What such a system should be able to do:

- Find entities in the text that correspond to database entities: tables, columns, sometimes values.

- Link tables, form filters.

- Define a set of returned data, that is, make a select list.

- Determine the sampling order and number of rows.

- Identify, in addition to the relatively obvious ones, some absolutely implicit dependencies or filters that are opaque to anyone except the designers of the base schema (see the condition for the bonus_type field in the picture above)

- Resolve ambiguities when selecting entities. “Give me data on Ivanov” - should you request information on a counterparty or an employee with that last name? “Employee data for February” - limit the sample by hiring date or sales date? etc.

That is, at the first step, you need to parse the query, just like when working with all other NLP systems, and then either generate SQL on the fly, or find some most suitable intent, in the function of which a previously prepared parameterized SQL query is written. At first glance, the first option looks much more impressive. Let's talk about it in more detail.

The peculiarity of such systems is that, in fact, only one intent is registered in them, which is triggered for everything that has at least some relation to the model, with some super-function that generates SQL for all types of queries. SQL can be created based on any rules, algorithmically or with the participation of neural networks.

Algorithms and rules

At first glance, the task of converting a parsed sentence into SQL is a purely algorithmic problem, that is, it can be solved without problems. It seems that we have everything we need to convert one strong model to another: recognized entities, references, co-references, etc. But, unfortunately, the nuances and ambiguities, as always, complicate everything, and in this case they make the 100% universal approach almost inoperative. Models are imperfect (see examples above and further along the article), entities intersect, both in names and in meaning, the growth of complexity with an increase in the number of entities and the complexity of the base becomes nonlinear.

Neural networks

The use of neural networks for such systems is a rapidly developing area. Within the framework of this article, I will confine myself to links and brief conclusions.

I advise you to read a short series of articles: 1 , 2 , 3 , 4 , 5 , they contain quite a bit of theory, a story about how training and quality testing are conducted, a brief overview of solutions. Additionally, here - more about SparkNLP. Here - about Photon solution from SalesForce. According to the reference one more representative of the open source community - Allennlp. Here- data on the quality of systems, that is, test rates. Here is data on the use of NLP libraries and, in particular, similar solutions in an enterprise.

This direction has a great future, but again with reservations - not yet for all types of models. If, when working with a model, you do not need to obtain completely strict numbers and guaranteed accurate, repeatable, predictable results (for example, you need to determine a trend, compare indicators, identify dependencies, etc.) - everything is fine. But the non-determinism and probabilistic nature of the answers impose restrictions on the use of such an approach for a number of systems.

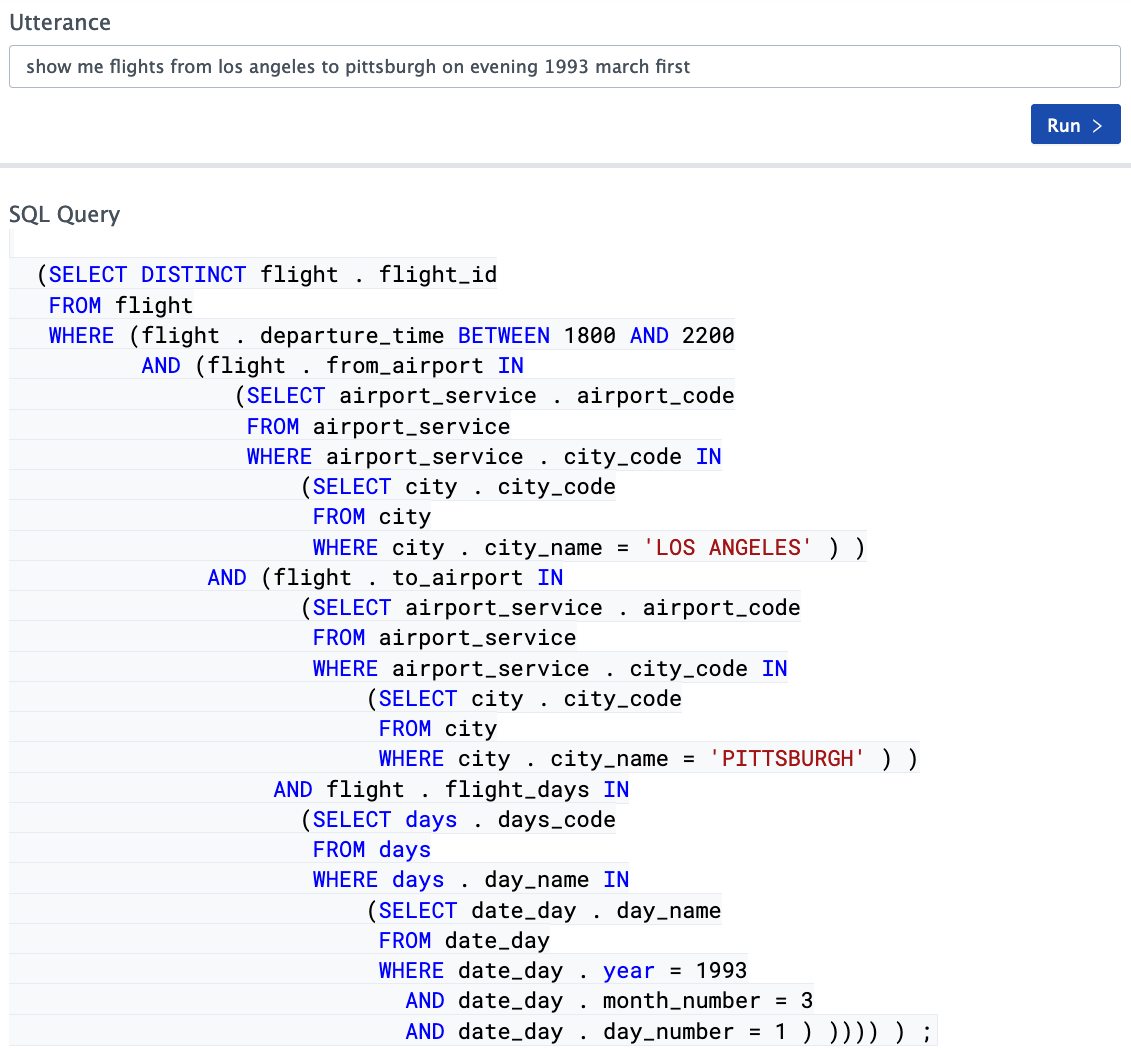

Examples of working with systems based on neural networks

Oftentimes, companies that provide services of this kind show excellent results on well-made videos and then offer to contact them for a detailed conversation. But there are also online demos available on the net. It is especially convenient to experiment with Photon , since in this case the base diagram is right before your eyes. The second demo I saw in the public domain is from Allennlp. The parsing of some queries is surprising in its sophistication, some options are slightly less successful. The overall impression is mixed, try playing with these demos if you are interested and form your opinion.

In general, the situation is quite interesting. Systems for automatic translation of textual unstructured queries into SQL based on neural networks are getting better and better, the quality of passing test sets is getting higher and higher, but still their value does not exceed 70% at best ( spider dataset - about 69% today day). Can this result be considered good? From the point of view of the development of such systems, yes, of course, the results are impressive, but it is far from possible to use them in real systems without modification for all types of tasks.

Apache NlpCraft Tools

How can the Apache NlpCraft project help in building and organizing such systems? If there are no questions about the first part of the problem (parsing a text query), everything is as usual, then for the second part (formation of SQL queries based on NLP data), NlpCraft does not provide a 100% complete solution, but only tools that help in solving this problem independently ...

Where to begin? If we want to automate the development process as much as possible, the metadata of the database schema and the data itself will help us. We will list what information we can extract from the database and for simplicity we will restrict ourselves to tables, we will not try to analyze triggers, stored procedures, etc.

- — . , .

- (null / not null) (where clause).

- , foreign keys , 1:1, 1:0, 1:n, n:m. joins.

- . , , .. , select list.

- . . - — , — enumeration, . .

- . , . .

- Primary and unique keys — , , , .

- (, , Oracle) — .

- Check constraints - knowledge of constraints can help in building all the same filters on these columns.

Thus, if you have obtained metadata, then you already know a lot about the entities of the model. So, for example, in some ideal world, you know almost everything about the table below:

CREATE TABLE users (

id number primary key,

first_name varchar(32) not null,

last_name varchar(64) not null unique,

birthday date null,

salary_level_id number not null foreign key on salary_level(id)

);

In reality, everything will not be so rosy, names will be abbreviated and unreadable, data types will often turn out to be completely unexpected, and denormalized fields and hastily added tables like 1: 0 will be scattered here and there. As a result, to be realistic, most of the databases that have been in production for a long time can only be used with great difficulty for recognizing entities without any preliminary preparation. This applies to any systems, and based on neural networks, perhaps even more so than others.

In this situation, it is advisable to give the NLP module access to a somewhat refined scheme - a pre-prepared set of views, with the correct field names, a necessary and sufficient set of tables and columns, security issues, etc.

Getting started designing

The main and very simple idea is that it is almost impossible to cover all user requests. If the user sets a goal to deceive the system and wants to ask a question that will confuse it, he will easily do it. The developer's task is to strike a balance between the capabilities of the system being developed and the complexity of its implementation. Hence, too, a very simple advice - do not try to support one universal intent that answers all questions, with one universal method that generates SQL for all these options. Try to give up 100% versatility, it will make the project a little less colorful, but more realizable.

- Ask users and write down 30-40 of the most common types of questions.

- , , , ..

- . SQL, 20-30 . , . SQL ML text2Sql, .

- . — , , , . — SQL . C — , .

With such a volume of work and sufficient resources, the time required to solve such a problem is measured in days, and at the end you have 80% coverage of user needs, and with a fairly high quality of performance. Then go back to the first point and add more intents.

The easiest way to explain why it is worth supporting multiple intents is with an example. Almost always users are interested in a certain number of very non-standard reports, something like “compare me such and such for such and such a period, but not included in such and such a period and at the same time ...”. No system will be able to immediately generate SQL for such a query, you will have to either somehow train it, or select and separately program such cases. Being able to respond to a limited range of intricate queries is very important to your users. Look for a balance again, not the fact that there will be enough resources to satisfy all such requests, but completely ignoring such wishes means narrowing the system's functionality to an unacceptable level. If you find the right ratio,your system will take a finite amount of development time and will not be just a fun toy for a few days, causing annoyance rather than usefulness later on. A very important point is that you can add intents for tricky requests not immediately, but in the process, one by one. We have MVP with only one universal intent at once.

Toolkit and API

Apache NlpCraft offers a toolkit to simplify database manipulation.

Operating procedure:

- Generate model template from database jdbc url. As I mentioned above, it is sometimes better to prepare a set of views with a more “correct” representation of the data and provide access to that set. The easiest way to generate a template is to use the CLI utility . We launch the utility, specify the database schema, jdbc driver, a list of used and ignored tables and other parameters as parameters, see the documentation for more details .

- JSON YAML , , , , .., , .

:

- id: "tbl:orders" groups: - "table" synonyms: - "orders" metadata: sql:name: "orders" sql:defaultselect: - "order_id" - "customer_id" - "employee_id" sql:defaultsort: - "orders.order_id#desc" sql:extratables: - "customers" - "shippers" - "employees" description: "Auto-generated from 'orders' table." ..... - id: "col:orders_order_id" groups: - "column" synonyms: - "{order_id|order <ID>}" - "orders {order_id|order <ID>}" - "{order_id|order <ID>} <OF> orders" metadata: sql:name: "order_id" sql:tablename: "orders" sql:datatype: 4 sql:isnullable: false sql:ispk: true description: "Auto-generated from 'orders.order_id' column."

- — , , . , . , , , , , , .. .

- Based on the rich model, the developer can use a compact API that greatly facilitates the construction of SQL queries in the intent function - see a detailed example .

Below is a code snippet for clarity:

@NCIntent(

"intent=commonReport " +

"term(tbls)~{groups @@ 'table'}[0,7] " +

"term(cols)~{

id == 'col:date' ||

id == 'col:num' ||

id == 'col:varchar'

}[0,7] " +

"term(condNums)~{id == 'condition:num'}[0,7] " +

"term(condVals)~{id == 'condition:value'}[0,7] " +

"term(condDates)~{id == 'condition:date'}[0,7] " +

"term(condFreeDate)~{id == 'nlpcraft:date'}? " +

"term(sort)~{id == 'nlpcraft:sort'}? " +

"term(limit)~{id == 'nlpcraft:limit'}?"

)

def onCommonReport(

ctx: NCIntentMatch,

@NCIntentTerm("tbls") tbls: Seq[NCToken],

@NCIntentTerm("cols") cols: Seq[NCToken],

@NCIntentTerm("condNums") condNums: Seq[NCToken],

@NCIntentTerm("condVals") condVals: Seq[NCToken],

@NCIntentTerm("condDates") condDates: Seq[NCToken],

@NCIntentTerm("condFreeDate") freeDateOpt: Option[NCToken],

@NCIntentTerm("sort") sortTokOpt: Option[NCToken],

@NCIntentTerm("limit") limitTokOpt: Option[NCToken]

): NCResult = {

val ext = NCSqlExtractorBuilder.build(SCHEMA, ctx.getVariant)

val query =

SqlBuilder(SCHEMA).

withTables(tbls.map(ext.extractTable): _*).

withAndConditions(extractValuesConditions(ext, condVals): _*).

...

// SQL

// .

}

Here is a fragment of the default intent function that reacts to any base element defined in the request and is triggered if no stricter matches were found during the matching process. It demonstrates the use of the SQL elements extractor API, which is involved in building SQL queries, as well as working with the SQL builder example.

Once again, I would like to emphasize that Apache NlpCraft does not provide a ready-made tool for translating a parsed text query into SQL, this task is outside the scope of the project, at least in the current version. The query builder code is available in examples, not in the API, it has significant limitations, but it also consists of only 500 lines of code with comments, or about 300 without them. At the same time, despite all its simplicity and even its limitations, even this simplest implementation is able to generate the necessary SQL for a very significant number of the most diverse types of user queries. In this version, we suggest our users interested in building similar systems to use this exampleas a template and develop it to suit your needs. Yes, this is not a task for one evening, but you will get a result of incomparably higher quality than when using universal solutions head on.

I repeat that in the default intent function, you can either just modify the examples from the example (according to reviews, its functionality may well be enough), or use solutions with neural networks.

Conclusion

Building a system for accessing a database is not an easy task, but Apache NlpCraft has already taken over a considerable part of the routine work, and largely due to this, the development of a decent quality system will take measurable time and resources. Whether the Apache NlpCraft community will develop the direction of automating the translation of text queries into SQL and expand this simple SQL example to a full-fledged API - time and user requests that form the plan and direction of the project will show.