Several years ago, engineers at Boston Dynamics filmed a series of videos in which people shove robots with hockey sticks and other objects. The videos quickly went viral, and many viewers predicted an imminent uprising of robots against their tormentors.

Of course, none of this was done for fun: the robots were being taught to respond to unexpected external disturbances. This is very difficult, because a huge number of different scenarios have to be anticipated. Researchers from Zhejiang University (China) and the University of Edinburgh (UK) have now followed in the footsteps of Boston Dynamics. The research team developed a sophisticated program for teaching robots to cope with falls, jolts, skids, and so on. By all appearances, the program is very effective.

The main task is to teach robots to recover quickly after being knocked over or literally dropped. Why? If a robot has to work outside the laboratory, in the ordinary world, it will fall again and again. Somewhere the machine will slip, or someone will push it, for example on a street at rush hour while a courier robot is delivering a parcel. And that is before we consider a robot working as a rescuer or a terrain scout.

Whatever job a robot ends up with, it needs special skills: one set for a courier, another for a rescuer, a third for a geologist's assistant. In every case there will be some unique skills plus universal ones, such as the ability to get up quickly after a fall.

The group of experts from China and the UK is building a software platform for training a robot, in this case a robot dog. They have already developed a self-learning system with eight basic algorithms that allow a mechanical dog to learn to interact with the real world. Training relies on a neural network trained with reinforcement learning. First, the neural network trains virtual robots, that is, simulated models of the robot, working out different responses to external disturbances. Once this stage is complete, the result of the training, in the form of a set of algorithms, is uploaded into a real robot, and we get a system that is already trained. All this can be compared to the programs uploaded to the characters in The Matrix: one upload and you know kung fu, a second and you can fly a helicopter, a third and you are a weapons expert.

It is much the same with a robot. The finished program is uploaded, and a previously motionless machine already knows how to get up after a fall, walk around obstacles, walk on ice, and so on.

Such a training system is much more effective than trial and error with a real robot. To learn how to act correctly in difficult situations, the neural network runs thousands or even millions of simulations. A real robot put through all these tests would break after the tenth or hundredth fall. In simulation you can do anything, even drop the system from a skyscraper if the training calls for it.

Another feature of the training is that the robot's basic skills are initially trained separately. As mentioned above, there are eight such skills, each with its own algorithm. If earlier we compared the training scheme with The Matrix, here a football team is the better analogy. Each skill can be compared to an individual member of the team, a goalkeeper or a midfielder. Each player is trained in a particular set of skills, and once they reach a certain level, together they become an effective team. A robot works in roughly the same way: it is taught to stand up separately, to walk around obstacles separately, and so on. Then all these skills are brought together. The main thing is to merge the acquired skills into a single flexible system in which nothing contradicts or interferes with anything else.
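To make the "team of skills" idea more concrete, here is a minimal sketch of one common way to combine separately trained skills: a gating function that assigns each skill a weight and blends their outputs. This is an illustration only, not the researchers' actual code. The two skills (`stand_up`, `trot`), the `tilt` state variable, and the gating rule are all hypothetical; in a real system both the skills and the gate would be learned networks rather than hand-written functions.

```python
import math

def softmax(scores):
    """Turn arbitrary scores into positive weights that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical skills: each maps a state to a 2-number motor command.
# Real skills would be trained networks; constants keep the sketch readable.
def stand_up(state):
    return [1.0, 0.0]

def trot(state):
    return [0.0, 1.0]

def gate(state):
    """Toy gating rule (an assumption): the more the body is tilted
    (0 = upright, 1 = lying on its side), the more weight 'stand_up' gets."""
    tilt = abs(state["tilt"])
    return softmax([4.0 * tilt, 4.0 * (1.0 - tilt)])

def blended_command(state, skills=(stand_up, trot)):
    """Weighted sum of all skill outputs, so skills cooperate instead of
    switching abruptly from one to another."""
    weights = gate(state)
    commands = [skill(state) for skill in skills]
    size = len(commands[0])
    return [sum(w * c[i] for w, c in zip(weights, commands)) for i in range(size)]

upright = blended_command({"tilt": 0.0})   # mostly 'trot'
halfway = blended_command({"tilt": 0.5})   # an even mix of both skills
fallen = blended_command({"tilt": 1.0})    # mostly 'stand_up'
print(upright, halfway, fallen)
```

Blending the outputs, rather than picking a single winner, is what lets the combined system pass smoothly from "getting up" to "walking" without the skills fighting each other.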

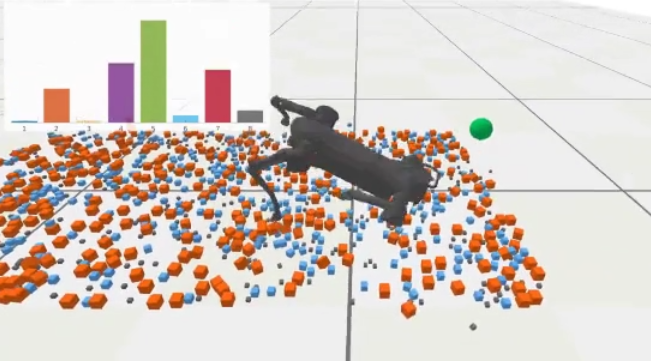

The picture above shows how a robot is taught to walk over rough terrain strewn with stones. It follows its target, a virtual green ball, and falls from time to time. Each fall gives it experience that helps it avoid a similar situation the next time. After thousands of virtual falls, the robot learns to cross rough terrain almost without error and without falling. And if it does fall, it quickly gets back up.

The task is then made harder by adding external disturbances: the robot is pushed in different directions, stones are thrown at it, and so on. As a result, the machine adapts and recovers very quickly after an unexpected disturbance. The developers say that children learn in much the same way. After all, a child cannot at first climb stairs, get around obstacles, or avoid hazards such as puddles. All this has to be learned by trial and error.
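The trial-and-error loop with random pushes can be sketched in a few lines. The example below is a toy, not the researchers' method: it uses tabular Q-learning, a textbook reinforcement learning algorithm, in place of a neural network, and the "robot" is reduced to a single number, its body tilt. The environment, the rewards, and every name in it (`step`, `train`, `TILTS`, and so on) are assumptions made for illustration.

```python
import random

TILTS = [-2, -1, 0, 1, 2]   # discretized body tilt; beyond +-2 the robot falls
ACTIONS = [-1, 0, 1]        # lean left, hold still, lean right

def step(tilt, action, rng):
    """One simulated time step: the action plus a random push changes the tilt."""
    push = rng.choice([-1, 0, 1])        # unexpected external disturbance
    new_tilt = tilt + action + push
    if abs(new_tilt) > 2:
        return new_tilt, -10.0, True     # fell over: penalty, episode ends
    return new_tilt, 1.0, False          # stayed upright: small reward

def greedy_action(q, tilt):
    """Pick the action with the highest learned value for this tilt."""
    return max(ACTIONS, key=lambda a: q[(tilt, a)])

def train(episodes=20000, max_steps=20, alpha=0.05, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = {(t, a): 0.0 for t in TILTS for a in ACTIONS}
    for _ in range(episodes):
        tilt = rng.choice(TILTS)         # start each virtual trial in a random pose
        for _ in range(max_steps):
            if rng.random() < epsilon:   # sometimes try something new
                action = rng.choice(ACTIONS)
            else:                        # otherwise use what has been learned
                action = greedy_action(q, tilt)
            new_tilt, reward, fell = step(tilt, action, rng)
            best_next = 0.0 if fell else max(q[(new_tilt, a)] for a in ACTIONS)
            # Q-learning update: nudge the estimate toward reward + discounted future value
            q[(tilt, action)] += alpha * (reward + gamma * best_next - q[(tilt, action)])
            if fell:
                break
            tilt = new_tilt
    return q

q_table = train()
policy = {t: greedy_action(q_table, t) for t in TILTS}
print(policy)
```

After thousands of simulated falls, the learned policy leans back toward the center whenever the body is close to tipping over, which is the toy equivalent of a robot that has learned to absorb a shove.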

Developers cannot foresee everything in the world, so the robot will inevitably have to react to unforeseen problems somehow. But the basic skills gained through such training will help it cope with even the most difficult tasks. And one robot will be able to pass its experience on to a second, the second to a third, and so on. Everything is as Sheckley described in Watchbird, only