The author of our newly translated article claims that Knative is the best thing anyone in the universe could have come up with! Do you agree?

If you are already using Kubernetes, you have probably heard of serverless architecture. Both Kubernetes and serverless platforms are scalable, but serverless goes further: it gives developers running code without bothering them with infrastructure issues, and it reduces infrastructure costs by scaling application instances up from zero.

Kubernetes, on the other hand, offers its advantages without such limits, following the traditional hosting model and providing advanced traffic management techniques. This opens up options such as blue-green deployments and A/B testing.

Knative is an effort to take the best of both worlds! As an open source cloud platform, Knative lets you run serverless applications on Kubernetes, combining the power of Kubernetes with the simplicity and flexibility of a serverless architecture.

Thus, developers can focus on writing code and deploying a container to Kubernetes with a single command, while Knative manages the application and takes care of networking details, autoscaling to zero, and version tracking.

In addition, Knative allows developers to write loosely coupled code with its eventing framework, which provides universal subscription, delivery, and event management. This means you can declare event connectivity and your applications can subscribe to specific data streams.

Led by Google, this open source platform has been accepted into the Cloud Native Computing Foundation. This means there is no vendor lock-in, which is otherwise a significant limitation of current cloud serverless FaaS solutions. You can run Knative on any Kubernetes cluster.

Who is Knative for?

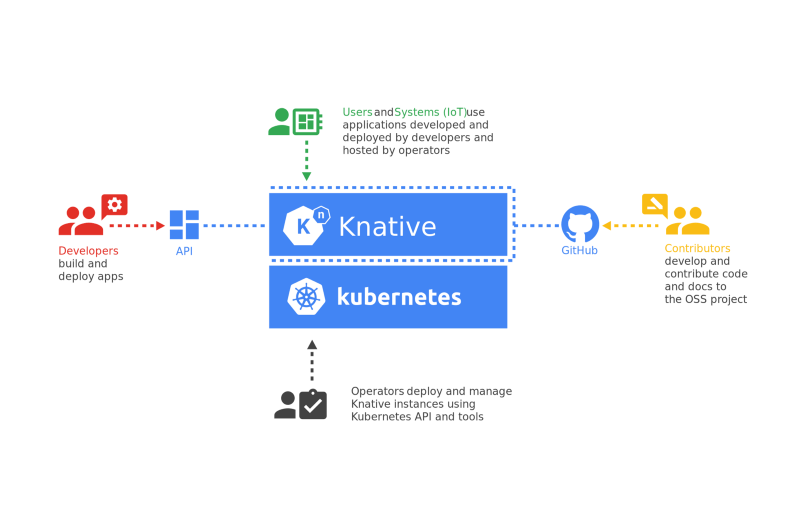

Knative helps a wide variety of professionals, each with their own knowledge, experience and responsibilities.

Engineers can focus on managing the Kubernetes cluster and installing and maintaining Knative instances using kubectl, while developers build and deploy applications using the Knative API.

For any organization this is a big plus, since now different teams can solve their problems without interfering with each other.

Why should you use Knative?

Most organizations using Kubernetes have a complex process for managing the deployment and maintenance of workloads. This forces developers to pay attention to details that they should not need to worry about. Developers should focus on writing code rather than thinking about builds and deployments.

Knative helps developers simplify running their code without having to know too much about what is going on under the hood of Kubernetes.

A Kubernetes cluster ties up infrastructure resources because it requires every application to have at least one running container. Knative manages this aspect for you and takes care of autoscaling containers in the cluster from zero. This allows Kubernetes administrators to pack multiple applications into a single cluster.

If you have multiple applications with different peak times, or a cluster with autoscaling worker nodes, you can benefit greatly from this during idle periods.

This is a real goldmine for microservices applications, where some services may not need to be running at a given time. It helps you use resources more efficiently, so you can do more with limited resources.

Plus, it integrates well with the Eventing engine and makes it easy to design loosely coupled systems. Application code can remain completely free of any endpoint configuration, and you can publish and subscribe to events by declaring configurations at the Kubernetes level. This is a significant advantage for complex microservice applications!
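As a rough illustration of declaring event connectivity at the Kubernetes level, the sketch below prints a Trigger manifest that subscribes a Knative Service to a single event type. It assumes Knative Eventing is installed with a broker named default, neither of which is covered in this article. The function name, trigger name, and event type are hypothetical.

```shell
# Print a Knative Eventing Trigger manifest (a sketch, not a full setup).
# $1 = trigger name, $2 = CloudEvents type to filter on,
# $3 = name of the Knative Service that should receive the events.
trigger_manifest() {
  cat <<EOF
apiVersion: eventing.knative.dev/v1
kind: Trigger
metadata:
  name: $1
spec:
  broker: default
  filter:
    attributes:
      type: $2
  subscriber:
    ref:
      apiVersion: serving.knative.dev/v1
      kind: Service
      name: $3
EOF
}

# Example use (requires a cluster with Knative Eventing):
#   trigger_manifest hello-trigger com.example.greeting helloworld-go | kubectl apply -f -
```

Note that the application itself contains no endpoint configuration here: the subscription lives entirely in the manifest.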

How does Knative work?

Knative exposes the kn API by means of Kubernetes operators and CRDs. Using them, you can deploy applications from the command line. In the background, Knative will create everything Kubernetes needs (deployments, services, ingresses, and so on) to run applications without you having to worry about it.

Be aware that Knative does not create pods immediately. Instead, it provides a virtual endpoint for the application and listens on it. When a request hits this endpoint, Knative starts the required pods. This allows applications to scale from zero to the desired number of instances.

Knative provides application endpoints using its own domain in the format [app-name].[namespace].[custom-domain].
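Because the CLI is a layer over CRDs, the same deployment can also be described declaratively. The sketch below prints a minimal Knative Service manifest, from which Knative would then derive the underlying Kubernetes objects. The function name is hypothetical, and the image is the sample used later in this article.

```shell
# Print a minimal Knative Service manifest (a sketch under the assumptions above).
# $1 = service name, $2 = container image, $3 = value for the TARGET env variable.
ksvc_manifest() {
  cat <<EOF
apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: $1
spec:
  template:
    spec:
      containers:
        - image: $2
          env:
            - name: TARGET
              value: "$3"
EOF
}

# Example use (requires a cluster with Knative Serving):
#   ksvc_manifest helloworld-go gcr.io/knative-samples/helloworld-go World | kubectl apply -f -
```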

This helps to uniquely identify the application. This is similar to how Kubernetes handles services, but you need to create your own domain A records to point to the Istio ingress gateway. Istio manages all traffic that passes through your cluster in the background.
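The endpoint format can be sketched as a tiny helper. The function name is hypothetical, and the http scheme is an assumption that matches the plain setup used in this article.

```shell
# Compose the URL for a Knative service from its three parts.
# $1 = app name, $2 = namespace, $3 = custom domain.
knative_url() {
  printf 'http://%s.%s.%s\n' "$1" "$2" "$3"
}

# Example:
#   knative_url helloworld-go default 34.71.125.175.xip.io
```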

Knative is an amalgamation of many CNCF and open source products like Kubernetes, Istio, Prometheus, Grafana, and event streaming engines like Kafka and Google Pub/Sub.

Installing Knative

Knative has a sensible modular structure, so you can install only the components you need. Knative offers eventing, serving, and monitoring components. You can install them using custom CRDs.

Each Knative component has its own external dependencies and requirements. For example, if you are installing the serving component, you also need to install Istio and the DNS add-on.

Installing Knative is fairly complicated and deserves a separate article. But for the demonstration, let's start by installing the serving component.

To do this, you need a working Kubernetes cluster.

Install the Serving CRDs and the Serving core components:

kubectl apply -f https://github.com/knative/serving/releases/download/v0.17.0/serving-crds.yaml

kubectl apply -f https://github.com/knative/serving/releases/download/v0.17.0/serving-core.yaml

Install Istio for Knative:

curl -L https://istio.io/downloadIstio | ISTIO_VERSION=1.7.0 sh - &&

cd istio-1.7.0 && export PATH=$PWD/bin:$PATH

istioctl install --set profile=demo

kubectl label namespace knative-serving istio-injection=enabled

Wait for Istio to be ready by checking if Kubernetes has allocated an external IP address to the Istio Ingress gateway:

kubectl -n istio-system get service istio-ingressgateway
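Rather than re-running the check by hand, the wait could be scripted roughly as follows. This is a sketch: the function name, the retry count, and the delay are assumptions, and it only handles load balancers that report an IP address (not a hostname).

```shell
# Poll until the Istio ingress gateway reports an external IP, then print it.
# $1 = maximum number of attempts (default 30),
# $2 = delay between attempts in seconds (default 10).
wait_for_ingress_ip() (
  attempts="${1:-30}"
  delay="${2:-10}"
  i=0
  while [ "$i" -lt "$attempts" ]; do
    ip="$(kubectl -n istio-system get service istio-ingressgateway \
      -o jsonpath='{.status.loadBalancer.ingress[0].ip}' 2>/dev/null)"
    if [ -n "$ip" ]; then
      printf '%s\n' "$ip"
      exit 0   # leaves only the function's subshell
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  echo "ingress gateway has no external IP yet" >&2
  exit 1
)

# Example use:
#   INGRESS_IP="$(wait_for_ingress_ip)" || echo "gave up waiting"
```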

Define your own domain and configure DNS to point to the IP address of the Istio ingress gateway:

kubectl patch configmap/config-domain --namespace knative-serving --type merge -p "{\"data\":{\"$(kubectl -n istio-system get service istio-ingressgateway -o jsonpath='{.status.loadBalancer.ingress[0].ip}').xip.io\":\"\"}}"

kubectl apply -f https://github.com/knative/net-istio/releases/download/v0.17.0/release.yaml

kubectl apply -f https://github.com/knative/serving/releases/download/v0.17.0/serving-default-domain.yaml
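The patch command above is dense because it builds its JSON payload inline. As a sketch, the payload can be produced by a small helper (the function name is hypothetical), which makes it easier to inspect before applying it.

```shell
# Build the merge-patch payload that registers <ip>.xip.io as the Knative domain.
# $1 = external IP address of the Istio ingress gateway.
domain_patch() {
  printf '{"data":{"%s.xip.io":""}}\n' "$1"
}

# Example use, with INGRESS_IP holding the gateway's external IP:
#   kubectl patch configmap/config-domain --namespace knative-serving \
#     --type merge -p "$(domain_patch "$INGRESS_IP")"
```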

Install the HPA autoscaling add-on:

kubectl apply -f https://github.com/knative/serving/releases/download/v0.17.0/serving-hpa.yaml

Installing the Knative CLI

Installing the Knative CLI is easy. Download the latest Knative CLI binary into a directory that is on your PATH, such as /usr/local/bin, and make it executable.

sudo wget https://storage.googleapis.com/knative-nightly/client/latest/kn-linux-amd64 -O /usr/local/bin/kn

sudo chmod +x /usr/local/bin/kn

kn version

Launching the Hello, World! Application

Now let's run our first "Hello, World!" app to see how easy it is to deploy with Knative.

Let's use the Hello, World! sample application in Go for the demonstration. It is a simple REST API that returns Hello $TARGET!, where $TARGET is an environment variable that you can set in the container.

Run the following command to get started:

$ kn service create helloworld-go --image gcr.io/knative-samples/helloworld-go --env TARGET="World" --annotation autoscaling.knative.dev/target=10

Creating service 'helloworld-go' in namespace 'default':

0.171s Configuration "helloworld-go" is waiting for a Revision to become ready.

6.260s ...

6.324s Ingress has not yet been reconciled.

6.496s Waiting for load balancer to be ready

6.637s Ready to serve.

Service 'helloworld-go' created to latest revision 'helloworld-go-zglmv-1' is available at URL:

http://helloworld-go.default.34.71.125.175.xip.io

$ kubectl get pod

No resources found in default namespace.

Let's call the helloworld service.

$ curl http://helloworld-go.default.34.71.125.175.xip.io

Hello World!

And after a while we get an answer. Let's take a look at the pods.

$ kubectl get pod

NAME READY STATUS RESTARTS AGE

helloworld-go-zglmv-1-deployment-6d4b7fb4f-ctz86 2/2 Running 0 50s

So, as you can see, Knative spun up a pod in the background in one go. We have literally scaled from zero.

If we wait a little, we will see the pod start to terminate. Let's see what's going on.

$ kubectl get pod -w

NAME READY STATUS RESTARTS AGE

helloworld-go-zglmv-1-deployment-6d4b7fb4f-d9ks6 2/2 Running 0 7s

helloworld-go-zglmv-1-deployment-6d4b7fb4f-d9ks6 2/2 Terminating 0 67s

helloworld-go-zglmv-1-deployment-6d4b7fb4f-d9ks6 1/2 Terminating 0 87s

The example above shows that Knative manages the pods according to our requirements. Although the first request is slow because Knative has to create the workload to process it, subsequent requests run faster. You can fine-tune how long idle pods are kept before they are scaled down, depending on your requirements or if you have a tighter SLA.
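One way to adjust this, sketched below, is the autoscaling.knative.dev/window annotation, which sets the period over which the autoscaler averages traffic before it scales a revision down. The function name and the 120s value are assumptions, and the annotation is passed the same way as the target annotation in the create command above. Newer releases of kn may handle annotations differently.

```shell
# Lengthen (or shorten) the window after which idle pods are scaled down.
# $1 = Knative service name, $2 = window duration such as 120s.
set_scale_down_window() {
  kn service update "$1" --annotation "autoscaling.knative.dev/window=$2"
}

# Example use (requires kn and a running cluster):
#   set_scale_down_window helloworld-go 120s
```

A longer window keeps warm pods around for more requests at the cost of more idle resources, so the right value depends on how much first-request latency you can tolerate.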

Let's go a little further. If you look at the annotations, we set a target of 10 concurrent requests per pod. So what happens if we load our function beyond that? Let's find out now!

We'll use the hey utility to load the application. The following command keeps 50 concurrent requests in flight against the endpoint for 30 seconds.

$ hey -z 30s -c 50 http://helloworld-go.default.34.121.106.103.xip.io

Average: 0.1222 secs

Requests/sec: 408.3187

Total data: 159822 bytes

Size/request: 13 bytes

Response time histogram:

0.103 [1] |

0.444 [12243] |■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■

0.785 [0] |

1.126 [0] |

1.467 [0] |

1.807 [0] |

2.148 [0] |

2.489 [0] |

2.830 [0] |

3.171 [0] |

3.512 [50] |

Latency distribution:

10% in 0.1042 secs

25% in 0.1048 secs

50% in 0.1057 secs

75% in 0.1077 secs

90% in 0.1121 secs

95% in 0.1192 secs

99% in 0.1826 secs

Details (average, fastest, slowest):

DNS+dialup: 0.0010 secs, 0.1034 secs, 3.5115 secs

DNS-lookup: 0.0006 secs, 0.0000 secs, 0.1365 secs

req write: 0.0000 secs, 0.0000 secs, 0.0062 secs

resp wait: 0.1211 secs, 0.1033 secs, 3.2698 secs

resp read: 0.0001 secs, 0.0000 secs, 0.0032 secs

Status code distribution:

[200] 12294 responses

Now let's look at the pods.

$ kubectl get pod

NAME READY STATUS RESTARTS AGE

helloworld-go-thmmb-1-deployment-77976785f5-6cthr 2/2 Running 0 59s

helloworld-go-thmmb-1-deployment-77976785f5-7dckg 2/2 Running 0 59s

helloworld-go-thmmb-1-deployment-77976785f5-fdvjn 0/2 Pending 0 57s

helloworld-go-thmmb-1-deployment-77976785f5-gt55v 0/2 Pending 0 58s

helloworld-go-thmmb-1-deployment-77976785f5-rwwcv 2/2 Running 0 59s

helloworld-go-thmmb-1-deployment-77976785f5-tbrr7 2/2 Running 0 58s

helloworld-go-thmmb-1-deployment-77976785f5-vtnz4 0/2 Pending 0 58s

helloworld-go-thmmb-1-deployment-77976785f5-w8pn6 2/2 Running 0 59s

As we can see, Knative scales up the pods as the load on the function increases and scales them back down when there is no more load.
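The pod count in the listing can be roughly reproduced with some arithmetic. The sketch below assumes the autoscaler aims for a fraction of the per-pod target (70% is, to my knowledge, the default target utilization), and it ignores averaging windows and panic mode, so treat it as an estimate rather than the autoscaler's exact algorithm. The function name is hypothetical.

```shell
# Estimate how many pods the autoscaler will ask for.
# $1 = concurrent requests, $2 = per-pod concurrency target,
# $3 = target utilization in percent.
desired_pods() {
  effective=$(( $2 * $3 ))   # per-pod effective target, scaled by 100
  # Integer ceiling of (requests * 100) / effective.
  echo $(( ($1 * 100 + effective - 1) / effective ))
}

# Example: 50 concurrent requests, target 10, 70% utilization
#   desired_pods 50 10 70
```

With 50 concurrent requests, a target of 10, and 70% utilization, the effective target is 7 requests per pod, which gives ceil(50 / 7) = 8 pods. That matches the eight pods (five Running, three Pending) in the listing above.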

Conclusion

Knative combines the best features of a serverless architecture with the capabilities of Kubernetes. It is gradually becoming a standard way of implementing FaaS. As Knative is part of CNCF and is attracting more and more technical interest, we may soon find cloud providers adopting Knative in their serverless products.

Thanks for reading my article! I hope you enjoyed it.