Mysterious problem

In late 2017, I got a call to discuss an issue with the Netflix app on the new set-top box. It was a new 4K capable Android TV based on Android Open Source Project (AOSP) version 5.0, Lollipop. I worked at Netflix for several years and helped launch a few devices, but this was my first Android TV.

All four parties were in touch: a large European Pay TV company launching the device (operator), a firmware integrator (integrator), a system-on-a-chip supplier (chip supplier), and myself (Netflix).

The integrator and Netflix have already completed Netflix's rigorous certification process, but during an internal test with the operator, a company executive reported a serious problem: Netflix playback lagged, meaning the video played for a very short time, then paused, then paused, then paused. This did not always happen, but it started to lag steadily a few days after turning on the console. They showed the video, it looked terrible.

The integrator found a way to reproduce the problem: launch Netflix several times, start playback, and then return to the UI. They provided a script to automate the process. Sometimes it took as long as five minutes, but the script always reproduced the bug reliably.

Meanwhile, an engineer from a chip supplier diagnosed the root cause: a Netflix Android TV app called Ninja was unable to deliver audio data. Lags are caused by underruns in the hardware audio pipeline. Playback stopped when the decoder was waiting for a portion of the audio stream from the Ninja, and then resumed when new data arrived. The integrator, chip supplier, and operator all thought the problem was clear. And they all looked at me: Netflix, you have a bug in your application and you need to fix it. I heard the tension in the voice of the operator representative. The release of the device was delayed and over budget, and they expected results from me.

Investigation

I was skeptical. This same Ninja app runs on millions of Android TV devices, including smart TVs and other set-top boxes. If there is a bug in Ninja, why does it only happen on this device?

I started by replicating the problem myself using a script from the integrator. I contacted a colleague from the chip company and asked if he had seen anything like this (not seen). Then I started to study the Ninja source code. It was necessary to find the exact code that is responsible for the delivery of audio data. I figured out a lot, but I started to get lost in the code that is responsible for reproducing, and I needed help.

I went upstairs and found the engineer who wrote the Ninja audio and video pipeline, he introduced me to the code. After that, I myself studied it for some time in order to finally understand the main parts and add my own logs. The Netflix app is complex, but in a simplified way, it retrieves data from the Netflix server, buffers video and audio data on the device for a few seconds, and then delivers the video and audio frames one at a time to the hardware decoders.

Figure: 1. Simplified playback pipeline

Let's talk about the audio / video pipeline in the Netflix app for a moment. Before the “decoder buffer”, it is exactly the same on every set-top box and TV, but moving A / V data into the decoder buffer of a device is a device-specific procedure. It runs on its own thread. The purpose of this procedure is to keep the decoder buffer full by calling the next frame of audio or video data through the Netflix API. In Ninja, this work is done by a threadAndroid. There is a simple state machine and some logic to handle the various playback states, but on normal playback, the stream copies one frame of data to the Android playback API and then tells the thread scheduler to wait 15ms before the next handler call. When you create an Android thread, you can request the thread to restart like a loop, but it is the Android thread scheduler that calls the handler, not your own application.

At maximum 60 FPS, the device should display a new frame every 16.66 ms, so checking after 15 ms is enough anyway. Since the integrator determined that the problem was in the audio stream, I focused on the specific handler that was delivering the audio samples to the Android audio service.

It was necessary to understand where the lags come from, that is, the delay. I assumed that some function called by the handler was to blame, so I scattered the log messages all over the handler and was going to easily find the code that caused the lags. It soon became clear that there was nothing wrong with the handler, and it ran for a few milliseconds even when playback was lagging.

Yeah, insight

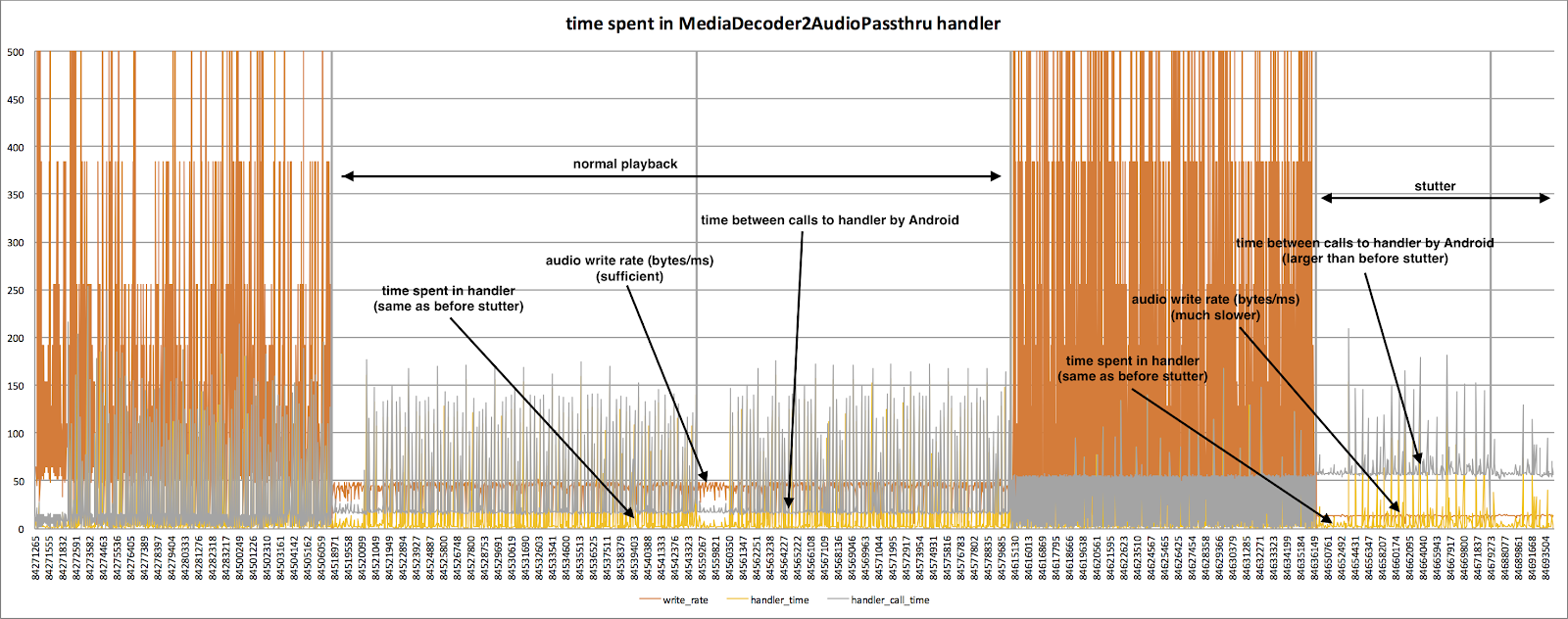

In the end, I focused on three numbers: baud rate, handler call time, and time to transfer control from handler back to Android. I wrote a script to parse the log output and generated the graph below showing the response. Figure: 2. Visualization of audio streaming bandwidth and handler timings The orange line is the rate at which data travels from the streaming buffer to the Android audio system (bytes per millisecond). There are three different scenarios in this diagram:

- Two areas with high peaks, where data rates reach 500 bytes per millisecond. This phase is buffering before starting playback. The handler copies the data as fast as it can.

- — . 45 .

- , 10 . .

Inevitable conclusion: the orange line confirms the conclusions of the engineer from the chip company. Indeed, Ninja is not fast enough to deliver audio data.

To understand why, let's take a closer look at the yellow and gray lines.

The yellow line shows the time spent in the handler procedure itself, calculated from the time stamps recorded at the beginning and at the end of the procedure. In both normal and lagging areas, the time in the handler is the same: about 2 ms. Bursts show cases where times are slower due to other tasks being performed on the device.

True root cause

The gray line - the time between calls to the handler - tells a different story. In normal playback, the handler is called approximately every 15ms. In the case of lags on the right, the handler is called approximately every 55 ms. There is an extra 40 ms between calls, and in such a situation it cannot keep up with the playback. But why?

I reported my discovery to the integrator and chip supplier (look, the Android thread scheduler is to blame!), But they kept insisting that Netflix should solve the problem. Why not copy more data each time the handler is called? It was a fair criticism, but implementing this behavior would entail profound changes that I did not want to go for, so I continued to search for the root cause. I dived into the Android source code and found out that Android threads are a user-space construct and the thread scheduler uses a system call to synchronize

epoll()

. I knew performance was

epoll()

not guaranteed, so I suspected that something was systematically affecting him.

At this point, I was rescued by another engineer from a chip supplier who discovered a bug that was already fixed in the next version of Android (Marshmallow). It turns out that the Android thread scheduler changes the behavior of threads depending on whether the application is running in the foreground or in the background. Background threads are assigned an additional 40 ms (40,000,000 ns) latency.

A bug deep in the Android kernel meant that this extra timer value was persisted when the thread was brought to the fore. Usually the audio processor thread was created when the application was in the foreground, but sometimes a little earlier when the Ninja was still in the background. If this happened, playback would start to lag.

Lessons learned

This isn't the last bug we've fixed on the Android platform, but it was the hardest to track down. It was outside the Netflix app and even outside the playback pipeline, and all the raw data indicated an error in the Netflix app itself.

The story illustrates an aspect of my work that I love: it is impossible to predict all the problems our partners will throw at me. And I know that solving them requires understanding a lot of systems, working with great colleagues, and constantly pushing yourself to learn new things. What I do has a direct impact on real people and their enjoyment of a great product. When people enjoy watching Netflix in their living room, I know I am part of the team that made it possible.