Integrating our cloud with the CDN meant fighting for literally every millisecond of latency, upgrading the infrastructure, and developing content delivery technologies whose names we had to invent ourselves. Here is what we ran into along the way, what we ended up with, and why users need it.

Why integrate a cloud with a CDN at all

First of all, a public cloud is scalable capacity that can be used for anything: developing and testing services, or storing and processing data. We at G-Core Labs launched our cloud last year and have already used it in high-load projects. For example, our longtime client Wargaming uses it for several tasks at once:

- Testing new features and services for different projects;

- Preparing test prototypes with external developers who need access to isolated, customizable and controlled resources;

- Running the online game "Caliber" on virtual machines.

The cloud handles all of the above with ease, but the work does not end there. Whatever the capacity is used for, the result still has to be delivered to its destination. Whether we are talking about an online game or real military units, this is where the problem arises: delivering heavy data to remote regions quickly is as hard as delivering multi-ton military equipment. Integrating the cloud with a content delivery network simplifies the task. With a CDN, the transportable part, static data, can be sent "by air" straight to its destination, and only the "oversized" dynamic data has to be sent from the cloud. With this approach you can confidently launch even on other continents, since the integration lets you deliver heavy content around the world faster than competitors.

How we integrated the cloud with the CDN

Let's get down to specifics. We know firsthand that delivering heavy content to remote regions directly from the cloud takes a long time, and that constantly adding infrastructure capacity as the load grows gets expensive. Fortunately, in addition to the public cloud we also have our own CDN, which even made it into the Guinness Book of Records while providing uninterrupted World of Tanks gameplay during peak periods.

To kill two birds with one stone, we needed to integrate it with the cloud. Then we would be able to offer users a solution that would cost less than an infrastructure upgrade and would allow faster data delivery to remote regions. So we started the first phase of work and solved the key problems:

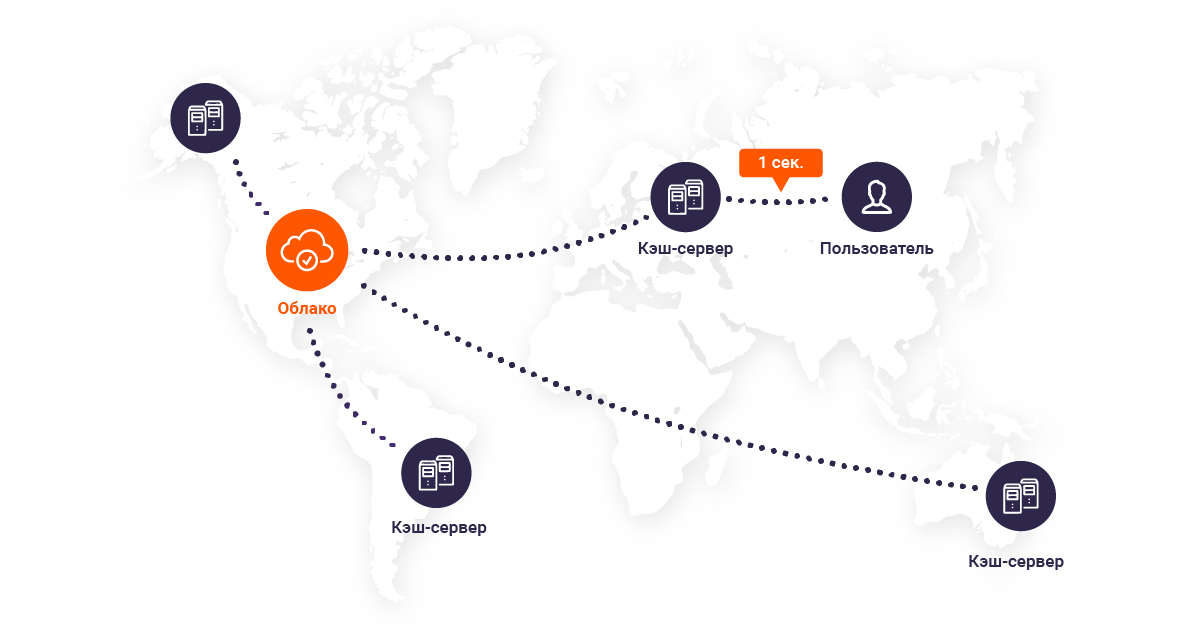

1. Cloud services were under constant load. Users of high-load projects regularly requested content from our clients' clouds, which led to high load and slow responses. We needed a solution that would easily reduce the number of requests to the source. To do this, we integrated the public cloud servers with the CDN cache servers and built a single interface for managing both services. Through it, users can move static data to the desired points of presence on the network, so the cloud is contacted only on the first requests for content. It works in the standard way: the CDN takes data from the source and sends it to the user and to the closest cache server, which serves the content on subsequent requests;

2. Data transfer between the cloud and the CDN was slow. While combining the cloud with the content delivery network, we noticed that delivery latency could be reduced further. To save as many precious milliseconds as possible, we set up traffic exchange between the cache servers and the cloud within the backbone network;

3. The load on the source was uneven. Even after connecting the CDN, the remaining requests to the cloud were distributed inefficiently. We fixed this with HTTP(S) load balancers. Now, when content is requested, they determine which source (a virtual machine or a cloud storage bucket) the data should be taken from for caching;

4. Heavy content took a long time to reach users. To reduce the waiting time, we kept expanding the capacity and geographic reach of the CDN. Now users no longer have to wait for content to travel halfway across the world: at request time, the content delivery network selects the closest of 100 points of presence on five continents. As a result, the average response time worldwide is within 30 ms.
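
The caching behavior from item 1 can be illustrated with a short Python sketch. It is a simplified model, not our production code: `PullThroughCache` and `cloud_source` are hypothetical names, and the source is a stand-in function rather than a real virtual machine or storage bucket. It shows only that the source is contacted on the first request and that later requests are served from the cache.

```python
from typing import Callable, Dict


class PullThroughCache:
    """Cache server that contacts the source only on a cache miss."""

    def __init__(self, fetch_from_source: Callable[[str], bytes]) -> None:
        self._fetch = fetch_from_source
        self._store: Dict[str, bytes] = {}
        self.source_requests = 0

    def get(self, path: str) -> bytes:
        if path not in self._store:
            # Miss: go to the cloud source once and keep a copy.
            self.source_requests += 1
            self._store[path] = self._fetch(path)
        return self._store[path]


def cloud_source(path: str) -> bytes:
    # Hypothetical stand-in for a virtual machine or storage bucket.
    return f"content of {path}".encode()


edge = PullThroughCache(cloud_source)
for _ in range(1000):
    edge.get("/static/map.pak")
print(edge.source_requests)  # 1
```

A real cache server also has to handle expiry and eviction, which this sketch leaves out.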

Having dealt with these problems, we considered the work finished. But the cloud and the CDN had other plans for us.

How the steel was tempered: modernizing the infrastructure

At one point, it became clear that our efforts could not pay off in full while we were still using the old hardware configuration. To make the servers and the applications hosted on them run better and to transfer content faster, an infrastructure upgrade was required. The stars aligned earlier this year: we started the upgrade as soon as the second-generation Intel Xeon Scalable processors were released.

Now the standard server configuration looks like this:

- Cloud servers run on Intel Xeon Gold 6152, 6252 and 5220 processors, with up to 1 TB of RAM and SSD and HDD storage with triple replication;

- CDN cache servers are equipped with Intel Xeon Platinum processors, Virtual RAID on CPU and SSD D3-S4610 drives.

As a result of the upgrade, performance increased so much that we were able to decommission some of the servers and reduce operating costs. It seemed that all of the above would be more than enough for any project. But one day it was not.

Shielding, sharding and geo-distribution: speeding up content delivery in extreme conditions

Misfortune never comes alone. This is especially true of global projects. No geographically distributed infrastructure, high load from users all over the world, and a sea of heterogeneous data that has to be delivered to them quickly: one of our clients, a large media resource, had to deal with all of these difficulties at once. A few details:

- The content took a long time to reach users, and sometimes did not reach them at all because of high latency and network problems. The difficulty was that the entire large pool of servers holding the data was in a single geographic location;

- The content source was accessed by users from all over the world, which caused an increased load on the infrastructure and led to a high cost of service, as well as slow data delivery;

- A huge amount of constantly updated content, unique to each region, had to be delivered to users.

The basic capabilities of the cloud and CDN integration were not enough here, so we started developing additional solutions.

How we came up with regional shielding

We introduced this concept, which is now a working service, specifically to solve the problem of a remote content source. Because all of the client's servers were in one geographic location, their data took a long time to reach users in different parts of the world. The situation was complicated by the need to deliver different, constantly updated content to different regions. Simply caching data on edge servers would not fix the problem: they would still often have to go to a source halfway across the world.

We solved the problem by deploying a large pool of cache servers at major traffic exchange points on different continents. These "regional shields" became an intermediate layer between the source and the edge servers in users' countries. Now all the content in demand in a given part of the world first went to the shields and was then passed on to the cache servers. In this way, shielding both reduced the load on the client's source and cut latency for end users. The client, in turn, saved on hosting several server pools with the same content in different parts of the world, since with this approach a single data source was enough.
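
To make the effect of shielding concrete, here is a minimal Python sketch of a two-tier cache. It is an illustration under simplifying assumptions rather than our implementation: `CacheTier`, `Source` and `source_requests` are hypothetical names, every edge server requests the same object exactly once, and nothing ever expires.

```python
from typing import Callable, Dict

Fetch = Callable[[str], bytes]


class CacheTier:
    """A cache layer (edge or regional shield) that asks its parent only on a miss."""

    def __init__(self, parent: Fetch) -> None:
        self._parent = parent
        self._store: Dict[str, bytes] = {}

    def get(self, key: str) -> bytes:
        if key not in self._store:
            self._store[key] = self._parent(key)
        return self._store[key]


class Source:
    """Hypothetical stand-in for the client's single content source."""

    def __init__(self) -> None:
        self.requests = 0

    def get(self, key: str) -> bytes:
        self.requests += 1
        return f"content of {key}".encode()


def source_requests(regions: int, edges_per_region: int, use_shield: bool) -> int:
    """Count source hits when every edge server requests the same object once."""
    source = Source()
    for _ in range(regions):
        # With shielding, all edges in a region share one shield as their parent.
        parent: Fetch = CacheTier(source.get).get if use_shield else source.get
        for _ in range(edges_per_region):
            CacheTier(parent).get("/news/today.json")
    return source.requests


without_shield = source_requests(regions=3, edges_per_region=10, use_shield=False)
with_shield = source_requests(regions=3, edges_per_region=10, use_shield=True)
print(without_shield, with_shield)  # 30 3
```

In this toy setup the source sees one request per region instead of one per edge server, which is the load reduction described above.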

Why we needed content sharding

Regional shields fully solved the problem of slow content delivery to different parts of the world. However, a new difficulty arose: because the client had a lot of data and it was constantly updated, it often was not in the cache of the edge servers that users were accessing. As a result, the regional pools were constantly flooded with requests from cache servers, of which there could be 20-30 in a single group. To take some of this load off the shields and deliver content to users even faster, we added the ability to fetch the required data from the nearest edge server in the pool.

Now cache servers in each region went to the shields only when the data was missing from the entire group. Moreover, even in those cases the content was requested directly from the server that held it: thanks to sharding, edge servers "knew" in advance where a specific file was and did not have to poll the entire pool of regional shields. This reduced the number of requests to the pool and made it possible to distribute content across it efficiently instead of storing a copy of the data on every server. As a result, the shields held more content and therefore put less load on the client's source.

Building such an infrastructure inevitably brought one more difficulty. Given the number of cache servers in the groups, it would be naive to assume that none of them would ever fail. When that happens, or when a new server is added to the pool, the cache in the group has to be redistributed in an optimal way. To do this, we set up the sharded cache with consistent hashing in an nginx upstream block:

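    upstream cache_servers {
        # "consistent" selects ketama consistent hashing: adding or removing
        # a server remaps only a small share of the keys
        hash $cache_key consistent;

        server edge1.dc1.gcorelabs.com;
        server edge2.dc1.gcorelabs.com;
        server edge3.dc1.gcorelabs.com;
    }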
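
Why consistent hashing helps when a server drops out can be shown with a small Python sketch. This is a generic hash ring with virtual nodes, not nginx's ketama implementation, so the actual key placement will differ from what nginx computes; `HashRing`, the `vnodes` value and the sample keys are assumptions made for illustration. The server names are taken from the config above.

```python
import bisect
import hashlib
from typing import Dict, List


def _point(value: str) -> int:
    """Map a string to a position on the ring."""
    return int.from_bytes(hashlib.md5(value.encode()).digest()[:8], "big")


class HashRing:
    """Generic consistent-hash ring; each server owns many points (virtual nodes)."""

    def __init__(self, servers: List[str], vnodes: int = 160) -> None:
        self._ring: Dict[int, str] = {}
        for server in servers:
            for i in range(vnodes):
                self._ring[_point(f"{server}#{i}")] = server
        self._points = sorted(self._ring)

    def owner(self, key: str) -> str:
        # The first point clockwise from the key's position owns the key.
        idx = bisect.bisect_right(self._points, _point(key)) % len(self._points)
        return self._ring[self._points[idx]]


servers = [
    "edge1.dc1.gcorelabs.com",
    "edge2.dc1.gcorelabs.com",
    "edge3.dc1.gcorelabs.com",
]
keys = [f"/video/{n}.ts" for n in range(3000)]

before = HashRing(servers)
after = HashRing(servers[:2])  # edge3 has dropped out of the pool

moved = [k for k in keys if before.owner(k) != after.owner(k)]
# Only keys that lived on the removed server change owner;
# everything cached on edge1 and edge2 stays where it was.
print(all(before.owner(k) == servers[2] for k in moved))  # True
```

With a plain `hash(key) % number_of_servers` scheme, by contrast, removing one server would reassign most keys and invalidate most of the group's cache.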

Unavailable servers in the pool caused another problem as well: other servers kept sending requests to them and waiting for a response. To get rid of this delay, we wrote an algorithm that detects such servers in the pool. They are now automatically moved to the down state in the upstream group, so we no longer send requests to inactive servers or wait for data from them.
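
The details of our detection algorithm are beyond the scope of this article, but the general idea of taking a server out of rotation can be sketched in Python. The example below is a hypothetical passive health check, not the algorithm we run in production: `UpstreamPool`, `max_fails` and `retry_after` are illustrative names, loosely modeled on the `max_fails` and `fail_timeout` parameters of the nginx `server` directive. The clock is injected so that the behavior can be shown without real waiting.

```python
import time
from typing import Callable, Dict, List


class UpstreamPool:
    """Marks a server as down after several consecutive failed requests."""

    def __init__(
        self,
        servers: List[str],
        max_fails: int = 3,
        retry_after: float = 10.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._servers = list(servers)
        self._max_fails = max_fails
        self._retry_after = retry_after
        self._clock = clock
        self._fails: Dict[str, int] = {s: 0 for s in servers}
        self._down_until: Dict[str, float] = {s: 0.0 for s in servers}

    def report(self, server: str, ok: bool) -> None:
        """Record the outcome of one request to the given server."""
        if ok:
            self._fails[server] = 0
            return
        self._fails[server] += 1
        if self._fails[server] >= self._max_fails:
            # Too many failures in a row: stop sending requests for a while.
            self._down_until[server] = self._clock() + self._retry_after
            self._fails[server] = 0

    def available(self) -> List[str]:
        """Servers that may receive requests right now."""
        now = self._clock()
        return [s for s in self._servers if self._down_until[s] <= now]


now = [0.0]  # fake clock, in seconds
pool = UpstreamPool(["edge1", "edge2", "edge3"], clock=lambda: now[0])

for _ in range(3):
    pool.report("edge2", ok=False)
during_outage = pool.available()
print(during_outage)  # ['edge1', 'edge3']

now[0] = 11.0  # the retry window has passed
after_retry = pool.available()
print(after_retry)  # ['edge1', 'edge2', 'edge3']
```

Once the retry window passes, the server gets traffic again and is taken out once more if it keeps failing.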

As a result of this work, we reduced the client's service costs, spared them the serious expense of building their own infrastructure, and significantly sped up data delivery to users, despite all the difficulties.

Who needs a cloud with CDN

The integration work is over, and our customers are already using the product. Here is who gets the most out of it.

Let's say right away that the solution did not turn out to be useful to everyone. We did not expect otherwise: some customers only need storage and virtual machines, and others only need a content delivery network. For example, when a project's entire audience is in one region, there is practically no need to connect a CDN to the cloud. To minimize latency in that case, a server located close to the users is enough.

The integration shows its full value when you need to deliver heavy content quickly, over long distances, to a large number of users. Here are a few examples of how a cloud with CDN helps different projects:

- Streaming services, for which latency and buffering are critical, get stable operation and high-quality broadcasts;

- Online entertainment services deliver heavy games to different parts of the world faster and reduce the load on servers, including at peak loads;

- Media projects make ads load faster and stay accessible when traffic spikes;

- Online stores load faster in different countries, including during promotions and sales.

We continue to watch exactly how customers use the cloud with CDN. Like you, we are interested in the numbers: how much the load on the infrastructure drops, how much faster users in specific regions receive content, and how much money the integration saves. We will share all of this in future case studies.