If you have ever led the development of a software product, you have probably wondered: how can I help the team move faster? And how do I know how fast we are moving?

It seems logical to use metrics to answer such questions. After all, we have long used them successfully for software products themselves. There are performance metrics, production server load, uptime. There are also product metrics based on user behavior, such as conversion and retention. The benefit of metrics is not only a clearer understanding of the current state but, more importantly, feedback. You make a change to improve something, and the metrics show how much improvement (or deterioration) you get. Popular programming wisdom says that every optimization of program performance must start with measurement, and that makes a lot of sense.

Since we can successfully apply metrics to software products themselves, why not apply them to the speed of development of those products? We could then try out process improvements and see clearly whether they help us move faster. The problem of determining a fair salary for programmers would also become simpler. What metrics could you use?

We don't have good metrics for this.

Development speed is the amount of work completed per unit of time. Measuring time is simple. But how do you measure the amount of work done? Attempts to do this began many decades ago, with the birth of the programming industry itself. However, every time an indicator was used as a target for improvement, something was bound to go wrong. For instance:

- . , , , . , , , ;

- . , , ;

- . . , , . , , ;

- . , . , , , ;

- . , , , — , ;

- … .

Developers are quick-witted and creative, and they specialize in solving complex problems. Whatever metric you give them, they will find the easiest way to improve their numbers, and most likely it will have nothing to do with the actual volume and quality of the work performed. Will they use these “cheating” methods? Not necessarily; it depends on your particular situation, including how strong an incentive you create. But they will most certainly realize that the way their productivity is evaluated has little to do with value. This not only demotivates them, but also distracts them from doing real work.

But why?

Why do metrics work great for improving the properties of software products, but not for measuring the work done by programmers? Maybe this is some kind of conspiracy of programmers? In fact, if we look beyond software development, we see other examples, in some of which metrics work well and in others they don't.

Examples of where metrics work well are mass manufacturing and sales. Take the production and sale of mugs. In production, you can measure the volume (the number of mugs per unit of time), the quality (scrap percentage), and the cost of one mug. In sales, you can measure sales volume and margin. These metrics are used quite successfully in management. For example, the production manager can be given the task of reducing the scrap rate while keeping the unit cost steady, and the sales manager the task of increasing sales while keeping the price steady. Improvements in these metrics benefit the business, so they can be considered indicators of the performance of the people in charge.
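To make the mug example concrete, here is a minimal sketch of how those production metrics could be computed. The `Shift` record, its field names, and all the numbers are made up for illustration; they are assumptions, not data from a real factory.

```python
from dataclasses import dataclass


@dataclass
class Shift:
    """One production shift at a hypothetical mug factory."""
    mugs_made: int      # every mug that came off the line
    mugs_scrapped: int  # mugs rejected by quality control
    total_cost: float   # materials plus labour for the shift
    hours: float        # length of the shift


def good_mugs(shift: Shift) -> int:
    """Mugs that passed quality control and can be sold."""
    return shift.mugs_made - shift.mugs_scrapped


def throughput(shift: Shift) -> float:
    """Sellable mugs per hour."""
    return good_mugs(shift) / shift.hours


def scrap_rate(shift: Shift) -> float:
    """Share of produced mugs that had to be thrown away."""
    return shift.mugs_scrapped / shift.mugs_made


def unit_cost(shift: Shift) -> float:
    """Cost of one sellable mug."""
    return shift.total_cost / good_mugs(shift)


# Illustrative numbers only: the same line before and after a process change.
before = Shift(mugs_made=1000, mugs_scrapped=50, total_cost=1900.0, hours=8.0)
after = Shift(mugs_made=1000, mugs_scrapped=20, total_cost=1960.0, hours=8.0)

for label, shift in (("before", before), ("after", after)):
    print(
        label,
        f"scrap={scrap_rate(shift):.1%}",
        f"unit_cost={unit_cost(shift):.2f}",
        f"per_hour={throughput(shift):.2f}",
    )
```

Every unit counted here is a mug that is interchangeable with any other mug, which is what makes the before and after numbers directly comparable.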

An example of where metrics do not work is the assessment of scientific performance. Scientists conduct research, which is then published as scientific papers. This area also has its own numerical metrics: the number of papers, the number of citations, the statistical significance of the results, and so on. Can we say that a scientist who published 10 papers brought the world twice as much benefit as a scientist who published 5? Unlikely, because the value of their work can be very different, and even on a subjective level it can be difficult to tell which work was more valuable. The problem of inflated citation and publication counts is widely known in the scientific community, so, unfortunately, these counts are not considered reliable indicators of value. There is also the problem of manipulating statistical significance.

Two main criteria

Regardless of the context, metrics that work well have two things in common:

- Direct (not indirect) relationship with value;

- Accuracy, that is, the metric is based on counting some units of value, and these units are equal to each other.

Let's look again at the examples above.

The metrics for mass production and sales meet both criteria. In the production of mugs, the value produced is the mugs themselves. The connection is direct: the company needs to produce mugs. And since this is mass production, the units of value (mugs) are equal to each other. If we are talking about sales, then the units of value are money. The purpose of the enterprise is to make a profit, so the relationship with value is, again, direct. And since every dollar earned is equal to any other, we can build accurate metrics.

In the evaluation of scientific work, these criteria cannot be met. We cannot find a unit of measurement that would directly determine the value of scientific work, because every piece of scientific work is unique. It cannot be otherwise, given the very essence of science, which is to discover new knowledge. It makes no sense for a scientist to write a paper that exactly repeats another one. Each paper should bring something new.

Since we cannot find metrics that directly measure the value of scientific work, we are left with only indirect ones, such as the number of publications and citations. The problem with indirect metrics is that they correlate poorly with value and tend to be easy to game. If you start using such a metric as a goal, you yourself create an incentive to inflate it artificially.

Back to measuring programmer productivity

What did we have there? Lines of code, number of commits, number of tasks, task estimates in hours or story points... If you check these metrics against the two main criteria, you will see that none of them meets them:

- There is no direct relationship to value. We do not supply our clients with lines of code, man-hours or story points. Users don't care how many commits we made or how many tasks we closed;

- They are not accurate. One commit differs from another, one line of code is not equal to another, tasks differ too, and man-hours and story points are estimated subjectively, so they will also differ.

So it’s not surprising that none of these metrics work — they are all indirect and imprecise.
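The point that one line of code is not equal to another can be shown with a small sketch. The two `total` functions below are hypothetical and written only for this illustration: they return the same result, yet a lines-of-code counter rates one of them as more than twice the "work".

```python
# Two hypothetical implementations of the same behaviour, kept as source
# text so that their lines can be counted.
VERBOSE = '''
def total(prices):
    result = 0
    for price in prices:
        result = result + price
    return result
'''

CONCISE = '''
def total(prices):
    return sum(prices)
'''


def count_lines(source: str) -> int:
    """Count non-blank lines, the way a naive LOC metric would."""
    return sum(1 for line in source.splitlines() if line.strip())


def load_total(source: str):
    """Execute the source text and return the `total` function it defines."""
    namespace = {}
    exec(source, namespace)
    return namespace["total"]


prices = [10, 20, 30]
verbose_total = load_total(VERBOSE)
concise_total = load_total(CONCISE)

# Same value delivered to the user, very different "amount of work" by LOC.
print("verbose:", count_lines(VERBOSE), "lines, result", verbose_total(prices))
print("concise:", count_lines(CONCISE), "lines, result", concise_total(prices))
```

If lines of code were the target, the verbose version would score higher, even though most reviewers would prefer the concise one.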

Why are there no metrics that are directly related to the value of a programmer's work? For the same reasons scientists do not have them. Programmers, like scientists, are constantly creating new things. They don't write exactly the same code over and over, because that makes no sense. Previously written code can be reused in different ways: extracted into a separate module or library or, at worst, simply copied. Therefore, every working day is unique for a programmer. Even when they solve similar problems, they solve them each time in a different context, under new conditions.

The work of programmers is custom, one-off production, not mass production. They do not produce the same repeatable results, so there is no baseline for measurement. Metrics that work so well in mass production or sales don't work here.

Isn't there something more modern, based on research?

Of course, today no one seriously talks about measuring a programmer's work by lines of code. There must be some more modern metrics based on research, right?

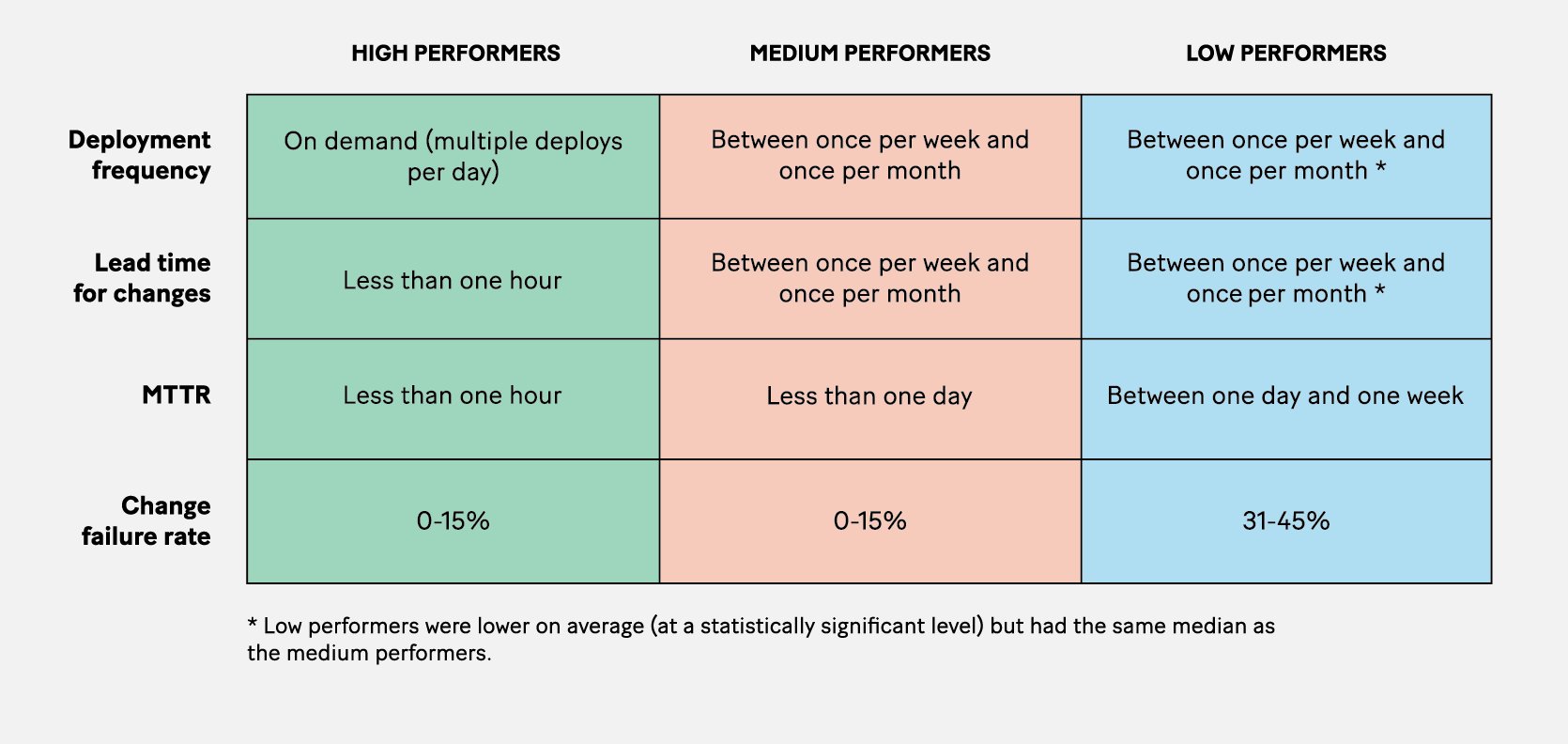

There are some. In their 2018 book “Accelerate,” the authors cite the results of a study of 2,000 organizations from different industries. The authors tried to figure out what metrics might be used to measure performance:

Source: Nicole Forsgren, Jez Humble, and Gene Kim, “Measuring Performance,” in Accelerate: The Science behind DevOps: Building and Scaling High Performing Technology Organizations

Here are four metrics. Let's see which ones are related to value and can be accurately measured:

- . , . , . . . . , , . , . , — ;

- Lead time — , . , , . , , , — ;

- (MTTR) — , , , . . -, . , MTTR . -, , . , — ;

- , . , . , , , . Linux, “ — ”. SaaS- . , — - , , - . , . , — . , — .

Bottom line: None of these four metrics are accurate, and they don't always have a clear relationship to customer value. Is there an opportunity for “cheating” in this case? Sure. Deliver trivial low-risk changes as often as possible, and all metrics except Lead time will look great.

As for Lead time, even if we set aside the (important) fact that it is inaccurate, an emphasis on it leads to prioritizing the client's simplest wishes and pushing into a far corner everything the client did not explicitly ask for. Usually that means refactoring, tests, and any improvements the client hadn't thought of.

Therefore, I would not recommend using these metrics as targets.
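The padding trick described above can be sketched in a few lines. This is a toy model, not the methodology from “Accelerate”: the `Deployment` record, the 30-day window, and all the counts are assumptions, and only two of the four metrics are modelled.

```python
from dataclasses import dataclass


@dataclass
class Deployment:
    """One production deployment in a hypothetical 30-day window."""
    failed: bool   # did the change cause a failure in production?
    trivial: bool  # padding: a tiny low-risk change with no real value


def deployment_frequency(deployments: list[Deployment], days: int) -> float:
    """Deployments per day."""
    return len(deployments) / days


def change_failure_rate(deployments: list[Deployment]) -> float:
    """Share of deployments that failed."""
    return sum(d.failed for d in deployments) / len(deployments)


def real_changes(deployments: list[Deployment]) -> int:
    """Changes that actually matter to users (known only in this toy model)."""
    return sum(not d.trivial for d in deployments)


DAYS = 30

# Honest month: 10 meaningful deployments, 2 of which failed.
honest = [Deployment(failed=i < 2, trivial=False) for i in range(10)]

# Gamed month: the same 10 deployments plus 40 trivial ones that never fail.
gamed = honest + [Deployment(failed=False, trivial=True) for _ in range(40)]

for label, deployments in (("honest", honest), ("gamed", gamed)):
    print(
        label,
        f"per_day={deployment_frequency(deployments, DAYS):.2f}",
        f"failure_rate={change_failure_rate(deployments):.0%}",
        f"real_changes={real_changes(deployments)}",
    )
```

In this sketch both metrics improve fivefold while the number of meaningful changes stays at 10, which is exactly the gap between the indicator and the value it is supposed to reflect.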

But maybe we will find new metrics?

Of course, you can say: “Wait, if no suitable metrics have been found yet, this does not mean that there cannot be any at all! We are smart people, we will make an effort and come up with something.” Alas, I'm afraid not. There is a fundamental reason why there are no good metrics in this area. As we said above, good ones would meet two main criteria:

- Direct (not indirect) relationship with value;

- Accuracy, that is, the metric is based on measuring the number of some units of value, and these units are equal to each other.

We cannot accurately measure value directly, because all the results of programmers' work are different; they never produce exactly the same thing twice. This is one-off production for unique, non-repetitive tasks. And since nothing repeats, there is no basis for comparison and measurement either. We are left only with proxies, but since they are weakly tied to value and prone to cheating, relying on them is harmful.

Can you improve areas for which there are no good metrics?

Metrics are great because they provide feedback. You make some changes in the process and you can clearly see whether they led to improvements or not. If there are no metrics, then the feedback becomes less pronounced and it may even feel like you are moving blindly. There is a famous saying attributed to Peter Drucker:

You cannot control what you cannot measure.

Only this is not true. According to the Drucker Institute, Peter Drucker was not under the illusion that a metric could be found for any activity, and he never said those words. Not everything that is valuable can be measured, and not everything that can be measured is valuable.

Difficulty with metrics does not mean that nothing can be improved. Some companies release software much faster than others, and without sacrificing quality. This means that there are significant differences in how they work, and therefore improvements should be possible.

Summary

It is possible and necessary to improve your software product using metrics. Performance, load, uptime, or product metrics like conversion and customer retention are your friends.

However, you shouldn't try to speed up the development process with the help of metrics, because there are no suitable ones. Many indicators have been invented in this area, but, unfortunately, they are all indirect or imprecise, and more often both at once, so using them as goals only does harm.

But don't be discouraged - there is hope! The lack of good metrics for development speed is sad, but that doesn't mean speed improvements are impossible. They are very much possible. Yes, we only have subjective qualitative assessments for feedback. However, they are enough to implement improvements and to understand whether those improvements have an effect. For example, one of the things that can be improved is communication between developers and management. The link above provides examples of how to improve communication, and why it is worth focusing on it.

That's all, write in the comments what you think. Happy deployments, even on Fridays.