British scientists have trained an AI to turn spoken language into video of a virtual sign language interpreter. The algorithm also evaluates the quality of its own output. The neural network is meant to help people with partial or complete hearing loss follow content more easily and feel more comfortable at public events.

There are tens of millions of deaf and hard-of-hearing people in the world who use sign language as their primary means of communication. Online, subtitles partly solve the communication problem for hearing-impaired people. However, the increasingly popular webinars, streams and other live content need to be translated into sign language in real time. Scientists have been researching this problem for a long time and looking for a solution.

Researchers are now turning to neural networks. At the University of Surrey, developers have created a next-generation sign language translation algorithm. The AI converts spoken language into movements of a human skeleton. The skeleton is then given a human appearance, producing realistic footage. The technology can also generate video from text.

Why is this so difficult, and why does the movement of the entire skeleton matter? Sign language is not only about hand shapes: it involves the whole body, including facial expressions. Earlier technologies often produced blurry models, which distorted the meaning of signs or made the virtual interpreter hard to understand altogether.
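Because meaning is carried by the hands, torso and face together, a pose representation for signing has to keep all of them. The minimal Python sketch below shows one skeleton frame under an assumed OpenPose-style layout of 25 body, 21 per-hand and 70 face keypoints; the article does not say which keypoint set the Surrey system uses, and the `PoseFrame` class is hypothetical.

```python
from dataclasses import dataclass

# Assumed keypoint counts, modelled on the OpenPose layout
# (25 body, 21 per hand, 70 face); the Surrey system may differ.
BODY_POINTS = 25
HAND_POINTS = 21
FACE_POINTS = 70

Point = tuple[float, float]  # (x, y) in image coordinates


@dataclass
class PoseFrame:
    """Hypothetical single-frame skeleton: body, both hands and face."""

    body: list[Point]
    left_hand: list[Point]
    right_hand: list[Point]
    face: list[Point]

    def __post_init__(self) -> None:
        # Reject frames that are missing a body part, since dropping the
        # hands or face loses information that signs depend on.
        expected = {
            "body": BODY_POINTS,
            "left_hand": HAND_POINTS,
            "right_hand": HAND_POINTS,
            "face": FACE_POINTS,
        }
        for name, count in expected.items():
            got = len(getattr(self, name))
            if got != count:
                raise ValueError(f"{name}: expected {count} keypoints, got {got}")

    def flatten(self) -> list[float]:
        """Concatenate all keypoints into one flat feature vector."""
        coords: list[float] = []
        for part in (self.body, self.left_hand, self.right_hand, self.face):
            for x, y in part:
                coords.extend((x, y))
        return coords


origin = (0.0, 0.0)
frame = PoseFrame([origin] * 25, [origin] * 21, [origin] * 21, [origin] * 70)
print(len(frame.flatten()))  # 274 = 2 coordinates x 137 keypoints
```

Validating every part in `__post_init__` makes an incomplete skeleton fail loudly instead of silently producing a frame without hands or face.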

Photo: ru.freepik.com

How the new neural network works

The pipeline works as follows: the input is audio, which is converted into a schematic model of the human skeleton that reproduces the speech with the corresponding gestures. The resulting sequence of poses is then fed to a U-Net, a convolutional neural network that turns the movements and poses into lifelike video.

Algorithm of a virtual sign language interpreter
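The two stages described above can be sketched as a simple composition of functions. This is a minimal illustration, not the Surrey implementation: the `SignPipeline` class, its stage interfaces and the reference image of the signer passed to the renderer are assumptions, and the placeholder stages only stand in for trained networks.

```python
from dataclasses import dataclass
from typing import Callable, Sequence

Pose = list[tuple[float, float]]  # one skeleton: (x, y) keypoints
Frame = list[list[int]]           # one video frame: a grayscale pixel grid


@dataclass
class SignPipeline:
    """Hypothetical two-stage layout: audio -> skeleton poses -> video frames."""

    # Stage 1 (assumed interface): audio samples to one pose per output frame.
    speech_to_poses: Callable[[Sequence[float]], list[Pose]]
    # Stage 2 (assumed interface): a renderer, a U-Net in the described system,
    # mapping one pose plus a reference image of the signer to a frame.
    render: Callable[[Pose, Frame], Frame]

    def translate(self, audio: Sequence[float], appearance: Frame) -> list[Frame]:
        poses = self.speech_to_poses(audio)
        # Each pose is rendered independently; this sketch does nothing to
        # keep consecutive frames visually consistent.
        return [self.render(pose, appearance) for pose in poses]


# Placeholder stages so the sketch runs; trained networks would replace them.
def placeholder_speech_to_poses(audio: Sequence[float]) -> list[Pose]:
    # One single-keypoint pose for every 4th audio sample.
    return [[(float(sample), 0.0)] for sample in audio[::4]]


def placeholder_render(pose: Pose, appearance: Frame) -> Frame:
    # Ignores the pose and returns a copy of the reference image.
    return [row[:] for row in appearance]


pipeline = SignPipeline(placeholder_speech_to_poses, placeholder_render)
frames = pipeline.translate([0.1] * 16, [[0, 255], [255, 0]])
print(len(frames))  # 4
```

Keeping the stages as swappable callables mirrors the article's point that poses are an intermediate representation: the same pose sequence could come from audio or, as the article notes, from text.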

To achieve high-quality results, the scientists trained the neural network on videos of real sign language interpreters.

To evaluate the model, the developers ran experiments with volunteers, asking them to compare the new method with previously used approaches to translating speech. Of the 46 participants, 13 were sign language users. The comparison covered four criteria, and the new algorithm outperformed the earlier methods on each of them.

Results from a study on volunteers

Not only British scientists

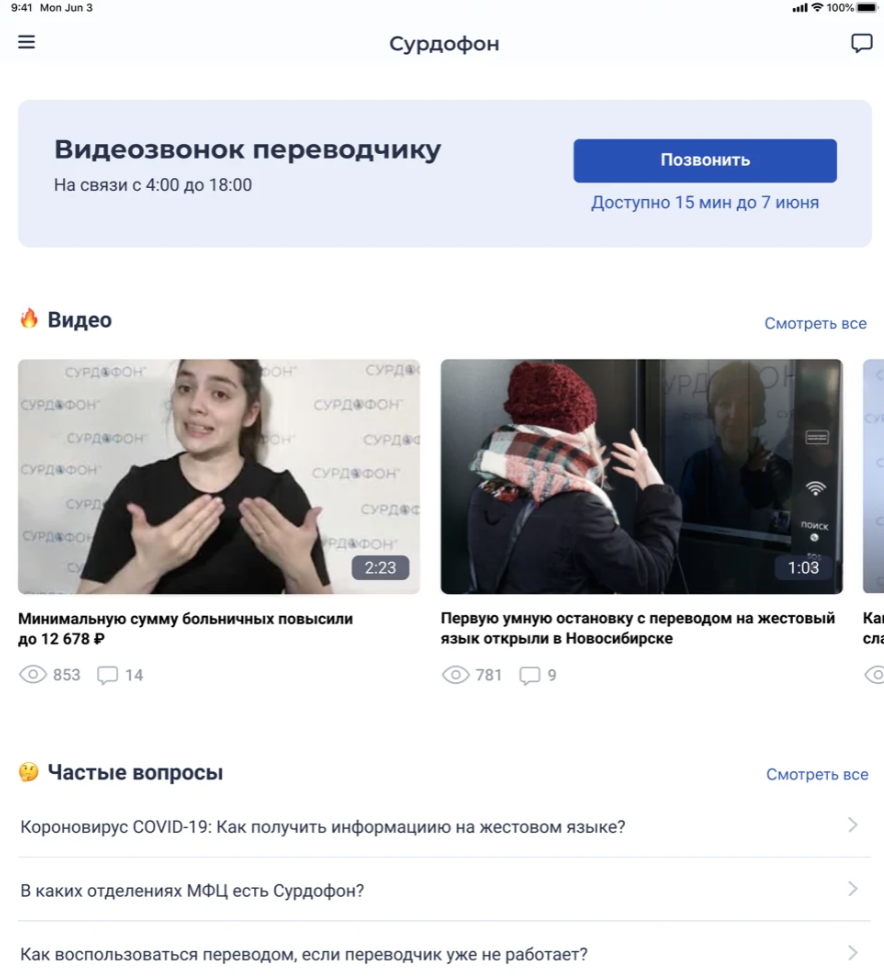

Researchers have been working on sign language translation since the beginning of the century. One of the best-known products is IBM's animated virtual interpreter, but the project was not developed further. Several years later it was revived in Novosibirsk: a program created by scientists from the Novosibirsk Academic City recognizes speech, analyzes its meaning and translates it into sign language, which an avatar then signs on screen.

Animated sign language translator from Novosibirsk developers

At the time, the development was expected to become as popular as Google Translate. Today the program can be tried in the Adaptis app, available on the App Store and Google Play.

Screenshot of Adaptis App in the App Store

A few years ago, Belgian scientists 3D-printed an Arduino-controlled robotic hand that works as a sign language interpreter. The project was named ASLAN. The device was assembled from 25 separate PLA plastic parts, and the team planned to add a second hand and a robotic face to convey emotions.

Translating from sign language into spoken language is also very difficult. A few years ago, Russian scientists from the V.A. Trapeznikov Institute of Control Sciences of the Russian Academy of Sciences (IPU RAS) began developing such an AI. It was expected to eventually translate gestures into words, phrases and letters. The scientists reported at the time that creating the algorithm could take more than a year.

Photo: ru.freepik.com

The Russian program will be based on a website created by a hearing-impaired employee of the Institute of Control Sciences of the Russian Academy of Sciences, who has been developing the Surdoserver site for several years. It was also reported that Russian scientists are working on a mobile app, Surdoservice, and a cloud service for information exchange among hearing-impaired people.