Back then I just sat and admired AlphaZero versus Stockfish. Now I have returned to the topic in connection with optimizing neural networks, something I occasionally get to do at work (alas, less often than I would like). It seems to me that the two problems may be closely related, so I wanted to put my ideas in some order.

Chess, like checkers and Go, is a game of perfect information that is played by searching through variations.

The problem, however, is that the tree of variations in chess grows very quickly (although much more slowly than in Go). In a quiet position with a full board of pieces, each side has about 10 reasonable continuations. So after just 3 moves by White and Black (6 half-moves) a single position can give rise to a million. Add to that the fact that an average game between humans lasts 40-50 moves (80-100 between computers). The conclusion is that for most positions the full tree of variations cannot be calculated, so the search tree has to be pruned, both in width and in depth. Now let's see how humans and machines have dealt with this problem. I'll start with a short historical overview.
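The arithmetic above is easy to check. The sketch below assumes a perfectly uniform tree with 10 replies per half-move, which is only the rough figure used in the text; the function name `positions_after` is made up for illustration.

```python
def positions_after(branching: int, plies: int) -> int:
    """Leaf count of a uniform tree: `branching` replies at each of `plies` half-moves."""
    return branching ** plies


if __name__ == "__main__":
    # 6 half-moves is the "3 moves by each side" case from the text;
    # 80 half-moves corresponds to a 40-move game between humans.
    for plies in (2, 4, 6, 80):
        print(f"{plies:>2} half-moves: {positions_after(10, plies):.3e} positions")
```

Even with this modest branching factor, a 40-move game corresponds to roughly 10^80 lines, which is why pruning is unavoidable.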

"Protein Chess".

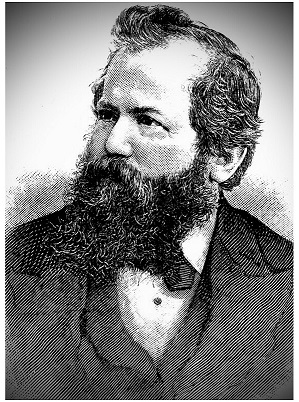

Chess has been known for about 1400 years, but the first large tournaments were held only in the middle of the 19th century. It was a time of open, romantic battles. The opponents tried to bring their pieces into play quickly, open up the position and launch an attack on the king. Nobody cared much about material or positional concessions. Surprisingly enough, though, it was the antagonist of romantic chess, Wilhelm Steinitz, who became the first official world champion.

He laid the foundations of positional play. Largely thanks to Steinitz, we now operate with concepts such as "pawn structure", "strong and weak squares" and "good and bad pieces". This is what brought into chess an element of strategy based on long-term advantages. Steinitz developed the positional approach and relentlessly punished his opponents for material sacrifices and positional flaws. Emanuel Lasker, who succeeded him on the chess throne, spoke very well of the first champion: "Steinitz's talent as a practical player was lower than the talent of Blackburne or Zukertort, whom he nevertheless defeated, because he was a great thinker, but they were not." Steinitz formulated the basic principles of position assessment, and the game plans that follow from them, in a high-level language (in this case, German).

That made them available for other people to study, and it shaped what we call the human approach to chess. Humans prune the variation tree very aggressively, guided by positional principles. Some moves are discarded because they lead to a bad position within the calculated horizon, some because they lead to long-term concessions, others because they are aimless. As a result, we calculate only a very small part of the possible variations.

Further understanding of chess was essentially a development of the ideas laid down by the first champion. Concepts such as blockade, prophylaxis and domination appeared. Chess players began to study the principles of playing typical positions arising from various openings (closed pawn chains, an isolated pawn, etc.). One way or another, it was positions close to material balance that were studied. But there were exceptions: the young Mikhail Tal, for example, played in a different style. He created sharp, unbalanced positions with a disturbed material ratio (later Garry Kasparov demonstrated a similar style). Unaccustomed to such play, his opponents folded one after another. Tal became world champion in 1960, but lost the rematch a year later.

In the second half of the twentieth century, the focus of research shifted towards the beginning of the game, the opening. Following the example of Mikhail Botvinnik (6th world champion) and Garry Kasparov (13th), chess players began to devote the lion's share of their time to working out specific opening variations, increasingly with the help of computers. As a result, many variations in popular openings have been worked out all the way to positions where the outcome of the game is predetermined. This leads to a certain emasculation of chess, as well as to the need to memorize a huge number of variations so as not to lose right in the opening. Not surprisingly, the pendulum has lately swung in the opposite direction. The current world champion, Magnus Carlsen, strives not so much for an advantage out of the opening as for a playable position that has not been "worn out" by computer engines. The main struggle is carried over to the later stages of the game (middlegame, endgame).

"Silicon Chess".

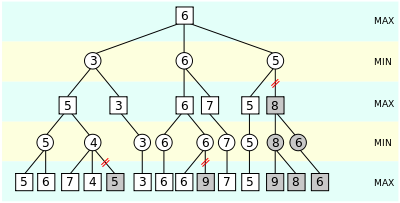

In the apt expression of Alexander Kronrod, chess is the "fruit fly" of artificial intelligence. Its study began with the advent of the first computers and attracted such pioneers as Alan Turing and Claude Shannon. It was Shannon who put forward the first estimate of the value of the chess pieces: "King = 200, Queen = 9, Rook = 5, Bishop = 3, Knight = 3, Pawn = 1". Oddly enough, it was this simple assessment that determined the development of chess programming for the next 70 years.

Shannon also predicted the division of chess programs into "fast" (brute force) and "smart" (clever). "Fast" programs go through all possible variations to a certain depth, evaluate the resulting positions with a simple evaluation function (such as the material ratio) and select the best move using the minimax principle. "Smart" programs use more complex algorithms and vary the search depth in much the same way as a human does. The 6th world champion, Mikhail Botvinnik, worked on such an algorithm in the last years of his life, but without much success, like many other creators of "smart" programs. In his third prediction Shannon turned out to be wrong: "smart" programs consistently lost to "fast" ones. The reason is that brute force parallelizes and optimizes very well, and Shannon's simple estimate turned out to be quite stable and robust. As chess players know, any positional advantage is sooner or later converted into a material one. The principles of positional assessment, by contrast, are much harder to formalize.

Positional rules require cumbersome sequential computations and are hard to optimize. As a result, as computer performance grew, "fast" programs came to dominate. This is how the mainstream of computer chess took shape, strikingly different from the human approach: brute-force search to a fixed depth with alpha-beta pruning (and some other heuristics), plus position evaluation according to Shannon. Programs also began to build and actively use opening books (for the phase where the game has not yet strayed far from the initial position) and endgame tablebases (for positions with so few pieces that the tree of variations can be calculated completely). Computer performance kept growing, and the programmers did not sit idle either, constantly optimizing their engines. On May 11, 1997, an epoch-making event occurred: the Deep Blue computer defeated world champion Garry Kasparov in a 6-game match.

Immediately after the match, IBM shut down this far-from-cheap project. Special chips that speed up chess calculations had been built for Deep Blue! Even without such hardware, however, the superiority of the computer over the human was already obvious. Deep Fritz, Deep Junior, Rybka, Komodo and Stockfish began to mercilessly crush the leading grandmasters, even when giving them material odds. Among themselves, however, they played with varying success; the results of the world championships among programs can be found here.

Everything changed when the creators of AlphaZero, having defeated Lee Sedol, the world champion in Go, finally took up chess. The result was phenomenal: after 4 hours of playing against itself, AlphaZero defeated Stockfish, winning 28 games and drawing 72. A year later, DeepMind ran a cleaner experiment, allowing Stockfish to use opening books and endgame tablebases. Still, the result of +155 -6 =839 leaves no doubt about who is the strongest player in the world at the moment.
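To put the two match results on one scale, they can be converted into a score fraction and a rough rating gap. The conversion below is not from the original matches' reports; it assumes the standard logistic Elo model, and the function names are made up for illustration.

```python
import math


def score_fraction(wins: int, draws: int, losses: int) -> float:
    """Fraction of available points scored, counting a draw as half a point."""
    games = wins + draws + losses
    return (wins + 0.5 * draws) / games


def elo_gap(wins: int, draws: int, losses: int) -> float:
    """Rating difference implied by the score under the logistic Elo model (an assumption)."""
    p = score_fraction(wins, draws, losses)
    return 400 * math.log10(p / (1 - p))


if __name__ == "__main__":
    for label, result in (("first match", (28, 72, 0)), ("second match", (155, 839, 6))):
        print(f"{label}: score {score_fraction(*result):.1%}, gap about {elo_gap(*result):.0f} Elo")
```

Under this model the first match corresponds to a gap of roughly 100 points and the second to roughly 50, with the losses almost entirely on Stockfish's side.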

Let's see how this new miracle works. (For those who want to dig deeper, there is already a whole book with Python scripts.) The main algorithm is Monte Carlo tree search. This is, of course, still a search through variations, which makes AlphaZero similar to other chess programs. But the words "Monte Carlo" should not mislead: the search is steered by a neural network (for Go it had 80 layers; I don't know about this one) and is narrowly targeted. AlphaZero prunes the search tree on positional grounds, just like a human does! Compared to Stockfish, AlphaZero goes through almost 1000 times fewer variations. It shovels much less "garbage", but calculates the most promising lines deeper and more accurately. That is why it wins even with less time or on weaker hardware. And most importantly, AlphaZero "studied" chess exclusively from "its own experience". It had no a priori information. Its "understanding" is not tainted by the "Shannon assessment". It has its own unique view of chess and its own style of play, which often ignores the material balance (like the young Tal!).
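How the network steers the search can be sketched with the PUCT selection rule used in AlphaZero-style Monte Carlo tree search: at each node, pick the move that maximizes the average value found so far plus a bonus proportional to the network's prior and shrinking with the visit count. The sketch is a simplification under stated assumptions: the `Edge` class and move names are hypothetical, `c_puct = 1.5` is an arbitrary illustrative constant, and the `max(1, total)` guard is my own tweak so that priors decide the very first visit.

```python
import math
from dataclasses import dataclass
from typing import Dict


@dataclass
class Edge:
    prior: float             # P(s, a): move probability suggested by the network
    visits: int = 0          # N(s, a): how many simulations went through this move
    value_sum: float = 0.0   # W(s, a): sum of the values backed up through it

    @property
    def q(self) -> float:
        """Mean value Q(s, a); zero for a move that has not been tried yet."""
        return self.value_sum / self.visits if self.visits else 0.0


def select_move(edges: Dict[str, Edge], c_puct: float = 1.5) -> str:
    """Return the move with the highest Q + U score (PUCT)."""
    total = sum(edge.visits for edge in edges.values())

    def score(edge: Edge) -> float:
        bonus = c_puct * edge.prior * math.sqrt(max(1, total)) / (1 + edge.visits)
        return edge.q + bonus

    return max(edges, key=lambda move: score(edges[move]))


if __name__ == "__main__":
    edges = {
        "e4": Edge(prior=0.50, visits=10, value_sum=5.0),
        "a3": Edge(prior=0.05, visits=10, value_sum=5.0),
        "Nf3": Edge(prior=0.30),  # promising according to the network, not yet explored
    }
    print(select_move(edges))
```

Moves with a low prior, like `a3` here, receive almost no exploration bonus, so the search rarely spends simulations on them. That is the sense in which the tree is pruned on positional grounds.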

What conclusions can we draw from this wonderful experiment?

- It decisively refutes all talk about the emasculation of chess. The very appearance of a player with a style never seen before and total superiority over its competitors shows that the possibilities of the game are far from exhausted.

- . 4 (« ») ! – ( -) . . . AlphaZero () .

- – ? – , , .. , . AlphaZero – – –. ? , , . , . . . . SkyNet will become a little less distant and a little more sinister ... In the meantime, I would be grateful for links, articles and ideas on how to approach this problem.

P.S. Do watch the games, though. I enjoyed them immensely.