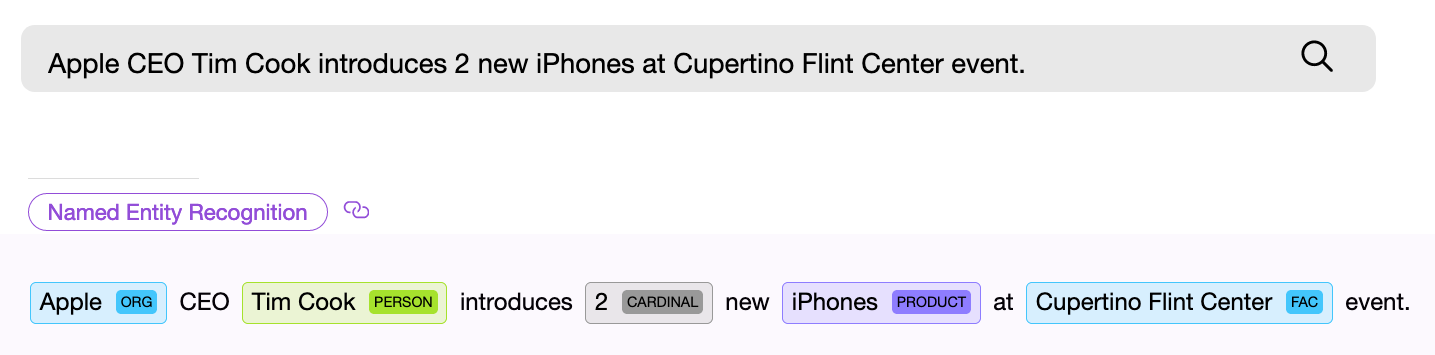

NER (named entity recognition) component, that is, a software component for searching for named entities, must find an object in the text and, if possible, get some information from it. Example - "Give me twenty-two masks." The numerical NER component finds the phrase “twenty-two” in the given text and extracts from these words the numerical normalized value - “ 22 ”, now this value can be used.

NER components can be based on neural networks or work on the basis of rules and any internal models. Generic NER components often use the second method.

Let's consider several ready-made solutions for finding standard entities in the text. In this post, we will focus on free or free with restrictions libraries, and also talk about what has been done in the Apache NlpCraft project within the framework of this issue. The list below is not a detailed and detailed overview, of which there are already a sufficient number on the network, but rather a brief description of the main features, pros and cons of using these libraries.

NER Component Providers

Apache OpenNlp

Apache OpenNlp provides a fairly standard set of NER components for the English language, dealing with dates, times, geography, organizations, numeric percentages, and persons. A small set is also available for other languages (Spanish, Dutch).

Delivery:

Java library. Apache OpenNlp does not ship models with the main project. They are available for download separately.

Pros:

Apache license. Models have been tested in many implementations.

Minuses:

Apparently, the models were removed from the main project for a reason. One gets the impression that work on them is either stopped or is proceeding at a depressingly unhurried pace, since new models or changes in existing ones have not been seen for quite some time. Since Apache OpenNlp users can create and train their own models, it is possible that this task is actually completely left to them.

Stanford Nlp

Stanford NLP is a lively, constantly evolving product of excellent quality and wide range of possibilities. For the English language, added support for recognizing the following entities: person, location, organization, misc, money, number, ordinal, percent, date, time, duration, set. In addition, the built-in Regex NER component allows you to find with a high degree of accuracy such entities as: email, url, city, state_or_province, country, nationality, religion, (job) title, ideology, criminal_charge, cause_of_death, handle. More details on the link . Limited NER support for German, Spanish and Chinese is announced. The quality of recognition can be tested using the online demo .

Supply:

Java library. Models can be downloaded from mavens along with the project.

I have not found anywhere a list or detailed description of NER components for languages other than English. Links 1 , 2 - examples of the process of training your own NER components for different languages are given. Simply put, the ability to use other languages is announced, but you have to tinker.

Pros:

The feeling from working with the project as a whole and with ready-made models is the most positive, the project lives and develops, the recognition quality is good (“good” is a conditional concept, there are metrics that characterize the quality of NER components recognition, but this issue is beyond the scope of the article).

Minuses:

Apart from some chaos with the docs, they are small. To whom it is important, pay attention to the license. The GNU General Public License is different from Apache , so, for example, you cannot add a product with this license to products licensed under Apache, etc.

Google Language API

The Google language API for English supports the following list of entities: person, location, organization, event, work_of_art, consumer_good, other, phone_number, address, date, number, price.

Platform:

REST API, SaaS. Ready-made client libraries over REST are available (Java, C #, Python, Go, etc.).

Pros:

A large set of NER components, development and quality are provided by the well-known Internet giant.

Cons:

Starting from certain volumes, use is paid .

Spacy

This library provides one of the widest sets of entities supported for recognition, see the link for a list of supported ones.

Platform:

Python.

Unfortunately, the lack of personal experience of industrial use does not allow me to add a real description of the pros and cons of this library. In addition, a detailed overview of Python NLP solutions has already been published on habr.

All of the above libraries allow you to train your own models. Also, all of them (except Apache OpenNlp) allow extracting normalized values from found entities, that is, for example, getting the number “173” from the numeric entity “one hundred and seventy three” found in the query.

As we can see, there are many options for solving the problem of finding named entities, the direction of their development is obvious - expanding the list of supported languages and a set of recognized entities, improving the quality of recognition.

Below is a summary of what the Apache NlpCraft project has brought to this already highly developed area.

Additional features provided by NlpCraft

- Native NER components for new entities, improved solutions for some of the existing ones.

- Integration of NER components of all the above libraries within the framework of product use.

- Support for “composite entities”, which gives users an easy way to create new custom components from existing ones.

Now about all this in a little more detail.

Proprietary NER components

The native NER components of Apache NlpCraft are components for recognizing dates, numbers, geography, coordinates, sorting, and matching different entities. Some of them are unique, some are just an improved implementation of existing solutions (recognition accuracy has been increased, additional value fields have been added, etc.).

Integration of existing solutions

All of the above solutions are integrated for use with Apache NlpCraft.

When working with a project, the user just needs to connect the required module and specify in the configuration which NER components should be used when searching for entities of a particular model.

Below is an example of a configuration that uses four different NER components from two providers when searching through the text:

"enabledBuiltInTokens": [

"nlpcraft:num",

"nlpcraft:coordinate",

"google:organization",

"google:phone_number"

]

Read more about using Apache NlpCraft here . A valid Google developer account is required to use the Google Language API.

Composite entity support

Support for composite entities is the most interesting of the above features, let's dwell on it in a little more detail.

A composite entity is an entity defined on the basis of another. Let's look at an example. Suppose you are developing an intent-based NLP control system (see Alexa , Google Dialogflow , Alice , Apache Nlpraft , etc.), and your model works with geography, but only for the United States. You can take any geography search component like “ nlpcraft: city ” and use it directly.

Further, when the intent is triggered, in the corresponding function (callback), you must check the value of the field " country”, And if it does not meet the required conditions, terminate the function, preventing false positives. Next, you should go back to matching and try to choose another, more appropriate function.

What's wrong with this approach:

- You make it much more difficult to work with called functions by transferring control from them to the main worker thread and back. In addition, it is worth considering that not all dialogue systems have such a control transfer functionality.

- You smear the matching logic between the intent and the executable method code.

Ok ... You can create your own NER component from scratch to find American cities, but this task is not solved in five minutes.

Let's try it differently. You can complicate the intent (in those systems where possible) and search for cities additionally filtered by country. But, I repeat, not all systems provide the possibility of complex filtering by element fields, in addition, you complicate intents, which should be as clear and simple as possible, especially if there are many of them in the project.

Apache NlpCraft provides a mechanism for defining native NER components based on existing ones. Below is a configuration example (full DSL syntax is available here , an example of creating elements is here ):

"elements": [

{

"id": "custom:city:usa",

"description": "Wrapper for USA cities",

"synonyms": [

"^^id == 'nlpcraft:city' && lowercase(~city:country) == 'usa')^^"

]

}

]

In this example, we describe a new named entity "American city" - " custom: city: usa ", based on the already existing " nlpcraft: city " filtered by a certain criterion.

Now you can create intents based on the created new element, and cities outside the United States encountered in the text will not cause unwanted triggering of your intents.

Another example:

"macros": [

{

"name": "<AIRPORT>",

"macro": "{airport|aerodrome|airdrome|air station}"

}

],

"elements": [

{

"id": "custom:airport:usa",

"description": "Wrapper for USA airports",

"synonyms": [

"<AIRPORT> {of|for|*} ^^id == 'nlpcraft:city' &&

lowercase(~city:country) == 'usa')^^"

]

}

]

In this example, we have defined a named entity “city airport in the United States” - “ custom: airport: usa ”. When defining this element, we not only filtered the cities according to their state affiliation, but also set an additional rule, according to which the name of the city must be preceded by some synonym that defines the concept of “airport”. (Read more about creating synonyms of elements via macros - here ).

Composite elements can be defined with any degree of nesting, that is, if necessary, you can design new elements based on the newly created “ custom: airport: usa ”. Also note that all the normalized values of the parent entities, in this case the base element “ nlpcraft: city”Are also available in the“ custom: airport: usa ” element , and can be used in the function body of the triggered intent.

Of course, “building blocks” can be defined not only for all supported standard components from OpenNlp, Stanford, Google, Spacy and NlpCraft, but also for custom NER components, expanding their capabilities and allowing you to reuse existing software developments.

Please note, in fact, you do not produce new components for each new task, but simply configure them or "mix" their functionality into your own elements.

Thus, using “composite entities”, a developer can:

- Significantly simplify the logic for constructing intents by partially transferring it to reusable building blocks.

- Get NER components with new behavior using configuration changes without model training or coding.

- Reuse ready-made solutions with the expected quality, relying on existing tests or metrics.

Conclusion

I hope that a brief overview of the pros and cons of existing NER components will be useful to readers, and understanding how Apache NlpCraft can significantly expand their capabilities and adapt existing solutions for new tasks will speed up the development of your projects.