Chatbots are now widespread across many business areas. Banks, for example, use them to reduce the load on their contact centers by instantly answering common customer questions and providing reference information. Chatbots are convenient for customers too: typing a question in a chat is much easier than calling a contact center and waiting for an answer.

Chatbots have also proved themselves in other areas. In medicine, they can interview a patient, pass the symptoms on to a specialist, and schedule a doctor's appointment for a diagnosis. In logistics companies, chatbots help customers agree on a delivery date, change the address, and choose a convenient pick-up point. In large online stores, chatbots have partly taken over order support, and in car-sharing services they perform up to 90% of the operator's tasks. However, chatbots cannot yet handle complaints: negative feedback and disputes still fall on the shoulders of operators and specialists.

Thus, most growing businesses already actively use chatbots to work with customers. However, the benefit of implementing a chatbot varies: in some companies the level of automation reaches 90%, while in others it is only 30-40%. What does it depend on? How good is this figure for a business? Are there ways to increase the level of chatbot automation? This article addresses these questions.

Benchmarking

Today, almost every business area has its own competitive environment, and many companies take similar business approaches. Therefore, if competing companies use chatbots, it makes sense to compare them. Benchmarking is a good tool for such a comparison.

In our case, chatbot benchmarking means covert research that compares the functionality of competitors' chatbots with that of your own chatbot. Let's consider a case using a bank chatbot as an example.

Suppose a bank has developed a chatbot to optimize the operation of its contact center and reduce the cost of maintaining it. To conduct benchmarking, the bank needs to analyze other banks and identify the most functional chatbots among its competitors.

The first step is to compile a list of test questions (at least 50, divided into several topics):

- Questions about banking services, for example: "What are your deposit rates?", "How do I reissue a card?", etc.

- Reference information, for example: "What is the current exchange rate?", "How do I get a loan payment holiday?", etc.

- Level of client understanding (the bot's resistance to typos, errors, and colloquial speech), for example: "I lsot my card, waht do I do?", "Top up the mobile", etc.

- Conversation on abstract topics, for example: "Tell a joke", "What to do during self-isolation?", etc.

Note: these topics are given as examples and can be expanded or changed.

Ask these questions to your own chatbot and to your competitors' chatbots. Each question has three possible outcomes, and each outcome is assigned a score:

- the bot did not recognize the customer's question (0 points);

- the bot recognized the client's question, but only after clarifying questions (0.5 points);

- the bot recognized the question on the first try (1 point).

If the chatbot transferred the client to the operator, then the question is also considered unrecognized (0 points).
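The scoring rules above can be written down as a small lookup. The following is only a minimal sketch: the names `Outcome`, `POINTS`, `score`, and `total_score` are illustrative and do not come from any tool mentioned in the article.

```python
from enum import Enum


class Outcome(Enum):
    """Possible results of asking a chatbot one test question."""
    NOT_RECOGNIZED = "not recognized"
    RECOGNIZED_AFTER_CLARIFICATION = "recognized after clarifying questions"
    RECOGNIZED_FIRST_TRY = "recognized on the first try"
    TRANSFERRED_TO_OPERATOR = "transferred to an operator"


# Points per outcome, as listed above; a transfer counts as unrecognized.
POINTS = {
    Outcome.NOT_RECOGNIZED: 0.0,
    Outcome.RECOGNIZED_AFTER_CLARIFICATION: 0.5,
    Outcome.RECOGNIZED_FIRST_TRY: 1.0,
    Outcome.TRANSFERRED_TO_OPERATOR: 0.0,
}


def score(outcome: Outcome) -> float:
    """Return the points for a single test question."""
    return POINTS[outcome]


def total_score(outcomes) -> float:
    """Sum the points over all questions asked to one chatbot."""
    return sum(score(o) for o in outcomes)
```

Keeping the operator transfer as a separate outcome, rather than folding it into "not recognized", makes it possible to count transfers later without changing the score.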

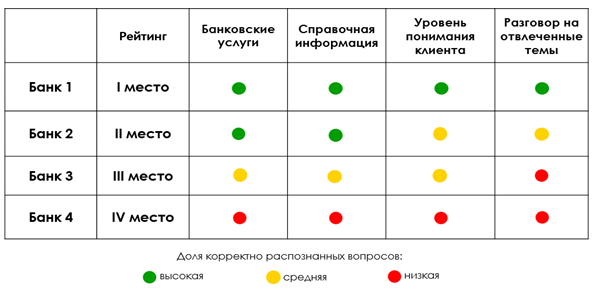

Next, the number of points scored by each chatbot is summed up, after which the share of correctly recognized questions on each topic is calculated (low - less than 40%, medium - from 40 to 80%, high - more than 80%), and the final rating is compiled. The results can be presented in the form of a table:
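As a rough illustration of this aggregation, the sketch below computes the share of recognized questions per topic, maps it to a level, and ranks the bots by total points. The bot names and scores are made-up placeholders, not benchmarking results, and the helper names are hypothetical. The article does not say which level a share of exactly 40% or 80% falls into; the sketch treats both as medium.

```python
from collections import defaultdict

# Hypothetical per-question scores: (bot, topic, points).
results = [
    ("Bot A", "Banking services", 1.0),
    ("Bot A", "Banking services", 0.5),
    ("Bot A", "Abstract topics", 0.0),
    ("Bot A", "Abstract topics", 0.5),
    ("Bot B", "Banking services", 1.0),
    ("Bot B", "Banking services", 1.0),
    ("Bot B", "Abstract topics", 1.0),
    ("Bot B", "Abstract topics", 0.5),
]


def level(share: float) -> str:
    """Map a share of recognized questions to low / medium / high."""
    if share < 0.4:
        return "low"
    if share <= 0.8:
        return "medium"
    return "high"


def summarize(results):
    """Return {(bot, topic): (share, level)} for every bot and topic."""
    totals = defaultdict(lambda: [0.0, 0])  # [points, number of questions]
    for bot, topic, points in results:
        totals[(bot, topic)][0] += points
        totals[(bot, topic)][1] += 1
    return {key: (pts / n, level(pts / n)) for key, (pts, n) in totals.items()}


def ranking(results):
    """Return bot names ordered by total points, best first."""
    total = defaultdict(float)
    for bot, _topic, points in results:
        total[bot] += points
    return sorted(total, key=total.get, reverse=True)


summary = summarize(results)
for (bot, topic), (share, lvl) in sorted(summary.items()):
    print(f"{bot:6} | {topic:17} | {share:4.0%} | {lvl}")
print("Ranking:", ranking(results))
```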

Suppose that, according to the benchmarking results, the bank's chatbot took second place. What conclusions can be drawn? The result is not the best, but not the worst either. The table reveals its weaker sides: first, the algorithms need to be improved to recognize clients' questions correctly (the chatbot does not always understand questions containing errors and typos); second, it does not always support dialogue on abstract topics. A more detailed picture emerges from a comparison with the first-ranked chatbot.

The chatbot that took third place performed worse: first, its knowledge base on banking services and reference information requires a serious revision; second, it is poorly trained for dialogue with a client on abstract topics. Clearly, the level of automation of such a chatbot is lower than that of the competitors in first and second place.

Thus, the benchmarking identified the strengths and weaknesses of the chatbots and compared the competing chatbots with each other. The next step is to pinpoint the specific problem areas in your own chatbot. How can this be achieved? Let's consider some approaches based on data analysis: AutoML, Process Mining, and the DE approach.

AutoML

Artificial intelligence has already penetrated many areas of business and continues to do so, which inevitably increases the demand for data science skills. However, the demand for such specialists is growing faster than their skill level. Developing machine learning models takes a lot of resources: it requires not only extensive knowledge from a specialist but also a significant amount of time for building models and comparing them. To ease the pressure created by this scarcity and to shorten model development time, many companies have begun to create algorithms that automate the work of data scientists. Such algorithms are called AutoML.

AutoML, or automated machine learning, helps data scientists automate the time-consuming and repetitive tasks of developing machine learning models while maintaining model quality. Although AutoML can save time, it is effective only when the problem it solves is persistent and repetitive. Under these conditions, AutoML models perform well and show acceptable results.

Now let's use AutoML to solve our problem: identifying problem areas in the work of chatbots. A chatbot is a robot, or a specialized program, that can extract keywords from a message and search its database for a suitable answer. Finding the right answer is one thing; maintaining a logical dialogue that imitates communication with a real person is another. How well the chatbot does this depends on how well its scenarios are scripted.

Imagine a situation in which a client asks a question and the chatbot gives a strange or illogical answer, or an answer on a different topic altogether. The client is not satisfied: at best they write that they did not understand the answer, at worst they send negative messages to the chatbot. The task of AutoML is therefore to pick out the negative dialogues from the total (based on the chatbot logs exported from the database), after which we need to determine which scenarios these dialogues belong to. The result will be the basis for refining these scenarios.

First, let's label the clients' dialogues with the chatbot. In each dialogue, we keep only the messages from clients. If a client's message expresses negativity toward the chatbot or a failure to understand its answers, we set flag = 1; otherwise, flag = 0:

Marking messages from clients
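The labeling itself is done by people, but the data preparation around it can be sketched. The example below is only an illustration under assumed field names (`message_id`, `dialog_id`, `sender`, `text`); the real log schema is not shown in the article, and the messages are invented.

```python
def client_messages(log):
    """Keep only the messages written by clients."""
    return [m for m in log if m["sender"] == "client"]


def attach_flags(messages, flags):
    """Add the annotator's flag: 1 = negative or confused, 0 = otherwise."""
    return [{**m, "flag": flags[m["message_id"]]} for m in messages]


# Hypothetical fragment of a chatbot log.
log = [
    {"message_id": 1, "dialog_id": "d1", "sender": "client",
     "text": "How do I reissue my card?"},
    {"message_id": 2, "dialog_id": "d1", "sender": "bot",
     "text": "Here are our deposit rates."},
    {"message_id": 3, "dialog_id": "d1", "sender": "client",
     "text": "That is not what I asked!"},
]

# Flags assigned manually by an annotator, keyed by message_id.
flags = {1: 0, 3: 1}

labeled = attach_flags(client_messages(log), flags)
```

Looking up the flag with `flags[...]` instead of a default value makes an unlabeled client message fail loudly, so gaps in the annotation are noticed before training.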

Next, we create the AutoML model, train it on the labeled data, and save it (all the necessary model parameters are also passed, but they are not shown in the example below).

automl = saa.AutoML

res_df, feat_imp = automl.train('test.csv', 'test_preds.csv', 'classification', cache_dir = 'tmp_dir', use_ids = False)

automl.save('prec')

We load the resulting model and use it to predict the target variable for the test file:

automl = saa.AutoML

automl.load('text_model.pkl')

preds_df, score, res_df = automl.predict('test.csv', 'test_preds.csv', cache_dir = 'tmp_dir')

preds_df.to_csv('preds.csv', sep=',', index=False)

Next, we evaluate the resulting model:

import pandas as pd

from IPython.display import clear_output

test_df = pd.read_csv('test.csv')

threshold = 0.5

am_test = (preds_df['prediction'] >= threshold).astype(int)  # 1 = negative dialogue, 0 = otherwise

clear_output()

print_result(test_df[target_col], am_test)  # print_result and target_col are defined elsewhere and are not shown here

The resulting confusion matrix:

While building the model, we tried to minimize the type I error (classifying a good dialogue as a bad one), so we settled on a classifier with an F1 score of 0.66. With the trained model, we identified 65 thousand "bad" sessions, which in turn made it possible to identify 7 insufficiently effective scenarios.
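To make the evaluation step concrete, here is a minimal, library-free sketch of how predicted probabilities are thresholded and how the confusion matrix and F1 score follow from them. The labels and probabilities are invented toy values, not the article's data, and the function names are illustrative. A type I error in the article's sense corresponds to a false positive here.

```python
def binarize(probabilities, threshold=0.5):
    """Turn predicted probabilities into 0/1 labels."""
    return [1 if p >= threshold else 0 for p in probabilities]


def confusion_matrix(y_true, y_pred):
    """Return (tp, fp, fn, tn), where 1 means a 'bad' dialogue."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # good dialogue flagged as bad
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    return tp, fp, fn, tn


def f1_score(tp, fp, fn):
    """Harmonic mean of precision and recall (0.0 if undefined)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Toy data for illustration only.
y_true = [1, 0, 1, 1, 0, 0]
probabilities = [0.9, 0.6, 0.4, 0.8, 0.2, 0.1]

y_pred = binarize(probabilities)
tp, fp, fn, tn = confusion_matrix(y_true, y_pred)
f1 = f1_score(tp, fp, fn)
```

Raising the threshold above 0.5 would typically reduce false positives at the cost of more missed bad dialogues, which is the trade-off behind choosing a particular F1 value.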

Process Mining

To identify problematic scenarios, we can also apply tools based on Process Mining, the general name for a number of methods and approaches designed to analyze and improve processes in information systems or business processes by studying event logs.

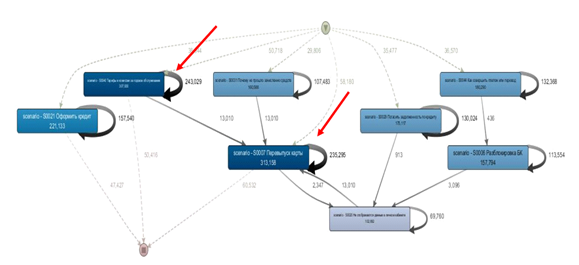

Using this method, we were able to identify 7 scenarios that are involved in long and ineffective dialogues:

18% of dialogues have more than 4 messages from the chatbot.

Each element in the graph above is a scenario. As the figure shows, the scenarios loop back on themselves, and the bold looping arrows indicate rather long dialogues between the client and the chatbot.
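A graph of this kind can be approximated with a directly-follows count over the event log. The sketch below is a simplified stand-in for a Process Mining tool, not the tool used in the article: the event log is invented, the scenario names are hypothetical, and each scenario step is assumed to correspond to one chatbot message.

```python
from collections import Counter

# Hypothetical event log: dialogue id -> ordered scenarios triggered by the bot.
dialogs = {
    "d1": ["greeting", "card_reissue", "card_reissue", "card_reissue",
           "card_reissue", "card_reissue"],
    "d2": ["greeting", "exchange_rate"],
    "d3": ["greeting", "deposit_rates", "deposit_rates"],
    "d4": ["exchange_rate"],
}


def directly_follows(dialogs):
    """Count how often scenario b immediately follows scenario a."""
    edges = Counter()
    for steps in dialogs.values():
        edges.update(zip(steps, steps[1:]))
    return edges


def self_loops(edges):
    """Return scenarios that follow themselves, with loop counts."""
    return {a: n for (a, b), n in edges.items() if a == b}


def share_of_long_dialogs(dialogs, max_bot_messages=4):
    """Share of dialogues with more than max_bot_messages bot messages."""
    long_count = sum(1 for steps in dialogs.values()
                     if len(steps) > max_bot_messages)
    return long_count / len(dialogs)


edges = directly_follows(dialogs)
loops = self_loops(edges)
long_share = share_of_long_dialogs(dialogs)
```

A high self-loop count for a scenario plays the role of the bold looping arrows in the figure: the chatbot keeps returning to the same scenario without moving the dialogue forward.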

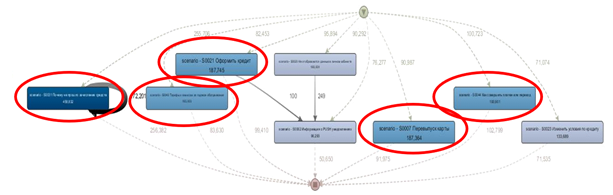

Next, to find bad scenarios, we prepared a separate dataset and built a graph based on it. For this, we kept only the dialogues without a transfer to an operator and then selected those in which the client's question remained unresolved. As a result, we identified 5 scenarios for improvement, in which the chatbot does not solve the client's question.

The identified scenarios occur in about 15% of all dialogues.
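The filtering and the coverage figure can be sketched as follows. This is an assumption-laden illustration: the fields `transferred_to_operator` and `resolved` are hypothetical (the article does not say how an unresolved question is detected), and the dialogues are invented.

```python
# Hypothetical dialogue records.
dialogs = [
    {"id": "d1", "scenarios": ["card_block"],
     "transferred_to_operator": False, "resolved": False},
    {"id": "d2", "scenarios": ["exchange_rate"],
     "transferred_to_operator": False, "resolved": True},
    {"id": "d3", "scenarios": ["card_block", "loan_payment"],
     "transferred_to_operator": True, "resolved": False},
    {"id": "d4", "scenarios": ["deposit_rates"],
     "transferred_to_operator": False, "resolved": True},
]


def unresolved_without_operator(dialogs):
    """Dialogues the bot handled alone but did not resolve."""
    return [d for d in dialogs
            if not d["transferred_to_operator"] and not d["resolved"]]


def share_with_scenarios(dialogs, scenarios):
    """Share of all dialogues that contain at least one given scenario."""
    wanted = set(scenarios)
    hits = sum(1 for d in dialogs if wanted & set(d["scenarios"]))
    return hits / len(dialogs)


candidates = unresolved_without_operator(dialogs)
problem_scenarios = {s for d in candidates for s in d["scenarios"]}
coverage = share_with_scenarios(dialogs, problem_scenarios)
```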

DE (Data Engineering) approach

A simple analytical approach was also used to search for problem scenarios: we selected the dialogues in which clients gave a feedback rating of 1 to 7 points, and then picked the most common scenarios in this sample.

Thus, by combining the approaches based on AutoML, Process Mining, and DE, we identified the problem areas in the company's chatbot that require improvement.
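This selection amounts to a filter followed by a frequency count. The sketch below assumes one scenario and one optional rating per dialogue; the field names and data are hypothetical, and the rating bounds are parameters because the article does not state the full rating scale.

```python
from collections import Counter

# Hypothetical dialogues with client feedback (None = no rating given).
dialogs = [
    {"scenario": "card_block", "rating": 3},
    {"scenario": "card_block", "rating": 7},
    {"scenario": "exchange_rate", "rating": 10},
    {"scenario": "loan_payment", "rating": 5},
    {"scenario": "card_block", "rating": None},
    {"scenario": "exchange_rate", "rating": 9},
]


def low_rated(dialogs, lowest=1, highest=7):
    """Keep dialogues whose rating lies between lowest and highest inclusive."""
    return [d for d in dialogs
            if d["rating"] is not None and lowest <= d["rating"] <= highest]


def top_scenarios(dialogs, n=3):
    """Return the n most frequent scenarios as (scenario, count) pairs."""
    return Counter(d["scenario"] for d in dialogs).most_common(n)


sample = low_rated(dialogs)
top = top_scenarios(sample, n=2)
```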

Now the chatbot is getting better!