In just 20 years, software development has moved from architectural monoliths with a single database and centralized state to microservices, where everything is distributed across numerous containers, servers, data centers, and even continents. Distribution makes scaling easier, but it also introduces entirely new challenges, many of which were previously solved with monoliths.

Let's take a quick tour of the history of networked applications to figure out how we arrived at today. And then let's talk about the stateful execution model used in Temporal.and how it solves the problems of service-oriented architectures (SOA). I may be biased because I run the grocery department at Temporal, but I believe that this approach is the future.

A short history lesson

Twenty years ago, developers almost always created monolithic applications. It is a simple and consistent model, similar to how you program in your local environment. By their very nature, monoliths depend on a single database, that is, all states are centralized. Within a single transaction, a monolith can change any of its states, that is, it gives a binary result: whether it worked or not. There is no room for inconsistency. That is, the wonderful thing about the monolith is that there will be no inconsistent state due to a failed transaction. And this means that developers do not need to write code, all the time guessing about the state of different elements.

Twenty years ago, developers almost always created monolithic applications. It is a simple and consistent model, similar to how you program in your local environment. By their very nature, monoliths depend on a single database, that is, all states are centralized. Within a single transaction, a monolith can change any of its states, that is, it gives a binary result: whether it worked or not. There is no room for inconsistency. That is, the wonderful thing about the monolith is that there will be no inconsistent state due to a failed transaction. And this means that developers do not need to write code, all the time guessing about the state of different elements.

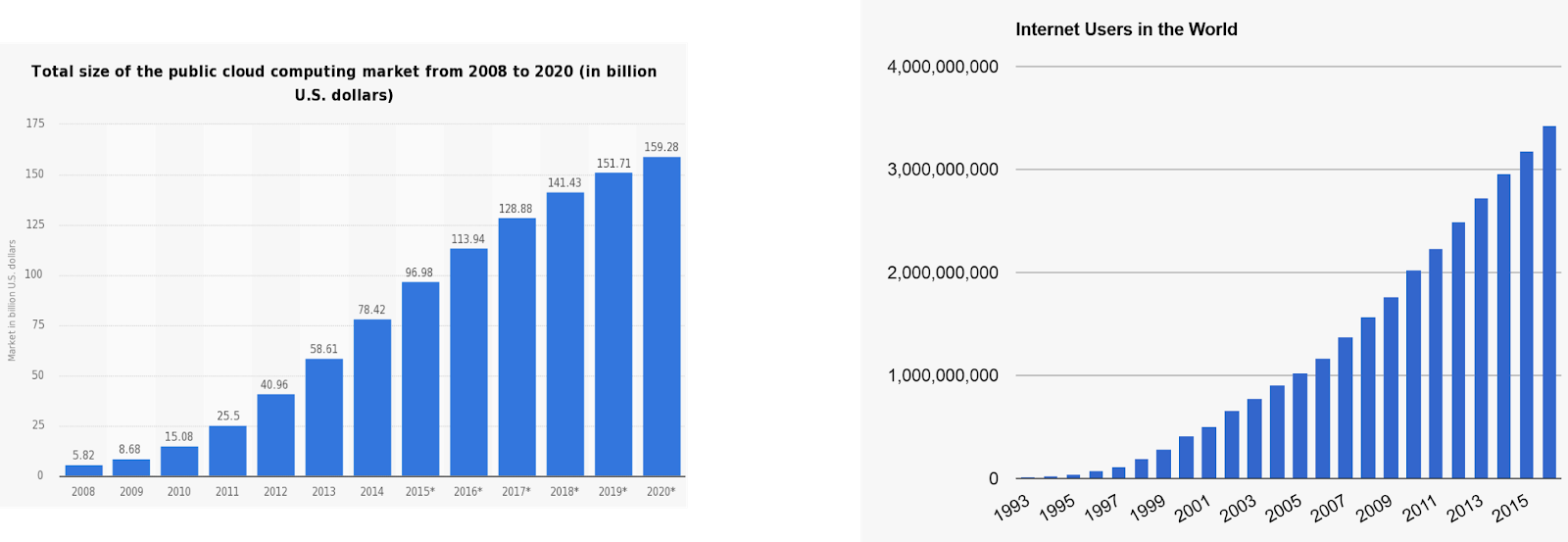

For a long time, monoliths made sense. There weren't many connected users yet, so the software scaling requirements were minimal. Even the largest software giants operated systems that were paltry by modern standards. Only a handful of companies like Amazon and Google used large-scale solutions, but those were the exceptions to the rule.

People as software

Over the past 20 years, software requirements have been constantly growing. Today, applications should work on the global market from day one. Companies like Twitter and Facebook have made 24/7 online a prerequisite. Apps no longer provide anything, they have become a user experience themselves. Every company today must have software products. "Reliability" and "availability" are no longer properties, but requirements.

Unfortunately, the monoliths began to fall apart when "scalability" and "availability" were added to the requirements. Developers and businesses alike needed to find ways to keep pace with explosive global growth and demanding user expectations. I had to look for alternative architectures that reduce the emerging problems associated with scaling.

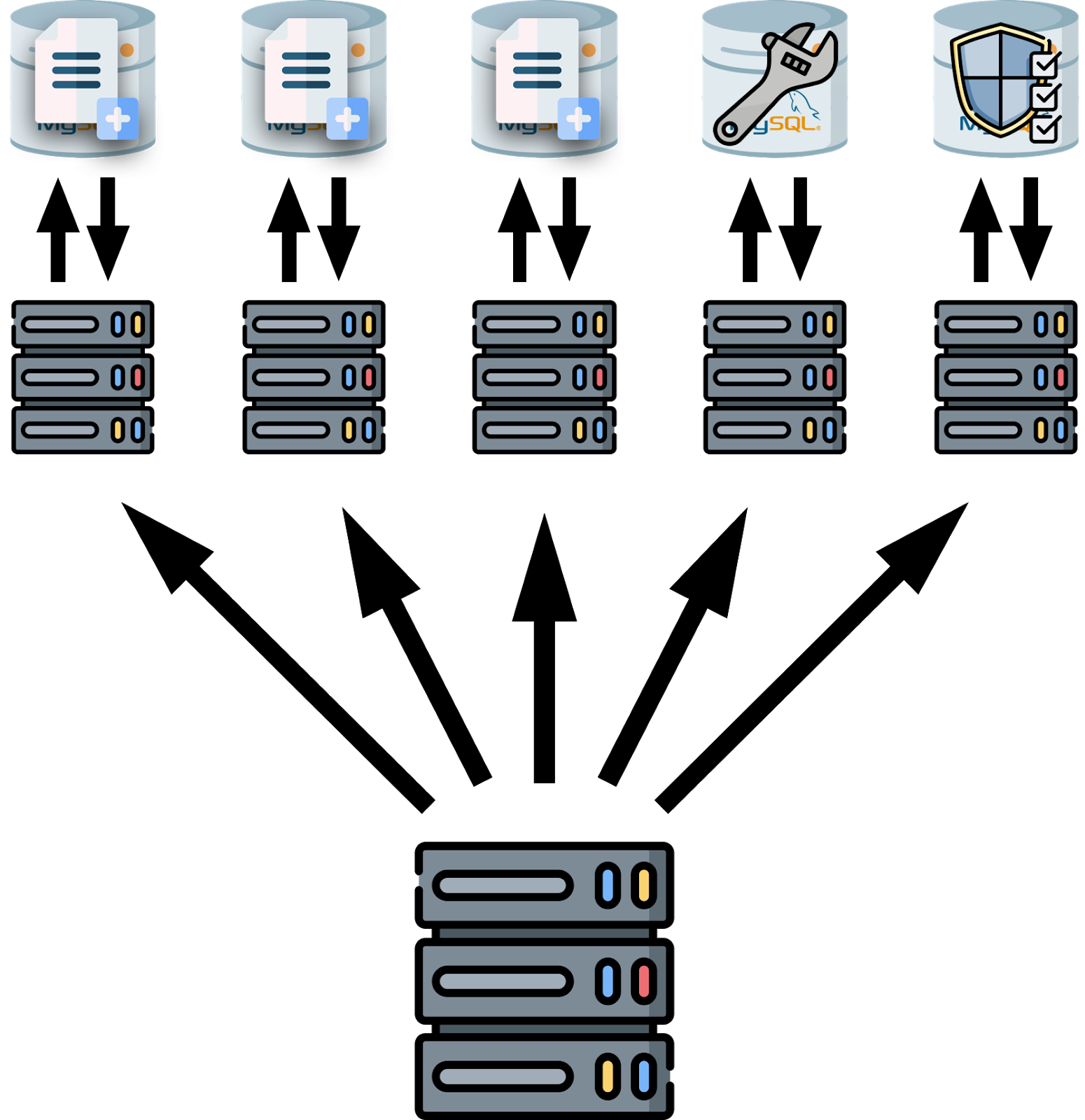

Microservices (well, service oriented architectures) were the answer. Initially, they seemed to be a great solution because they allowed you to split applications into relatively self-contained modules that can be independently scaled. And since each microservice maintained its own state, applications were no longer limited to the capacity of a single machine! Developers were finally able to create programs that could scale with the growing number of connections. Microservices also gave teams and companies flexibility in their work due to transparency in responsibility and separation of architectures.

There is no free cheese

While microservices have solved the scalability and availability issues that have hampered software growth, things have not been cloudless. Developers began to realize that microservices have serious flaws.

Monoliths typically have one database and one application server. And since the monolith cannot be split, there are only two ways to scale:

- Vertical : Upgrading hardware to increase throughput or capacity. This scaling can be effective, but it is expensive. And it certainly won't fix the problem forever if your application needs to keep growing. And if you expand enough, you won't end up with enough equipment to upgrade.

- : , . , .

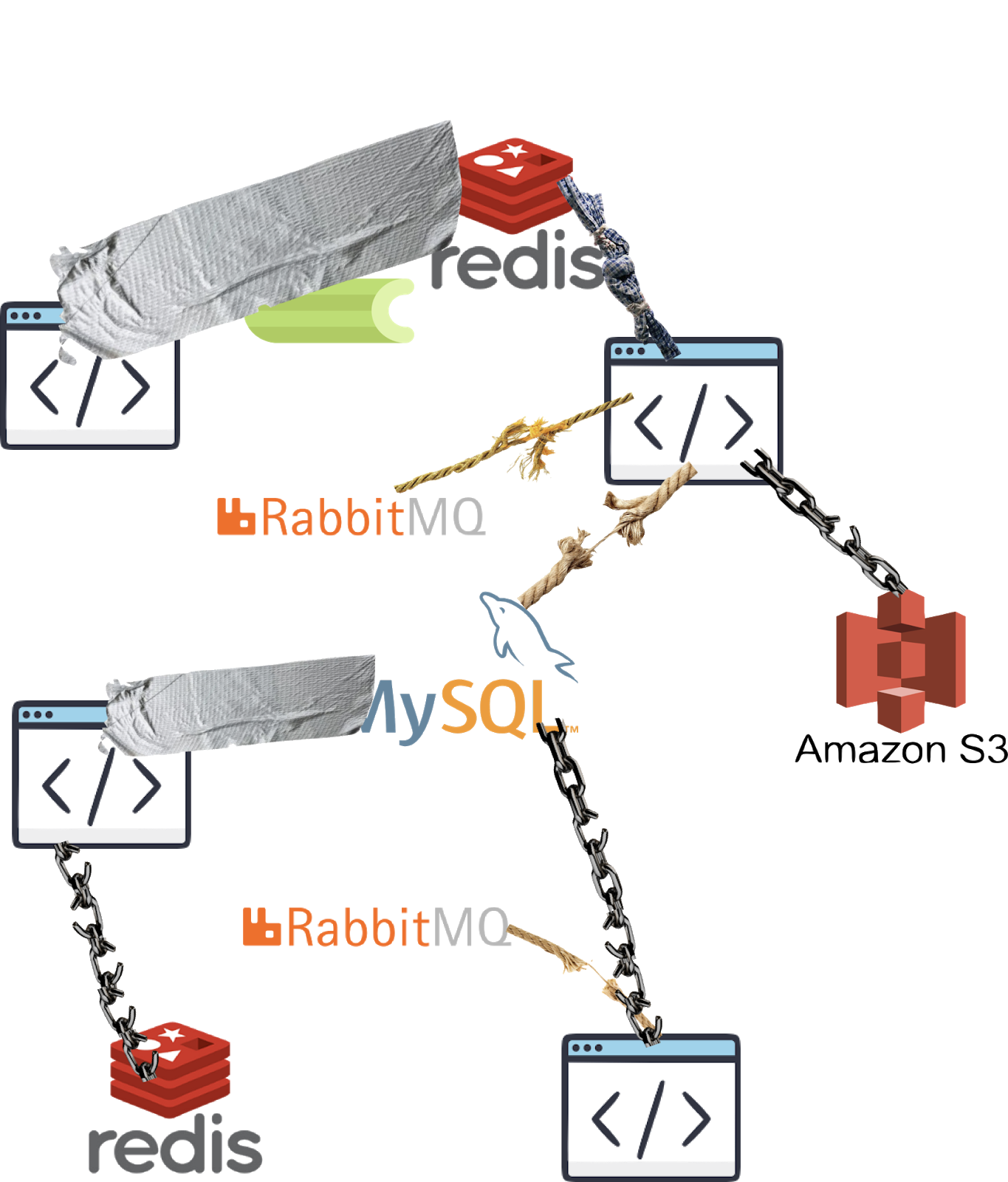

It's different with microservices, their value lies in the ability to have many "types" of databases, queues and other services that are scaled and managed independently of each other. However, the first problem that began to be noticed when switching to microservices was precisely the fact that now you have to take care of a bunch of all kinds of servers and databases.

For a long time, everything was left to chance, developers and operators got out on their own. Infrastructure management problems posed by microservices are difficult to tackle, at best degrading application reliability.

However, supply arises in response to demand. The more microservices spread, the more developers are motivated to solve infrastructure problems. Slowly but surely, tools began to emerge, and technologies like Docker, Kubernetes, and AWS Lambda filled the gap. They made the microservice architecture very easy to operate. Instead of writing their own code to orchestrate with containers and resources, developers can rely on pre-built tools. In 2020, we have finally reached the milestone where the availability of our infrastructure no longer interferes with the reliability of our applications. Perfectly!

Of course, we are not yet living in the utopia of perfectly stable software. Infrastructure is no longer the source of application insecurity; application code has taken its place.

Another problem with microservices

In monoliths, developers write code that changes states in a binary way: either something happens or it doesn't. And with microservices, the state is distributed across different servers. To change the state of an application, multiple databases must be updated at the same time. The chances are that one database will update successfully and others will crash, leaving you with an inconsistent intermediate state. But since services were the only solution to the problem of horizontal scaling, the developers had no other option.

A fundamental problem with state distributed across services is that each call to an external service will have a random outcome in terms of availability. Of course, developers can ignore the problem in their code and assume every call to an external dependency is always successful. But then some dependency can put the application down without warning. Therefore, the developers had to adapt their code from the era of monoliths to add checks for the failure of operations in the middle of transactions. The following shows continually retrieving the last recorded state from the dedicated myDB store to avoid race conditions. Unfortunately, even this implementation does not help. If the state of the account changes without updating myDB, inconsistencies may occur.

public void transferWithoutTemporal(

String fromId,

String toId,

String referenceId,

double amount,

) {

boolean withdrawDonePreviously = myDB.getWithdrawState(referenceId);

if (!withdrawDonePreviously) {

account.withdraw(fromAccountId, referenceId, amount);

myDB.setWithdrawn(referenceId);

}

boolean depositDonePreviously = myDB.getDepositState(referenceId);

if (!depositDonePreviously) {

account.deposit(toAccountId, referenceId, amount);

myDB.setDeposited(referenceId);

}

}

Alas, it is impossible to write code without errors. And the more complex the code, the more likely bugs will appear. As you might expect, the code that works with the "middleware" is not only complex but also convoluted. At least some reliability is better than no reliability, so the developers had to write such initially buggy code to maintain the user experience. It costs us time and effort, and employers a lot of money. While microservices scale well, they come at a price of developer fun and productivity, and application reliability.

Millions of developers spend time every day re-inventing one of the most re-invented wheels - the reliability of boilerplate. Modern approaches to working with microservices simply do not reflect the requirements for the reliability and scalability of modern applications.

Temporal

Now we got to our solution. It is not endorsed by Stack Overflow, and we do not claim to be perfect. We just want to share our ideas and hear your opinion. What better place to get feedback on improving your code than Stack?

Until today, there has been no solution that allows you to use microservices without solving the problems described above. You can test and emulate crash states, write code taking crashes into account, but these problems still arise. We believe Temporal solves them. It is an open-source (MIT no-nonsense) stateful environment for microservices orchestration.

Temporal has two main components: a stateful backend that runs on the database of your choice, and a client framework in one of the supported languages. Applications are built using a client framework and regular legacy code that automatically saves state changes in the backend as they run. You can use the same dependencies, libraries, and build chains as you would when building any other application. To be honest, the backend is highly distributed, so it's not like J2EE 2.0. In fact, it is the distribution of the backend that allows for almost infinite horizontal scaling. Temporal brings consistency, simplicity, and reliability to the application layer, as did the Docker infrastructure, Kubernetes, and serverless architecture.

Temporal provides a number of highly reliable mechanisms for microservices orchestration. But the most important thing is to preserve the state. This function uses event emitting to automatically save any stateful changes to a running application. That is, if the computer running Temporal crashes, the code will automatically jump to another computer as if nothing had happened. This even applies to local variables, threads of execution, and other application-specific states.

Let me give you an analogy. As a developer, you probably rely today on SVN versioning (that's OG Git) to keep track of the changes you make to your code. SVN simply saves new files and then links to existing files to avoid duplication. Temporal is something like SVN (rough analogy) for stateful history of running applications. When your code changes the state of your application, Temporal automatically saves that change (not the result) without error. That is, Temporal not only restores the crashed application, it also rolls it back, forks and does much more. So developers no longer need to build applications with an expectation that the server might crash.

It's like switching from manually saving documents (Ctrl + S) after each entered character to Google Docs automatic cloud saving. Not in the sense that you don't manually save anything anymore, it's just that there is no longer any one machine associated with this document. Statefulness means that developers can write a lot less boring boilerplate code that had to be written due to microservices. In addition, you no longer need special infrastructure - separate queues, caches and databases. This makes it easier to maintain and add new features. It also makes it much easier to get newbies up-to-date because they do not need to understand the confusing and specific state management code.

State retention is also implemented in the form of "persistent timers". This is a fail-safe mechanism that can be used with a command

Workflow.sleep. It works exactly the same as sleep. However, Workflow.sleepit can be safely euthanized for any length of time. Many Temporal users have been sleeping for weeks, even years. This is accomplished by storing long-running timers in the Temporal store and keeping track of the code to wake up. Again, even if the server crashes (or you just turned it off), the code will go to the available machine when the timer expires. Sleep processes do not consume resources, you can have millions of them with negligible overhead. It might sound too abstract, so here's an example of a working Temporal code:

public class SubscriptionWorkflowImpl implements SubscriptionWorkflow {

private final SubscriptionActivities activities =

Workflow.newActivityStub(SubscriptionActivities.class);

public void execute(String customerId) {

activities.onboardToFreeTrial(customerId);

try {

Workflow.sleep(Duration.ofDays(180));

activities.upgradeFromTrialToPaid(customerId);

while (true) {

Workflow.sleep(Duration.ofDays(30));

activities.chargeMonthlyFee(customerId);

}

} catch (CancellationException e) {

activities.processSubscriptionCancellation(customerId);

}

}

}

In addition to persisting state, Temporal offers a set of mechanisms for building robust applications. Activity functions are called from workflows, but the code running inside the activity is not stateful. Although they do not save their state, activities contain automatic retries, timeouts, and heartbeats. Activities are very useful for encapsulating code that can fail. Let's say your application uses a banking API that is often not available. For legacy software, you need to wrap all the code that calls this API with try / catch statements, retry logic, and timeouts. But if you call the banking API from an activity, then all these functions are provided out of the box: if the call fails, the activity will be automatically retried. It's all greatbut sometimes you yourself own an unreliable service and want to protect it from DDoS. Therefore, activity calls also support timeouts, backed up by long timers. That is, the pauses between repetitions of activities can reach hours, days or weeks. This is especially useful for code that needs to run successfully, but you're not sure how fast it needs to happen.

This video explains the programming model in Temporal in two minutes:

Another strength of Temporal is the observability of the running application. The Observation API provides a SQL-like interface for querying metadata from any workflow (executable or not). You can also define and update your own metadata values right within the process. The observation API is very useful for Temporal operators and developers, especially when debugging during development. Monitoring even supports batch actions on query results. For example, you can send a kill signal to all worker processes that match a request with a creation time> yesterday. Temporal supports a synchronous fetch feature that allows you to pull the values of local variables from running instances. It's like a debugger from your IDE has been working with production applications. For example, this is how you can get the value

greeting in a running instance:

public static class GreetingWorkflowImpl implements GreetingWorkflow {

private String greeting;

@Override

public void createGreeting(String name) {

greeting = "Hello " + name + "!";

Workflow.sleep(Duration.ofSeconds(2));

greeting = "Bye " + name + "!";

}

@Override

public String queryGreeting() {

return greeting;

}

}

Conclusion

Microservices are great, and they come at the price of productivity and reliability that developers and businesses pay. Temporal is designed to solve this problem by providing an environment that pays microservices for developers. Out-of-the-box statefulness, auto-failures, and surveillance are just a few of the features Temporal has that make microservices development smart.