What is happening in the field of nominally lossless networks

Over the years of rapid development in data transmission media, engineers have run into many phenomena that hinder building storage networks and high-performance computing clusters on Ethernet: packet loss, non-guaranteed delivery, deadlocks, microbursts, and other unpleasant things.

As a result, the accepted practice was to build a dedicated reference network for each scenario:

- InfiniBand (IB) for high-performance computing clusters;

- Fibre Channel (FC) for classic storage networks;

- Ethernet for service tasks.

Attempts to achieve versatility looked something like the illustration below.

For some tasks, the vectors could coincide (say, those of the swan and the crayfish), and situational versatility was achieved, albeit with lower efficiency than a highly specialized network would give.

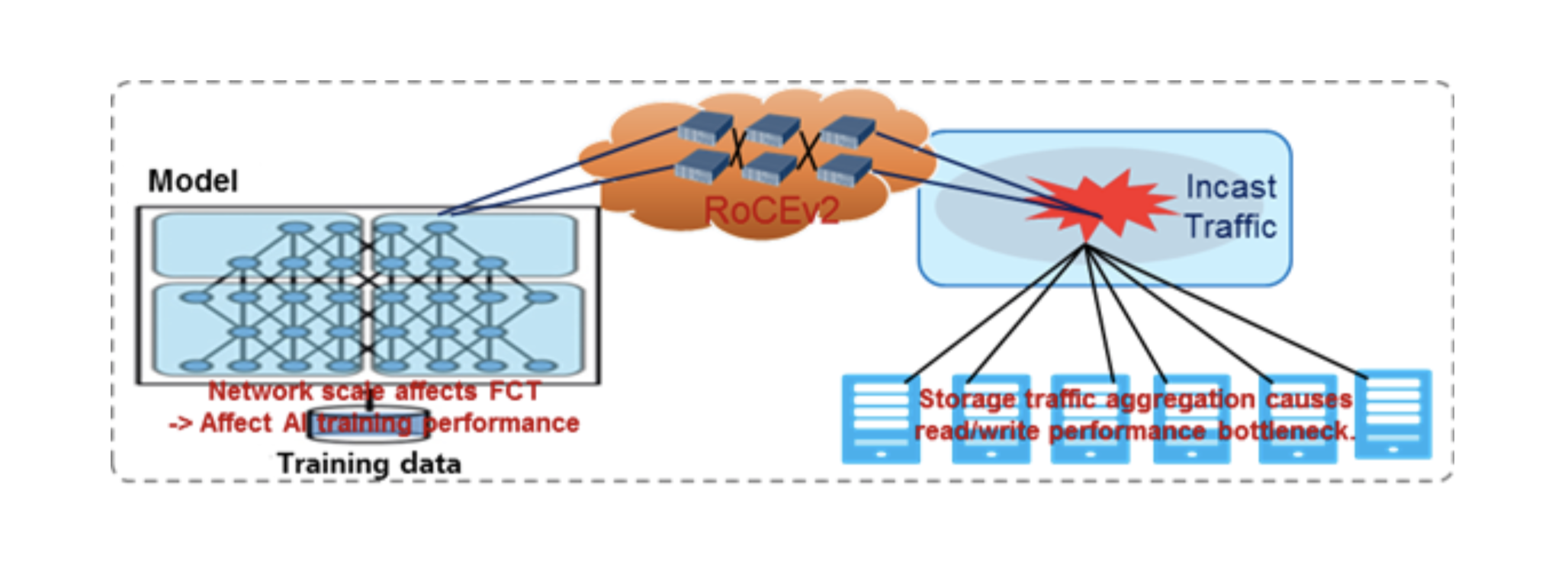

Today, Huawei sees the future in multipurpose converged fabrics and offers its customers the AI Fabric solution, designed, on the one hand, to increase lossless network performance (up to 200 Gbps per server port in 2020) and, on the other hand, to increase application performance (through migration to RoCEv2).

By the way, we had a separate detailed post about the technical side of AI Fabric.

What needs optimization

Before talking about algorithms, it makes sense to clarify what exactly they are designed to improve.

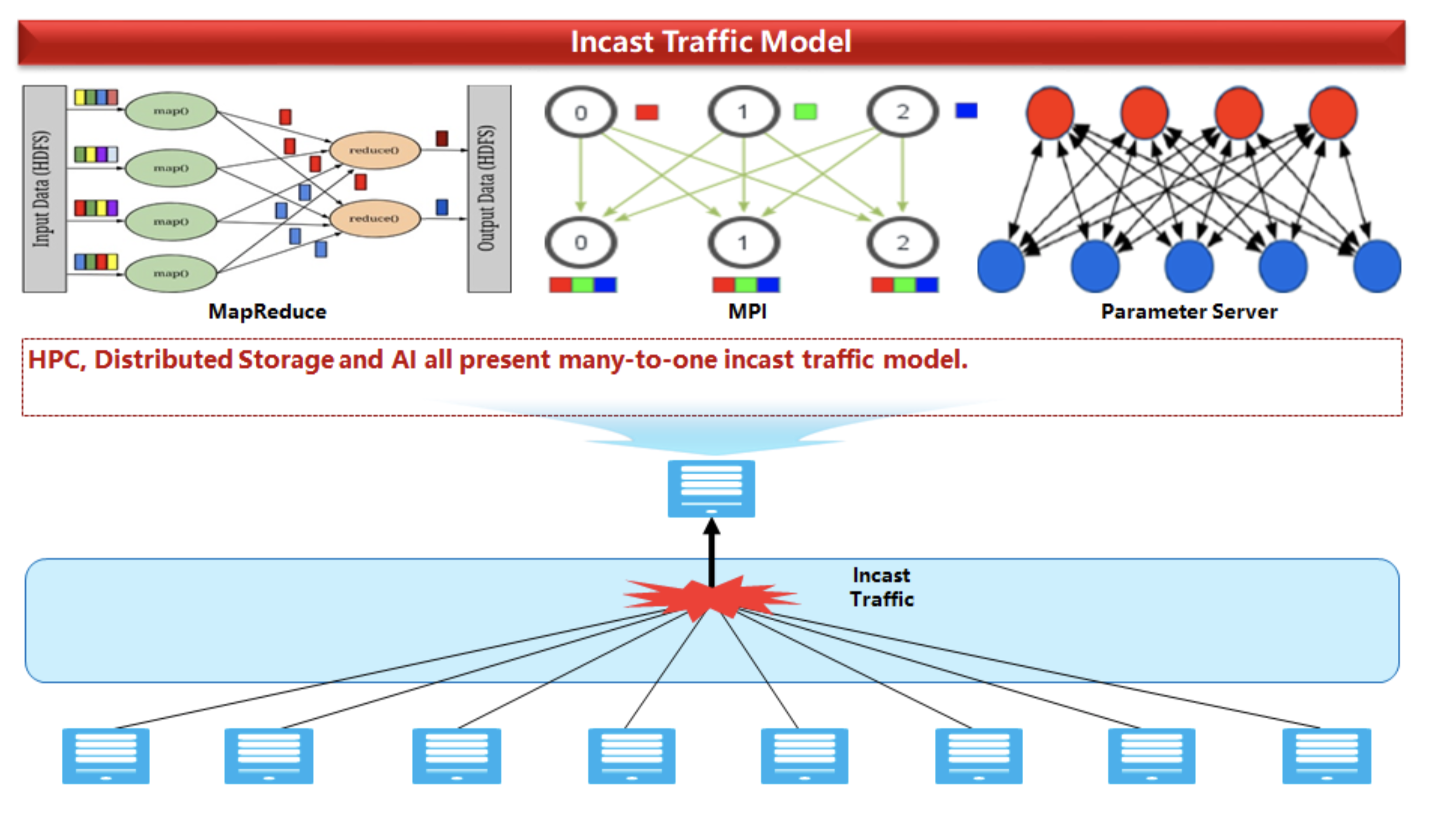

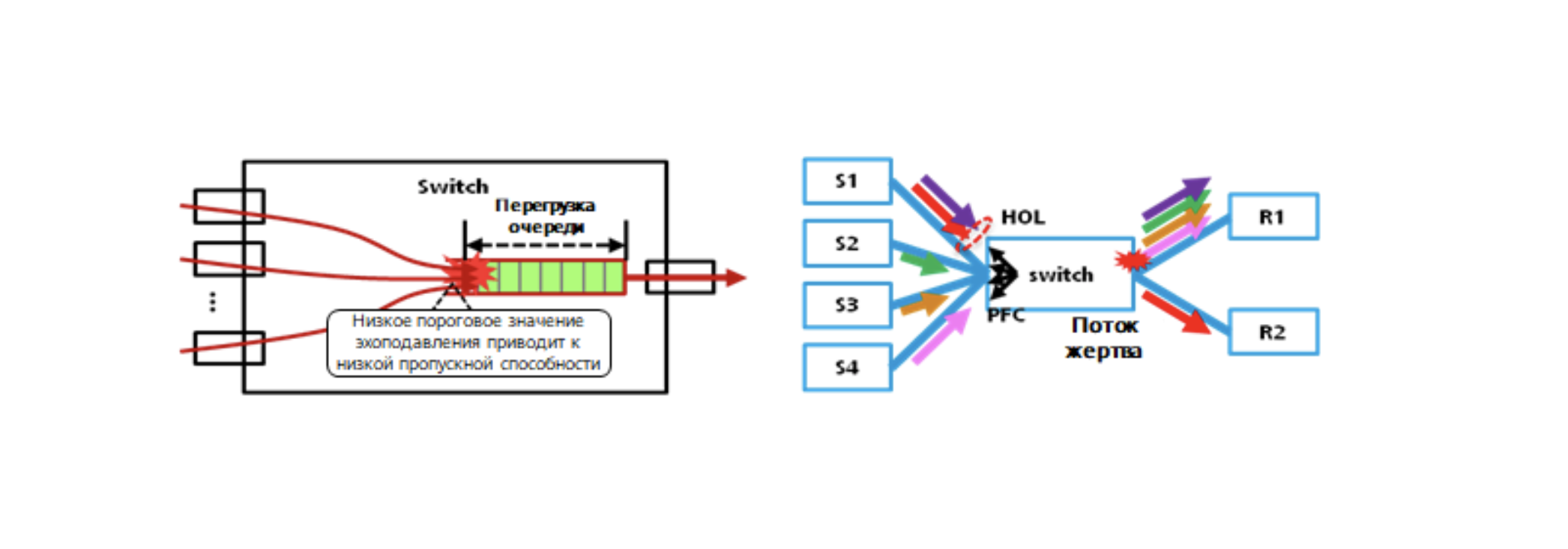

With static ECN thresholds, the traffic pattern becomes suboptimal, to put it mildly, as the number of sender servers per single recipient grows. This is the so-called many-to-one incast model.
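For reference, static ECN marking is commonly configured as a WRED-style curve: no marking below a lower queue threshold, a linearly growing marking probability up to an upper threshold, and marking of every packet above it. Below is a minimal sketch of that curve. The names `k_min`, `k_max` and `p_max` and all the values are illustrative assumptions, not taken from any Huawei configuration.

```python
def ecn_mark_probability(queue_kb: float, k_min: float, k_max: float, p_max: float) -> float:
    """Probability of ECN-marking a packet at a given queue depth.

    k_min, k_max: lower and upper queue thresholds (same unit as queue_kb).
    p_max: marking probability reached just below k_max.
    All names and values are hypothetical, for illustration only.
    """
    if not 0.0 <= p_max <= 1.0:
        raise ValueError("p_max must be within [0, 1]")
    if k_min >= k_max:
        raise ValueError("k_min must be smaller than k_max")
    if queue_kb <= k_min:
        return 0.0  # shallow queue: never mark
    if queue_kb >= k_max:
        return 1.0  # deep queue: mark every packet
    # Between the thresholds the probability grows linearly from 0 to p_max.
    return p_max * (queue_kb - k_min) / (k_max - k_min)
```

With fixed thresholds, this curve stays the same whether one sender or dozens converge on the port, which is the limitation described above.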

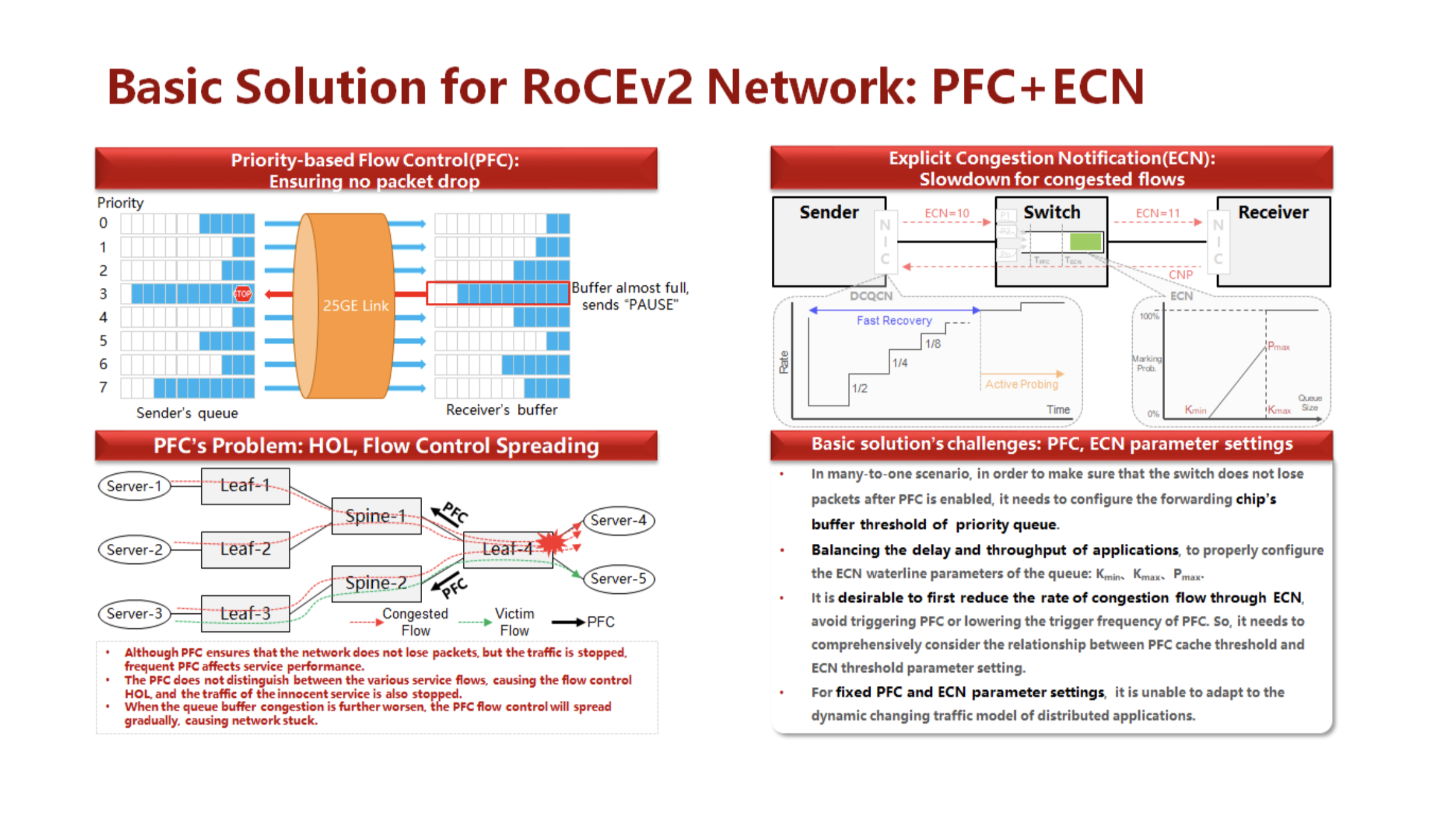

In traditional Ethernet, we have to manually balance the risk of packet loss against poor network performance.

We see the same trade-off when the PFC/ECN combination is deployed without constant tuning (see the figure below).

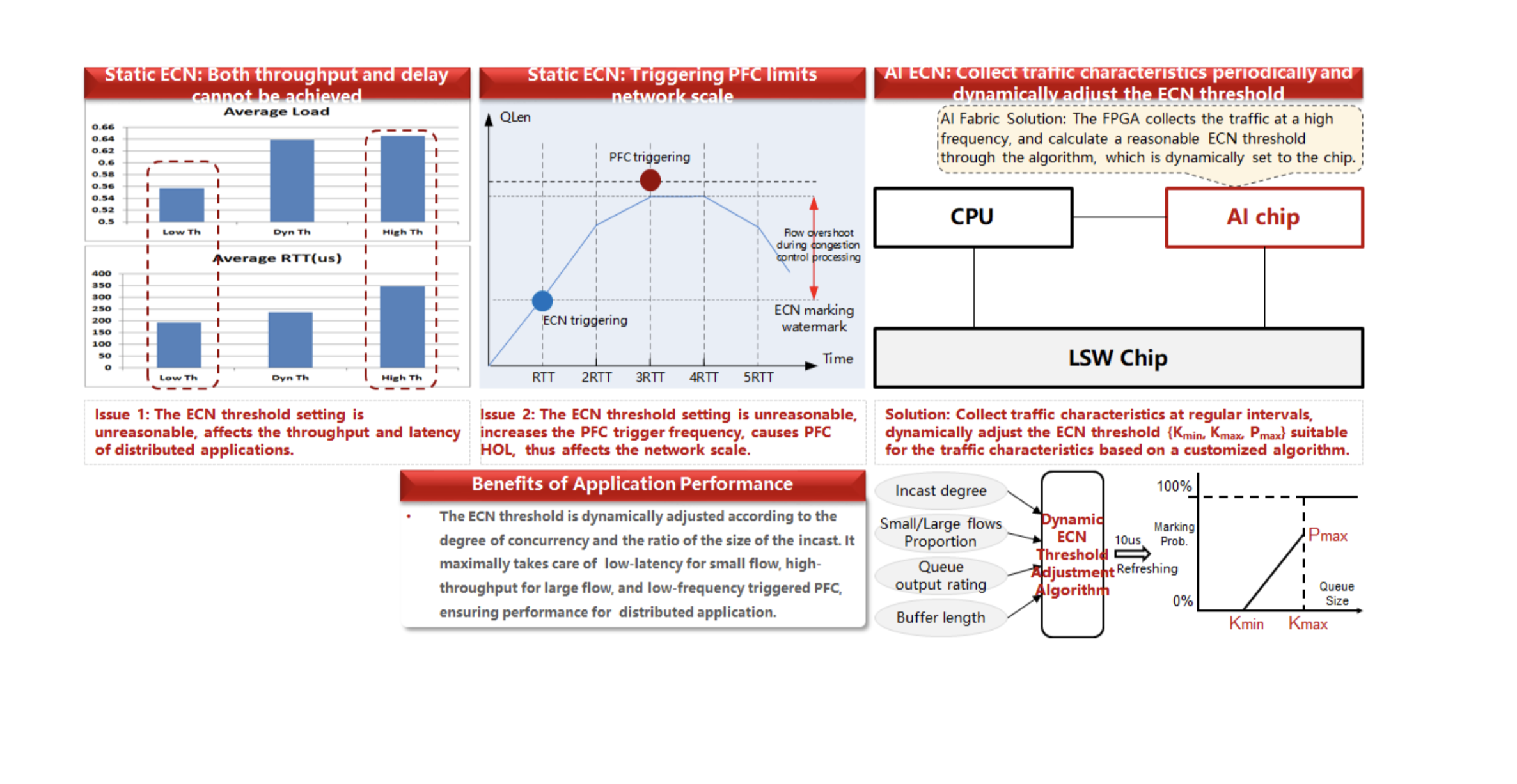

To solve these problems, we use the AI ECN algorithm, the essence of which is to change the ECN thresholds dynamically and in good time. The diagram below shows how it works.

Previously, when we used the combination of a Broadcom chipset and an Ascend 310 AI processor, we had a limited number of options for tuning these parameters.

We can loosely call this variant Software AI ECN, since the logic runs on a separate chip and the result is then pushed down into a commercial chipset. Models equipped with the Huawei P5 chipset have much wider "AI capabilities" (especially in the latest release), because the chipset itself implements a significant part of the functionality this requires.
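The idea of moving ECN thresholds at run time can be illustrated with a deliberately simple hand-written rule. This is not the AI ECN algorithm, only a stand-in that shows what "changing the thresholds in time" means. The `EcnThresholds` type, the `adjust_thresholds` function, the 0.9 utilization cutoff and the step sizes are all assumptions made for the sketch.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EcnThresholds:
    k_min_kb: int  # queue depth where marking starts
    k_max_kb: int  # queue depth where every packet is marked


def adjust_thresholds(current: EcnThresholds, queue_kb: float, link_utilization: float,
                      step_kb: int = 50, floor_kb: int = 50,
                      ceiling_kb: int = 2000) -> EcnThresholds:
    """Toy rule (hypothetical): shift both thresholds by one step per call."""
    if queue_kb > current.k_max_kb:
        delta = -step_kb  # queue is too deep: start marking earlier
    elif link_utilization < 0.9 and queue_kb < current.k_min_kb:
        delta = step_kb   # link is underused and the queue is shallow: mark later
    else:
        return current    # operating point looks acceptable, keep it
    width = current.k_max_kb - current.k_min_kb
    # Clamp the lower threshold and keep the distance between thresholds constant.
    new_min = min(max(current.k_min_kb + delta, floor_kb), ceiling_kb)
    return EcnThresholds(new_min, new_min + width)
```

For example, `adjust_thresholds(EcnThresholds(100, 400), queue_kb=500, link_utilization=0.99)` lowers both thresholds by one step. A learned policy replaces this fixed rule with a decision based on observed traffic.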

How we use algorithms

Using the Ascend 310 (or the module built into the P-card), we start by analyzing the traffic and comparing it against a benchmark of known applications.

For known applications, the traffic parameters are optimized on the fly; for unknown applications, the process moves on to the next step.

Key points:

- DDQN reinforcement learning is performed: the model explores, accumulates a large number of baseline configurations, and searches for the best-matching ECN strategy.

- A CNN classifier identifies the scenario and determines whether the threshold recommended by the DDQN is reliable.

- If the recommended DDQN threshold is not reliable, a heuristic method is used to correct it so that the solution generalizes.
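The decision flow described above (known application, then DDQN recommendation, then CNN reliability check, then heuristic correction) can be sketched as follows. The function `choose_thresholds` and the three callables passed into it are hypothetical placeholders for the real models, which the post does not describe in detail.

```python
from typing import Callable, Dict, Sequence, Tuple

Thresholds = Tuple[int, int]  # (k_min_kb, k_max_kb), an assumed representation


def choose_thresholds(
    signature: str,
    features: Sequence[float],
    known_profiles: Dict[str, Thresholds],
    ddqn_recommend: Callable[[Sequence[float]], Thresholds],
    cnn_is_reliable: Callable[[Sequence[float], Thresholds], bool],
    heuristic_correct: Callable[[Sequence[float], Thresholds], Thresholds],
) -> Tuple[Thresholds, str]:
    """Return the thresholds to apply and the branch that produced them."""
    # Step 1: a known application gets its pre-tuned profile immediately.
    profile = known_profiles.get(signature)
    if profile is not None:
        return profile, "known-application"
    # Step 2: otherwise ask the reinforcement-learning model for a recommendation.
    recommended = ddqn_recommend(features)
    # Step 3: the classifier decides whether that recommendation can be trusted.
    if cnn_is_reliable(features, recommended):
        return recommended, "ddqn"
    # Step 4: an untrusted recommendation is corrected by a heuristic.
    return heuristic_correct(features, recommended), "heuristic"
```

Passing the models in as callables keeps the sketch independent of any particular ML framework; in a real system each would wrap a trained network.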

This approach lets you tune how unknown applications are handled, and if you really want to, you can supply a model for your own application through the northbound API of the switch management system.

Key points:

- DDQN accumulates a large number of baseline configuration samples in memory and thoroughly explores how network states map to baseline configurations in order to learn policies.

- The CNN classifier identifies scenarios to avoid the risks that arise when unreliable ECN configurations are recommended in unknown scenarios.
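If DDQN here stands for Double DQN (an assumption, since the post does not expand the abbreviation), its defining step is how the learning target is computed: the online network selects the next action, and a separate target network evaluates it. In the sketch below, plain Python lists stand in for the Q-value outputs of the two networks, and the reward and discount values are illustrative.

```python
from typing import Sequence


def double_dqn_target(reward: float, gamma: float,
                      q_online_next: Sequence[float],
                      q_target_next: Sequence[float],
                      done: bool = False) -> float:
    """Double DQN target: r + gamma * Q_target(s', argmax_a Q_online(s', a))."""
    if len(q_online_next) != len(q_target_next) or not q_online_next:
        raise ValueError("both Q-value lists must be non-empty and of equal length")
    if done:
        return reward  # terminal transition: no future value to add
    # The online network picks the action...
    best_action = max(range(len(q_online_next)), key=lambda a: q_online_next[a])
    # ...and the target network supplies the value of that action.
    return reward + gamma * q_target_next[best_action]
```

In the ECN setting, each action could correspond to one candidate threshold configuration and the reward to an observed mix of throughput and latency, though the post does not state how either is defined.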

What do we get

After such a cycle of adaptation, in which network thresholds and other settings are adjusted, several types of problems can be eliminated at once:

- Performance issues: low bandwidth, high latency, packet loss, jitter.

- PFC issues: PFC deadlock, head-of-line (HOL) blocking, PFC storms, and so on. PFC causes many system-level issues.

- RDMA application challenges: AI / high-performance computing, distributed storage, and combinations of these. RDMA applications are sensitive to network performance.

Summary

Ultimately, additional machine learning algorithms help us solve the classic problems of the "unresponsive" Ethernet network environment. Thus, we are one step closer to an ecosystem of transparent and convenient end-to-end network services - as opposed to a set of disparate technologies and products.

***

Materials on Huawei solutions continue to appear in our online library, including on the topics covered in this post (for example, on building full-scale AI solutions for various "smart" data center scenarios). You can find a list of our webinars for the coming weeks here.