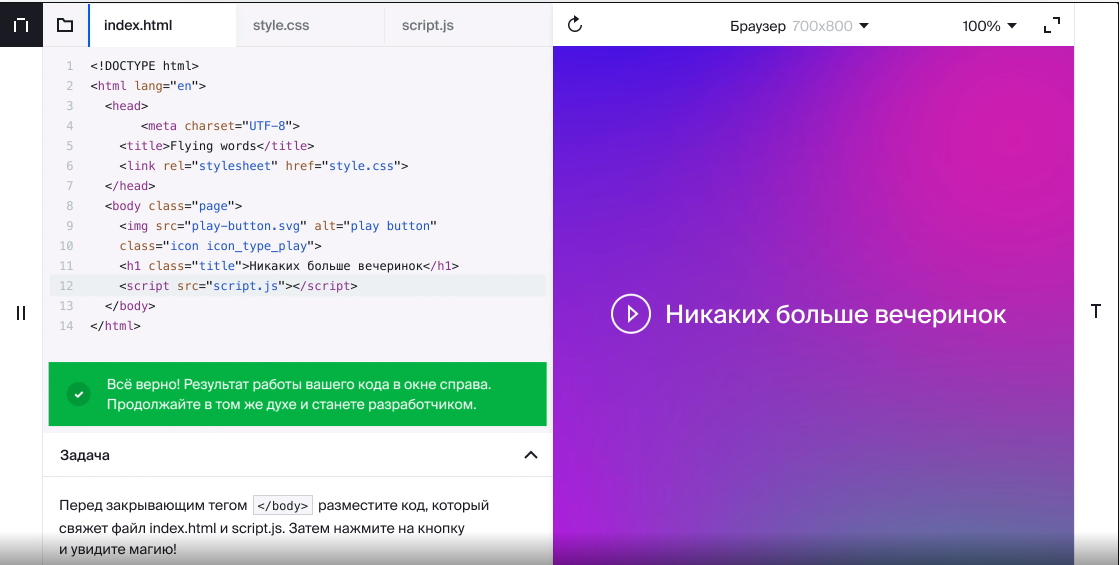

In an attempt to do something similar, we once launched an MVP of a web simulator at Yandex, in which the user could write code, scripts and everything else on different tabs and see the final result rendered right next to them.

The MVP performed well, and we grew the web simulator into a full-fledged tool for testing the knowledge of our students at Yandex.Practicum. My name is Artem, and I will tell you how we built a simulator for teaching web development, how it works and what it can do.

From the outside, everything looks simple: stuff all the user's code into an iframe, pass it in via postMessage, render it one way or another, and everything works. A slightly souped-up online code preview.

But there are nuances.

How it works

At the start, we noted a possible problem: if you deploy the simulator on a Yandex domain (like Practicum itself, for example), there is a nonzero chance that some users will turn out to be a little too curious. Namely, they will feed the simulator some code, which the simulator will happily execute. If that code is malicious, it can steal the user's Yandex cookie and send it to a third-party service, after which the attacker gets access to the user's Yandex account and all their personal data. This is quite easy to do if the iframe lives on the yandex.ru domain. So we set up a separate domain on yandex.net specifically for the simulator and named it Feynman. In honor of Richard, yes.

In general, our simulator sends the user's files to the backend as plain text and JSON, with images encoded in base64. There they are converted into real files and served as static content, which we can load into the iframe for rendering.

But we are not just playing with syntax highlighting here; this is a simulator for testing knowledge. So we need to test and check the code on the fly, that is, somehow get inside the iframe and see whether the user did everything right: say, how they named a variable, or whether everything is OK with the divs.

And here we run into domains again. The user's code, as I already wrote, is served by the simulator from the Feynman domain, while we check it from Yandex, from the praktikum.yandex.ru domain. The browser's same-origin policy stands guard and does not let you touch the internals of an iframe that lives on a different origin.

So we decided to put an iframe inside an iframe.

Here is what we ended up with:

- We create an iframe, which is actually empty at first.

- It renders a blank page.

- From our front end, we send a postMessage with the link that Feynman gave us (the location where it hosts the static files).

- The first iframe takes this link and sets it as the src of the inner iframe.
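The steps above can be sketched roughly as follows. This is a minimal illustration, not our production code: the message shape (`type: "load"`), the function names and the static origin passed in are assumptions made for the example. The only things taken from the text are the postMessage transport and the praktikum.yandex.ru origin of the sender.

```typescript
// Origin of the front end that is allowed to talk to the outer iframe.
const PARENT_ORIGIN = "https://praktikum.yandex.ru";

// Hypothetical message shape: the article only says that a link is sent.
interface LoadMessage {
  type: "load";
  url: string;
}

// The two MessageEvent fields this sketch relies on.
interface IncomingMessage {
  origin: string;
  data: unknown;
}

function isLoadMessage(data: unknown): data is LoadMessage {
  if (typeof data !== "object" || data === null) return false;
  const candidate = data as Record<string, unknown>;
  return candidate.type === "load" && typeof candidate.url === "string";
}

// Returns the URL to put into the inner iframe's src,
// or null when the message should be ignored.
function resolveInnerSrc(event: IncomingMessage, staticOrigin: string): string | null {
  // Ignore messages from anyone except our own front end.
  if (event.origin !== PARENT_ORIGIN) return null;
  const data = event.data;
  if (!isLoadMessage(data)) return null;
  let parsed: URL;
  try {
    parsed = new URL(data.url);
  } catch {
    return null; // not an absolute, well-formed URL
  }
  // Only load pages hosted on the simulator's own static origin.
  return parsed.origin === staticOrigin ? parsed.href : null;
}

// Inside the outer iframe this could be wired up like so
// (innerFrame and STATIC_ORIGIN are hypothetical names):
//
// window.addEventListener("message", (event) => {
//   const src = resolveInnerSrc(event, STATIC_ORIGIN);
//   if (src !== null) innerFrame.src = src;
// });
```

Checking `event.origin` before trusting the payload is the standard precaution for any postMessage listener; without it, any page that embeds the outer iframe could tell it what to load.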

As a result, both iframes live on the simulator's domain, so the code in the first iframe can do whatever it wants with the inner iframe. De facto, a test is just code run through eval that has access to document, window and everything else inside the iframe. This lets us take the test for a task and run it in the window of that iframe.
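To make the idea concrete, here is a small sketch of running a test against a frame's window. It uses `new Function` as a close relative of eval so that `window` and `document` can be passed in explicitly; the `runTest` name, the result shape and the convention that a test returns `true` on success are assumptions for the example.

```typescript
// Anything that exposes a document, for example iframe.contentWindow.
interface FrameWindow {
  document: unknown;
}

// Hypothetical result shape for this sketch.
interface TestResult {
  passed: boolean;
  error?: string;
}

// Runs the test source with `window` and `document` bound to the given frame.
// The test is expected to return true on success (an assumed convention).
function runTest(testSource: string, frameWindow: FrameWindow): TestResult {
  try {
    const test = new Function("window", "document", testSource);
    const outcome: unknown = test(frameWindow, frameWindow.document);
    return { passed: outcome === true };
  } catch (err) {
    // A throwing test counts as a failure, with the message kept for display.
    return { passed: false, error: err instanceof Error ? err.message : String(err) };
  }
}

// In the outer iframe the call could look like this
// (innerFrame is a hypothetical reference to the inner <iframe>):
//
// const result = runTest(
//   'return document.querySelectorAll("div").length === 3;',
//   innerFrame.contentWindow
// );
```

Passing the frame's `window` and `document` as parameters means the test body reads like ordinary page code while actually inspecting the user's page, not the page that runs the checks.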

Not by tests alone

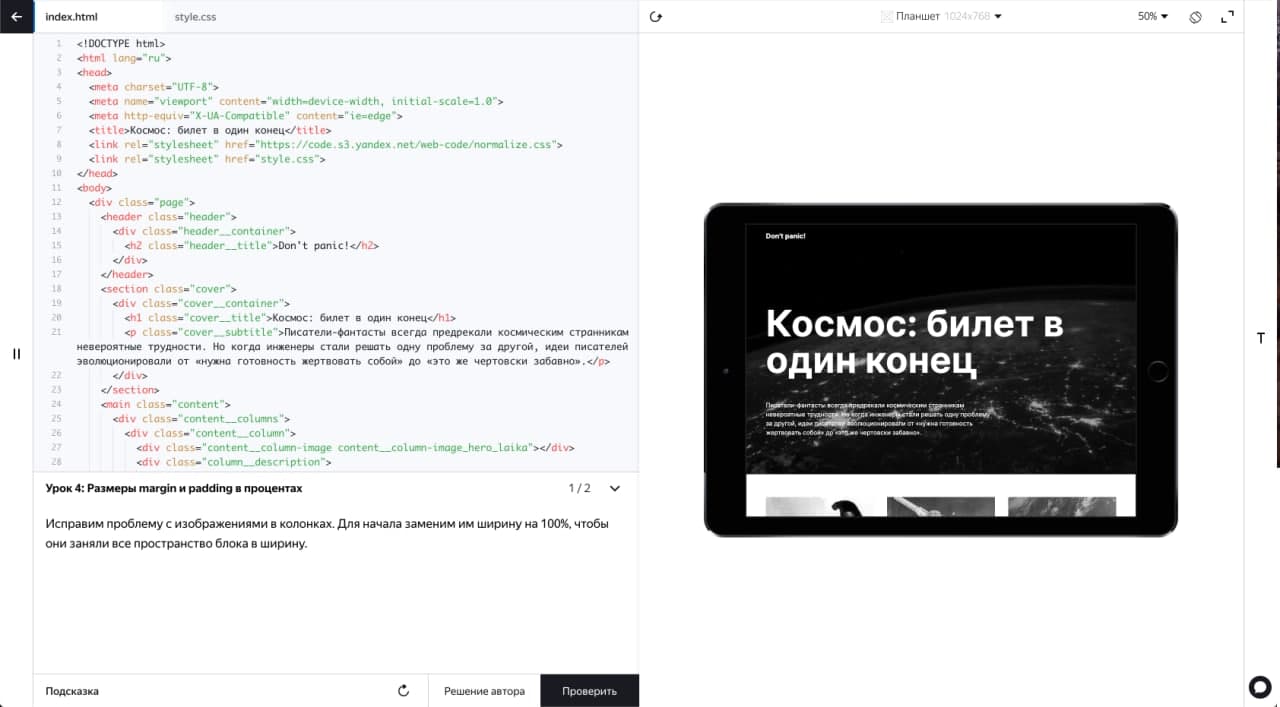

Then we wanted to add some useful features: a terminal, a console that shows the user what they wrote to the log, and other niceties. Of course, we also built a full-fledged responsive mode so that the user can see how the result will look on smartphones and tablets.

For this we wrote a special library that loads all the necessary styles and emulates responsive mode. We also slightly modify our original iframe and add everything needed to show the user's console.log output on screen, and not just simple objects but full DOM trees as well.

On top of that, we learned to run preliminary tests. This is useful because many tests check roughly the same things: for example, whether the user has overdone it with loops, got carried away with nesting, and so on. It makes little sense to describe this in each test separately, so we wrote a test library with a set of special pre-testing methods that check the code. If everything is fine at this stage, the main test for the task is run, after which the result is shown to the user.
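The "pre-checks first, main test second" flow could look something like the sketch below. Everything here is illustrative: the `maxLoops` and `maxNesting` helpers, their names and their deliberately naive text-based logic are assumptions, not the methods of our actual test library.

```typescript
// A pre-check inspects the source text and returns an error message,
// or null if everything is fine.
type PreCheck = (code: string) => string | null;

// Naive on purpose: counts loop keywords in the raw text, so a "for" or
// "while" inside a string or comment is counted too.
function maxLoops(limit: number): PreCheck {
  return (code) => {
    const found = (code.match(/\b(?:for|while)\b/g) || []).length;
    return found > limit ? `Too many loops: ${found} (limit ${limit})` : null;
  };
}

// Equally naive: tracks curly-brace depth without parsing the code.
function maxNesting(limit: number): PreCheck {
  return (code) => {
    let depth = 0;
    let deepest = 0;
    for (let i = 0; i < code.length; i++) {
      const ch = code.charAt(i);
      if (ch === "{") {
        depth += 1;
        deepest = Math.max(deepest, depth);
      } else if (ch === "}") {
        depth = Math.max(0, depth - 1);
      }
    }
    return deepest > limit ? `Nesting too deep: ${deepest} (limit ${limit})` : null;
  };
}

interface CheckReport {
  passed: boolean;
  errors: string[];
}

// Runs every pre-check; the main test is executed only if none of them complained.
function runWithPreChecks(
  code: string,
  checks: PreCheck[],
  mainTest: (code: string) => boolean
): CheckReport {
  const errors = checks
    .map((check) => check(code))
    .filter((message): message is string => message !== null);
  if (errors.length > 0) return { passed: false, errors };
  return { passed: mainTest(code), errors: [] };
}
```

A real implementation would more likely walk a parsed syntax tree than scan text, but the shape stays the same: shared checks live in one place, and each task's test only describes what is specific to that task.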

To return the result of preliminary testing to the user, we also use postMessage: it carries a message saying whether there were errors or everything is fine. By the way, the student's code in the web simulator is also checked by the ESLint linter, with messages translated into Russian, and syntax errors are always highlighted.

Problems with code checks (and more)

If the page used system or browser dialogs, for example asking the user to enter something, then when our test ran, the user often kept seeing browser windows with notifications and input requests. We needed the following behavior: when a user simply opens the page with their code to see how everything works, the alert has to work. But when a test runs the same code for verification, alerts must no longer pop up on the user's screen. So for tests we replaced all these dialogs with our own stubs (mocks), overriding alert, prompt and confirm on window. Without this, you could end up with a loop or an empty alert that does nothing.
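A minimal sketch of such stubs is shown below. The `stubDialogs` name, the fixed answers the stubs return and the restore function are assumptions for illustration; the only part taken from the text is that alert, prompt and confirm are overridden on window while a test runs.

```typescript
// The three dialog functions of a window-like object.
interface DialogHost {
  alert: (message?: string) => void;
  prompt: (message?: string, defaultValue?: string) => string | null;
  confirm: (message?: string) => boolean;
}

// Replaces the blocking dialogs with silent stubs and returns a function
// that puts the originals back once the test run is over.
function stubDialogs(host: DialogHost, promptAnswer: string = ""): () => void {
  const original = { alert: host.alert, prompt: host.prompt, confirm: host.confirm };

  host.alert = () => undefined;      // swallow the message, show nothing
  host.prompt = () => promptAnswer;  // answer instantly instead of blocking
  host.confirm = () => true;         // behave as if the user pressed OK

  return () => {
    host.alert = original.alert;
    host.prompt = original.prompt;
    host.confirm = original.confirm;
  };
}

// Around a test run this could be used as follows
// (frameWindow is a hypothetical reference to the inner iframe's window):
//
// const restore = stubDialogs(frameWindow, "42");
// try {
//   /* run the test */
// } finally {
//   restore();
// }
```

Restoring the originals in a `finally` block keeps the plain preview mode working even when the test itself throws.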

By the way, about infinite loops. The main problem here was that a user could easily write code that happily goes into an infinite loop, and since there is only one JavaScript thread in the browser, the whole browser would hang.

To combat this, we first of all learned to detect such infinite loops before the code is submitted for checking. This required rewriting the user's script in a certain way, and we went about it like this:

- Into each loop we insert a call to a function that counts the number of iterations.

- If the count exceeds 100,000, we immediately throw an exception, which we also send back via postMessage. Plus, just in case, we have a timeout check for loops that run longer than 10 seconds.

- Along the way we note that, since an exception was thrown, something is wrong and there is no point in running the test itself: the code is stuck in a loop.
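The counting function from the list above can be sketched like this. The limits of 100,000 iterations and 10 seconds come from the text; the `createLoopGuard` name, the injectable clock and the way the guard is inserted into the loop body are assumptions for the example.

```typescript
const MAX_ITERATIONS = 100000;
const MAX_DURATION_MS = 10000;

// Creates a guard function for one loop. The guard is meant to be called
// at the start of every iteration and throws once either limit is exceeded.
// The clock is injectable so the timeout branch can be exercised in tests.
function createLoopGuard(
  maxIterations: number = MAX_ITERATIONS,
  maxDurationMs: number = MAX_DURATION_MS,
  now: () => number = () => Date.now()
): () => void {
  let iterations = 0;
  const startedAt = now();

  return () => {
    iterations += 1;
    if (iterations > maxIterations) {
      throw new Error(`Loop exceeded ${maxIterations} iterations`);
    }
    if (now() - startedAt > maxDurationMs) {
      throw new Error(`Loop ran longer than ${maxDurationMs} ms`);
    }
  };
}

// After rewriting, a user loop such as
//
//   while (i < n) { doWork(); }
//
// would look roughly like
//
//   const guard = createLoopGuard();
//   while (i < n) { guard(); doWork(); }
//
// and the exception thrown by the guard is what gets reported back.
```

Note that the time check only fires between iterations: it catches a loop that makes many slow passes, but not a single iteration that never returns.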

Links deserve a separate mention. Say the user's code contains links that should open in a new tab on click, for example to their portfolio or GitHub account. We did not want such links to open right inside the iframe, otherwise the iframe would show the linked page instead of the user's work. Such things have to open in a new tab. Usually, to open a link in the parent frame rather than inside the iframe, it is enough to specify target="_parent". But in our case we needed a handler that determines whether the link is external.

So we wrote a special handler for all links: if we see that a link is external, we cancel the default navigation (preventDefault) and send a postMessage out to our front end. There we see that this is an external link and show a notification: are you sure you want to go to an external site? Only after that do we open a new tab.
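Deciding whether a link is external can be reduced to comparing origins. The sketch below assumes that "external" means "resolves to a different origin than the page inside the iframe"; the `isExternalLink` name and the message shape in the comment are hypothetical.

```typescript
// Returns true when href, resolved against the current page URL,
// points to a different origin than the page itself.
function isExternalLink(href: string, pageUrl: string): boolean {
  let target: URL;
  try {
    target = new URL(href, pageUrl); // resolves relative links like "./about.html"
  } catch {
    return false; // unparsable href: leave it to the browser
  }
  return target.origin !== new URL(pageUrl).origin;
}

// Inside the iframe the click handler could look roughly like this
// (the message shape is an assumption):
//
// document.addEventListener("click", (event) => {
//   const link = (event.target as Element).closest("a");
//   const href = link ? link.getAttribute("href") : null;
//   if (href && isExternalLink(href, location.href)) {
//     event.preventDefault();
//     window.parent.postMessage({ type: "external-link", href }, "*");
//   }
// });
```

Resolving the href against the page URL first means relative links and same-page anchors are never mistaken for external ones.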

Then there were anchors, and with them it was much more straightforward: they just did not work inside the iframe. At all. So, as a small hack, we subscribed to click events on every link, and if the link pointed to an anchor, we called scrollIntoView on the target element.
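A small sketch of that hack, assuming the anchor is a plain `#id` href and the target is looked up by id; the `anchorId` and `scrollToAnchor` names are made up for the example.

```typescript
// Extracts the element id from an href like "#contacts"; returns null for
// anything that is not a same-page anchor.
function anchorId(href: string | null): string | null {
  if (href === null || href.length < 2 || href.charAt(0) !== "#") return null;
  try {
    return decodeURIComponent(href.slice(1));
  } catch {
    return null; // malformed percent-encoding
  }
}

// Minimal views of the DOM pieces used here, so the logic can be tried
// without a browser.
interface ScrollTarget {
  scrollIntoView(): void;
}
interface AnchorDocument {
  getElementById(id: string): ScrollTarget | null;
}

// Scrolls to the anchor target if there is one; returns whether it did.
function scrollToAnchor(doc: AnchorDocument, href: string | null): boolean {
  const id = anchorId(href);
  if (id === null) return false;
  const element = doc.getElementById(id);
  if (element === null) return false;
  element.scrollIntoView();
  return true;
}

// In the click handler (sketch):
//
// if (scrollToAnchor(document, link.getAttribute("href"))) {
//   event.preventDefault();
// }
```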

All metadata (for example, a favicon or a specific title set in the user's HTML page) is also sent via postMessage after the iframe has loaded. Using querySelector, we get these two tags and send them to our front end through postMessage, and the front end puts the icon and title where they belong. It seems like a trifle, but the user gets the impression of having a full-fledged browser inside the browser.
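Collecting the two tags might look like the sketch below. The selectors are standard CSS; the `collectMeta` name and the message shape are assumptions for the example.

```typescript
// Minimal views of the DOM pieces used here.
interface MetaElement {
  textContent: string | null;
  getAttribute(name: string): string | null;
}
interface MetaDocument {
  querySelector(selector: string): MetaElement | null;
}

// Hypothetical message shape sent to the front end.
interface PageMeta {
  type: "meta";
  title: string | null;
  favicon: string | null;
}

// Reads the page title and favicon URL, if the user's page declares them.
function collectMeta(doc: MetaDocument): PageMeta {
  const title = doc.querySelector("title");
  // rel~="icon" matches both rel="icon" and rel="shortcut icon".
  const icon = doc.querySelector('link[rel~="icon"]');
  return {
    type: "meta",
    title: title ? title.textContent : null,
    favicon: icon ? icon.getAttribute("href") : null
  };
}

// After the inner iframe has loaded (sketch):
//
// innerFrame.addEventListener("load", () => {
//   const meta = collectMeta(innerFrame.contentDocument);
//   window.parent.postMessage(meta, "https://praktikum.yandex.ru");
// });
```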

Attempts to bypass the simulator

Our web simulator, unlike the simulators we built for Python, SQL and others, runs its checks on the front end, not the backend. So when the user's code passes the tests, a corresponding POST request is sent to the backend. In principle, a user with the right skills can do the same and send such a request manually.

This is a double-edged sword. On the one hand, it is cool that a person is interested enough in the technology and basic hacks to do this. On the other hand, it is a bit like shooting yourself in the foot, because the point of the simulator is not to formally hear “OK, well done, you did everything”, but to learn to work properly, notice your mistakes and fix them. It is like going to the gym, sitting on the bench for 5 minutes, and then writing on Facebook “Did 3 sets with a hundred kilos”: it may flatter your self-esteem, but the achievements will end there.

That is actually why we do not move this check to the backend, even though that would solve the problem. People come to study in order to get a real job (maybe at Practicum itself), not virtual achievements.

We are constantly improving the web simulator, drawing on both our own wish list and feedback from users, so we will keep telling you about its development. Right now we are refining it with students' requests for specific technologies in mind; for example, we have added support for React and NodeJS. The web simulator is by far the most popular of all, followed by the Python simulator, largely thanks to both the lower entry threshold and the popularity of the technologies themselves. Besides the technical part, the simulator contains many mechanics for working with interactive theory (and there is plenty of it in all our courses). There is no separate simulator for the QA track; there we made a special set of quizzes plus test stands on which testers study. By the way, a couple of the testers who now help us build Practicum are graduates of our QA course.

The simulators for C++ and for machine learning are more complicated; if you are interested, we will try to cover them in future posts.

Thank you for reading. If you have any questions about our simulators or about Practicum in general, write to us and we will answer.