Recommender systems from scratch, chatbots and more

Having plunged into the abyss of textual data, in October this year we trained the ruGPT-3 models - models for the Russian language based on the architecture from OpenAI. But what are these models capable of? In this article, we will collect the first examples of applying the model - and try new ones.

We present the first results of the largest trained model, ruGPT-3 Large, developed in collaboration with the SberDevices , Sber.AI and SberCloud teams . Let's explore the boundaries of its application with you.

At this year's AI Journey, we prepared a competition of applications ruGPT-3 - any solution using a model, assessed on three scales - innovation, emotional engagement and business applicability - can be submitted to the track.

Spoiler:

1 million rubles for the first place

How to train the model for a new task?

Models and code for them are in the sberbank-ai repository : you can use the transformers library or Megatron-LM.

In this article, I provide examples using huggingface / transformers - the code for the cases is given here:

- Fine-Tuning Colab Laptop Models

- Just generating a Colab laptop

Model cards in transformers library: Large Medium Small

When you run the generation script, it's easier to do Run all - and then wait a little while the model is loaded.

The script in the last cell will ask you to enter the beginning of the text - my favorite seed: "Brazilian scientists have discovered a rare species of dwarf unicorns that live in the west of Jutland." We get : Only ruGPT-3 Small fits into the Colab laptop, so it's better to use cards with more than 14Gb memory.

ruGPT-3 Small: , . Agence France-Presse. , Pygmaliona (. Pygmaliona pygmalioni), . 30 , — 1 . . , .

By the way, for the duration of the competition, we give the participants of the track access to the facilities of Christofari!

For the participants of the ruGPT-3 track, we have allocated 100 accounts - in each account 1 GPU Tesla V100-SXM3 32 Gb for free during the competition.

How do I get an account?

1) Register for the track ruGPT-3 (AI 4 Humanities) ods.ai/tracks/aij2020

2) Write a short letter with the name of your team and the task to AIJ_ruGPT-3@sberbank.ru

Few-Shot vs Fine Tuning

In the case of large generative models such as GPT-like architectures, there are several ways to "handle" them.

- : «» — . (GPT-3 ), . ( « → ») .

- : , « » (. «prompt engineering» — , , .

?

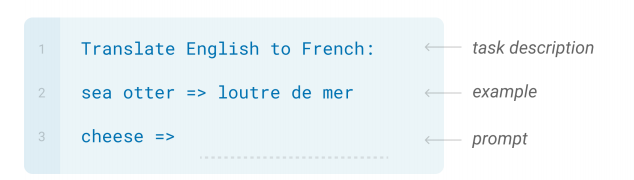

It can be very expensive to retrain large models, so this way of "communicating" with them allows you to avoid even that. The essence of seed programming is to select such prepared examples and beginnings for generation, so that further the model produces exactly what is needed.

Choosing the "seed" and generator hyperparameters (temperature, top_n, top_k), you can achieve quite tolerable answers due to only preliminary training of the model.

A seed example with 1 example and a description of the problem.

We will test both methods in practice - we will try to solve popular problems from scratch, and specific ones - with additional training.

Recommender systems

Let's make a recommendation system without training: we will submit information about the user's preferences in the form of text to ruGPT-3 Large, and we will watch how the model continues it. We can generate many options at once to get more recommendations.

Movie Recommendations: Not bad! At least the films seem to be generated according to the seed. Since I am not a connoisseur of Soviet cinema, I did not even know the film "Three plus two", but it is. For the generation, we used the following hacks - we specially supplied text with an open quote to force the model to generate proper names, and also cut off all unnecessary after the first sentence. Music Recommendations:

: — “”, “”, “

ruGPT-3 Large: — “”, “”, “ ”, “ ”, “ ”.

ruGPT-3 Large: — “”, “”, “ ”, “”, “” (, , , ).

ruGPT-3 Large: — “”, “”, “” .”

: : “ ”, “

ruGPT-3 Large: : “ ”, “ ” “ ”.

ruGPT-3 Large: : “ ”, “ ”.

ruGPT-3 Large: : “ ”, “ ”, “ ” .

: “ , “ ”, “ #( , )

ruGPT-3 Large: , “ ”, “” “”.

ruGPT-3 Large: , “ ”, “” “ ”.

ruGPT-3 Large: , “ ”, “”, “ ”, “ ”, “” “”.

The results are different all the time - you can potentially generate N variants, extract all the group names and collect Counter of the most popular ones - voila, a recommendation system from scratch.

Generating reviews

Let's take a more difficult task - let's make the model mimic a person in discussions or shops: As you can see, for topics widespread on the Internet, the model can generate coherent texts without training. But where is there still no way without additional training? There are 3 such cases:

: “ .

- ”

ruGPT-3 Large: “ .

- . , .”

: “ .

”

ruGPT-3 Large: “ .

.

, .

, , . , , .”

: .

"1+1"

ruGPT-3 Large: .

"1+1" — , . . . . " ", .

- complex data format - if, during generation without training, you lose quality due to generation in the wrong format - NER problem, code generation, symbolic calculations, etc.;

- , — QA , , , ;

- , , , — , .

StackOverflow

Let's raise the level of complexity: download Russian [stack overflow] (https://ru.stackoverflow.com/) and try to make a question-answer system. Will we be able to immediately learn both the style of answers, and knowledge, and several programming languages? It's not easy to get a good result in a taoki problem right away, but we can have fun - over 5 epochs, the model has learned to reproduce the style, being very confused in the content. But I learned the links to the documentation well - quite a good result for 5 eras of learning.

Training format: we submit the texts of questions and best answers in the format Q: ”blabla” A: ”blabla”. (there is actually no such library, the model generated a link to the documentation)

Q: python?

A: : push.log("Shalom").

- , .

set.log()**)

rGet = puts.random(extents) log.findIndex(puts.current) void join($window, command='unsetMainServer onManager') }

,

Q: python?

A: import pycode as pyc;

// ( href=https://docs.python.org/3/library/pycode.html )

For such tasks, you can enter additional arguments - for example, the name of the section / subject / programming language, about which you want to answer, and train the model on data of the form {“parameter”: ”value”, “question”: ”text_question”, “answer”: "Answer_text"}.

In terms of both the code and the text, we have a file right away - maybe someone will do better within the track? There are already quite successful implementations on the English-language model with code: writing html-code of web page elements, generating Figma templates.

Trying other languages! Twitter and more

The main language of teaching the model is Russian, but is it possible to apply the model in multilingual tasks? A few other languages always fall into the training sample from the web, so there is hope. Straightforward approach with seeds shows that the model knows a little about the English model: Well, almost: Sometimes it doesn't work at all: Why not practice on purely English data: I took the twitter-text-classification dataset from the Kaggle competition. After 5 epochs on 5 thousand first comments (20 minutes in Colab ) we get quite tolerable generation of very toxic comments in English:

ruGPT-3 Large:

—> cat

—> dog

—> pear

—> coder

—> cat

—> dog

—> pear

—> Python

—> cat

—> dog

—> pear

—> bunch #

—> dog

—> cat

—> dog

Context >>> Is it

ruGPT-3 Small: Is it's the case that #Microsoft is using #SAP to manage #Microsoft’s business?

Context >>> It is

ruGPT-3 Small: It is the only one that I've been trying to do. Siri is on the verge of death, and I'm not aware of why she has to be on the verge.

Context >>> Why

ruGPT-3 Small: Why do I go here? I'm wondering if I can use the #Microsoft Windows 8 Pro for the #WindowsPhone phone? Thanks!

Dialogue systems

Already, without pre-training, applying the model to dialog systems works quite well: the model can perform the chit-chat function (talking about nothing), freely chatting on various topics. With additional training on specific scenarios and domains, you can implement business applications.

The example below is an example of a dialogue without training, on a narrowly focused topic - an Internet provider support.

Seeding: first 2 lines - further generation. Of course, without pre-training, the model does not have a clear idea of the scripts and scripts of support specialists, however, even such a cold start already shows the standard way of “finding out what happened - hanging out - redirecting the call”. You can remember the classics:

User1: , ! ?

User2: .

User1: ?

User2: 500

User1: ?

User2: . .. .

User1: ?

User2: . , - ...

User1: , , , , ?

User2:

User1: , . , .

User2: , . .

User1: . , , .

User2: , , ?

User1: ?

User2: . .

User1: ?

User2:

User1: . .

User1: , . - .

User2: , .

User1:

User2:

User1: «», 656, , .

User2: , 766—1669, : , , , , , , ?

User1: 1 . , , .

User2: , , , - ?

User1: , 1 . , - , , , . .

User2: , , , , - .

User1: .

User2: , ?

User1: , , .

User2: , , .

In general, "we must be patient." The model even remembered that User1's name is Dmitry, and User2 said goodbye to him accordingly.

Instead of a conclusion

To continue to develop the direction of generative models and improve architectures in NLP problems, it is always necessary to understand the limits of applicability of a particular technology - and its relevance.

Now, with every release of similar architectures - Reformer, Longformer, BigBird, etc. - we look at large pre-trained models in a slightly new way, posing new challenges to them.

In addition to the aforementioned cases, ruGPT-3 can definitely be applied and beneficial in practices such as:

- augmentation of text data for ML tasks

- dialogue models for automation

- generative summation tasks

- rewriting, generation of news, sports notes based on structured information.

Hopefully the demos and models will benefit the community - and fun!

And, of course, we are waiting for your decisions on the ruGPT-3 track until the end of November 22!