Over the past few years, the field of natural language processing (NLP) has advanced significantly: models such as BERT, ALBERT, ELECTRA and XLNet have achieved remarkable accuracy across a variety of tasks. During pre-training, the model learns vector representations from an extensive text corpus (for example, Wikipedia) by masking words and trying to predict them (so-called masked language modeling). The resulting representations encode a large amount of information about language and the relationships between concepts, for example, between a surgeon and a scalpel. A second stage of training, fine-tuning, then follows, in which the model uses task-specific data to learn how to perform a particular task, such as classification, on top of the general pre-trained representations. Given the widespread use of such models across NLP problems, it is critical to understand what information they contain and how any learned relationships affect model behavior in downstream applications, so that they comply with the Principles of Artificial Intelligence (AI).
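To make the masking step concrete, here is a minimal sketch of how training examples for masked language modeling might be prepared. It is a simplification under stated assumptions: the helper `mask_tokens`, its `mask_prob` and `seed` parameters, and the whitespace tokenization are illustrative and not taken from the article, and real BERT pre-training also sometimes substitutes a random token or keeps the original one instead of always inserting `[MASK]`.

```python
import random

MASK = "[MASK]"  # placeholder token that the model must fill in


def mask_tokens(tokens, mask_prob=0.15, seed=0):
    """Hide tokens at random (hypothetical helper, not from the article).

    Returns (masked_tokens, labels). labels[i] holds the original token
    where position i was masked and None everywhere else, so a model is
    only scored on the positions it could not see.
    """
    rng = random.Random(seed)  # seeded for reproducible examples
    masked, labels = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            masked.append(MASK)
            labels.append(tok)
        else:
            masked.append(tok)
            labels.append(None)
    return masked, labels


sentence = "the surgeon picked up the scalpel".split()
masked, labels = mask_tokens(sentence, mask_prob=0.3, seed=1)
print(masked)   # input the model sees
print(labels)   # targets the model is trained to predict
```

Predicting a hidden word such as "scalpel" from a context that mentions "surgeon" is what pushes the representations to encode relationships between concepts.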

The article “Measuring gender correlations in pretrained NLP models” examines the BERT model and its lightweight cousin ALBERT for gender-related correlations, and formulates a number of best practices for using pre-trained language models. The authors present experimental results on public model weights and an exploratory dataset to demonstrate how the best practices apply and to provide a basis for further investigation of settings that are beyond the scope of this paper. The authors also plan to release a set of weights, Zari, in which gendered correlations are reduced while quality on standard NLP problems stays high.

Measuring correlations

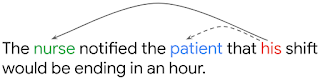

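To understand how correlations in pre-trained representations can affect results on downstream tasks, the authors apply a diverse set of metrics for studying how gender is represented. Here we discuss the results of one of these tests, based on coreference resolution, that is, the ability of a model to identify the correct antecedent of a given pronoun in a sentence. For example, in the sentence shown above, the model should recognize that his refers to the nurse, and not to the patient.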

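The standard academic formulation of this task is the OntoNotes test (Hovy et al., 2006). Coreference resolution accuracy in a general setting is measured with an F1 score over this data (as in Tenney et al. 2019). Since OntoNotes represents only one data distribution, the authors also consider the WinoGender benchmark, which provides additional, balanced data designed to reveal when a model's associations between gender and profession incorrectly influence coreference resolution. High values of the WinoGender metric (close to 1) indicate that the model bases its decisions on normative associations between gender and profession (e.g. it associates nurse with the female gender, and not the male). When the model's decisions show no consistent association between gender and profession, the score is zero, which suggests that the decisions rest on other information, such as sentence structure or semantics.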
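One way to picture a WinoGender-style score is as a correlation between how gendered an occupation is in the real world and how strongly a model prefers one gendered pronoun for that occupation. The sketch below uses a plain Pearson correlation for this; treating the metric this way is an assumption made for illustration, and the occupation shares and model preferences are invented numbers, not results from the article.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences.

    Returns 0.0 when either sequence has no variance, i.e. when there is
    no association to measure.
    """
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0
    return cov / math.sqrt(var_x * var_y)


# Hypothetical share of women in each occupation (invented values).
share_female = {"nurse": 0.90, "teacher": 0.70, "surgeon": 0.30, "engineer": 0.15}

# Hypothetical model preference per occupation: how much more often the
# model resolves a female pronoun than a male pronoun to that occupation.
model_preference = {"nurse": 0.40, "teacher": 0.10, "surgeon": -0.20, "engineer": -0.35}

occupations = sorted(share_female)
score = pearson(
    [share_female[o] for o in occupations],
    [model_preference[o] for o in occupations],
)
print(f"gendered-correlation score: {score:.2f}")
```

A score near 1 would mean the model's pronoun choices track the occupational statistics closely, while a score near 0 would mean they do not.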

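BERT and ALBERT metrics on OntoNotes (accuracy) and WinoGender (gendered correlations). Low values of the WinoGender metric indicate that the model does not preferentially rely on gendered correlations in its reasoning.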

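In this study, neither of the public (Large) BERT and ALBERT models reaches a zero score on the WinoGender examples, despite achieving impressive accuracy (close to 100%) on OntoNotes. At least part of this is because the models preferentially use gendered correlations in their reasoning. This is not entirely surprising: many cues are available for understanding a text, and a general model can pick up any or all of them. Still, caution is warranted, since it is undesirable for a model to make predictions based primarily on gendered correlations learned as priors, rather than on the evidence available in the input.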

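Given that unintended correlations in pre-trained representations can affect reasoning on downstream tasks, the question arises: what can be done to mitigate the risks this poses when developing new NLP models?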

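- Measure unintended correlations: model quality can be assessed with accuracy metrics, but these capture only one dimension of performance, especially if the test data comes from the same distribution as the training data. For example, the BERT and ALBERT weights differ in accuracy by less than 1%, yet differ by 26% (relative) in how much they rely on gendered correlations for coreference resolution. For some tasks this difference can matter: a model with a low WinoGender score may be preferable in applications that handle texts about people in professions that do not conform to historical social norms, for example a male nurse.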

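- Be careful even with seemingly harmless configuration changes: neural network training is controlled by many hyperparameters, which are usually chosen to maximize some training objective. Although configuration choices often look harmless, it turns out they can change gendered correlations significantly, for better or for worse. For example, dropout regularization is used to reduce overfitting in large models: when the dropout rate used in pre-training BERT and ALBERT is increased, gendered correlations drop noticeably, even after fine-tuning. This is promising, since a simple configuration change yields models with a reduced risk of harm, but it also shows that any change to model configuration should be made thoughtfully and evaluated carefully.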

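- There is room for general-purpose mitigations: a further consequence of the perhaps unexpected effect of dropout on gendered correlations is that general methods can be used to reduce unintended correlations: by increasing dropout, the authors improve how the models reason about WinoGender examples without manually specifying anything about the task or changing the fine-tuning stage. Unfortunately, OntoNotes accuracy starts to decline as the dropout rate grows (as the BERT results show), but there is potential to mitigate this during pre-training, where changes can improve models without task-specific updates. The paper also explores counterfactual data augmentation as another mitigation strategy with different trade-offs (see the paper for details).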
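The dropout rate discussed in the recommendations above is a single number, so it helps to see what changing it does. Below is a minimal sketch of standard "inverted" dropout on a list of activations; the function name, the rates `0.1` and `0.3`, and the toy activations are illustrative assumptions, since the text does not state the values used for BERT or ALBERT.

```python
import random


def dropout(values, rate, rng):
    """Apply inverted dropout to a list of activations (illustrative helper).

    Each value is zeroed with probability `rate`; the survivors are scaled
    by 1 / (1 - rate) so the expected value of every activation is unchanged.
    """
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    keep = 1.0 - rate
    return [v / keep if rng.random() >= rate else 0.0 for v in values]


rng = random.Random(0)
activations = [1.0] * 10

# Hypothetical rates: a higher rate zeroes more activations on each pass,
# which regularizes the model more strongly.
for rate in (0.1, 0.3):
    dropped = dropout(activations, rate, rng)
    zeroed = sum(1 for v in dropped if v == 0.0)
    print(f"rate={rate}: {zeroed} of {len(dropped)} activations zeroed")
```

Because the rate is an ordinary hyperparameter, raising it requires no task-specific changes, which is what makes it attractive as a general-purpose mitigation.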

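The authors believe these recommendations are a starting point for developing robust NLP systems that work well across the widest possible range of linguistic settings and applications. Of course, these methods alone are not enough to detect and remove every potential problem. Any model deployed in a real-world setting should undergo rigorous testing that considers the different ways it will be used, and should include safeguards that keep it aligned with ethical norms such as the AI Principles. Evaluation frameworks and datasets are expected to become more comprehensive and inclusive, covering the many uses of language models and the breadth of people they are meant to serve.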

- Author - Kellie Webster

- Translation - Ekaterina Smirnova

- Editing and layout - Sergey Shkarin