Waymo (formerly Google's self-driving car project) has released a detailed report on its testing in the Phoenix, Arizona area. The report covers several years of operation and more than 9 million kilometers. It shows a remarkable safety record, one that should encourage the company to roll out its service at scale (at least in low-traffic urban and suburban areas like Chandler, Arizona).

This report is notable for several reasons:

- It is incredibly transparent, more so than anything we have seen from any other team. In effect, Waymo has thrown down the gauntlet to the rest of the industry: if other companies' reports are not as transparent, we will assume that they are not doing as well.

An accident involving a Waymo vehicle with no test driver on board: another car, whose driver did not slow down, ran into the back of the driverless vehicle.

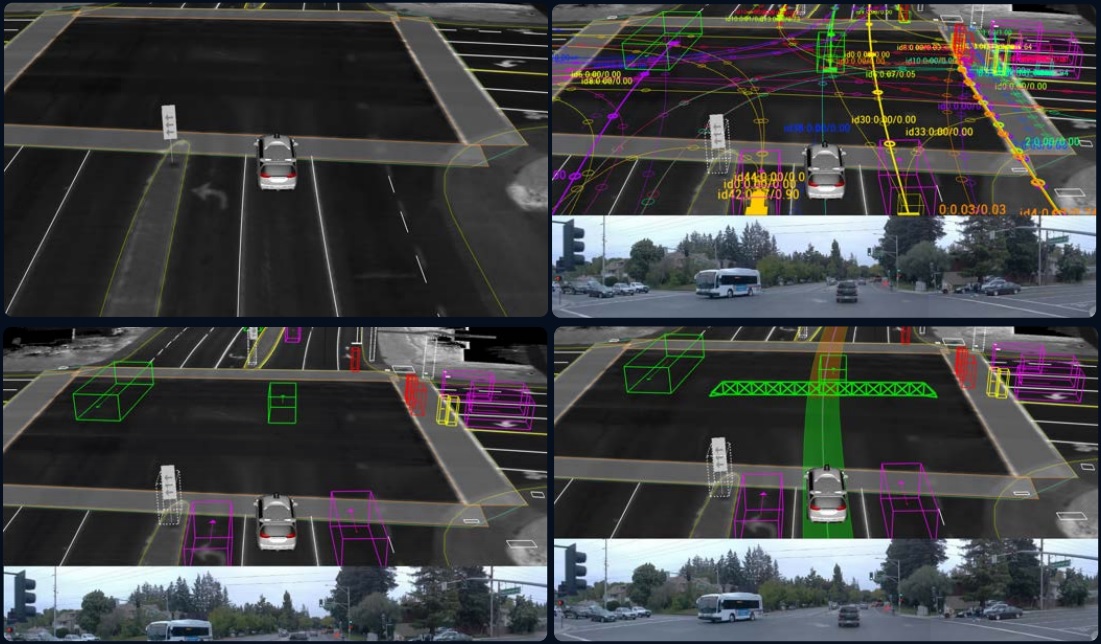

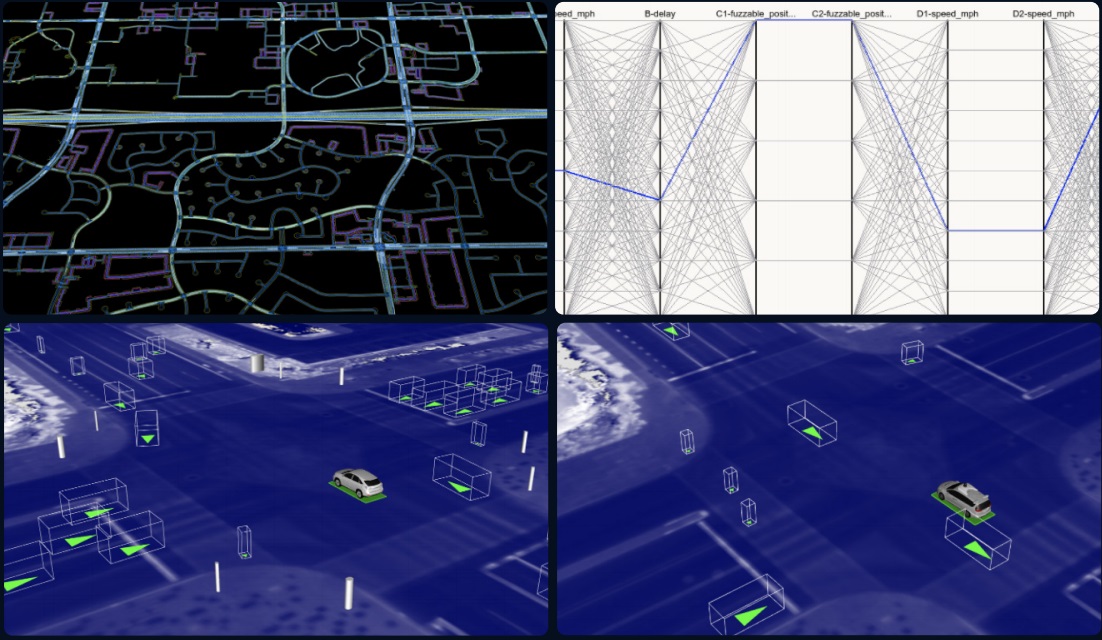

Note that 62% of the events described never actually happened. Typically, the test driver took control, and careful analysis in the simulator then concluded that some "contact" would have occurred had there been no safety driver. Waymo engineers study every potential collision in order to estimate the total number of accidents that would occur with no one behind the wheel. (Waymo's cars have driven 104,000 km with no test driver on board, and one of the accidents involved exactly such a car, which was hit from behind while braking.) In all, 18 accidents were real and 29 were reconstructed in simulation.

47 accidents in 9.8 million kilometers (one per 209,000 km) is slightly better than estimates for human drivers when all accidents are counted, including minor contacts that cause no significant damage and that neither the police nor insurance companies ever hear about. Waymo engineers counted even pedestrians making contact with a vehicle and drivers who deliberately cut off Waymo cars to test them. The rate would still compare well with human drivers even if Waymo had been at fault in half of those 47 contacts.
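The headline figures quoted here can be checked with a few lines of arithmetic. The sketch below uses only numbers stated in the article (18 real and 29 simulated contacts over 9.8 million km); the variable names are illustrative, and the "half at fault" line is just the hypothetical scenario from the text, not a finding of the report.

```python
# Figures quoted in the article.
REAL_CONTACTS = 18
SIMULATED_CONTACTS = 29
DISTANCE_KM = 9.8e6

total_contacts = REAL_CONTACTS + SIMULATED_CONTACTS
km_per_contact = DISTANCE_KM / total_contacts
simulated_share = SIMULATED_CONTACTS / total_contacts

# Hypothetical scenario from the text: Waymo at fault in half of the contacts.
km_per_at_fault_contact = DISTANCE_KM / (total_contacts / 2)

print(f"total contacts: {total_contacts}")
print(f"km per contact: {km_per_contact:,.0f}")
print(f"share that was simulated only: {simulated_share:.0%}")
print(f"km per at-fault contact (half at fault): {km_per_at_fault_contact:,.0f}")
```

The simulated share works out to the 62% mentioned earlier, and the distance per contact rounds to the 209,000 km figure.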

The comparison is not entirely fair, because we have no human accident data specifically for the fairly quiet suburbs of Phoenix. Driving is easier there (which is why the company chose them as a first step). But even if we improve the human accident rate by a third or a half to account for the easier conditions, the results are still impressive.

Below I discuss a number of special cases and some of the company's claims. Waymo has also released a new document describing its engineering processes and safety principles. It is essentially an updated version of an earlier document and contains no great revelations. Still, it is another good example of transparency, and it may deserve a separate article.

Are they really innocent?

Waymo maintains that the incidents were caused by the misconduct of other parties. Most of them never reached the police or resulted in formal charges. Reading the descriptions, one might conclude that Waymo was to blame for some of the accidents, which is why the company uses the phrase "almost all incidents." For example, a Waymo car did run into a car that cut it off and braked for no reason, but the company believes this was done deliberately.

Be that as it may, there are two main caveats. First, in the 29 simulated crashes it was a human driver who saved the day, even though other road users were to blame. People often prevent accidents that other drivers would otherwise cause. So Waymo's software has room to grow: it still does not perform as well as the test drivers. Waymo says its test drivers have completed additional training and are well above average in driving skill. I took their course 8 years ago, and it included special training in safe driving, accident prevention and more. I expect the courses are even better now.

All this is great and worth striving for, but it is not the bar that should be set for the first version of a product. Waymo test drivers (there are two of them) don't drive; they just sit there, ready to take over. To be prepared for potentially dangerous situations, they watch the road (and the software console) more closely than any ordinary driver. Hopefully, Waymo's algorithms will learn from all the simulated contacts and from the actions of the test drivers, and come to drive the same way.

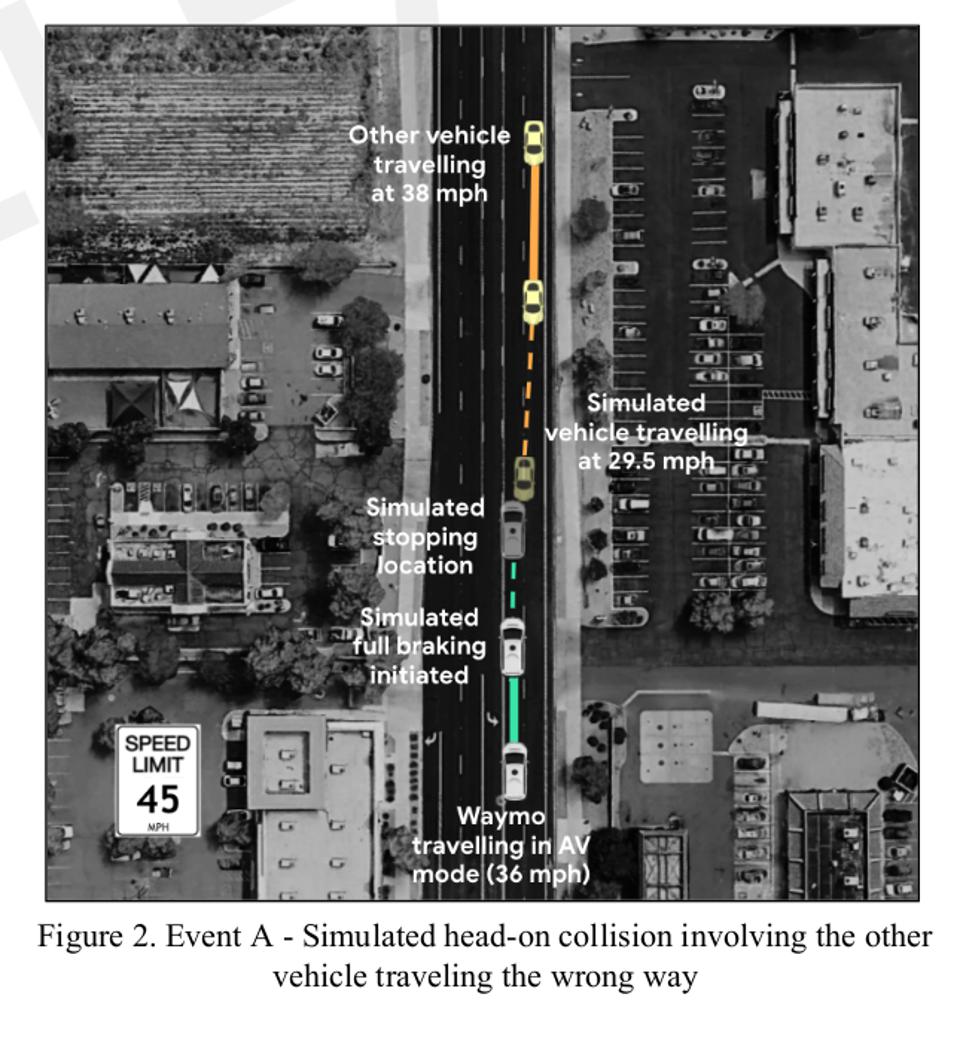

Perhaps the scariest example involves a car driving in the oncoming lane, heading for a head-on collision with a Waymo car. In the simulation, the Waymo car came to a complete stop in its lane but was still hit by the oncoming car. (The company does not disclose how the collision was avoided in reality. Perhaps the test driver made a maneuver the software would not have attempted, or perhaps the other driver came to their senses and returned to their own lane.)

A driver in the oncoming lane crosses into the Waymo car's lane: a simulated head-on collision.

Perhaps Waymo was partly to blame for some of the incidents. In two simulated crashes, the Waymo car hit another car. In one case, the driverless vehicle changed into a lane in which another car was overtaking at 48 km/h over the speed limit. In the other, the driverless vehicle moved into the left lane to make a turn and hit a car that had cut into that lane a moment earlier. These drivers were certainly breaking the rules, but Waymo could still improve its detection of objects behind the car (and probably already has).

In one case a Waymo car ran into the back of a car that cut it off and braked sharply for no reason. Most likely someone was "playing" with the driverless vehicle to test it, and did it badly. (This happens occasionally, but we do not hear about every case, because the test driver usually intervenes immediately.)

The second question is whether the unusual, conservative behavior of self-driving cars was a "cause" of some of these accidents: not because the system did anything wrong, but because it made human error more likely. People expect certain actions from other drivers, and robots may not perform them, which can surprise other road users. In all 15 cases in which a Waymo car was rear-ended, the other driver was at fault, but some of these accidents might not have happened if the self-driving car had driven more assertively.

There have also been several incidents in which a Waymo car was turning right and something prompted the driver behind to try to pass it on the right, resulting in a side collision. Nobody expects to be passed on the right while making a right turn; this may be the result of frustrated drivers acting aggressively (which is still the human driver's fault). All of this reminds me of the first (and only?) accident for which Waymo was at fault: its car tried to pass a bus on the right, as people often do in wide right-turn lanes.

In one case, a Waymo car braked sharply after it had started a left turn and was hit from behind by a car that was following too closely.

Waymo says it has looked for such cases and that there are unlikely to be many of them, though they cannot be ruled out completely. Be that as it may, 200,000 km per accident is roughly comparable to human driving (by some estimates even slightly better), and the data supports this. Moreover, even if we assume that Waymo was to blame for some of the accidents listed above, its cars still crash less often than people do.

It's just suburban Phoenix

A common objection is that success in suburban Phoenix is far from a guarantee that the system will work safely enough in downtown San Francisco, or in more complex cities like Boston, New York or Mumbai. Of course, you cannot simply move the car there, load a new map and expect everything to work. There is still much to be done.

Cruise, which tests in downtown San Francisco, has long said it wants to tackle the harder problem first. It is unclear how long it will take Cruise to deploy there, or how long it will take Waymo to get there. Waymo has long run tests in the suburbs of San Francisco and Silicon Valley (where the company is based) and has done some testing in downtown San Francisco.

However, many places in the world are not very different from Phoenix, so with a little more work Waymo can cover a very large amount of territory. Cruise deserves credit too. Downtown is a major market for taxis, and a suburban service that cannot reach the center is in an awkward position. Waymo is well aware of this, so the race continues. As these companies grow, they will learn even faster.

Waymo relies on detailed maps of its service areas, while Tesla is trying to build a car that drives with only the most basic maps. Waymo's approach has put the company far ahead of Tesla, but its cars depend on maps and cannot operate without them. This is not a big problem for a taxi service, because it has a limited service area that is covered by maps. Tesla's problem is that it sells cars to customers who will drive them everywhere. Mapping costs money, but it is clearly cheaper than building and maintaining roads. If your service operates at sufficient volume, the cost of mapping per kilometer of customer travel is negligible. Moreover, maps bring added value and speed up your progress in the market.

Waymo also has an operations center whose staff monitor the driverless vehicles and give them hints in situations the systems find unclear. Operations center staff cannot drive the vehicles remotely and therefore cannot prevent accidents. So apart from helping the cars avoid mistakes in confusing situations, they could contribute to the good safety record only if they had remote control.

Is there enough data?

Waymo cars have not been involved in any major accidents, and the company denies fault even in the minor incidents. In part, this may be because the cars keep to roads with speed limits of 72 km/h or lower and benefit from the easier suburban driving discussed above. Since fatal accidents occur on average once every 120 million kilometers (and once every 290 million kilometers on motorways), it can be argued that 9.6 million kilometers is not enough to draw conclusions about fatality rates. A 2016 RAND report argues that it takes billions of kilometers to prove safe driving (RAND published research this week that softens this estimate somewhat).

The better approach is to think not in terms of "proof of safety" but in terms of showing that the risk is low (risk being the flip side of safety). When deciding whether to allow robotic vehicles onto the roads, society needs to satisfy itself that the risks are acceptable, not demand that they be reduced to zero or insist on absolute proof. In particular, it is important to understand that in life it is almost always not only acceptable but necessary to take risks, because doing so can bring significant benefits (especially when the benefit is a significant reduction in risk in the future).

Thus, Waymo cannot claim that its vehicles will have fewer than one fatal accident per 120 million kilometers, since the company has data for only 9.6 million kilometers (or 32 million if you count its other test areas, plus billions of kilometers in simulation). However, the company can reasonably state that the risk of its vehicles posing a significant danger is quite low. Moreover, if that turned out to be wrong, it would become clear quickly, while the harm done was still limited.
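To see why these distances say little about fatality rates, here is a minimal sketch. It assumes that fatal accidents arrive as a Poisson process at the human average rate of one per 120 million km; that modeling choice and the function name are assumptions of this example, not something taken from the Waymo report.

```python
import math

HUMAN_KM_PER_FATAL_ACCIDENT = 120e6  # average quoted in the article


def chance_of_zero_fatal_accidents(distance_km: float) -> float:
    """Probability of no fatal accident over distance_km at the human rate.

    Assumes a Poisson process, so P(zero events) = exp(-expected events).
    """
    expected_fatal_accidents = distance_km / HUMAN_KM_PER_FATAL_ACCIDENT
    return math.exp(-expected_fatal_accidents)


p_phoenix = chance_of_zero_fatal_accidents(9.6e6)   # Phoenix-area distance
p_all_sites = chance_of_zero_fatal_accidents(32e6)  # including other test areas

print(f"P(no fatal accident over 9.6M km): {p_phoenix:.2f}")
print(f"P(no fatal accident over 32M km):  {p_all_sites:.2f}")
```

Under these assumptions, drivers at the average human rate would most likely also record no fatal accident over either distance (roughly 92% and 77% of the time), so a clean record over this mileage cannot by itself demonstrate a lower fatality rate.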

On the other hand, the risks and dangers of human driving are well known and quite high. Every year that the rollout of self-driving systems is delayed, those risks remain in place, with the dire consequence of 40,000 US deaths each year. Waiting for certainty starts to look like madness, because we already understand perfectly well how dangerous the alternative is.

Whether it is Cruise, Waymo or someone else who first manages to operate in busy cities at acceptable risk, we do not want to postpone that day. All of this is worth a little risk. Consider that anyone who speeds (that is, almost everyone) puts themselves and everyone else at much greater risk than self-driving systems do, and the only benefit to those people is arriving a minute early.

As for human drivers, we give them a DMV test: half an hour of driving, and you get a license. We accept this even though we know that newly licensed teenage drivers are much more dangerous than adults. We are certainly not sure that they are "safe enough" (in fact, we are pretty sure they are not). Indeed, Waymo's cars have gone the equivalent of eight human lifetimes of driving without making a serious mistake that caused an accident, a level of demonstrated safety that no human comes close to. People can make serious mistakes, cause serious accidents, clearly demonstrate that they are dangerous, and still keep their driver's license.

The reality is that we (as a society) have to be willing to take reasonable risks and allow developers to do things that seem very risky on a small scale. Then, once they succeed and grow, they will be able to reduce risk significantly on a global scale. The risks they take in the early stages involve small fleets and are limited from the start. And the bottom line is that Waymo has been able to show that there are no big risks here.

Green light for Waymo

Waymo will soon make its Chandler service available to the public. Its cars can be hailed like an Uber, often with no driver on board. The company is going to bring the test drivers back once all vehicles are fitted with anti-virus barriers. I think it is ready to go further and run all of its cars without drivers.

(The company considers what it has done in Arizona to be a real deployment already. Waymo allowed participants in the Waymo One beta to invite friends, although the friends still needed approval from Waymo, since these new members are not covered by the NDA. I think a real deployment looks like Uber: anyone can download the app and ride. That is hard to do, however, because the company could simply be inundated with requests.)

Are other teams close to this kind of success? We do not know. Nobody else has published such detailed data. The gauntlet has been thrown down. If Cruise, Zoox, Argo, Tesla and others want to say that they are in the game, they need to publish the same kind of data. If they don't, we can assume that they have some reason to be afraid to. There is no need to reveal internal secrets. There are useful insights to be drawn from such data, and they should be shared for the good of the industry.

Will Waymo roll out as boldly as described above? Probably not. As I have often said, "people don't want to die at the hands of robots." We would rather be killed by a drunk. We expect a perfection from robotic cars that they cannot deliver, although we do not expect it from human drivers. For the public, the risks of deploying Waymo's systems are not just low; they are lower than the risks of driving yourself. And I am not just talking about today's society. Assume that Waymo's growth is tied to its launch date, so that launching a month later means reaching large scale a month later. Then the math tells us that the risk a launch prevents is not only the sum of the risks to people who drove themselves while Waymo was a small company. It also includes the risk of all the travel in the month in which the company becomes really big. If Waymo comes to handle 10% of all travel in the United States, then delaying deployment by a month would result in 80,000 accidents and 250 deaths among all those people driving themselves rather than riding in Waymo's driverless vehicles. The same applies to every further month of delay.
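The 250-deaths figure can be put in context with a rough back-of-the-envelope check. The sketch below takes the 40,000 annual US road deaths quoted earlier and the 10% travel share from the scenario above, and assumes that deaths are spread evenly over travel and over months (a simplification made only for this example). The "implied fraction prevented" is derived from the article's number and is not stated in the source.

```python
US_ROAD_DEATHS_PER_YEAR = 40_000  # figure quoted earlier in the article
WAYMO_TRAVEL_SHARE = 0.10         # "10% of all travel" scenario from the text
ARTICLE_DEATHS_PER_MONTH = 250    # the article's estimate for one month of delay

# Assumption: deaths are proportional to travel and evenly spread over the year.
deaths_per_month_nationwide = US_ROAD_DEATHS_PER_YEAR / 12
deaths_per_month_in_share = deaths_per_month_nationwide * WAYMO_TRAVEL_SHARE

# Fraction of those deaths that would have to be prevented to reach 250.
implied_fraction_prevented = ARTICLE_DEATHS_PER_MONTH / deaths_per_month_in_share

print(f"deaths per month in a 10% share of travel: {deaths_per_month_in_share:.0f}")
print(f"implied fraction prevented: {implied_fraction_prevented:.0%}")
```

Under these assumptions, a 10% share of travel corresponds to about 333 deaths a month, so the article's 250 is consistent with the driverless service preventing roughly three quarters of them rather than all of them.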

Delaying the deployment of Waymo is immoral, as is any regulation that would get in the way of the company.
